Releases: mage-ai/mage-ai

Release 0.8.11 | The Mandalorian Release

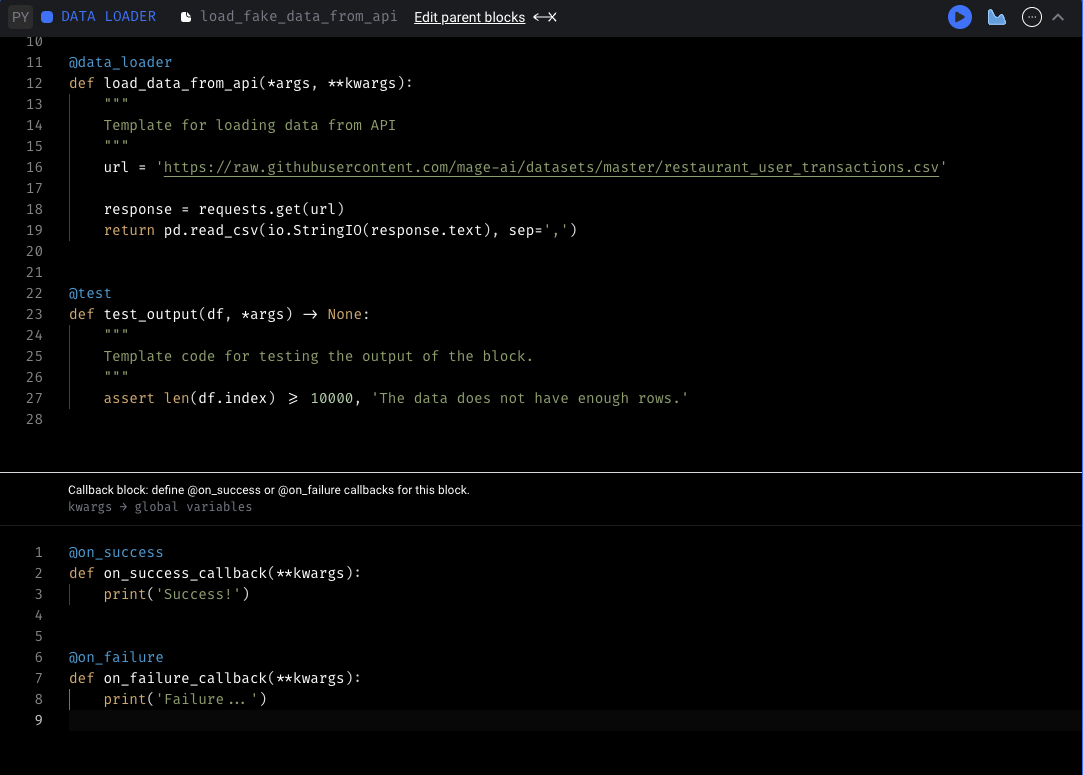

Configure callbacks on block success or failure

- Add callbacks to run after your block succeeds or fails. You can add a callback by clicking “Add callback” in the “More actions” menu of the block (the three-dot icon in the top right).

- For more information about callbacks, check out the Mage documentation.
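The sketch below shows the general idea in plain Python: a block runner that fires a success or failure callback. It is only an illustration. `run_block_with_callbacks`, `on_success`, and `on_failure` are hypothetical names, not Mage's callback API, which you configure through the block menu as described above.

```python
from typing import Any, Callable, Optional


def run_block_with_callbacks(
    block: Callable[..., Any],
    on_success: Optional[Callable[[Any], None]] = None,
    on_failure: Optional[Callable[[BaseException], None]] = None,
    **kwargs: Any,
) -> Any:
    """Run a block function, then fire the matching callback.

    Hypothetical helper for illustration only; not part of mage_ai.
    """
    try:
        output = block(**kwargs)
    except Exception as error:
        # The block failed: notify the failure callback, then re-raise
        # so the caller still sees the original error.
        if on_failure is not None:
            on_failure(error)
        raise
    # The block succeeded: hand its output to the success callback.
    if on_success is not None:
        on_success(output)
    return output


events = []


def load_numbers(**kwargs):
    return [1, 2, 3]


def broken_block(**kwargs):
    raise ValueError("bad input")


run_block_with_callbacks(
    load_numbers,
    on_success=lambda out: events.append(("success", out)),
    on_failure=lambda err: events.append(("failure", str(err))),
)

try:
    run_block_with_callbacks(
        broken_block,
        on_success=lambda out: events.append(("success", out)),
        on_failure=lambda err: events.append(("failure", str(err))),
    )
except ValueError:
    pass  # the failure callback already recorded the error

print(events)  # [('success', [1, 2, 3]), ('failure', 'bad input')]
```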

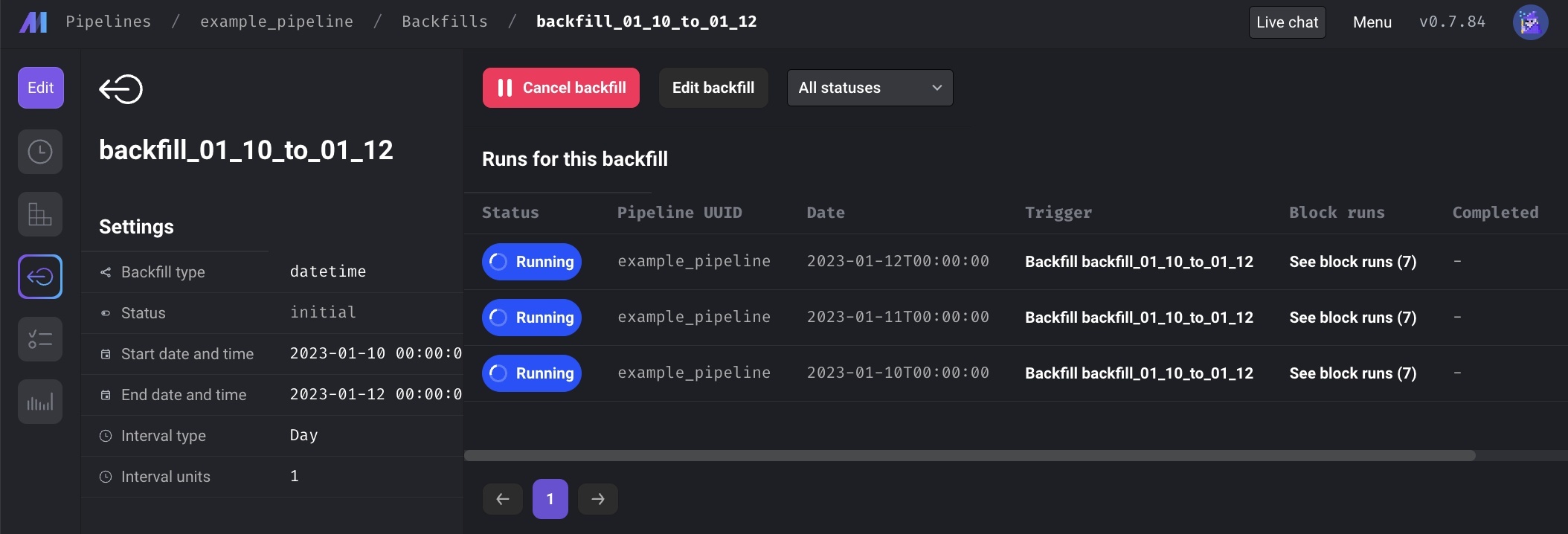

Backfill improvements

- Show a preview of the total number of pipeline runs that will be created, and their timestamps, before starting a backfill.

- Miscellaneous UX improvements to the backfill pages (e.g. disabling or hiding irrelevant items depending on backfill status, and updating backfill table columns that previously weren't updating as needed).
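At its core, the preview enumerates run timestamps before anything is scheduled. Here is a sketch that assumes runs start at the range start, step by a fixed interval, and include the end timestamp. `preview_backfill_runs` is a hypothetical helper, not Mage code.

```python
from datetime import datetime, timedelta
from typing import List


def preview_backfill_runs(
    start: datetime, end: datetime, interval: timedelta
) -> List[datetime]:
    """Return the execution timestamps a backfill would create.

    Hypothetical helper: timestamps begin at `start` and advance by
    `interval` while they are still <= `end` (inclusive end is an assumption).
    """
    if interval <= timedelta(0):
        raise ValueError("interval must be positive")
    timestamps = []
    current = start
    while current <= end:
        timestamps.append(current)
        current += interval
    return timestamps


runs = preview_backfill_runs(
    datetime(2023, 3, 1), datetime(2023, 3, 3), timedelta(days=1)
)
print(f"{len(runs)} pipeline runs will be created:")
for ts in runs:
    print(ts.isoformat())
```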

Dynamic block improvements

- Support connecting a dynamic block to another dynamic block

- Block outputs for dynamic blocks don’t show when clicking on the block run
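A dynamic block fans out into one downstream run per item it returns. Chaining it into another dynamic block therefore fans out a second time for each of those runs. The plain-Python sketch below models that nesting with hypothetical functions (`list_regions`, `list_stores`, `process_store`). It does not use Mage's actual dynamic block output format.

```python
from typing import List


def list_regions() -> List[str]:
    # First dynamic block: each item spawns one downstream run.
    return ["us", "eu"]


def list_stores(region: str) -> List[str]:
    # Second dynamic block: runs once per region and fans out again.
    stores = {"us": ["nyc", "sf"], "eu": ["berlin"]}
    return stores[region]


def process_store(region: str, store: str) -> str:
    # Leaf block: runs once per (region, store) pair.
    return f"{region}/{store}"


def run_nested_fan_out() -> List[str]:
    results = []
    for region in list_regions():          # fan-out #1
        for store in list_stores(region):  # fan-out #2 (dynamic -> dynamic)
            results.append(process_store(region, store))
    return results


print(run_nested_fan_out())  # ['us/nyc', 'us/sf', 'eu/berlin']
```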

DBT improvements

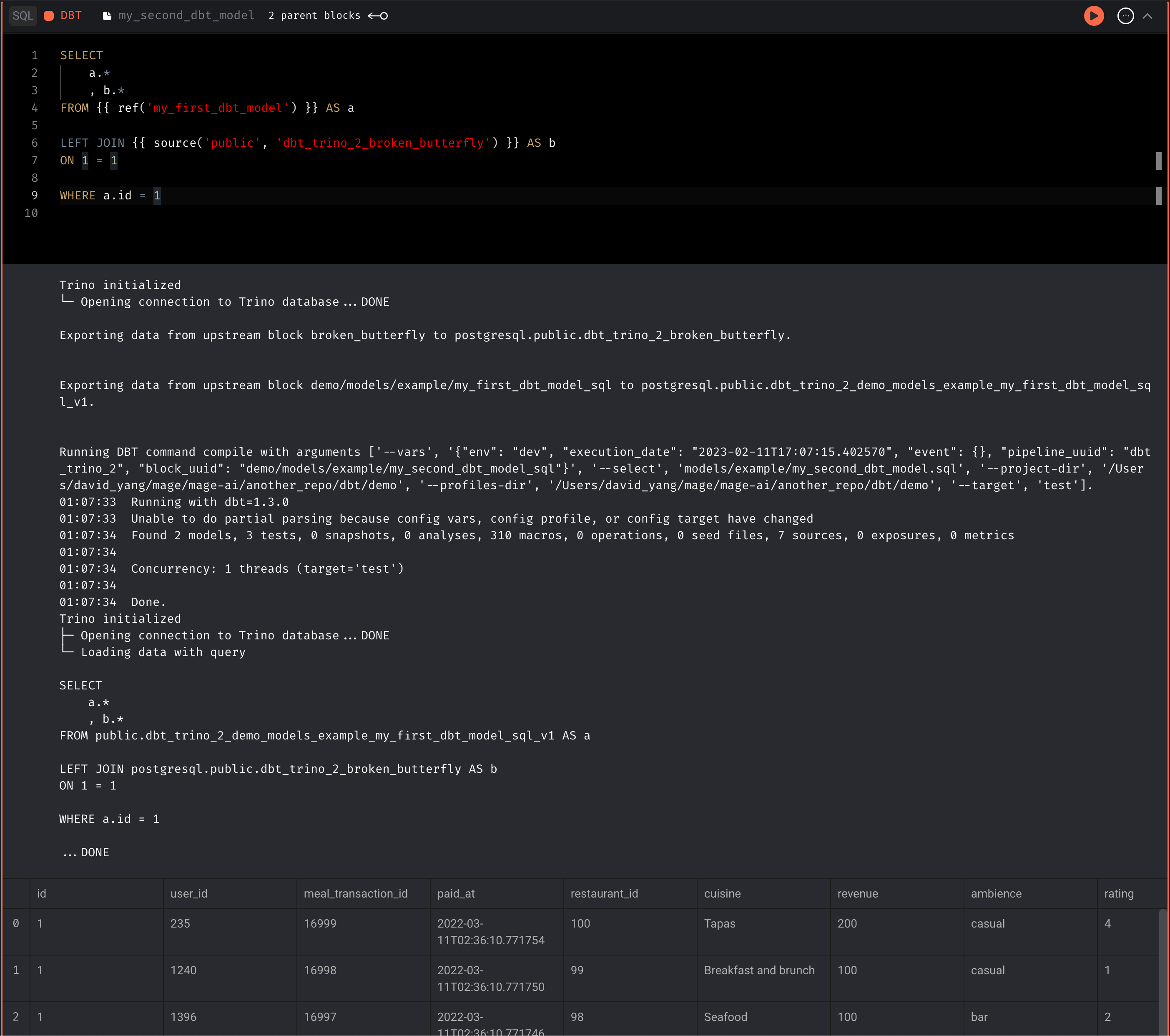

- View DBT block run sample model outputs

- Compile + preview, show compiled SQL, run/test/build model options, view lineage for a single model, and more.

- When clicking a DBT block in the block runs view, show a sample query result of the model

- Only create an upstream source if it’s used

- Don’t create an upstream block SQL table unless a DBT block references it.

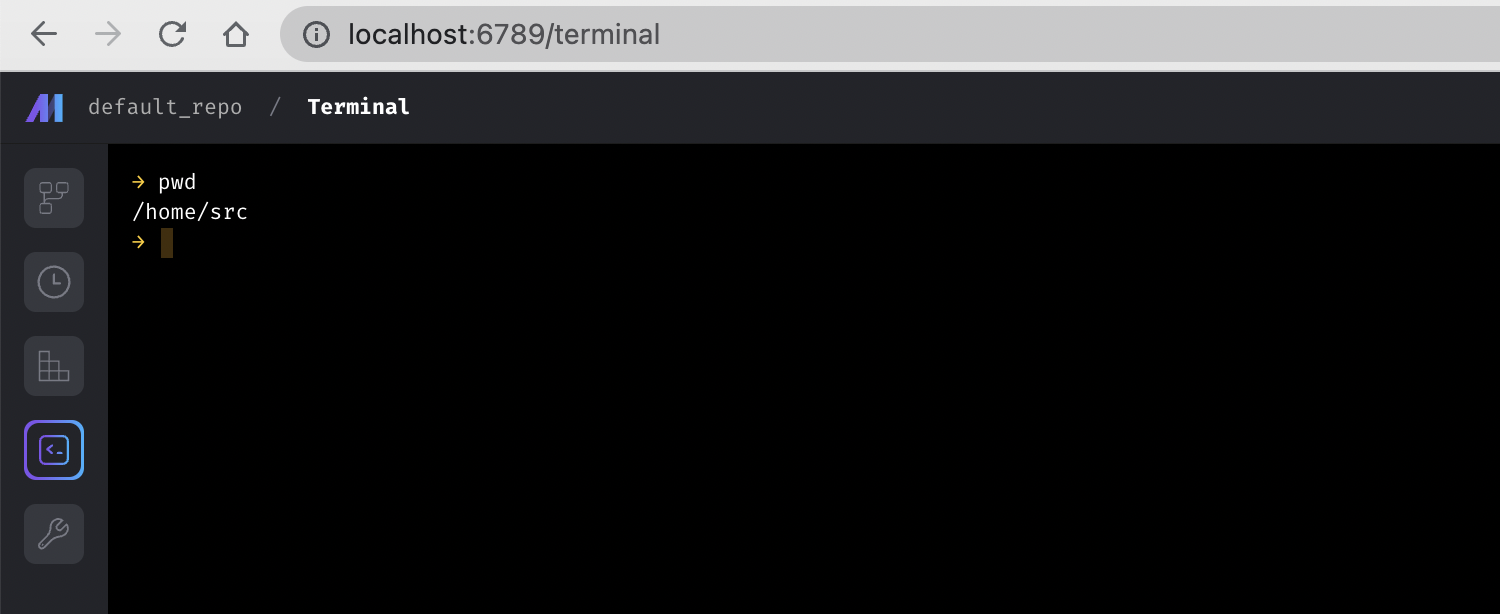

Handle multi-line pasting in terminal


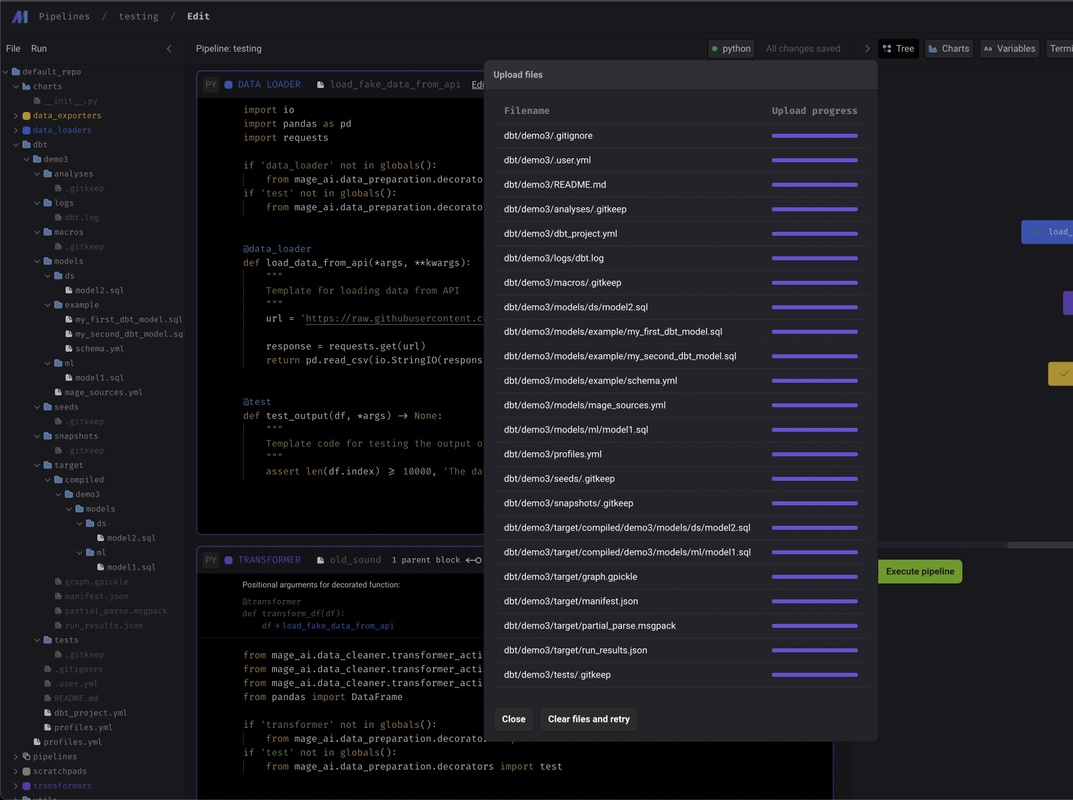

File browser improvements

- Upload files and create new files in the root project directory

- Rename and delete any file from the file browser

Other bug fixes & polish

-

Show pipeline editor main content header on Firefox. The header for the Pipeline Editor main content was hidden for Firefox browsers specifically (which prevented users from being able to change their pipeline names on Firefox).

-

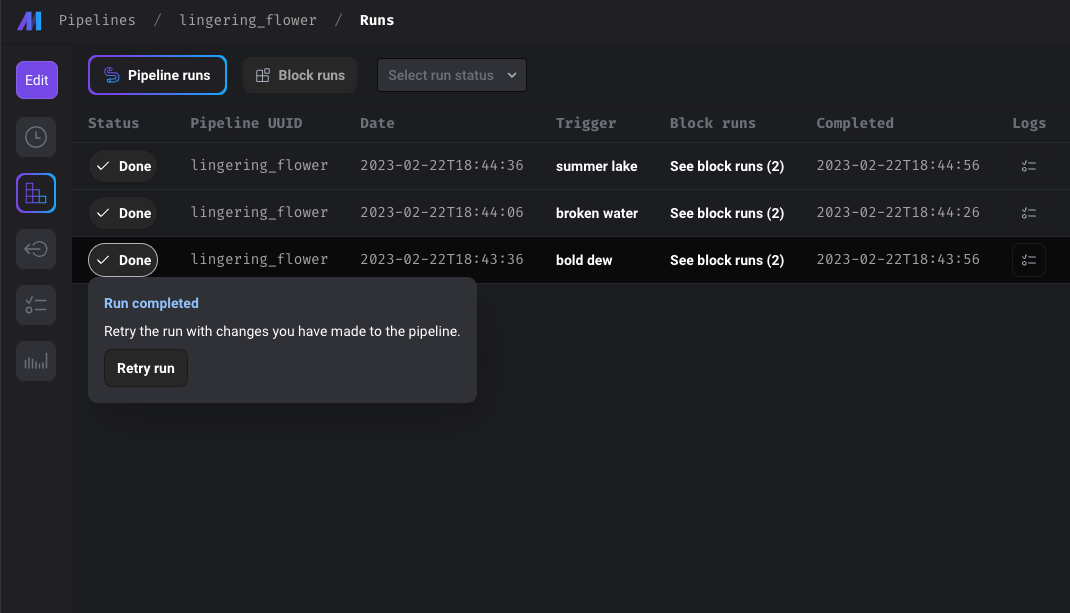

Make retry run popup fully visible. Fix issue with Retry pipeline run button popup being cutoff.

-

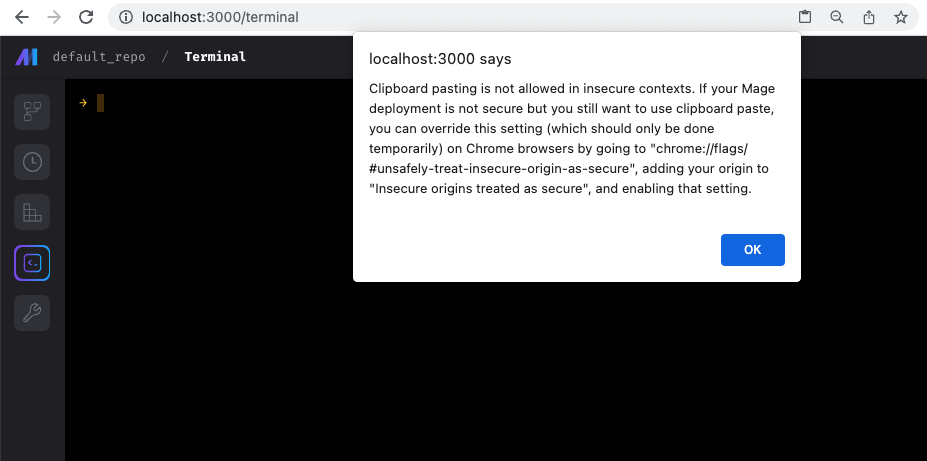

Add alert with details on how to allow clipboard paste in insecure contexts

-

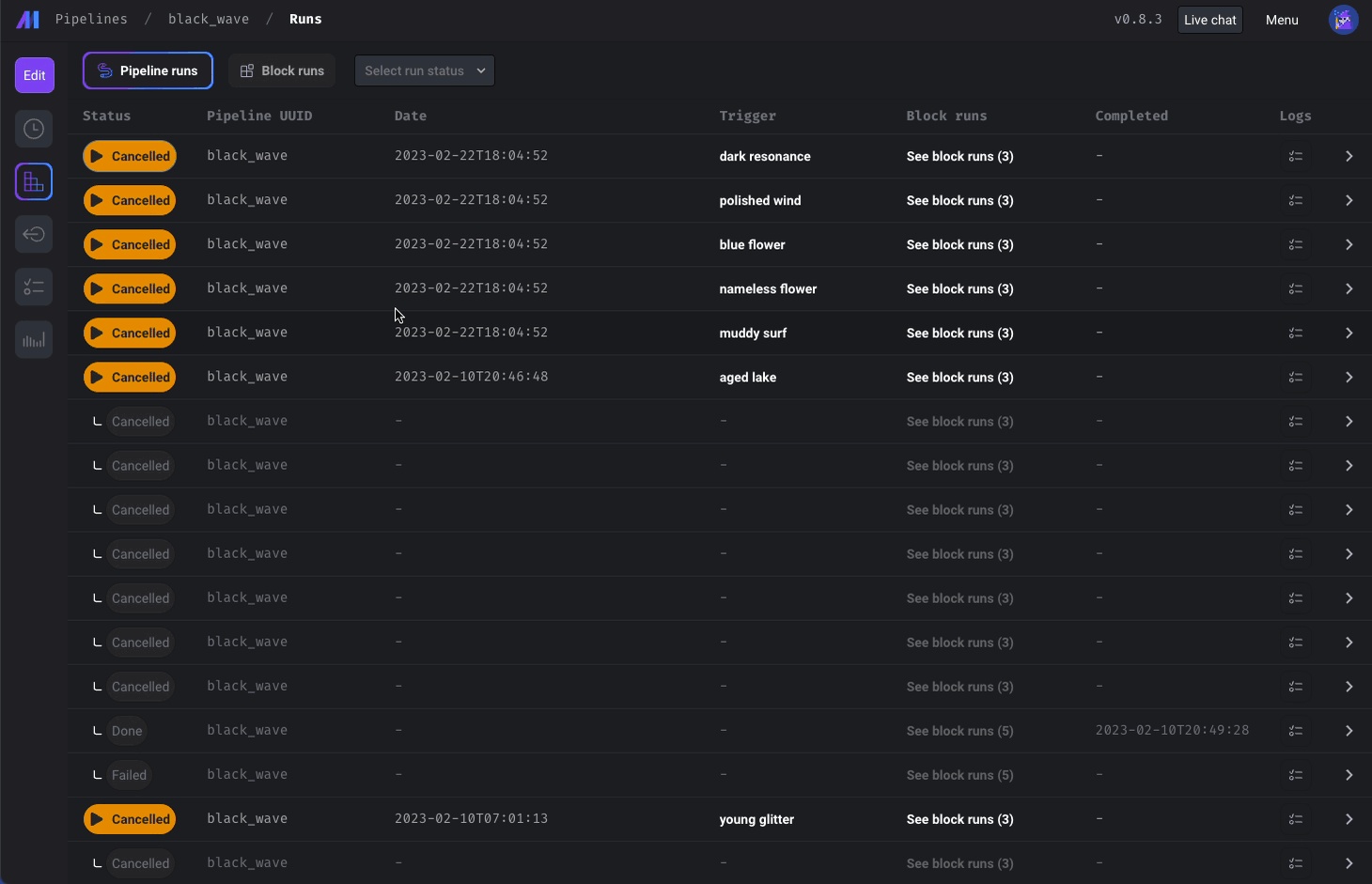

Show canceling status only for pipeline run being canceled. When multiple runs were being canceled, the status for other runs was being updated to "canceling" even though those runs weren't being canceled.

-

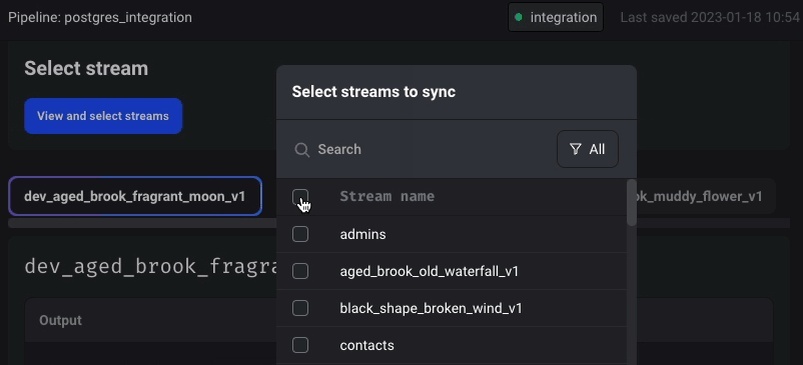

Remove table prop from destination config. The

tableproperty is not needed in the data integration destination config templates when building integration pipelines through the UI, so they've been removed. -

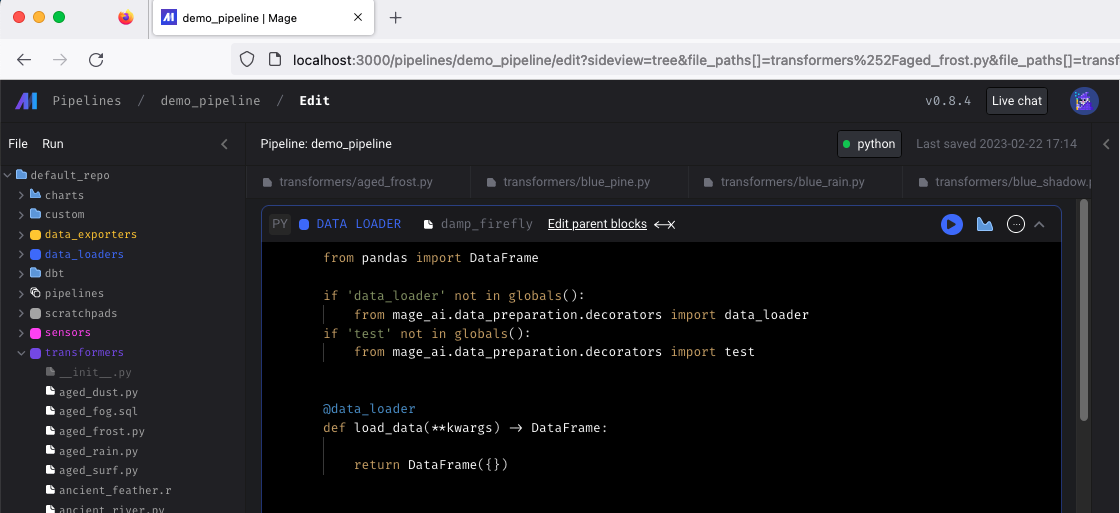

Update data loader, transformer, and data exporter templates to not require DataFrame.

-

Fix PyArrow issue

-

Fix data integration destination row syncing count

-

Fix emoji encode for BigQuery destination

-

Fix dask memory calculation issue

-

Fix Nan being display for runtime value on Syns page

-

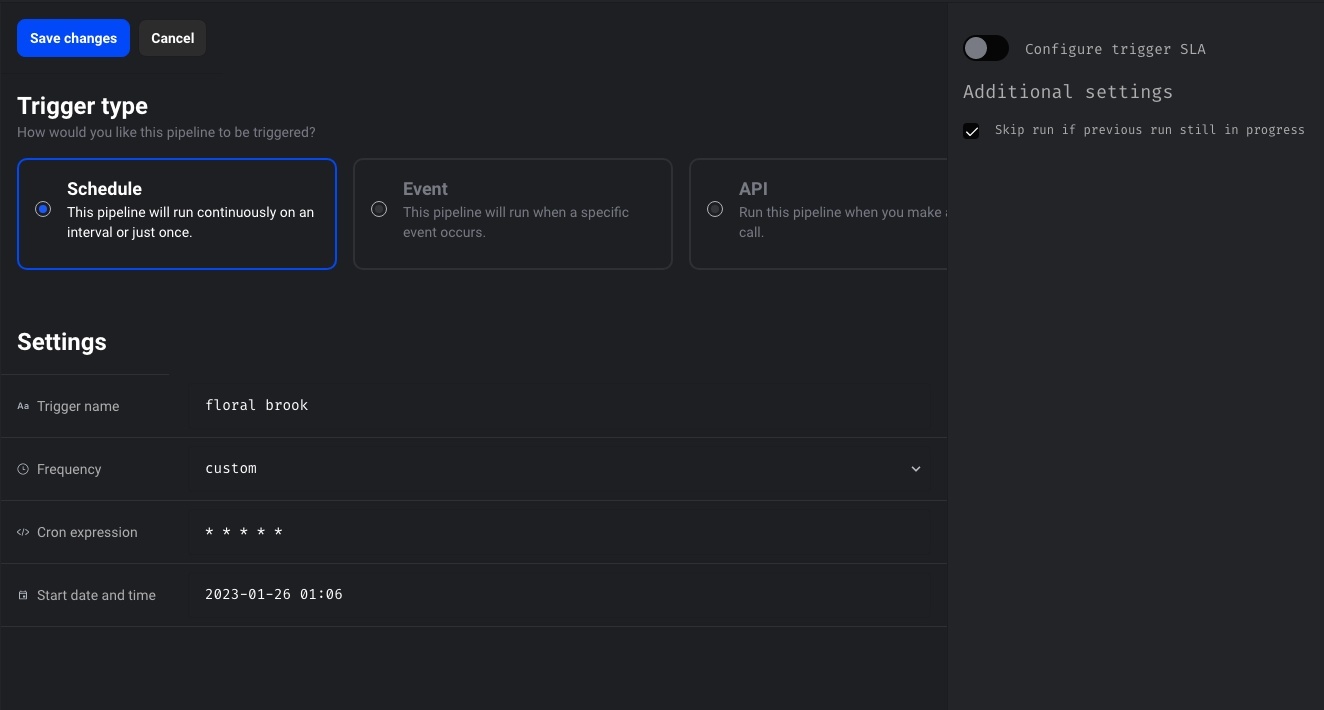

Odd formatting on Trigger edit page dropdowns (e.g. Fequency) on Windows

-

Not fallback to empty pipeline when failing to reading pipeline yaml

View full Changelog

Release 0.8.3 | Everything Everywhere All at Once Release

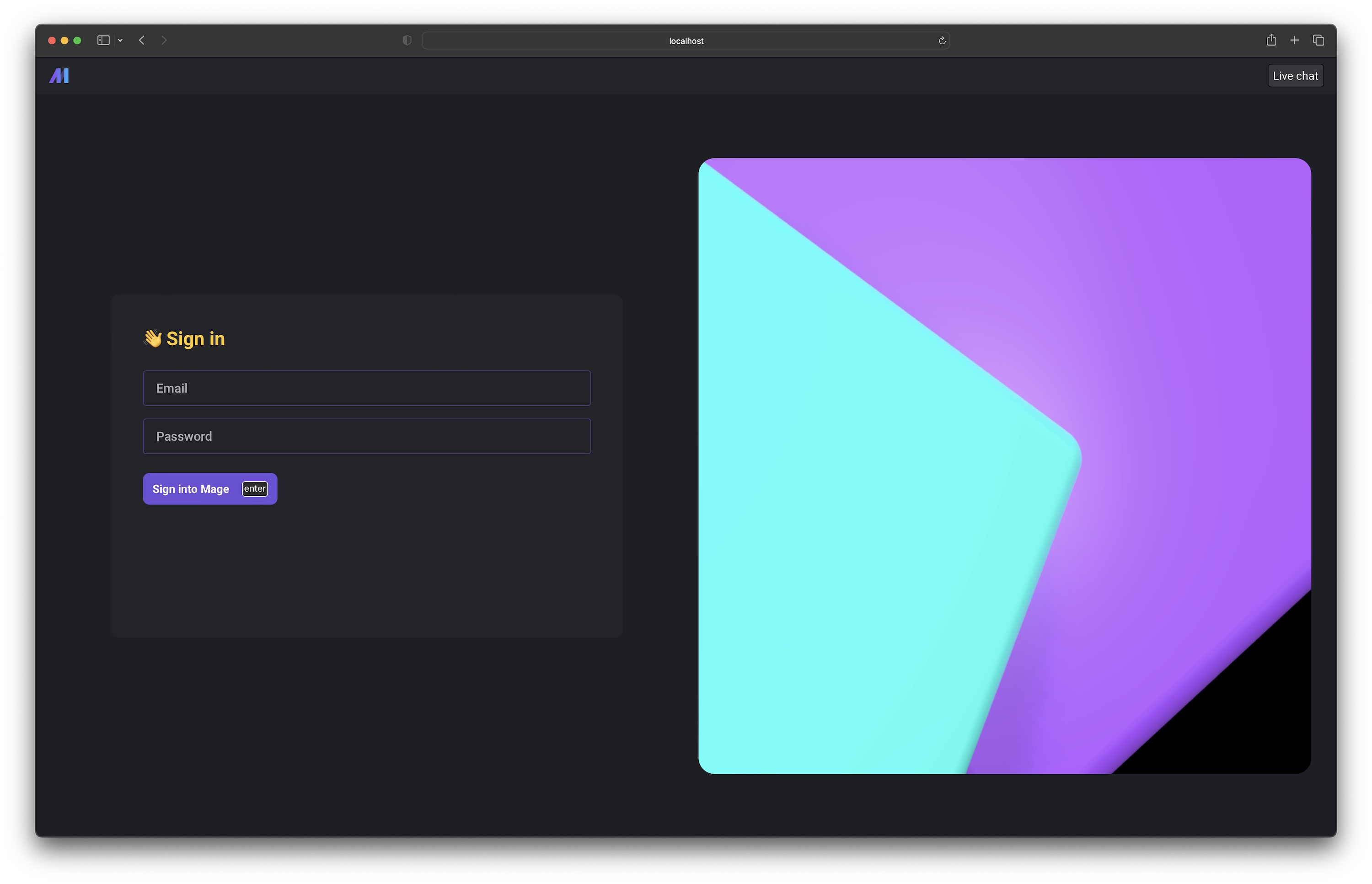

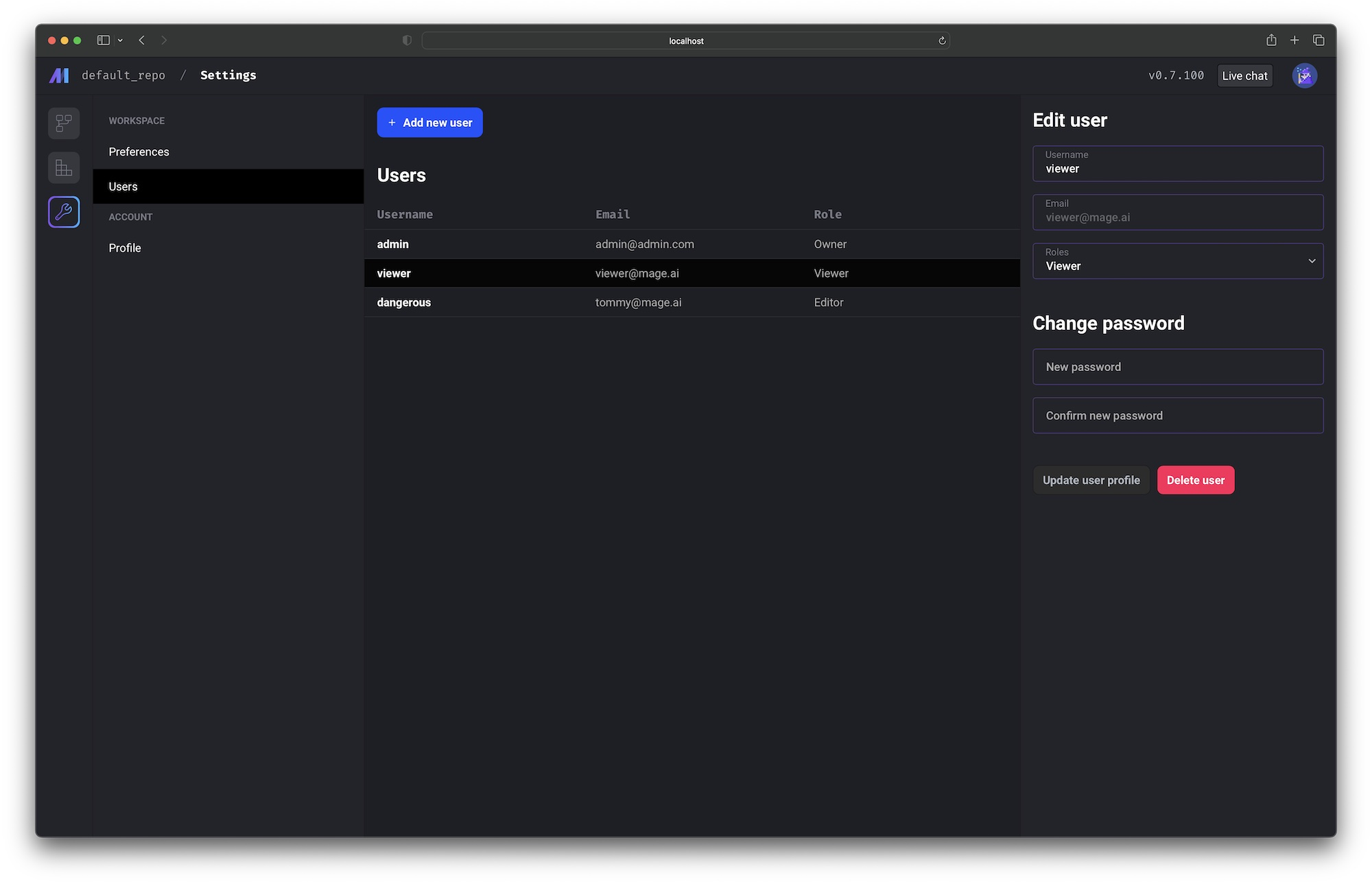

User login, management, authentication, roles, and permissions

User login and user-level permission control are supported in mage-ai version 0.8.0 and above.

Set the environment variable `REQUIRE_USER_AUTHENTICATION` to `1` to turn on user authentication.

Check out the doc to learn more about user authentication and permission control: https://docs.mage.ai/production/authentication/overview

Data integration

New sources

New destinations

Full lists of available sources and destinations can be found here:

- Sources: https://docs.mage.ai/data-integrations/overview#available-sources

- Destinations: https://docs.mage.ai/data-integrations/overview#available-destinations

Improvements on existing sources and destinations

- Update Couchbase source to support more unstructured data.

- Make all columns optional in the data integration source schema table settings UI; don’t force the checkbox to be checked and disabled.

- Batch fetch records in Facebook Ads streams to reduce number of requests.
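Batching replaces one request per record with one request per chunk of records. Here is a minimal sketch. `request_batch` is a hypothetical stand-in for the API call, and the batch size of 50 is an example value, not necessarily what the Facebook Ads source uses.

```python
from typing import Dict, Iterator, List, Sequence, TypeVar

T = TypeVar("T")
requests_made: List[int] = []  # records the size of each simulated request


def batched(items: Sequence[T], batch_size: int) -> Iterator[List[T]]:
    """Yield consecutive chunks of at most `batch_size` items."""
    if batch_size < 1:
        raise ValueError("batch_size must be >= 1")
    for start in range(0, len(items), batch_size):
        yield list(items[start:start + batch_size])


def request_batch(ids: List[str]) -> List[Dict[str, str]]:
    """Hypothetical stand-in for a single API call that returns many records."""
    requests_made.append(len(ids))
    return [{"id": record_id} for record_id in ids]


def fetch_all(ids: List[str], batch_size: int) -> List[Dict[str, str]]:
    records: List[Dict[str, str]] = []
    for chunk in batched(ids, batch_size):
        records.extend(request_batch(chunk))  # one request per chunk
    return records


ids = [str(i) for i in range(120)]
records = fetch_all(ids, batch_size=50)
print(len(records), requests_made)  # 120 [50, 50, 20]
```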

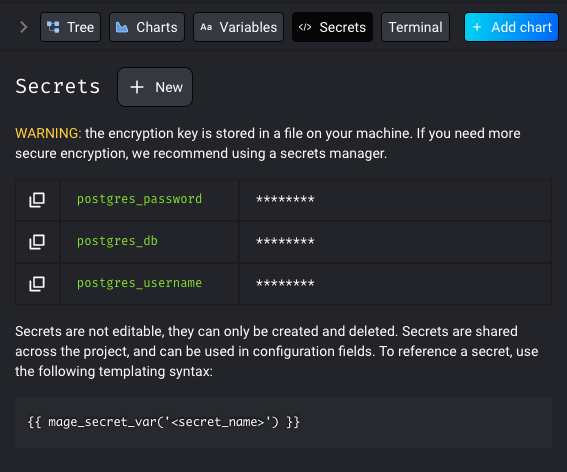

Add connection credential secrets through the UI and store encrypted in Mage’s database

In various surfaces in Mage, you may be asked to input config for certain integrations such as cloud databases or services. In these cases, you may need to input a password or an API key, but you don’t want it to be shown in plain text. To address this, we created a way to store your secrets, encrypted, in the Mage database.

Check out the doc to learn more about secrets management in Mage: https://docs.mage.ai/development/secrets/secrets

Configure max number of concurrent block runs

Mage now supports limiting the number of concurrent block runs by customizing the queue config, which helps prevent the Mage server from being overloaded by too many block runs. Users can configure the maximum number of concurrent block runs in the project’s metadata.yaml via `queue_config`.
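A concurrency limit works like a worker pool with a fixed number of slots: extra block runs wait until a slot frees up. The sketch below illustrates that effect with Python's `ThreadPoolExecutor`, where `max_workers` plays the role of `concurrency`. It is not how Mage implements its queue.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor

CONCURRENCY = 3  # plays the role of queue_config.concurrency in this sketch

lock = threading.Lock()
running = 0
peak = 0


def run_block(block_id: int) -> int:
    """Simulate a block run while tracking how many run at once."""
    global running, peak
    with lock:
        running += 1
        peak = max(peak, running)
    time.sleep(0.01)  # pretend to do some work
    with lock:
        running -= 1
    return block_id


# The pool never runs more than CONCURRENCY blocks at the same time;
# extra block runs wait in the executor's internal queue.
with ThreadPoolExecutor(max_workers=CONCURRENCY) as pool:
    results = list(pool.map(run_block, range(10)))

print(results)  # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
print(peak <= CONCURRENCY)  # True
```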

queue_config:

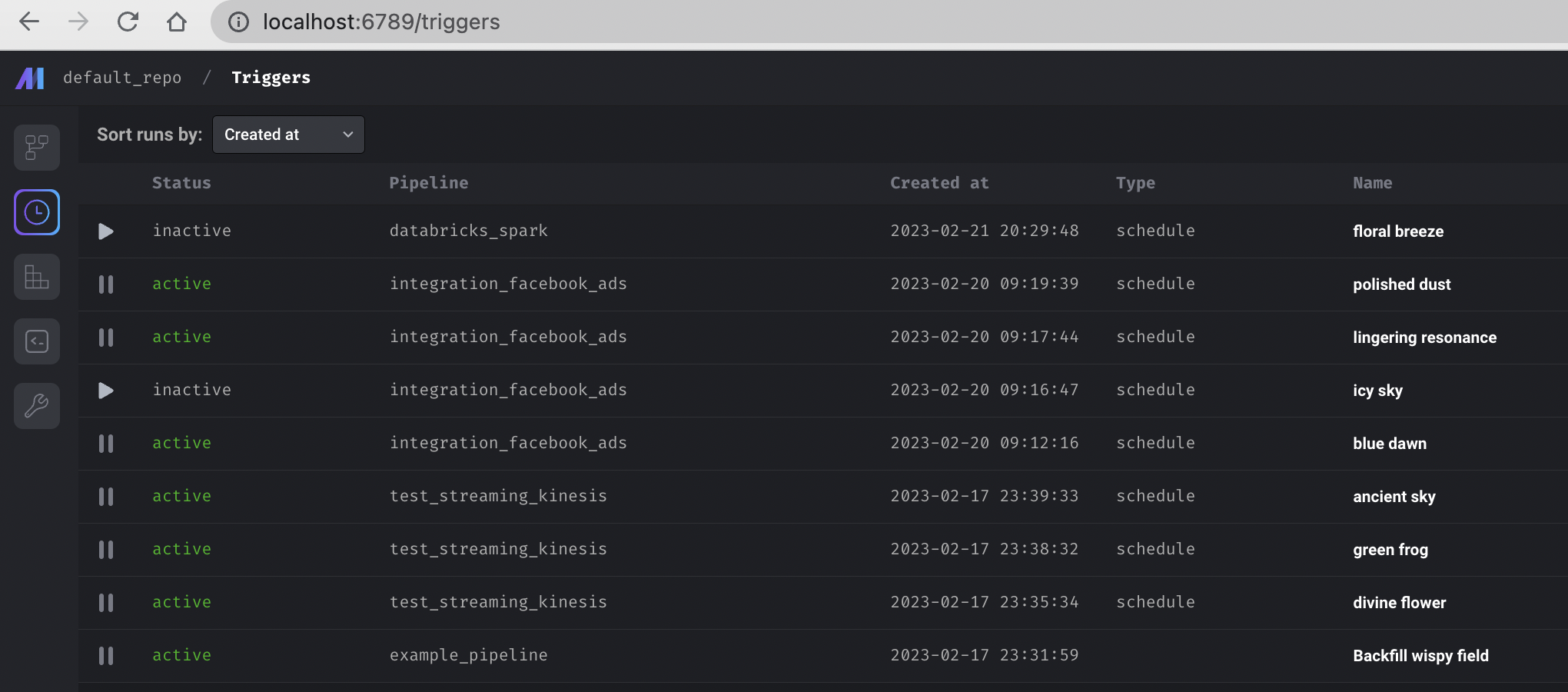

concurrency: 100Add triggers list page and terminal tab

- Add a dedicated page to show all triggers.

- Add a link to the terminal in the main dashboard left vertical navigation and show the terminal in the main view of the dashboard.

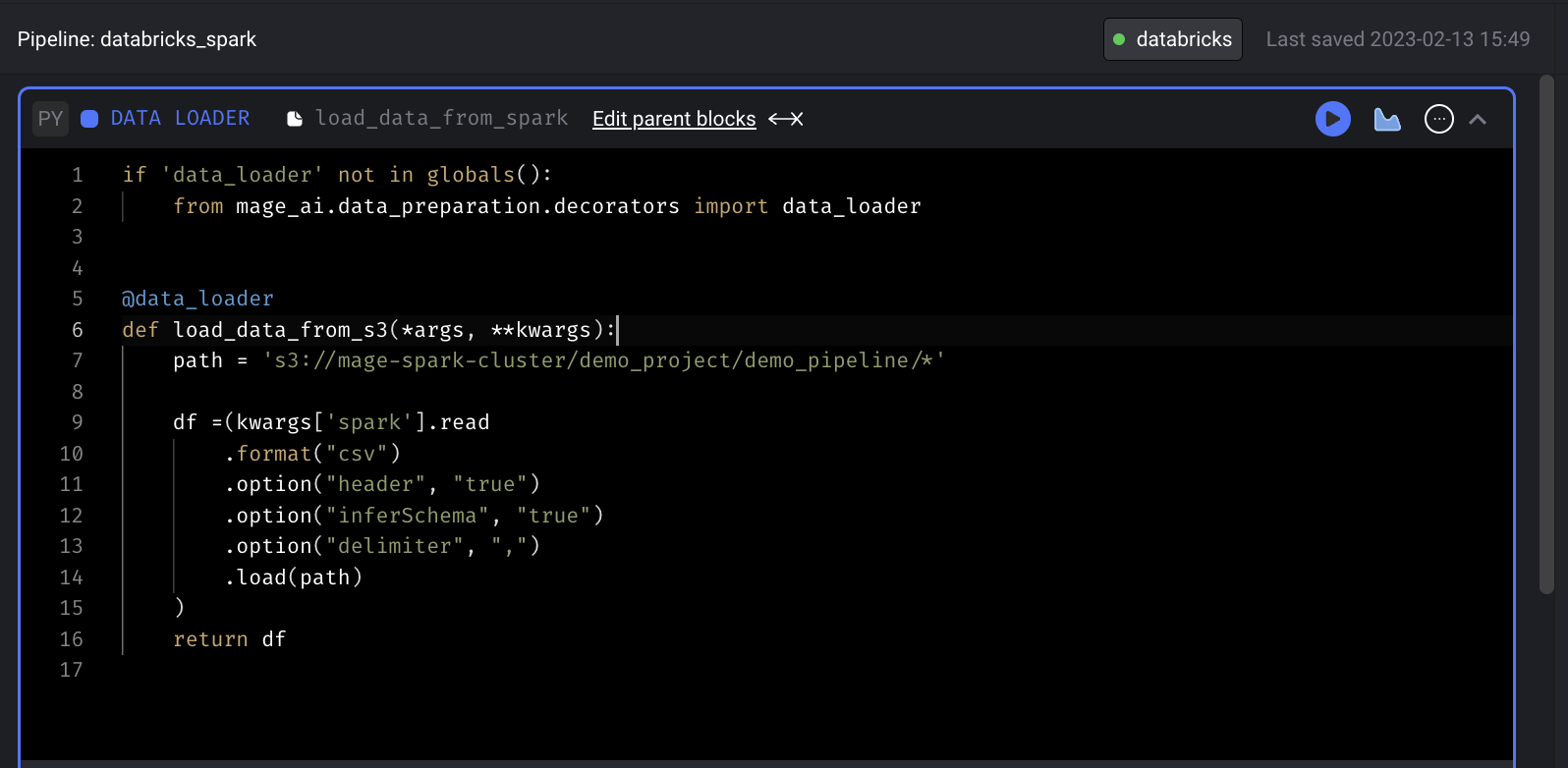

Support running PySpark pipeline locally

Support running PySpark pipelines locally without custom code and settings.

If you have your Spark cluster running locally, you can build your standard batch pipeline with PySpark code the same way as other Python pipelines. Mage handles data passing between blocks automatically for Spark DataFrames. You can use `kwargs['spark']` in Mage blocks to access the Spark session.
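Here is a minimal data loader sketch that reads the Spark session from `kwargs['spark']`. The fallback no-op decorator exists only so the snippet runs outside Mage. The inline rows are placeholder data; in a real pipeline you would read from your own source, for example with `spark.read`.

```python
# Inside Mage the real decorator is imported; outside Mage a no-op fallback
# keeps this sketch runnable.
try:
    from mage_ai.data_preparation.decorators import data_loader
except ImportError:
    def data_loader(func):
        return func


@data_loader
def load_data(*args, **kwargs):
    spark = kwargs['spark']  # Spark session provided to the block
    # Placeholder rows; replace with a real read from your data source.
    rows = [(1, 'a'), (2, 'b')]
    return spark.createDataFrame(rows, ['id', 'label'])
```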

Other bug fixes & polish

- Add MySQL data exporter template

- Add MySQL data loader template

- Upgrade Pandas version to 1.5.3

- Improve K8s executor

- Pass environment variables to k8s job pods

- Use the same image from main mage server in k8s job pods

- Store and return sample block output for large JSON objects

- Support SASL authentication with Confluent Cloud Kafka in streaming pipeline

View full Changelog

Release 0.7.98 | Quantumania Release

Data integration

New sources

Full lists of available sources and destinations can be found here:

- Sources: https://docs.mage.ai/data-integrations/overview#available-sources

- Destinations: https://docs.mage.ai/data-integrations/overview#available-destinations

Improvements on existing sources and destinations

- Support deltalake connector in Trino destination

- Fix Outreach source bookmark comparison error

- Fix Facebook Ads source “User request limit reached” error

- Print more log statements during HubSpot source syncs to give the user more information on progress and activity

Databricks integration for Spark

Mage now supports building and running Spark pipelines with a remote Databricks Spark cluster.

Check out the guide to learn about how to use a Databricks Spark cluster with Mage.

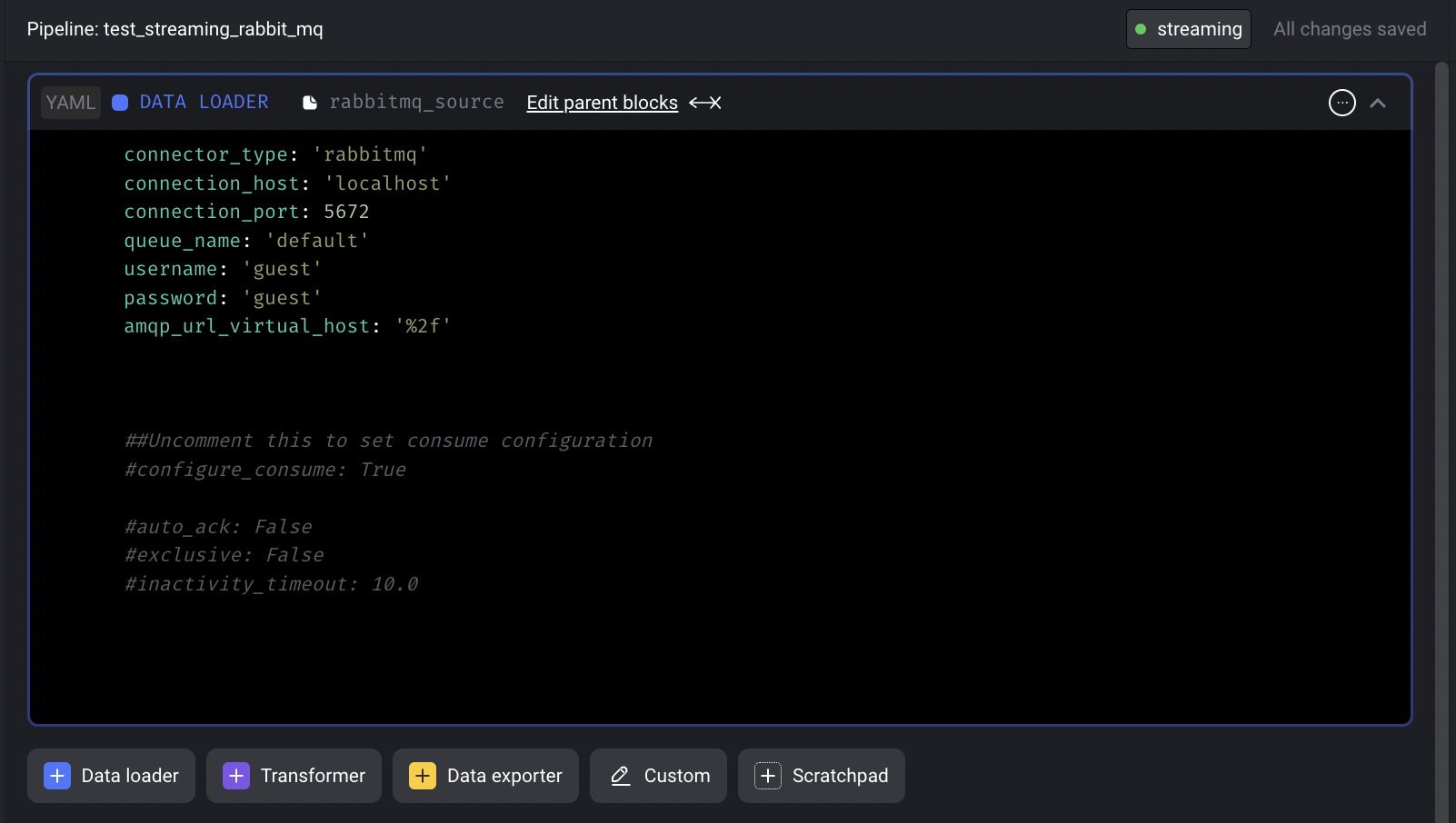

RabbitMQ streaming source

Shout out to Luis Salomão for his contribution of adding the RabbitMQ streaming source to Mage! Check out the doc to set up a streaming pipeline with RabbitMQ source.

DBT support for Trino

Support running Trino DBT models in Mage.

More K8s support

- Allow customizing the namespace by setting the `KUBE_NAMESPACE` environment variable.

- Support K8s executor on AWS EKS clusters.

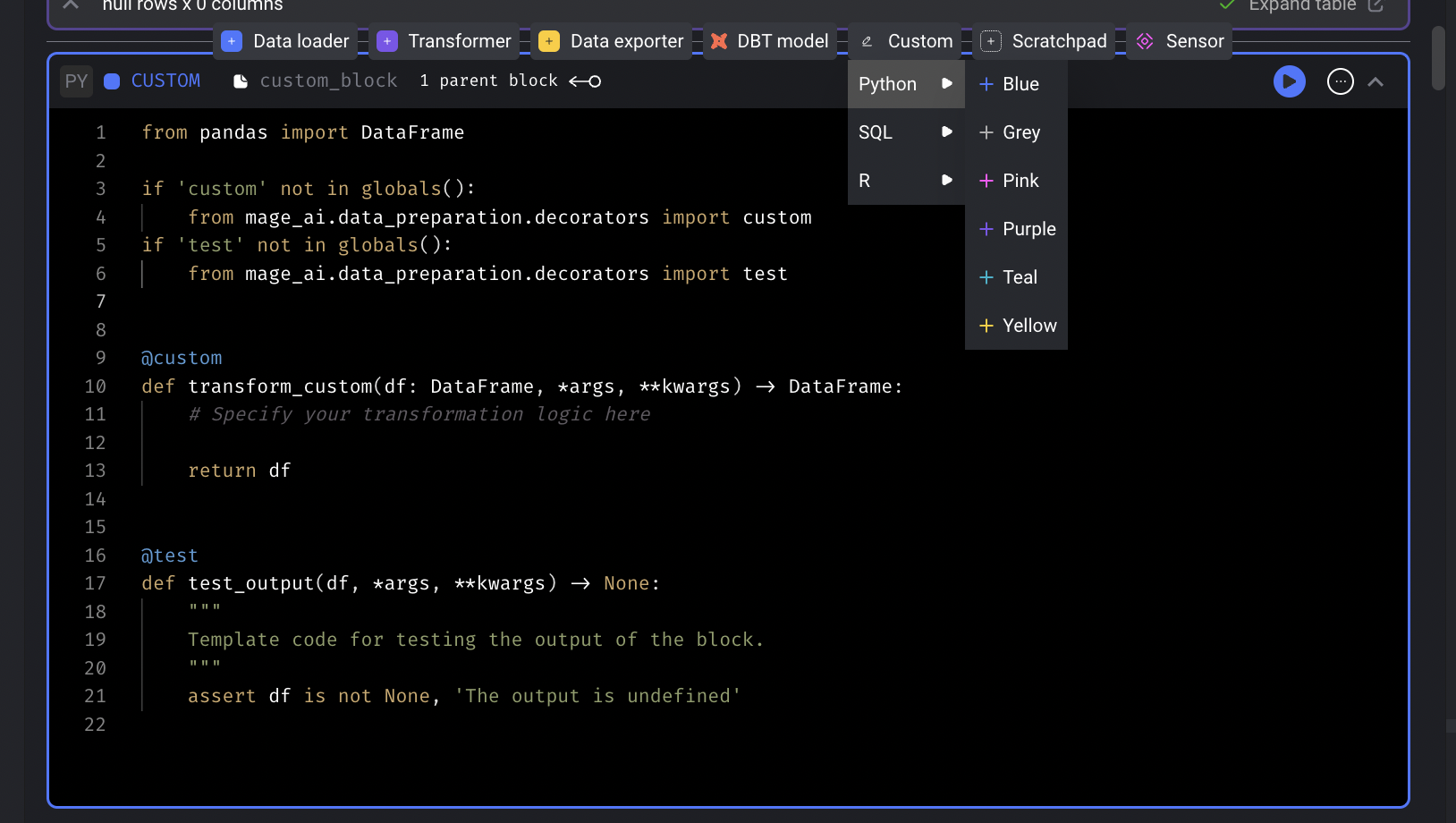

Generic block

Add a generic block that can run in a pipeline, optionally accept inputs, and optionally return outputs, without being a data loader, data exporter, or transformer block.

Other bug fixes & polish

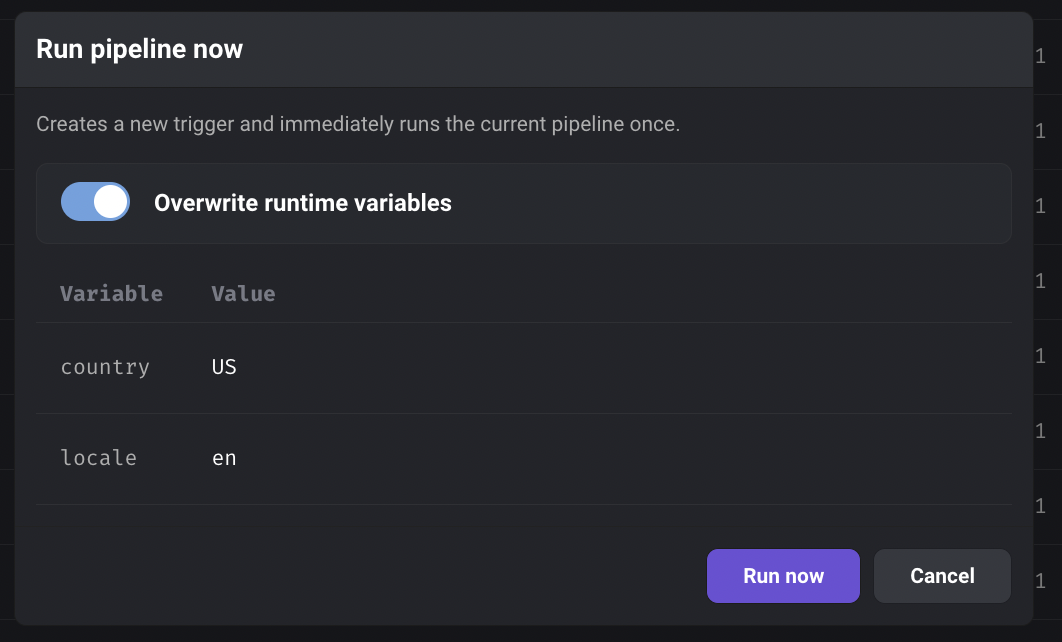

- Support overriding runtime variables when clicking the Run now button on the triggers list page.

- Support MySQL SQL block

- Fix the serialization for the column that is a dictionary or list of dictionaries when saving the output dataframe of a block.

- Allow selecting multiple partition keys for Delta Lake destination.

- Support copy and paste into/from Mage terminal.

View full Changelog

Release 0.7.90 | That '90s Show Release

Data integration

New sources

New destinations

Full lists of available sources and destinations can be found here:

- Sources: https://docs.mage.ai/data-integrations/overview#available-sources

- Destinations: https://docs.mage.ai/data-integrations/overview#available-destinations

Improvements on existing sources and destinations

- Trino destination

  - Support the `MERGE` command in the Trino connector to handle conflicts.

  - Allow customizing `query_max_length` to adjust batch size.

- MSSQL source

  - Fix datetime column conversion and comparison for MSSQL source.

- BigQuery destination

  - Fix BigQuery error “Deadline of 600.0s exceeded while calling target function”.

- Deltalake destination

  - Upgrade delta library from version 0.6.4 to 0.7.0 to fix some errors.

- Allow datetime columns to be used as bookmark properties.

- When clicking the apply button in the data integration schema table, if a bookmark column is not a valid replication key for a table or a unique column is not a valid key property for a table, don’t apply that change to that stream.
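`query_max_length` caps the size of each generated query, so it also bounds how many rows fit into one batch. The sketch below shows one way to pack rows under such a cap. `build_insert_batches` is hypothetical, the rows are pre-rendered strings used only to measure length, and the Trino connector's real batching logic may differ.

```python
from typing import List, Sequence


def build_insert_batches(
    table: str, rows: Sequence[str], query_max_length: int
) -> List[str]:
    """Pack pre-rendered value tuples into INSERT statements.

    Each statement stays within `query_max_length` characters, except when a
    single row is too long on its own (it then gets a statement by itself).
    Hypothetical helper for illustration; not the Trino connector's code.
    """
    prefix = f"INSERT INTO {table} VALUES "
    statements: List[str] = []
    current: List[str] = []
    current_len = len(prefix)
    for row in rows:
        # A ", " separator is only needed when the statement already has rows.
        added = len(row) + (2 if current else 0)
        if current and current_len + added > query_max_length:
            # Flush the full statement and start a new one with this row.
            statements.append(prefix + ", ".join(current))
            current, current_len = [], len(prefix)
            added = len(row)
        current.append(row)
        current_len += added
    if current:
        statements.append(prefix + ", ".join(current))
    return statements


rows = [f"({i}, 'x')" for i in range(5)]
statements = build_insert_batches("t", rows, query_max_length=40)
print(len(statements))  # 3
```

A production writer should bind values as query parameters rather than concatenating them into SQL text.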

New command line tool

Mage has a newly revamped command line tool, with better formatting, clearer help commands, and more informative error messages. Kudos to community member @jlondonobo for the awesome contribution!

DBT block improvements

- Support running Redshift DBT models in Mage.

- Raise an error if there is a DBT compilation error when running DBT blocks in a pipeline.

- Fix duplicate DBT source names error when the same source name appears across multiple `mage_sources.yml` files in different model subfolders: use only one sources file for all models instead of nesting them in subfolders.

Notebook improvements

- Support editing global variables in UI: https://docs.mage.ai/production/configuring-production-settings/runtime-variable#in-mage-editor

- Support creating or editing global variables in code by editing the pipeline `metadata.yaml` file: https://docs.mage.ai/production/configuring-production-settings/runtime-variable#in-code

- Add a save file button when editing a file not in the pipeline notebook.

- Support Windows keyboard shortcuts: CTRL+S to save files.

- Support uploading files through UI.

Store logs in GCP Cloud Storage bucket

Besides storing logs on the local disk or in AWS S3, you can now store logs in GCP Cloud Storage by adding a logging config to the project’s metadata.yaml like below:

```yaml
logging_config:
  type: gcs
  level: INFO
  destination_config:
    path_to_credentials: <path to gcp credentials json file>
    bucket: <bucket name>
    prefix: <prefix path>
```

Check out the doc for details: https://docs.mage.ai/production/observability/logging#google-cloud-storage

Other bug fixes & improvements

- SQL block improvements

  - Support writing raw SQL to customize the create table and insert commands.

  - Allow editing SQL block output table names.

- Support loading files from a directory when using `mage_ai.io.file.FileIO`. Example:

```python
from mage_ai.io.file import FileIO

# Load the files found in each listed directory.
file_directories = ['default_repo/csvs']
FileIO().load(file_directories=file_directories)
```

View full Changelog

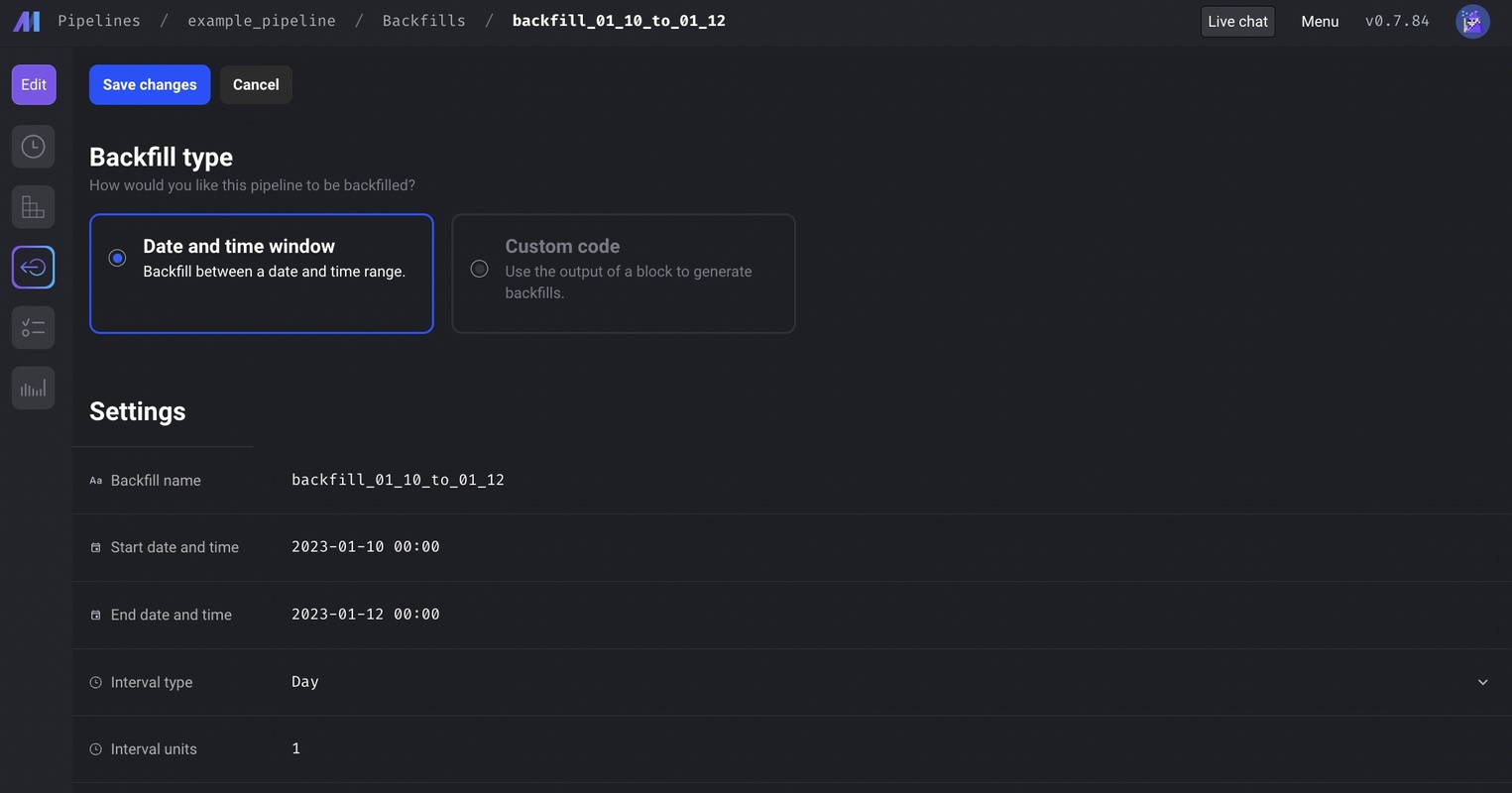

Release 0.7.84 | Rabbit Release

Backfill framework 2.0

Mage launched a new backfill framework to make backfills a lot easier. Users can select a date range and date interval for a backfill. Mage will automatically create the pipeline runs within the date range and run them concurrently to backfill the data.
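A date range plus an interval maps naturally onto a list of execution dates that can then run in parallel. Here is a rough sketch with three assumptions: `run_pipeline` is a hypothetical stand-in for a single pipeline run, the end date is inclusive, and a thread pool of 4 workers stands in for Mage's scheduler.

```python
from concurrent.futures import ThreadPoolExecutor
from datetime import date, timedelta
from typing import List


def backfill_dates(start: date, end: date, interval: timedelta) -> List[date]:
    """Execution dates from start to end (inclusive), stepping by interval."""
    if interval <= timedelta(0):
        raise ValueError("interval must be positive")
    count = (end - start) // interval + 1
    return [start + i * interval for i in range(count)]


def run_pipeline(execution_date: date) -> str:
    """Hypothetical stand-in for one pipeline run over one date partition."""
    return f"loaded partition {execution_date.isoformat()}"


dates = backfill_dates(date(2023, 1, 1), date(2023, 1, 7), timedelta(days=2))

# Runs execute concurrently; pool.map still yields results in input order.
with ThreadPoolExecutor(max_workers=4) as pool:
    results = list(pool.map(run_pipeline, dates))

for message in results:
    print(message)
```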

Docs

- Backfill framework overview: https://docs.mage.ai/orchestration/backfills/overview

- Backfill guide: https://docs.mage.ai/orchestration/backfills/guides

Data integration

New sources

New destinations

- Trino (all connectors)

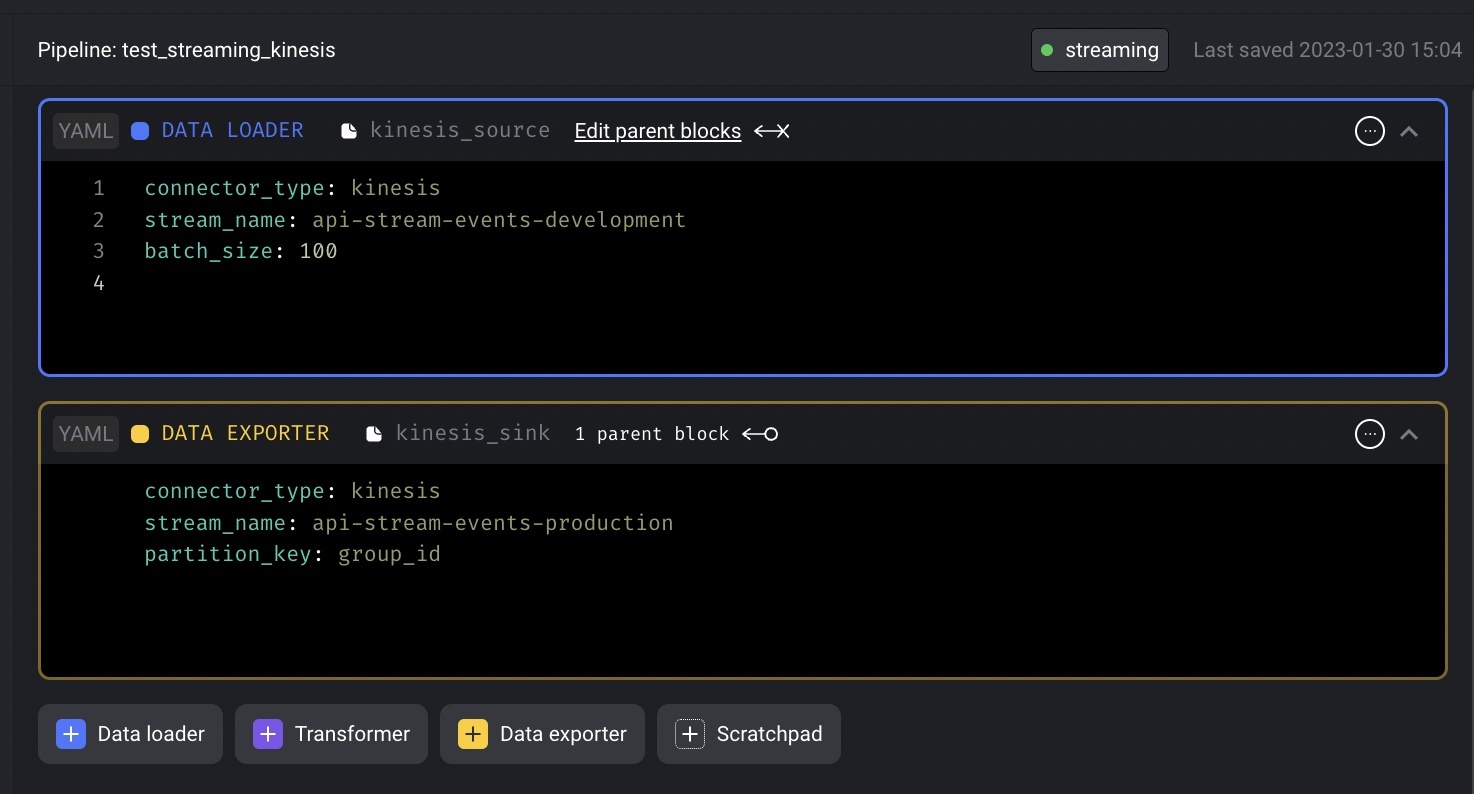

Streaming pipeline

Add Kinesis as a streaming source and destination (sink) for streaming pipelines.

- For the Kinesis streaming source, configure the source stream name and batch size.

- For the Kinesis streaming destination (sink), configure the destination stream name and partition key.

- To use the Kinesis streaming source and destination, make sure the following environment variables exist:

  - `AWS_ACCESS_KEY_ID`

  - `AWS_SECRET_ACCESS_KEY`

  - `AWS_REGION`
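Here is a small pre-flight check, not part of Mage, that reports which of these variables are unset or empty before you start a Kinesis pipeline. `missing_env_vars` is a hypothetical helper. Passing `env` explicitly makes it easy to test.

```python
import os
from typing import List, Mapping, Optional, Sequence

REQUIRED_AWS_VARS = ("AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY", "AWS_REGION")


def missing_env_vars(
    names: Sequence[str] = REQUIRED_AWS_VARS,
    env: Optional[Mapping[str, str]] = None,
) -> List[str]:
    """Return the required variable names that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in names if not env.get(name)]


missing = missing_env_vars()
if missing:
    print("Missing environment variables:", ", ".join(missing))
else:
    print("All Kinesis credentials are set.")
```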

Kubernetes support

- Support running Mage in Kubernetes locally: https://docs.mage.ai/getting-started/setup#using-kubernetes

- Support executing blocks in separate Kubernetes jobs: https://docs.mage.ai/production/configuring-production-settings/compute-resource#kubernetes-executor

blocks:

- uuid: example_data_loader

type: data_loader

upstream_blocks: []

downstream_blocks: []

executor_type: k8s

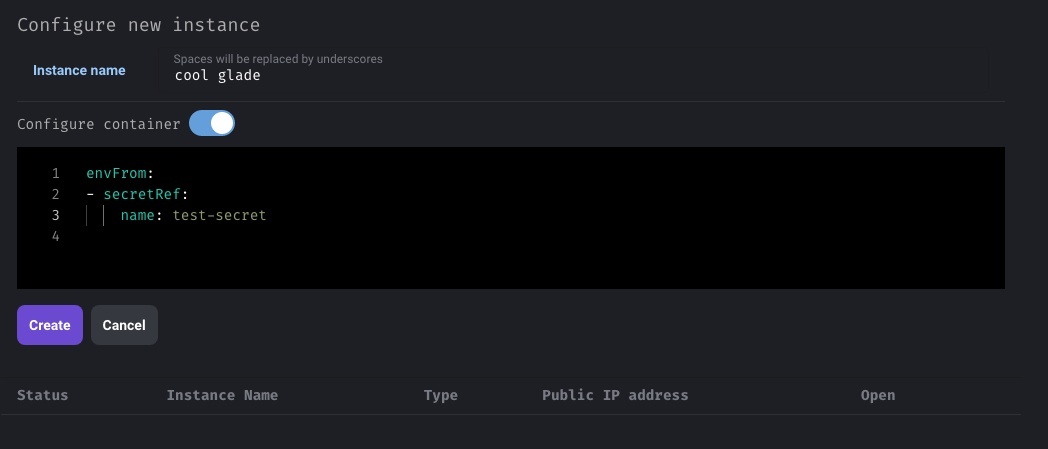

...- When managing dev environment in Kubernetes cluster, allow adding custom config for the mage container in Kubernetes.

DBT improvements

- Support running MySQL DBT models in Mage.

- When adding a DBT block to run all/multiple models, allow manual naming of the block.

Metaplane integration

Mage can run monitors in Metaplane via API integration. Check out the guide to learn about how to run monitors in Metaplane and poll statuses of the monitors.
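Polling a job or monitor status usually follows the same loop: request the status, stop on a terminal state, otherwise wait and retry until a timeout. Here is a generic sketch. It assumes a hypothetical `get_status` callable that wraps the vendor API and hypothetical terminal status names `completed` and `failed`; Metaplane's actual statuses may differ.

```python
import time
from typing import Callable, Sequence


def poll_until_done(
    get_status: Callable[[], str],
    done_statuses: Sequence[str] = ("completed", "failed"),
    interval_seconds: float = 5.0,
    timeout_seconds: float = 600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Call get_status() until it returns a terminal status or time runs out.

    `get_status` is a hypothetical callable wrapping the vendor's status API.
    """
    deadline = time.monotonic() + timeout_seconds
    while True:
        status = get_status()
        if status in done_statuses:
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"still {status!r} after {timeout_seconds}s")
        sleep(interval_seconds)


# Simulated status responses stand in for real API calls.
responses = iter(["running", "running", "completed"])
final = poll_until_done(lambda: next(responses), sleep=lambda _: None)
print(final)  # completed
```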

Other bug fixes & polish

- SQL block: support SSH tunnel connection in Postgres SQL block

  - Follow this guide to configure the Postgres SQL block to use an SSH tunnel

- R block: support accessing runtime variables in R block

  - Follow this guide to use runtime variables in R blocks

- Add a setting to skip the current pipeline run if the previous pipeline run hasn’t finished.

- Pass runtime variables to test functions. You can access runtime variables via `kwargs['key']` in test functions.

View full Changelog

Release 0.7.74 | Lunar Release

Data integration

New sources

Improvements on existing sources and destinations

- S3 source

  - Automatically add the `_s3_last_modified` column from the `LastModified` key, and enable the `_s3_last_modified` column as a bookmark property.

  - Allow filtering objects using regex syntax by configuring the `search_pattern` key.

  - Support multiple streams by configuring a list of table configs in the `table_configs` key: https://github.com/mage-ai/mage-ai/blob/master/mage_integrations/mage_integrations/sources/amazon_s3/README.md

- Postgres source log-based replication

  - Automatically add a `_mage_deleted_at` column to record the source row deletion time.

  - When the operation is update and the unique conflict method is ignore, create a new record in the destination.

- In source or destination YAML config, interpolate secret values from AWS Secrets Manager using the syntax `{{ aws_secret_var('some_name_for_secret') }}`. Here is the full guide: https://docs.mage.ai/production/configuring-production-settings/secrets#yaml
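The S3 filtering behavior (a regex on object keys plus `_s3_last_modified` as a bookmark) can be sketched as a filter over an object listing. This is a hypothetical helper, not the Mage S3 source. It assumes listings shaped like boto3's `list_objects_v2` `Contents` entries (`Key`, `LastModified`), uses `re.search` semantics for `search_pattern`, and keeps only objects modified strictly after the previous bookmark.

```python
import re
from datetime import datetime, timezone
from typing import Dict, List, Optional


def select_objects(
    objects: List[Dict],
    search_pattern: str,
    last_bookmark: Optional[datetime] = None,
) -> List[Dict]:
    """Filter S3-style object listings by key regex and bookmark.

    Hypothetical helper for illustration; not the Mage S3 source code.
    """
    pattern = re.compile(search_pattern)
    selected = []
    for obj in objects:
        if not pattern.search(obj["Key"]):
            continue
        # Skip objects not modified since the previous sync's bookmark.
        if last_bookmark is not None and obj["LastModified"] <= last_bookmark:
            continue
        # Copy LastModified into an _s3_last_modified column for bookmarking.
        selected.append({**obj, "_s3_last_modified": obj["LastModified"]})
    return selected


objects = [
    {"Key": "exports/orders_2023-01.csv", "LastModified": datetime(2023, 1, 31, tzinfo=timezone.utc)},
    {"Key": "exports/orders_2023-02.csv", "LastModified": datetime(2023, 2, 28, tzinfo=timezone.utc)},
    {"Key": "exports/customers.csv", "LastModified": datetime(2023, 2, 28, tzinfo=timezone.utc)},
]
new_objects = select_objects(
    objects,
    search_pattern=r"orders_\d{4}-\d{2}\.csv$",
    last_bookmark=datetime(2023, 2, 1, tzinfo=timezone.utc),
)
print([obj["Key"] for obj in new_objects])  # ['exports/orders_2023-02.csv']
```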

Full lists of available sources and destinations can be found here:

- Sources: https://docs.mage.ai/data-integrations/overview#available-sources

- Destinations: https://docs.mage.ai/data-integrations/overview#available-destinations

Customize pipeline alerts

Customize alerts to be sent only when a pipeline fails or succeeds (or both) via the `alert_on` config:

```yaml
notification_config:
  alert_on:
  - trigger_failure
  - trigger_passed_sla
  - trigger_success
```

Here are the guides for configuring the alerts:

- Email alerts: https://docs.mage.ai/production/observability/alerting-email#create-notification-config

- Slack alerts: https://docs.mage.ai/production/observability/alerting-slack#update-mage-project-settings

- Teams alerts: https://docs.mage.ai/production/observability/alerting-teams#update-mage-project-settings

Deploy Mage on AWS using AWS Cloud Development Kit (CDK)

Besides using Terraform scripts to deploy Mage to the cloud, Mage now also supports managing AWS cloud resources using the AWS Cloud Development Kit in TypeScript.

Follow this guide to deploy the Mage app to AWS using AWS CDK scripts.

Stitch integration

Mage can orchestrate the sync jobs in Stitch via API integration. Check out the guide to learn about how to trigger the jobs in Stitch and poll statuses of the jobs.

Bug fixes & polish

- Allow pressing the `escape` key to close the error message popup instead of having to click on the `x` button in the top right corner.

- If a data integration source has multiple streams, select all streams with one click instead of individually selecting every single stream.

- Make pipeline runs pages (both the overall `/pipeline-runs` page and the trigger `/pipelines/[trigger]/runs` pages) more efficient by avoiding individual requests for pipeline schedules (i.e. triggers).

- To avoid confusion when using the drag and drop feature to add dependencies between blocks in the dependency tree, the ports (white circles on blocks) on other blocks disappear while the drag feature is active. Dependency lines must be dragged from one block’s port onto another block itself, not onto another block’s port, which is what some users were doing previously.

- Fix positioning of newly added blocks. Previously, when adding a new block with a custom block name, the block was added to the bottom of the pipeline; now new blocks appear immediately after the block where they were added.

- Popup error messages now include both the stack trace and the traceback to help with debugging (previously they did not include the traceback).

- Update links to docs in code block comments (links were broken due to the recent docs migration to a different platform).

View full Changelog