-

Notifications

You must be signed in to change notification settings - Fork 5

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Spherical volume rendering #64

base: main

Are you sure you want to change the base?

Conversation

…der functionality based on dataset geometry

for more information, see https://pre-commit.ci

|

Looks like the commit history was preserved, so I won't worry about figuring out how the previous PR got closed... |

|

note to self: moving the spherical uniforms down to the |

|

more things to do:

|

|

latest push is a proof of concept for calculating the intersection points of a spherical block with a ray. All intersections with the 6 basic geometries defining the faces of a spherical subvolume are calculated: inner and outer spheres at fixed r, inner and outer cones at fixed theta, the two planes at fixed phi. The definition of the fixed-phi planes are calculated globally in the initial python loop over the blocks and passed down as vertex attributes which are passed along without modification to the fragment shader where all of the intersection points are calculated. To do:

|

|

|

||

| axis_id = self.data_source.ds.coordinates.axis_id | ||

|

|

||

| def calculate_phi_normal_plane(le_or_re): |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

do we spend enough time in this function that it makes sense to vectorize it? i.e., call if on a list of coordinates and then return an array?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Vectorizing this is a good idea! and I think will simplify un-tangling the spherical-only part of the calculation from the base cartesian version.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

| self.vertex_array.attributes.append( | ||

| VertexAttribute(name="phi_plane_re", data=phi_plane_re) | ||

| ) | ||

|

|

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

So it occurs to me that we should have the cartesian volume rendering as the basic option, and we can/should have spherical as a subclass. Let's not try cramming it all in; @neutrinoceros 's work on having things be visible in index-space coordinates is encouraging me to think of it in both ways.

That's not really specific here though, except that what we may want to do is to have this be a supplemental function that gets called if the component accessing the data is viewing it in spherical coordinates.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

ya! I agree totally! I just jammed it in here to get things in initially working. Unjamming it and subclassing it relates to your vectorization question I think. If I vectorize that function, it'll be easier to have a sublcass that takes the output from the standard cartesian version to calculate the new spherical-only attributes.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

latest push no longer breaks things for cartesian coords (it's now vectorized and only runs if spherical).

I still want to keep thinking on how to better capture the different geometries though. I initially thought it may make sense to have a SphericalBlockCollection, but implementing it wasn't super straightforward because the calculations rely on the left and right edges, which currently only get saved via the vertex_array attribute after casting to "f4". So a standard construction like:

class SphericalBlockCollection(BlockCollection):

def add_data(self, field, no_ghost=False):

super().add_data(field, no_ghost=no_ghost)

<< --- calculate phi-normal planes from left, right edges --- >>would require us also saving the full le and re arrays as attributes. Since they're only needed for an intermediate step, it seemed better to just add a geometry check to BlockCollection.add_data(). But i'm open to ideas here!

| float dir_dot_dir = dot(ray_dir, ray_dir); | ||

| float ro_dot_ro = dot(ray_origin, ray_origin); | ||

| float dir_dot_ro = dot(ray_dir, ray_origin); | ||

| float rsq = r * r; // could be calculated in vertex shader |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

which r is this? could we actually calculate it in the vertex shader? eventually this would need to be once for every cell edge, right?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

that would be the r from left_edge or right_edge. So I could calculate r^2 for both of those in the vertex shader, ya?

| // note: cos(theta) and vhat could be calculated in vertex shader | ||

| float costheta; | ||

| vec3 vhat; | ||

| if (theta > PI/2.0) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

It's entirely possible that this is what the compiler would do anyway, but I have found it to be simpler to write this as the sum of two different values multiplied by booleans. So you'd compute both and then add them. For instance, something like this, but with the casting done correctly:

first = theta > PI/2;

vhat = vec3(0,0,-1) * first + vec3(0,0,1) * (!first)

something like that, but I'm forgetting the right casting.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I did see that suggestion on some glsl forums, in some cases it can be more efficient than the full if statements? I think it would simplify the code here, will make that change.

| costheta = cos(theta); | ||

| } | ||

| float cos2t = pow(costheta, 2); | ||

| // note: theta = PI/2.0 is well defined. determinate = 0 in that case and |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

it may also be worth exploring using slight offsets instead of accounting for singularities; i.e., if ==0.0 produces a singularity, use 0.0 + epsilon

| float b = 2.0 * (ro_dot_vhat * dir_dot_vhat - ro_dot_dir*cos2t); | ||

| float c = pow(ro_dot_vhat, 2) - ro_dot_ro*cos2t; | ||

| float determinate = b*b - 2.0 * a_2 * c; | ||

| if (determinate < 0.0) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This set of nested conditionals probably does need refactoring. I think single-level nested conditionals might be manageable by the compiler but we should avoid multi-level nesting.

| { | ||

| return vec2(INFINITY, INFINITY); | ||

| } | ||

| else if (determinate == 0.0) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I think we do not want multiple return points

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

There can be 2 real intersections of the cone though? In most cases, only one of the intersections would also be on the face of the spherical voxel. But in some cases there could be 2.

| float t_p_2 = get_ray_plane_intersection(vec3(phi_plane_re), phi_plane_re[3], ray_position_xyz, dir); | ||

| vec2 t_cone_outer = get_ray_cone_intersection(right_edge[id_theta], ray_position_xyz, dir); | ||

| vec2 t_cone_inner= get_ray_cone_intersection(left_edge[id_theta], ray_position_xyz, dir); | ||

|

|

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

so once we have these, what do we do?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

- all those t values are the intersections with the base geometries on which the faces of the spherical voxel lies, so we need to find the t values that actually intersect the voxel, not just the base geometries. Those would be the ray entry/exit points of the current voxel.

- I think those entry/exit points correspond to t_min and t_max of the standard cartesian tracing? So we'd similarly want to take n_samples of the current fragment between those min/max values?

|

so after merging with main, the scaling is off. I suspect I'm now scaling the coordinates twice somewhere... |

|

also, can now make use of the preprocessor directives from #70 to better separate the spherical-only parts of the shaders. |

|

Check into the `YTPositionTrait` settings, which now convert to unitary

instead of leaving in code units.

…On Tue, Jun 6, 2023 at 3:20 PM Chris Havlin ***@***.***> wrote:

so after merging with main, there scaling is off. I suspect I'm now

scaling the coordinates twice somewhere...

—

Reply to this email directly, view it on GitHub

<#64 (comment)>,

or unsubscribe

<https://github.com/notifications/unsubscribe-auth/AAAVXO4ZIEOETMK4MTETAG3XJ6GJLANCNFSM6AAAAAASSFCE6A>

.

You are receiving this because you commented.Message ID:

***@***.***>

|

|

note to self: since the current shader iteration is returning single values for blocks and not actually doing any ray traversal, images will be progressively chunkier to nonsensical when using load_uniform_grid with decreasing nprocs. I think this should be fixed by actually evaluating along rays... but wanted to make a note here so I remember to double check. Following images generated with https://gist.github.com/chrishavlin/e5a4ee4c9032cf143cad01c24ffdf1af with decreasing nprocs nprocs 2048nprocs 1024nprocs 256 |

|

@chrishavlin Can you resolve the conflicts? |

| if self._yt_geom_str == "cartesian": | ||

| units = self.data_source.ds.units | ||

| ratio = (units.code_length / units.unitary).base_value | ||

| dx = dx * ratio | ||

| le = le * ratio | ||

| re = re * ratio |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

So I had to put the new scaling from #79 in this if statement....

I think we should still scale in the spherical case -- but I think we should just apply this ratio to the r coordinate. That sound right? I know we do want to scale theta and phi from 0 to 1 for the texture maps... but we don't want to scale theta and phi when calculating positions in model space.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I think that is right. Just scale r.

This is a follow up based on #42 from @sochowski

Fairly confident the geometry shaders and texture sampling are working as they should at this point, next step is to add some intersection checks in the fragment shader and then sample along the ray within each fragment.

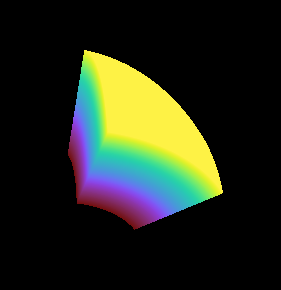

Image from the current version of

examples/amr_spherical_volume_rendering.pyshowing the radial component of a spherical dataset in the range of 0.5 to 1 in r, 0 to pi/4 in phi, pi/4 to pi/2 in theta.