An extension for Jupyter Lab & Jupyter Notebook to monitor Apache Spark (pyspark) from notebooks

Requirements:

- JupyterLab 3 or Jupyter Notebook 4.4.0 or higher

- Local pyspark 2/3, or sparkmagic to connect to a remote Spark instance
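To check which of the two setups a given Python environment already supports, a quick sketch like the one below can help. It only tests whether the `pyspark` and `sparkmagic` modules can be found. It does not check their versions or whether a remote cluster is reachable. The helper name `available_modules` is hypothetical.

```python
import importlib.util


def available_modules(names):
    """Return a dict mapping each module name to whether it can be found.

    importlib.util.find_spec locates a top-level module without importing it.
    """
    result = {}
    for name in names:
        try:
            result[name] = importlib.util.find_spec(name) is not None
        except (ImportError, ValueError):
            # Raised for a missing parent package (dotted names) or an empty name.
            result[name] = False
    return result


if __name__ == "__main__":
    status = available_modules(["pyspark", "sparkmagic"])
    if status["pyspark"]:
        print("Local pyspark found")
    elif status["sparkmagic"]:
        print("sparkmagic found: use it to connect to a remote Spark instance")
    else:
        print("Neither pyspark nor sparkmagic is installed")
```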

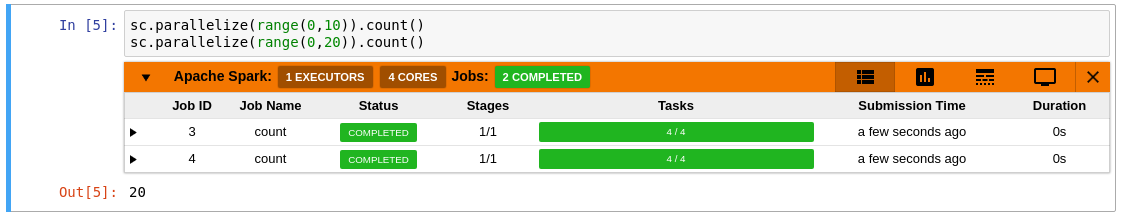

Features:

- Automatically displays a live monitoring tool below cells that run Spark jobs

- A table of jobs and stages with progress bars

- A timeline which shows jobs, stages, and tasks

- A graph showing the number of active tasks and executor cores over time

To set up the extension:

```bash
pip install sparkmonitor # install the extension

# set up an ipython profile and add our kernel extension to it
ipython profile create # if it does not exist
echo "c.InteractiveShellApp.extensions.append('sparkmonitor.kernelextension')" >> "$(ipython profile locate default)/ipython_kernel_config.py"
```
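The `echo ... >>` command appends to the kernel config every time it runs, so repeating the setup leaves duplicate lines in the file. A minimal Python sketch of an idempotent alternative only appends the line when it is missing. The function name `ensure_kernel_extension` is hypothetical, and you pass the config path yourself: the directory printed by `ipython profile locate default`, plus `ipython_kernel_config.py`.

```python
from pathlib import Path

# The same line that the `echo` command writes to the kernel config.
EXTENSION_LINE = "c.InteractiveShellApp.extensions.append('sparkmonitor.kernelextension')"


def ensure_kernel_extension(config_path):
    """Append EXTENSION_LINE to config_path unless it is already there.

    Returns True if the file was changed, False if the line was already present.
    The parent directory must already exist (e.g. after `ipython profile create`).
    """
    path = Path(config_path)
    existing = path.read_text() if path.exists() else ""
    if any(line.strip() == EXTENSION_LINE for line in existing.splitlines()):
        return False
    # Start on a fresh line if the file does not end with a newline.
    prefix = "\n" if existing and not existing.endswith("\n") else ""
    with path.open("a") as f:
        f.write(prefix + EXTENSION_LINE + "\n")
    return True
```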

```bash
# For use with jupyter notebook, install and enable the nbextension
jupyter nbextension install sparkmonitor --py
jupyter nbextension enable sparkmonitor --py

# The jupyterlab extension is automatically enabled
```

With the extension installed, a SparkConf object called `conf` will be available in your notebooks. You can use it as follows:

```python
from pyspark import SparkContext

# Start the spark context using the SparkConf object named `conf` that the extension created in your kernel.
# `conf` is injected by the kernel extension, so it is not imported here.
sc = SparkContext.getOrCreate(conf=conf)
```

If you already have your own Spark configuration, you will need to set `spark.extraListeners` to `sparkmonitor.listener.JupyterSparkMonitorListener` and `spark.driver.extraClassPath` to the path of the listener jar inside the installed sparkmonitor Python package, `path/to/package/sparkmonitor/listener_<scala_version>.jar`:

```python
from pyspark.sql import SparkSession

# Replace <X> and <scala_version> with your Python minor version and Spark's Scala version.
spark = (
    SparkSession.builder
    .config('spark.extraListeners', 'sparkmonitor.listener.JupyterSparkMonitorListener')
    .config('spark.driver.extraClassPath', 'venv/lib/python3.<X>/site-packages/sparkmonitor/listener_<scala_version>.jar')
    .getOrCreate()
)
```
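If you already keep your Spark settings in a dictionary, the two sparkmonitor entries can be merged in without overwriting listeners or classpath entries you have already set. The sketch below is an illustration, not part of the sparkmonitor API: `find_listener_jar` and `with_sparkmonitor` are hypothetical helpers, and it assumes classpath entries are separated by the platform path separator (`:` on Linux and macOS).

```python
import glob
import os

# Listener class name taken from the configuration shown above.
LISTENER_CLASS = "sparkmonitor.listener.JupyterSparkMonitorListener"


def find_listener_jar(package_dir, scala_version):
    """Return the path to listener_<scala_version>.jar inside package_dir."""
    jar = os.path.join(package_dir, f"listener_{scala_version}.jar")
    if not os.path.isfile(jar):
        # List the jars that are actually there so a version mismatch is easy to spot.
        available = sorted(
            os.path.basename(p)
            for p in glob.glob(os.path.join(package_dir, "listener_*.jar"))
        )
        raise FileNotFoundError(f"{jar} not found; available: {available}")
    return jar


def with_sparkmonitor(settings, jar_path):
    """Return a copy of `settings` with the sparkmonitor listener and jar added.

    Existing comma-separated listeners and classpath entries are preserved;
    classpath entries are assumed to be separated by os.pathsep.
    """
    merged = dict(settings)

    listeners = [
        name.strip()
        for name in merged.get("spark.extraListeners", "").split(",")
        if name.strip()
    ]
    if LISTENER_CLASS not in listeners:
        listeners.append(LISTENER_CLASS)
    merged["spark.extraListeners"] = ",".join(listeners)

    classpath = [
        entry
        for entry in merged.get("spark.driver.extraClassPath", "").split(os.pathsep)
        if entry
    ]
    if jar_path not in classpath:
        classpath.append(jar_path)
    merged["spark.driver.extraClassPath"] = os.pathsep.join(classpath)

    return merged
```

To use it, pass the directory of the installed package (for example `os.path.dirname(sparkmonitor.__file__)`) and a Scala version matching your Spark build to `find_listener_jar`, then hand each key/value pair of the merged dictionary to `SparkSession.builder.config(key, value)` before calling `getOrCreate()`.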

To connect to a remote Spark instance through sparkmagic:

- Set up sparkmagic and verify that everything is working

- Copy the required jar file to the remote Spark servers

- Add `listener_<scala_version>.jar` to the Spark job, for example by setting `spark.jars` to `https://github.com/swan-cern/sparkmonitor/releases/download/<release>/listener_<scala>.jar`

- Set `spark.extraListeners` as above

- Set the `SPARKMONITOR_KERNEL_HOST` environment variable for the Spark job using the sparkmagic conf. For YARN, you may use `spark.yarn.appMasterEnv` to set the variables
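The remote-setup steps can be collected into the JSON body that sparkmagic's `%%configure -f` cell magic sends to Livy when it (re)creates a session. This is a sketch for the YARN case only, assuming the jar is fetched from the GitHub release URL. `<release>`, `<scala>` and the kernel host are placeholders you must fill in, and `sparkmagic_session_conf` is a hypothetical helper. The kernel host is presumably the hostname or IP of the machine running the Jupyter kernel, reachable from the cluster.

```python
import json

LISTENER_CLASS = "sparkmonitor.listener.JupyterSparkMonitorListener"
# Release URL pattern from the step above; {release} and {scala} are filled in by the caller.
JAR_URL = "https://github.com/swan-cern/sparkmonitor/releases/download/{release}/listener_{scala}.jar"


def sparkmagic_session_conf(release, scala, kernel_host):
    """Build a session body for sparkmagic's %%configure magic (YARN case)."""
    return {
        "conf": {
            "spark.jars": JAR_URL.format(release=release, scala=scala),
            "spark.extraListeners": LISTENER_CLASS,
            # On YARN, spark.yarn.appMasterEnv.<NAME> sets an environment variable
            # on the application master, where the driver runs in cluster mode.
            "spark.yarn.appMasterEnv.SPARKMONITOR_KERNEL_HOST": kernel_host,
        }
    }


if __name__ == "__main__":
    # Placeholders are printed verbatim; replace them before use.
    print(json.dumps(sparkmagic_session_conf("<release>", "<scala>", "<kernel-host>"), indent=2))
```

The printed JSON, with the placeholders replaced, can then go into a notebook cell that starts with `%%configure -f`.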

If you'd like to develop the extension:

```bash
# See package.json scripts for building the frontend
yarn run build:<action>

# Install the package in editable mode
pip install -e .

# Symlink jupyterlab extension
jupyter labextension develop --overwrite .

# Watch for frontend changes
yarn run watch

# Build the spark JAR files
sbt +package
```

- This project was originally written by krishnan-r as a Google Summer of Code project for Jupyter Notebook with the SWAN Notebook Service team at CERN.

- Further fixes and improvements were made by the team at CERN and members of the community, and maintained at swan-cern/jupyter-extensions/tree/master/SparkMonitor.

- Jafer Haider created the fork jupyterlab-sparkmonitor to update the extension to be compatible with JupyterLab as part of his internship at Yelp.

- This repository merges all the work done above and provides support for Lab & Notebook from a single package.

This repository is published to PyPI as `sparkmonitor`.

Changelog:

- 2.x: see the GitHub releases page of this repository

- 1.x and below were published from swan-cern/jupyter-extensions, and some initial versions from krishnan-r/sparkmonitor