42 school project ft_linear_regression could be seen as an entrypoint to the data science branch of 42-school, in the outer-circle holygraph.

This project is an introduction the field and does not have the pretention to be a fancy data science project.

Machine learning modules, and any module doing the job, are forbidden.

- Preview

- Subject

- My solution to linear regression

- Usage : venv and run

- Classes and files

- Training : predicting output values with gradient descent algorithm

- ft_linear_regression functionalities

Figure 1. Loss surface visualization. Cost function

Figure 2. Model Training. Left panel: normalized dataset scatterplot representation, with the line to the predicted value after training. Right panels: Cost function,

The objective is to implement a simple linear regression with a single feature, from scratch. The choice of programming language is free, but should suitable for visualizing data. Using librairies is authorized, except for the ones that does all the work. For example, using python’s numpy.polynomial() function or scikit-learn library would be considered as cheating.

Monovariate : Car mileage as inputs, car price as output

| km | price |

|---|---|

| 240000 | 3650 |

| 139800 | 3800 |

| 150500 | 4400 |

| ... | ... |

A first program predict.py is predicting the price of a car for a given mileage. The prediction is based on the following model hypothesis :

estimatePrice(mileage) = θ0 + (θ1 ∗ mileage)

Parameters thetas are set to 0 by default, if training did not occur yet.

A second program training.py is training the model, from a data.csv train set. According to the hypothesis, both parameters thetas are updated with gradient-descent algorithm.

The two programs cannot directly communicate. Model parameters issued from training dataset, should be stored and be accessible independently of runtime (Data persistency).

• Plotting the data into a graph to see repartition.

• Plotting the line resulting from linear regression training into the same graph.

• Calculating the precision of the implemented algorithm.

• Any feature that is making sense

To implement linear regression from scratch, I chose Python language.

Librairies : The power of numpy, a pinch of pandas and matplotlib for visualisation.

a virtual environment is necessary so that python and its dependencies are running in an isolated manner, independently from the "system" Python (the host machine).Virtualization with the help of Docker could be a way to do that in a more complex context. Here, only python installer pip, python3 and few libraries are needed.Thus, virtualenv is the most straightforward tool (virtualenv doc. and python doccs), and can install a virtual environment from these shell command :

virtualenv ./venv/

/venv/bin/pip install -r requirements.txtMakefile capabilities were usedto set up virtual environment for Python, run programs or clean files. Of course, there is no compilation occuring since Python is an interpreted language.

make command will install the virtual environment with dependencies specified in the requirements.txt file.

make predict to execute the predict.py program.

make training to execute the training.py program.

make flake to check for norm with flake8.

make clean to remove __pycache__ and .pyc files.

make fclean to remove the virtual environement after applying the clean rule.

Run with predict.py or training.py

After, that virtual environment and requirements are installed.

Run with virtual environment python

venv/bin/python predict.pyOtherwise Activate of the virtual environment

source /venv/bin/activateThis will change the shell prompt, to (venv) and allow to directly use venv/bin/*.

Type only pip or python of the venv with only one word, no need for /venv/bin/ prefix.

python predict.pygraph TD;

A[predict.py]-->|instanciate|B[class <br> PredictPriceFromModel];

C{model <br> parameters <br> persistency}--read-->A[predict.py];

D[training.py]--instanciates-->E[class <br> CarPriceDatasetAnalysis];

E[class <br> CarPriceDatasetAnalysis]-->F[class <br> LinearRegressionGradientDescent];

E[class <br> CarPriceDatasetAnalysis]--writes-->C{model <br> parameters <br> persistency};

G{car price <br> training <br> dataset}--read-->E[class <br> CarPriceDatasetAnalysis];

The objective is to find a solution to the linear hypothesis model.

For multiple linear regression, the output response (

Predicted output

In our model, the hypothesis is that price is depending only on mileage, therefore

For any x input value, and more specifically any

Output predicted value

For any given

The linear-fit relationship to the given dataset is based on the Sum of Squared Residuals Method, trying to find the minimize

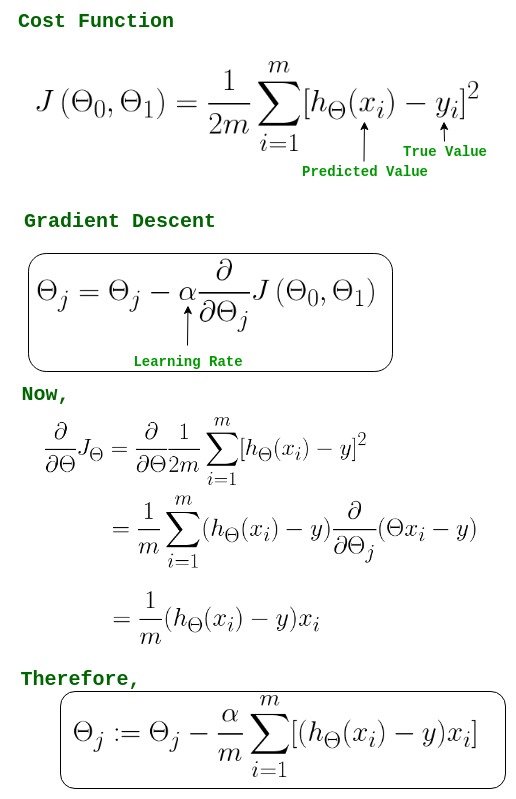

The cost function of the linear regression

cost function

To implement the gradient descent algorithm, to keeping it simple, the slope of the cost function according to each

Partial derivative of

Partial derivative of

numpy simplifies the equation translation into python coding language :

partial_derivative = np.zeros(2)

partial_derivative[0] = np.mean(residual)

partial_derivative[1] = np.mean(np.multiply(self.x, residual))

self.theta -= self.alpha * partial_derivativeDevelopped explanation are found here : geeksforgeeks.com : gradient descent in linear regression articles

**Basically, at any step of the learning process:

The pair

In addition to the algoritmic implementation, there is other functional aspects.

At the runtime, user's input with (Y/N) allow to control training and plotting features.

This interactivity also allow to skip optional features to focus on training parameter optimisation , learning rate and epochs.

-

normalization of the dataset. The values are in thousands order of magnitude (both mileage and price) and needed to be scaled.

-

data persistency : Subsequently, linear regression parameters has to be stored in a file, so that the model could be further used by the

predict.pyprogram. -

model metrics for linear regression analysis and a model accuracy report. statistics_utils.py

Providing many plots, using matplotlib.

- 3D plot : cost function

$J(\theta_0, \theta_1)$ , log-scaled. Allows a visual explanation forminimal cost(s)point(s) andgradient descent.` - Scatterplot of the trained dataset.

- Same scatterplot with the regression line. The equation, leraning rate and epochs and shown.

- Plot of cost function**

$J(\theta_0, \theta_1)$ over epochs, to show the descent to the minimal cost. - plot of hypothesis parameters

$\theta_0$ and$\theta_1$ over epochs.

Allows to predict price for a given mileage. This relies on persistent data, the model parameters file.

- model parameters are set to value zero, if the persistent model file cannot be read.

- Exceptions are thrown if the user input price is not valid.