Home

This wiki contains all information on the Hive software and how to set it up on the testrack hardware.

Hive is a project that allows us to test probe-rs against a multitude of probes and targets. The problem with properly testing debugging software like probe-rs is that it interoperates very closely with debug probes and targets. Modeling that hardware would require writing mocks for every available probe and target, which is practically impossible.

The idea of Hive is to test probe-rs directly on real probe and target hardware. This comes with its own challenges, but it makes us more confident that our code works properly on the variety of probes and targets that probe-rs is tested against with Hive.

Hive consists of a hardware testrack and its corresponding software.

The following sections briefly explain how the software works.

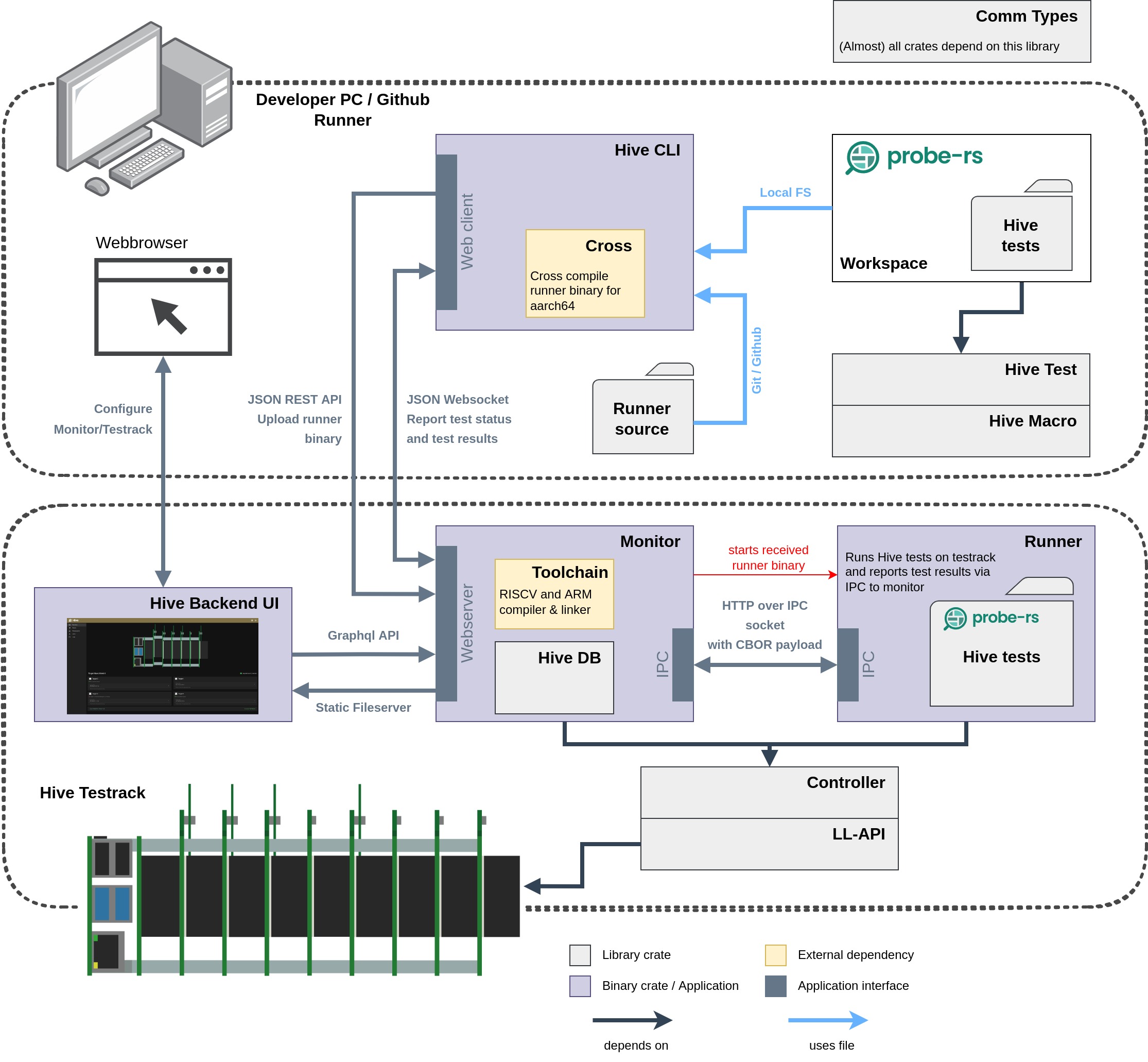

The software consists of three main parts which provide all the functionality required to actually perform tests on the hardware:

The monitor is the heart of the Hive testrack. It runs the webserver, performs all necessary hardware initialization and hosts the database used to store configuration and user data. It also manages things the tests depend on, such as testprograms (more on that later). This binary is meant to run constantly on the Hive testrack to provide all of this functionality. The webserver is Hive's only interface to the outside world and handles, among other things, configuration and test requests.

The runner executes the tests on the hardware using the current probe-rs testcandidate. Because the testcandidate is expected to change with every test run, the runner binary is unique to each run. Besides the testcandidate, it contains everything needed to control the testrack hardware, so it can run the tests without any help from the monitor.

The monitor and runner have a special relationship: the monitor performs all necessary hardware preparation and then executes the runner binary to perform the tests. The two binaries communicate with each other via IPC.

The Hive CLI allows probe-rs developers and GitHub runners to run tests on Hive testracks. It is more than a simple HTTP client that issues requests to the monitor on the testrack: it also builds the runner binary from the developer's local probe-rs testcandidate and its tests.

An overview of all crates in the hive-software workspace is shown in the image below:

To understand how the software works it is important to understand how a Hive test is actually performed:

As a first step, a testprogram is flashed onto the target. This ensures that every tested target is in the same consistent state before the test functions are executed. The testprogram does not need any specific functionality, but it can be used to extend the testing capabilities. Because the testprogram is assumed to have been flashed correctly, placing known constants in it makes it possible to test probe-rs read operations against them. It also makes it easy to test things like breakpoints and halting the core.
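To illustrate the idea of testing reads against a known constant, here is a minimal sketch. The trait `TargetMemory`, the address `0x2000_0000` and the value `0xDEAD_BEEF` are hypothetical; the trait is only loosely modeled on the kind of 32-bit memory read probe-rs offers and is not its actual API. A `FakeTarget` stands in for real hardware so the sketch runs anywhere.

```rust
use std::collections::HashMap;

// Hypothetical stand-in for the memory interface of the probe-rs testcandidate.
trait TargetMemory {
    fn read_word_32(&mut self, address: u64) -> Result<u32, String>;
}

// Hypothetical location and value of a constant embedded in the testprogram.
const TEST_CONSTANT_ADDR: u64 = 0x2000_0000;
const TEST_CONSTANT_VALUE: u32 = 0xDEAD_BEEF;

/// Reads the known constant and fails if the value differs from what the
/// flashed testprogram is assumed to contain.
fn check_constant_read<M: TargetMemory>(mem: &mut M) -> Result<(), String> {
    let value = mem.read_word_32(TEST_CONSTANT_ADDR)?;
    if value == TEST_CONSTANT_VALUE {
        Ok(())
    } else {
        Err(format!(
            "expected {:#010x} at {:#x}, read {:#010x}",
            TEST_CONSTANT_VALUE, TEST_CONSTANT_ADDR, value
        ))
    }
}

/// In-memory fake target so the sketch runs without hardware.
struct FakeTarget {
    words: HashMap<u64, u32>,
}

impl TargetMemory for FakeTarget {
    fn read_word_32(&mut self, address: u64) -> Result<u32, String> {
        self.words
            .get(&address)
            .copied()
            .ok_or_else(|| format!("unmapped address {:#x}", address))
    }
}
```

Because the expected value is fixed at testprogram build time, a mismatch points at the read path of the testcandidate rather than at the target's state.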

After this initialization step, the probe is connected to the target and to the computer. Test logic can now be executed that uses the probe-rs testcandidate to access the target.

A test request is usually issued with the Hive CLI, although other applications can issue test requests too if they implement the required functionality. Before sending any request to the testrack, the Hive CLI creates a workspace and copies the probe-rs testcandidate source as well as the runner source code into it. It then uses Cross to cross-compile the runner binary, together with that specific probe-rs testcandidate, for the architecture of the Raspberry Pi 4B in the testrack.
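A minimal sketch of how the CLI could assemble the Cross invocation with `std::process::Command`. It assumes the Raspberry Pi 4B runs a 64-bit Linux (hence the `aarch64-unknown-linux-gnu` triple) and that the runner crate is called `runner`; both are hypothetical placeholders, not taken from the Hive sources. The command is only built here, not executed.

```rust
use std::path::Path;
use std::process::Command;

// Assumed target triple for a Raspberry Pi 4B running a 64-bit Linux OS.
const RPI4_TARGET: &str = "aarch64-unknown-linux-gnu";

/// Builds (but does not run) a `cross build` invocation for the runner crate
/// inside the prepared workspace. The package name `runner` is hypothetical.
fn runner_build_command(workspace: &Path) -> Command {
    let mut cmd = Command::new("cross");
    cmd.current_dir(workspace).args([
        "build",
        "--release",
        "--target",
        RPI4_TARGET,
        "--package",
        "runner",
    ]);
    cmd
}
```

Calling `.status()` on the returned command would run the build; a non-zero exit status corresponds to the build-failure case where no request is sent to the testrack.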

Originally, the runner was compiled by the monitor on the testrack. However, compiling the runner binary on the Raspberry Pi took a significant amount of time, 5 to 15 minutes depending on whether the cargo cache already existed. Since most developers have access to faster hardware when running the CLI, it made sense to move the build step from the server side to the client side. This also simplifies the monitor logic considerably while only marginally increasing the complexity of the Hive CLI.

If the build fails, no request is sent and the build error, including the exact issue, is reported to the user. Otherwise, the CLI initiates the test request as follows:

As can be seen in the image above, the monitor receives the initial test request with the runner binary and generates a WS-ticket for it. The WS-ticket is a base64-encoded random sequence of bytes that secures the monitor's websocket endpoint, since only clients that issued a valid test request should be able to open a websocket connection. It also identifies the associated cached test request once a websocket is opened with that ticket.
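The ticket generation can be sketched with the standard library alone. This version reads 32 random bytes from Linux's `/dev/urandom` and encodes them with a hand-written standard base64 encoder (RFC 4648, with `=` padding). The ticket length and the use of `/dev/urandom` are assumptions; the monitor may use a different random source, length or base64 crate.

```rust
use std::fs::File;
use std::io::{self, Read};

// Hypothetical ticket length in random bytes.
const TICKET_BYTES: usize = 32;
const ALPHABET: &[u8; 64] = b"ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/";

/// Standard base64 encoding with `=` padding.
fn base64_encode(input: &[u8]) -> String {
    let mut out = String::with_capacity((input.len() + 2) / 3 * 4);
    for chunk in input.chunks(3) {
        // Pack up to three bytes into a 24-bit group, zero-filling missing bytes.
        let b1 = *chunk.get(1).unwrap_or(&0);
        let b2 = *chunk.get(2).unwrap_or(&0);
        let n = (u32::from(chunk[0]) << 16) | (u32::from(b1) << 8) | u32::from(b2);
        // A chunk of k bytes yields k + 1 base64 characters; the rest is padding.
        for i in 0..4usize {
            if i <= chunk.len() {
                let idx = ((n >> (18 - 6 * i)) & 0x3F) as usize;
                out.push(ALPHABET[idx] as char);
            } else {
                out.push('=');
            }
        }
    }
    out
}

/// Reads random bytes from the OS and encodes them as a WS-ticket.
fn generate_ws_ticket() -> io::Result<String> {
    let mut bytes = [0u8; TICKET_BYTES];
    File::open("/dev/urandom")?.read_exact(&mut bytes)?;
    Ok(base64_encode(&bytes))
}
```

A cryptographically secure source matters here because the ticket is the only thing guarding the websocket endpoint.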

The generated WS-ticket is then returned to the client in the response. The client must then open a websocket on the monitor using the provided WS-ticket within a certain timeframe (WS-tickets currently expire after 30 s). If the monitor receives a valid WS-ticket on a websocket upgrade request, it moves the associated cached task into the valid task queue, where it awaits execution. The websocket is then used to push real-time status messages about the test run and, ultimately, the test results back to the client (in our case the Hive CLI). Once the results have been sent, both parties close the websocket and the test request is fulfilled.
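The ticket lifecycle described above can be modeled as a small cache. This is a minimal sketch under stated assumptions: `TestTask` and `TaskCache` are hypothetical names, the 30 s lifetime comes from the text, and consuming a ticket on any redemption attempt (valid or not) is a design choice of this sketch, not confirmed Hive behavior. Time is passed in explicitly so the logic is easy to test.

```rust
use std::collections::{HashMap, VecDeque};
use std::time::{Duration, Instant};

// WS-tickets expire after 30 s, as described above.
const TICKET_TTL: Duration = Duration::from_secs(30);

// Hypothetical placeholder for a cached test request.
#[derive(Debug, PartialEq)]
struct TestTask {
    runner_binary: Vec<u8>,
}

#[derive(Default)]
struct TaskCache {
    pending: HashMap<String, (Instant, TestTask)>,
    valid_queue: VecDeque<TestTask>,
}

impl TaskCache {
    /// Caches a task under a freshly issued ticket.
    fn insert(&mut self, ticket: String, task: TestTask, now: Instant) {
        self.pending.insert(ticket, (now, task));
    }

    /// Called on a websocket upgrade request. If the ticket is known and not
    /// expired, its task moves into the valid queue. The ticket is removed in
    /// every case, so it cannot be reused.
    fn redeem(&mut self, ticket: &str, now: Instant) -> bool {
        match self.pending.remove(ticket) {
            Some((issued, task)) if now.duration_since(issued) <= TICKET_TTL => {
                self.valid_queue.push_back(task);
                true
            }
            _ => false,
        }
    }

    /// Drops cached requests whose tickets were never redeemed in time.
    fn purge_expired(&mut self, now: Instant) {
        self.pending
            .retain(|_, (issued, _)| now.duration_since(*issued) <= TICKET_TTL);
    }
}
```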

Of course, a lot more happens between opening and closing the websocket. Once the websocket has been opened, the associated test request is considered valid and moved into the execution queue. This queue and the request cache are managed in the monitor by the internal task manager. Alongside the task manager runs the task runner, which receives tasks from the task manager and executes them. Once the task runner receives our test request, it starts its initialization routine to prepare various parts of the software for test execution:
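One way to picture the split between task manager and task runner is a channel feeding a single worker thread, so tasks execute strictly one after another on the shared hardware. This is a hypothetical sketch using `std::sync::mpsc`; the names `TestTask`, `task_runner` and `spawn_task_runner` are illustrative, and the real monitor may be built on an async runtime instead.

```rust
use std::sync::mpsc;
use std::thread;

// Hypothetical task type; in Hive it would carry the runner binary and more.
#[derive(Debug)]
struct TestTask {
    id: u32,
}

/// The task runner: receives tasks one at a time and executes them in order.
/// Returns the IDs of the processed tasks once the sending side is closed.
fn task_runner(tasks: mpsc::Receiver<TestTask>) -> Vec<u32> {
    let mut done = Vec::new();
    for task in tasks {
        // Placeholder for: initialize hardware if needed, launch the runner,
        // forward results to the client.
        done.push(task.id);
    }
    done
}

/// Starts the task runner thread; the task manager keeps the sender and
/// pushes validated tasks into it.
fn spawn_task_runner() -> (mpsc::Sender<TestTask>, thread::JoinHandle<Vec<u32>>) {
    let (tx, rx) = mpsc::channel();
    let handle = thread::spawn(move || task_runner(rx));
    (tx, handle)
}
```

A single consumer is a natural fit here because there is only one physical testrack, so two test runs must never overlap.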

- Reinitialize the hardware (if configuration data in the DB changed). This includes rebuilding and linking the active testprogram and reflashing it onto all targets.

- Rebuild and link the active testprogram and reflash it onto the targets (if the testprogram changed and the hardware was not reinitialized).
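The decision between the two initialization steps above can be written as a small function. The `Changes` flags and the `InitStep` enum are hypothetical names for this sketch; the point is that a hardware reinitialization already covers the testprogram, so the second step only applies when the first one does not.

```rust
// Hypothetical flags describing what changed since the last test run.
struct Changes {
    config_changed: bool,
    testprogram_changed: bool,
}

#[derive(Debug, PartialEq)]
enum InitStep {
    // Full reinit, which already rebuilds and reflashes the testprogram.
    ReinitializeHardware,
    // Only rebuild, link and reflash the active testprogram.
    RebuildAndReflashTestprogram,
    // Nothing changed, the hardware can be used as-is.
    Nothing,
}

fn init_step(changes: &Changes) -> InitStep {
    if changes.config_changed {
        InitStep::ReinitializeHardware
    } else if changes.testprogram_changed {
        InitStep::RebuildAndReflashTestprogram
    } else {
        InitStep::Nothing
    }
}
```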

At this point the hardware is ready for testing. Up to now, the monitor has had full control over the debug probes. Before launching the runner, the monitor must drop its probe handles to release the USB devices. It then launches the runner binary provided by the test request, and the monitor and runner first establish a connection over an IPC socket. From this point on, the runner takes over.
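The IPC exchange between monitor and runner can be sketched with a Unix domain socket pair and a line-based request/response protocol. Everything protocol-related here is hypothetical: the request name `GET_PROBE_ASSIGNMENTS`, the reply format and the `IpcClient` type are illustrations only, and the real binaries may use a different transport framing and serialization. The sketch is Unix-only because it relies on `std::os::unix::net::UnixStream`.

```rust
use std::io::{self, BufRead, BufReader, Write};
use std::os::unix::net::UnixStream;
use std::thread;

/// Monitor side: answers line-based requests until the runner hangs up.
fn serve_monitor(stream: UnixStream) -> io::Result<()> {
    let mut writer = stream.try_clone()?;
    for line in BufReader::new(stream).lines() {
        let reply = match line?.as_str() {
            // Hypothetical request and reply describing probe assignments.
            "GET_PROBE_ASSIGNMENTS" => "channel0=probeA;channel1=probeB",
            _ => "ERR unknown request",
        };
        writeln!(writer, "{}", reply)?;
    }
    Ok(())
}

/// Runner side: sends one request per line and reads exactly one reply line.
struct IpcClient {
    writer: UnixStream,
    reader: BufReader<UnixStream>,
}

impl IpcClient {
    fn new(stream: UnixStream) -> io::Result<Self> {
        Ok(Self { writer: stream.try_clone()?, reader: BufReader::new(stream) })
    }

    fn request(&mut self, req: &str) -> io::Result<String> {
        writeln!(self.writer, "{}", req)?;
        let mut reply = String::new();
        self.reader.read_line(&mut reply)?;
        Ok(reply.trim_end().to_string())
    }
}

/// Connects both ends in-process; in Hive they would be two separate binaries.
fn connect_in_process() -> io::Result<(IpcClient, thread::JoinHandle<io::Result<()>>)> {
    let (monitor_end, runner_end) = UnixStream::pair()?;
    let monitor = thread::spawn(move || serve_monitor(monitor_end));
    Ok((IpcClient::new(runner_end)?, monitor))
}
```

Dropping the client closes the runner's end of the socket, which ends the monitor's read loop.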

Like the monitor, the runner first needs to perform some initialization. It needs to know the current hardware configuration, such as which probe is connected to which testchannel and where each target is connected, and it requests all of that information from the monitor over IPC. Once it has received the information, it initializes the hardware accordingly. It also checks whether the data matches what it actually detects on the hardware. If it finds any discrepancy, the runner fails, as this indicates that the user changed the hardware or that some other hardware error prevented proper detection.
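The consistency check can be sketched as a comparison between what the monitor reports and what the runner detects. The `ProbeAssignments` type (testchannel index mapped to an optional probe serial) is a hypothetical simplification of the real hardware configuration, which also covers targets.

```rust
use std::collections::BTreeMap;

// Hypothetical: testchannel index -> serial of the assigned probe, if any.
type ProbeAssignments = BTreeMap<u8, Option<String>>;

/// Lists every mismatch between the monitor's data and the detected hardware.
/// Any entry means the runner should abort instead of running tests.
fn find_discrepancies(expected: &ProbeAssignments, detected: &ProbeAssignments) -> Vec<String> {
    let mut problems = Vec::new();
    for (channel, want) in expected {
        // A channel missing from the detection result counts as "no probe".
        let got = detected.get(channel).cloned().flatten();
        if *want != got {
            problems.push(format!("channel {}: expected {:?}, detected {:?}", channel, want, got));
        }
    }
    for (channel, got) in detected {
        // A probe on a channel the monitor does not know about is also an error.
        if got.is_some() && !expected.contains_key(channel) {
            problems.push(format!("channel {}: unexpected probe {:?}", channel, got));
        }
    }
    problems
}
```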

If all goes well, the runner starts the test threads, one for each testchannel that has a debug probe connected. From now on, the probe-rs testcandidate has full control over the debug probes. The runner itself only collects all the Hive testfunctions it was built with and sorts them in the order specified by the user. It then connects each testchannel that has a debug probe to each available target. On each of those connections, it executes the testfunctions in order, collects the result of each call (for example, whether it panicked) and stores it in a global buffer.
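The threading model described above can be sketched with standard threads, `std::panic::catch_unwind` to turn a panicking testfunction into a failed result, and an `Arc<Mutex<Vec<_>>>` standing in for the global result buffer. `TestFn`, `TestResult` and `run_all` are hypothetical names, and probes and targets are reduced to plain strings; the actual runner connects real probes to real targets between the loops.

```rust
use std::panic;
use std::sync::{Arc, Mutex};
use std::thread;

// Hypothetical testfunction descriptor: name, user-defined order, test body.
#[derive(Clone, Copy)]
struct TestFn {
    name: &'static str,
    order: u32,
    run: fn(probe: &str, target: &str),
}

#[derive(Debug, PartialEq)]
struct TestResult {
    probe: String,
    target: String,
    test: &'static str,
    passed: bool,
}

/// Spawns one thread per testchannel (probe). Each thread runs every
/// testfunction, in order, against every target and buffers the outcomes.
fn run_all(probes: &[&str], targets: &[&'static str], mut tests: Vec<TestFn>) -> Vec<TestResult> {
    tests.sort_by_key(|t| t.order);
    let tests = Arc::new(tests);
    let results = Arc::new(Mutex::new(Vec::new()));

    let handles: Vec<_> = probes
        .iter()
        .map(|&probe| {
            let probe = probe.to_string();
            let targets = targets.to_vec();
            let tests = Arc::clone(&tests);
            let results = Arc::clone(&results);
            thread::spawn(move || {
                for target in targets {
                    for test in tests.iter() {
                        // A panic inside a testfunction is recorded as a failure
                        // instead of tearing down the whole test thread.
                        let passed = panic::catch_unwind(|| (test.run)(probe.as_str(), target)).is_ok();
                        results.lock().unwrap().push(TestResult {
                            probe: probe.clone(),
                            target: target.to_string(),
                            test: test.name,
                            passed,
                        });
                    }
                }
            })
        })
        .collect();

    for handle in handles {
        handle.join().unwrap();
    }
    // All threads have finished, so this is the last reference to the buffer.
    Arc::try_unwrap(results).unwrap().into_inner().unwrap()
}
```

Results from different threads interleave in the buffer, but within one testchannel they keep the user-defined order.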

Once all test threads have tested every available probe-target combination, the runner sends the buffered test results back to the monitor and terminates. The monitor forwards the received test results to the client over the websocket connection, which is then closed.

Before completing the test task, the monitor reinitializes the hardware and takes back control of the debug probes to be ready for the next test task.

The monitor also serves the Hive backend UI, a Vue webapp that lets authenticated users manage the testrack configuration. It currently provides the following sections:

- Dashboard (Target and probe assignment, testrack status)

- Testprograms (Creation, modification and activation as well as toolchain output status)

- Users (User management, creation, modification and deletion)

- Logs (View the last 100 log entries of the monitor and runner binaries)

The webapp communicates with the monitor through a GraphQL API, which is only accessible to authenticated users.