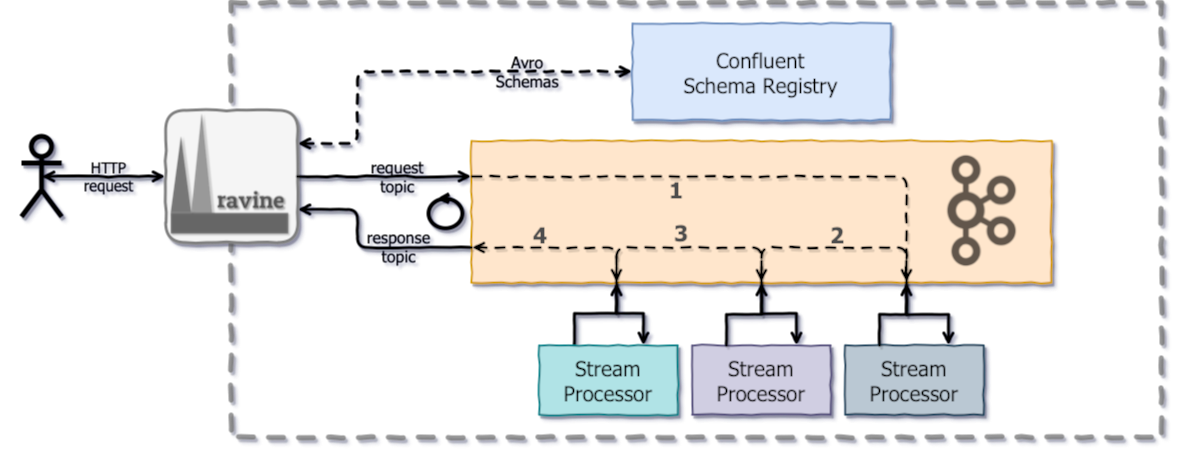

Ravine is a bridge between synchronous HTTP-based workloads and an event-driven application landscape. It holds each HTTP request open while it publishes a request message to Kafka and waits for a response. Other applications can therefore rely on this service to offload HTTP communication, while Ravine relies on Confluent Schema-Registry to maintain API contracts.

The project goal is to empower developers to focus on event-driven ecosystems, and to easily plug HTTP endpoints into existing Kafka-based landscapes.

Ravine is based on defining HTTP endpoints and mapping them to request and response topics. The payload received over HTTP is serialized with the configured Schema-Registry subject version and dispatched on the request topic. Ravine then waits, up to the configured timeout, for a message on the response topic that carries the same unique key. The value of that message is used as the response payload for the ongoing HTTP request.

Furthermore, to identify the response event, Ravine inspects the Kafka message headers to find the unique ID. Consumers that respond to Ravine should therefore preserve the original headers of the request event.
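
The request/response correlation described above can be illustrated with a minimal Python sketch. It is not Ravine's implementation (Ravine is a Spring Boot application). The class and method names are hypothetical, and Kafka is replaced by direct method calls so that the example stays self-contained.

```python
import threading
import uuid
from concurrent.futures import Future, TimeoutError


class PendingRequests:
    """Tracks in-flight HTTP requests by the unique ID sent with the request event."""

    def __init__(self):
        self._lock = threading.Lock()
        self._pending = {}

    def register(self):
        """Create a unique ID and a future that will hold the response payload."""
        request_id = str(uuid.uuid4())
        future = Future()
        with self._lock:
            self._pending[request_id] = future
        return request_id, future

    def resolve(self, request_id, payload):
        """Handle one response-topic message; IDs that are not pending are ignored."""
        with self._lock:
            future = self._pending.pop(request_id, None)
        if future is None:
            return False
        future.set_result(payload)
        return True

    def wait(self, request_id, future, timeout_s):
        """Block the HTTP handler until a response arrives or the timeout expires."""
        try:
            return future.result(timeout=timeout_s)
        except TimeoutError:
            with self._lock:
                self._pending.pop(request_id, None)
            return None


pending = PendingRequests()
request_id, future = pending.register()
# Stand-in for a consumer thread receiving the matching response event.
threading.Timer(0.05, pending.resolve, args=(request_id, {"status": "ok"})).start()
response = pending.wait(request_id, future, timeout_s=1.0)
```

A response that arrives after the timeout finds no pending entry and is dropped, which mirrors the idea of an HTTP request that has already been answered with an error.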

The following diagram represents the high-level relationship between Ravine and Kafka topics.

HTTP request parameters and headers are forwarded in the request event as Kafka headers. The header named `ravine-request-parameter-names` identifies the request parameters, and the header named `ravine-request-header-names` identifies the HTTP request headers. Each name listed in those two headers is itself a Kafka header entry that holds the actual value.
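
As a rough illustration, a consumer of the request topic could recover the forwarded values as sketched below. The sketch makes two assumptions that this document does not confirm: that the names are joined with commas, and that Kafka headers arrive as `(key, bytes)` pairs, as common Python Kafka clients expose them. The function name and sample values are hypothetical.

```python
def extract_forwarded(headers, names_header, separator=","):
    """Return {name: value} for every name listed in the `names_header` entry.

    Assumption: names are joined with `separator`; this is not confirmed here.
    """
    index = {key: value.decode("utf-8") for key, value in headers}
    names = index.get(names_header, "")
    return {
        name: index[name]
        for name in (part.strip() for part in names.split(separator))
        if name and name in index
    }


# Hypothetical headers of a request event.
request_headers = [
    ("ravine-request-parameter-names", b"id,lang"),
    ("id", b"42"),
    ("lang", b"en"),
    ("ravine-request-header-names", b"accept"),
    ("accept", b"application/json"),
]
parameters = extract_forwarded(request_headers, "ravine-request-parameter-names")
http_headers = extract_forwarded(request_headers, "ravine-request-header-names")
```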

Based on configuration, you can define endpoints that are tied to a given Avro schema in Schema-Registry. During startup, Ravine therefore fetches the subjects at the specified versions so that it can parse payloads and produce messages.

This application uses the Spring Boot Actuator plugin, so you can probe the application's functional status by querying the `/actuator/health` endpoint path.

```
$ curl --silent http://127.0.0.1:8080/actuator/health |jq
{
  "status": "UP",
  "details": {
    "kafka-consumer--kafka_response_topic": {
      "status": "UP"
    }
  }
}
```

Kafka consumers used by this application are also covered by the main health check endpoint, as the example shows.
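
A probe script could interpret that response along the following lines. This is a minimal sketch that only assumes the JSON shape shown in the example above. The function name is hypothetical and the payload is embedded rather than fetched, so the snippet runs without a live instance.

```python
import json


def unhealthy_components(health_json):
    """Return the names of components whose status is not "UP"."""
    document = json.loads(health_json)
    details = document.get("details", {})
    down = [name for name, entry in details.items() if entry.get("status") != "UP"]
    # Report the overall status when it is not UP but no component explains it.
    if document.get("status") != "UP" and not down:
        down.append("application")
    return down


sample = """
{
  "status": "UP",
  "details": {
    "kafka-consumer--kafka_response_topic": {"status": "UP"}
  }
}
"""
problems = unhealthy_components(sample)
```

An empty list means the application and all of its reported Kafka consumers are up.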

Configuration for Ravine is divided into the following sections: startup, cache, kafka and routes. Those sections are covered in the next topics. Please consider `application.yaml` as a concrete example.

Startup covers the application boot, controlling how long to wait before exposing the endpoints. This configuration section contains:

- `ravine.startup.timeoutMs`: timeout in milliseconds to wait for Kafka consumers to report ready (`RUNNING`);
- `ravine.startup.checkIntervalMs`: interval in milliseconds between consumer status checks;
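
The interplay of these two settings can be pictured as a simple polling loop. The sketch below is illustrative only and does not reflect Ravine's actual code. `wait_until_ready` and `is_ready` are hypothetical names, with `is_ready` standing in for "all Kafka consumers report `RUNNING`".

```python
import time


def wait_until_ready(is_ready, timeout_ms, check_interval_ms):
    """Poll `is_ready` every `check_interval_ms` until it is true or `timeout_ms` elapses."""
    deadline = time.monotonic() + timeout_ms / 1000.0
    while True:
        if is_ready():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(check_interval_ms / 1000.0)


# Stand-in for consumers that become ready on the third check.
states = iter([False, False, True])
started = wait_until_ready(lambda: next(states), timeout_ms=1000, check_interval_ms=1)
```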

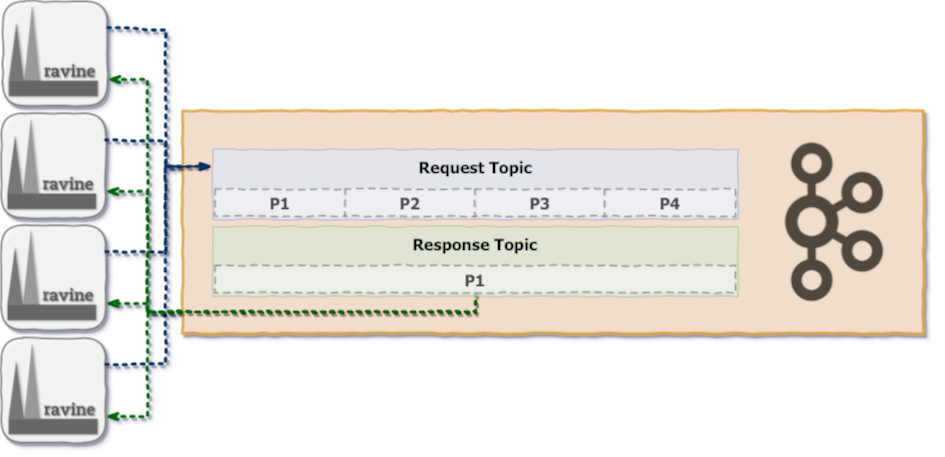

A common usage scenario is to fan out response topic messages to all Ravine instances, in which case you want tighter control of caching.

- `ravine.cache.maximumSize`: maximum number of entries in the cache;
- `ravine.cache.expireMs`: expiration time in milliseconds, counted from when the record was written to the cache;
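
To make the two cache settings concrete, here is a small sketch of a size-bounded cache with expire-after-write semantics. It is an illustration under assumptions, not the cache Ravine uses. In particular, evicting the oldest written entry when the cache is full is a choice made for this example, and the class name is hypothetical.

```python
import time
from collections import OrderedDict


class ExpiringCache:
    """Size-bounded cache whose entries expire a fixed time after being written."""

    def __init__(self, maximum_size, expire_ms, clock=time.monotonic):
        self._maximum_size = maximum_size
        self._expire_s = expire_ms / 1000.0
        self._clock = clock
        self._entries = OrderedDict()

    def put(self, key, value):
        # Re-inserting moves the key to the end, so order follows write time.
        self._entries.pop(key, None)
        self._entries[key] = (self._clock() + self._expire_s, value)
        while len(self._entries) > self._maximum_size:
            self._entries.popitem(last=False)  # evict the oldest write

    def get(self, key):
        entry = self._entries.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            del self._entries[key]
            return None
        return value
```

With a fan-out setup, every instance sees every response message, so a small `maximumSize` and a short `expireMs` keep responses meant for other instances from piling up.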

Kafka section covers the settings related to the Kafka ecosystem.

- `ravine.kafka.schemaRegistryUrl`: Confluent Schema-Registry URL;
- `ravine.kafka.brokers`: comma-separated list of Kafka bootstrap brokers;
- `ravine.kafka.properties`: key-value pairs of Kafka properties applied to all consumers and producers;

Routes are the core of Ravine's configuration. Here you specify which endpoint routes Ravine exposes and the expected data flow path. This section has the following sub-sections: endpoint, subject, request and response. Additionally, each route has a name, a simple identification tag used during tracing.

Endpoint defines the HTTP settings covering this route entry.

- `ravine.routes[n].endpoint.path`: route path;
- `ravine.routes[n].endpoint.methods`: HTTP methods accepted in this route, `get`, `post` or `put`;
- `ravine.routes[n].endpoint.response.httpCode`: HTTP status code, by default `200`;
- `ravine.routes[n].endpoint.response.contentType`: response content-type, by default `application/json`;
- `ravine.routes[n].endpoint.response.body`: payload to be displayed on a successful request;

When you define `ravine.routes[n].response`, the payload in `ravine.routes[n].endpoint.response.body` is not used, since the body of the HTTP response is filled with the response message coming from Kafka. The `ravine.routes[n].endpoint.response.httpCode` setting only takes effect on a successful request; error events are handled separately.

The Subject section refers to Schema-Registry. Here you define the subject name and version used to validate the HTTP request and to produce a message on the request Kafka topic.

- `ravine.routes[n].subject.name`: subject name;
- `ravine.routes[n].subject.version`: subject version, by default `1`;

Subjects are loaded during Ravine boot.

Request defines the Kafka settings used to produce a message on the topic that gathers requests for this specific route. Third-party stream consumers tap into this topic and react to its messages.

- `ravine.routes[n].request.topic`: Kafka topic name;
- `ravine.routes[n].request.valueSerde`: value serializer class, by default `io.confluent.kafka.streams.serdes.avro.GenericAvroSerde`;
- `ravine.routes[n].request.timeoutMs`: timeout in milliseconds to produce a message;
- `ravine.routes[n].request.acks`: Kafka producer `ack` approach, by default `all`;
- `ravine.routes[n].request.properties`: key-value pairs of properties passed to the producer;

Response defines the Kafka topic on which Ravine waits for a response message for ongoing requests.

- `ravine.routes[n].response.topic`: Kafka topic name;
- `ravine.routes[n].response.valueSerde`: value serializer class, by default `io.confluent.kafka.serdes.avro.GenericAvroSerde`;
- `ravine.routes[n].response.properties`: key-value pairs of properties passed to the consumer;
- `ravine.routes[n].response.timeoutMs`: timeout in milliseconds to wait for the response message;
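
To summarize how a route entry and the documented defaults fit together, the sketch below models one route as a nested Python dictionary and fills in the defaults listed above. It only illustrates the structure; the real configuration lives in the application's YAML file. The route name, path, subject, request topic name and timeout values are hypothetical placeholders.

```python
import copy

# Defaults taken from the option lists above.
ROUTE_DEFAULTS = {
    "endpoint": {"response": {"httpCode": 200, "contentType": "application/json"}},
    "subject": {"version": 1},
    "request": {"acks": "all"},
}


def merge(defaults, overrides):
    """Recursively overlay `overrides` on a copy of `defaults`."""
    result = copy.deepcopy(defaults)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = merge(result[key], value)
        else:
            result[key] = copy.deepcopy(value)
    return result


# Hypothetical route: every value below is a placeholder.
route = {
    "name": "get-person",
    "endpoint": {"path": "/v1/person", "methods": ["get"]},
    "subject": {"name": "person"},
    "request": {"topic": "kafka_request_topic", "timeoutMs": 1000},
    "response": {"topic": "kafka_response_topic", "timeoutMs": 5000},
}
effective = merge(ROUTE_DEFAULTS, route)
```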

This application is instrumented with Micrometer, which registers and accumulates application runtime metrics and exposes them via the `/actuator/prometheus` endpoint. Metrics on this endpoint are Prometheus compatible and can be scraped at regular intervals.

```
$ curl --silent 127.0.0.1:8080/actuator/prometheus |head -n 3
# HELP logback_events_total Number of error level events that made it to the logs
# TYPE logback_events_total counter
logback_events_total{level="warn",} 7.0
```
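
For ad-hoc inspection outside of Prometheus, the sample lines in that output can be read with a few lines of Python. This is a simplified sketch: it skips comment lines and assumes each sample ends with its value, so it does not handle the optional trailing timestamp that the exposition format allows. The function name is hypothetical.

```python
def parse_samples(text):
    """Return (series, value) pairs from Prometheus text output, skipping comments."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        # The value is the last whitespace-separated field on the line.
        series, _, value = line.rpartition(" ")
        samples.append((series, float(value)))
    return samples


output = """\
# HELP logback_events_total Number of error level events that made it to the logs
# TYPE logback_events_total counter
logback_events_total{level="warn",} 7.0
"""
samples = parse_samples(output)
```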

In order to trace requests throughout Ravine's runtime and Kafka, this project includes OpenTracing modules for Spring Boot and Kafka Streams, and therefore you can offload tracing spans to Jaeger.

To enable tracing, make sure you set:

```
opentracing.jaeger.enabled=true
```

More configuration can be supplied as properties; please consult the upstream plugin documentation.

To run tests locally, you need to spin up the necessary backend systems, namely Apache Kafka (which depends on Apache ZooKeeper) and Confluent Schema-Registry. They are wired up using Docker-Compose so that Ravine can reach the required backends, and localtest.me is used to simulate DNS for Kafka-Streams consumers.

The steps required on your laptop are the same as those run during CI, so please consider travis.yml as documentation.

The source code of this project is formatted using the Google Java Format style; please make sure your IDE is set up to format and clean up the code in that fashion.

To release new versions, execute the following:

```
gradlew release
```

The release plugin executes a number of steps to release this project: tagging and pushing the code, plus preparing a new version. Some steps are interactive.