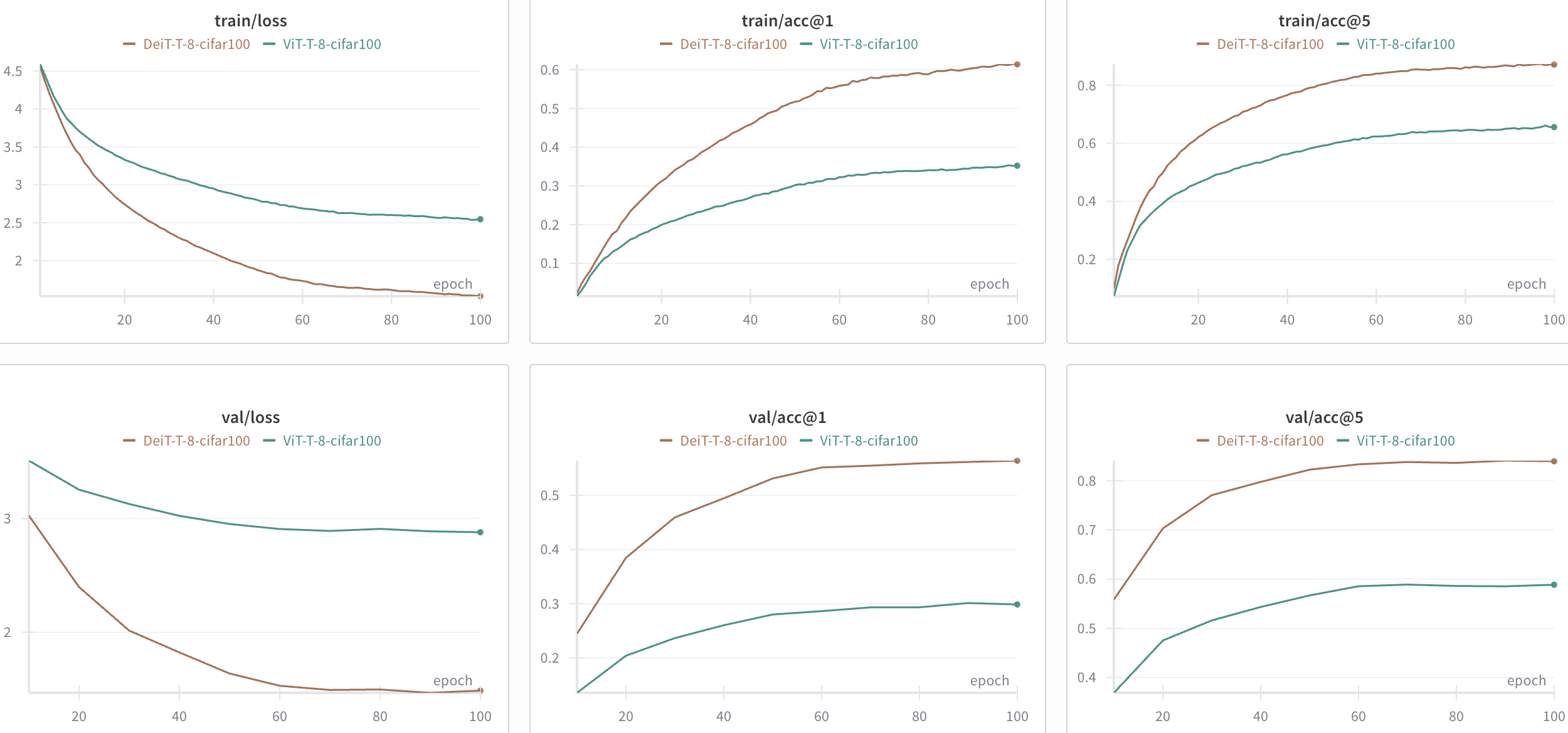

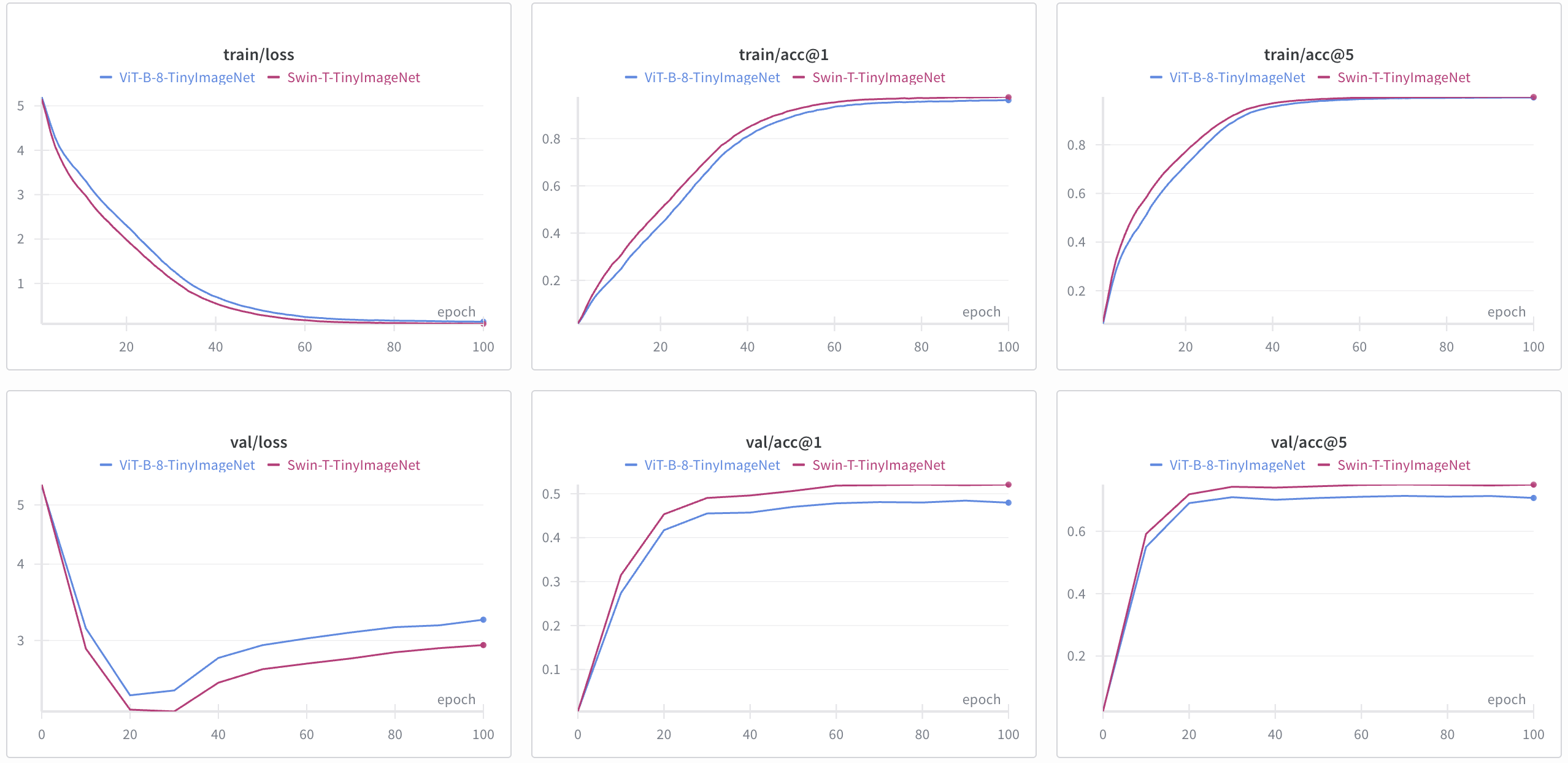

A minimal PyTorch implementation of Vision Transformers (ViT) and its variants, Data-efficient Image Transformers (DeiT) and Swin Transformers. Experiments cover CIFAR-100 (ViT-T/8 vs DeiT-T/8) and Tiny-ImageNet (ViT-T/8 vs Swin-T-TinyImageNet); other variants are supported as well.

Architectural correctness is tested via parameter counts and output parity, matched against torchvision implementations (with exceptions for Swin due to differing internal choices).
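To illustrate what a parameter-count check compares against, here is a minimal sketch that counts the learnable parameters of a standard ViT classifier analytically. It assumes the usual layout (convolutional patch projection, class token, learned position embeddings, fused QKV projection with bias, two-layer MLP, final LayerNorm, linear head). The function name `vit_param_count` is hypothetical and is not taken from this repo's `tests/`.

```python
def vit_param_count(image_size, patch_size, dim, depth, mlp_dim, num_classes, in_chans=3):
    """Count learnable parameters of a standard ViT classifier (assumed layout, see text)."""
    num_patches = (image_size // patch_size) ** 2
    # Patch projection: a conv with kernel = stride = patch_size (weight + bias).
    patch_embed = in_chans * patch_size * patch_size * dim + dim
    cls_token = dim
    pos_embed = (num_patches + 1) * dim  # +1 for the class token
    layer_norm = 2 * dim  # scale + shift
    # Fused QKV projection plus output projection, both with bias.
    # The number of heads does not change the count.
    attention = (3 * dim * dim + 3 * dim) + (dim * dim + dim)
    mlp = (dim * mlp_dim + mlp_dim) + (mlp_dim * dim + dim)
    block = 2 * layer_norm + attention + mlp
    head = dim * num_classes + num_classes
    return patch_embed + cls_token + pos_embed + depth * block + layer_norm + head


# ViT-B/16 at 224x224 with 1000 classes.
print(vit_param_count(224, 16, 768, 12, 3072, 1000))  # 86567656
```

The ViT-B/16 result equals the parameter count torchvision reports for `vit_b_16`. A real test would more likely sum `p.numel()` over both models' parameters than rely on a closed-form count.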

Configuration is managed using Hydra, with optional experiment tracking via Weights & Biases (wandb).

- Install uv and run:
  - `uv sync`
  - `uv run train.py +run=vit-cifar100`
  - `uv run train.py +run=deit-cifar100` (uses a frozen resnet18_cifar100, loaded via timm, as the teacher for hard distillation, which the DeiT paper shows to work well)
  - `uv run train.py +run=vit-tiny-imagenet`
  - `uv run train.py +run=swin-tiny-imagenet`
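The hard distillation used for the DeiT run can be sketched as follows. In DeiT, the class-token head is trained on the ground-truth label and the distillation-token head on the teacher's argmax prediction, with equal weight. This sketch is plain Python so the arithmetic is visible; the repo itself presumably does this with PyTorch tensors, and the function names here are hypothetical.

```python
import math


def cross_entropy(logits, target):
    """Cross-entropy for one sample: log-sum-exp of the logits minus the target logit."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def hard_distillation_loss(cls_logits, dist_logits, teacher_logits, labels):
    """Batch mean of 0.5 * CE(class head, label) + 0.5 * CE(distill head, teacher argmax)."""
    total = 0.0
    for cls_row, dist_row, teacher_row, label in zip(
        cls_logits, dist_logits, teacher_logits, labels
    ):
        # Hard label from the frozen teacher: index of its largest logit.
        teacher_label = max(range(len(teacher_row)), key=teacher_row.__getitem__)
        total += 0.5 * cross_entropy(cls_row, label)
        total += 0.5 * cross_entropy(dist_row, teacher_label)
    return total / len(labels)


# One sample, three classes: both student heads are uniform, so the loss is log(3).
loss = hard_distillation_loss([[0.0, 0.0, 0.0]], [[0.0, 0.0, 0.0]], [[1.0, 5.0, 2.0]], [0])
print(abs(loss - math.log(3)) < 1e-9)  # True
```

Because the teacher is frozen, its logits would be computed without gradient tracking and only the student's two heads receive gradients.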

    ├── config/
    │   ├── dataset/        # Dataset configs
    │   ├── model/          # Model configs (ViT / DeiT / Swin)
    │   ├── run/            # Experiment presets
    │   └── default.yaml    # Global defaults
    ├── model/              # Model implementations
    ├── data.py             # Dataset & dataloaders
    ├── train.py            # Training entry point
    ├── utils.py            # Training utilities
    └── tests/              # Architecture & parity tests

- Explicit configs over implicit defaults

- Modular overrides:
  - dataset
  - model
  - optimizer
  - lr_scheduler

- Experiment outputs are auto-versioned and logged.

Example override:

    python train.py model=ViT-B-16 dataset=cifar100