-

Notifications

You must be signed in to change notification settings - Fork 3.7k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

[Proposal] The problem with visual comparison #24312

Comments

|

@KuznetsovRoman hi ! is there any chance to try your comparator now with PW ? for example in fork or some other way ? sounds very interesting... |

This patch brings in antialiasing tests from `looks-same` project for our experimental `ssim-cie94` comparator. One of the new tests found a bug in our implementation. References microsoft#24312

You could checkout my branch (https://github.com/gemini-testing/looks-same/tree/HERMIONE-1049) and use it like: const looksSame = require('looks-same');

// createDiffImage: true is mandatory

const result = await looksSame(image1, image2, {createDiffImage: true});

I dont have any fork, but you could monkey-patch your playwright. |

|

Hey @KuznetsovRoman, Thank you for bringing looks-same to our attention! I didn't see this project when we were researching image comparison awhile ago. I looked into the project and played a bit with it. Here are my high-level thoughts/comments:

Now, we have an experimental await expect(page).toHaveScreenshot({

_comparator: 'ssim-cie94',

});This comparator uses Now, I'd like to compare First, I pulled anti-aliasing tests from Secondly, I hooked

Running Finally, could you please try using |

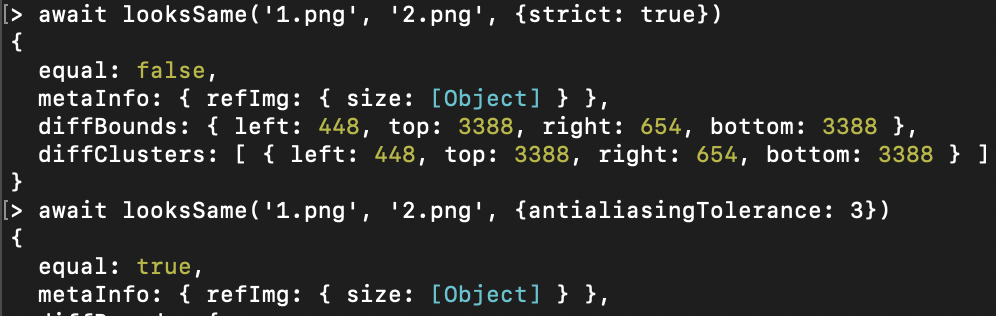

I need specific examples. Like in I doubt it is antialiasing problem and |

@KuznetsovRoman sure

This fails 5 tests for me.

This is an actual rendering artifact we were getting in one of our webkit screenshot tests. Whether this kind of artifact should or should not be ignored is questionable, and ultimately is a client call. My concern is the diff that is clearly wrong. |

The problem with your usage is the following: const diff = await looksSame.createDiff({

reference: expected,

current: actual,

extension: 'png',

highlightColor: '#ff0000', // color to highlight the differences

...looksSameOptions,

});

// diff is now PNG buffer (with png signature and other chunks, not a raw image buffer)

// so you don't need to call "diffPNG.data = diff", "PNG.sync.write(diffPNG)"

// instead, just use "body: diff" in "testInfo.attach" methodThis would work: const diff = await looksSame.createDiff({

reference: expected,

current: actual,

extension: 'png',

highlightColor: '#ff0000', // color to highlight the differences

...looksSameOptions,

});

await testInfo.attach(fixtureName + '-diff.png', {

body: diff,

contentType: 'image/png',

});

Also, on my branch https://github.com/gemini-testing/looks-same/tree/HERMIONE-1049 you should use const result = await looksSame(image1, image2, {createDiffImage: true});

// {

// equal: false,

// diffImage: OriginalImage,

// differentPixels: 2,

// totalPixels: 10404,

// diffBounds: { left: 1, top: 0, right: 1, bottom: 1 },

// diffClusters: [ { left: 1, top: 0, right: 1, bottom: 1 } ]

// }

const {diffImage} = result;

await diffImage.save('my-diff.png'); |

Could you give an |

CIE94 still has a perceptual uniformity issue, so CIEDE2000 is better. Example:

On my branch i did some modifications to improve image comparison speed: https://github.com/gemini-testing/looks-same/blob/HERMIONE-1049/index.js#L23. With those improvements, comparison speed is similar So, in most cases we don't really need to calc CIEDE2000 to make sure white and red are different colors. The trick is to calc fast CIE76 firstly, and in most cases that is enough (based on the results of my calculations, |

|

@aslushnikov are there any updates? |

@KuznetsovRoman I haven't had a chance to continue experiments yet; will come back to this in a bit.

Meanwhile, any chance you can give this a try? |

I want to be sure "expected" could render as "actual" |

|

@KuznetsovRoman unfortunately, I don't recall exactly where it comes from. IIRC this is one of the Playwright tests from back in the days that was rendering differently on x86 vs arm64 Ubuntu. However, behavior of the comparator is not that important in this particular test case - algorithms can be tuned to yield either outcome. I'm interested in getting your feedback on using our experimental |

Previously, I did not include the time to save the image, but that is wrong, because

|

|

@KuznetsovRoman awesome, thank you for the numbers! Could you please share the code so that I can reproduce these findings locally? |

Sure: https://github.com/KuznetsovRoman/img-comparison-bench nvm use

npm i

node index.js |

…or (microsoft#24348) This patch brings in antialiasing tests from `looks-same` project for our experimental `ssim-cie94` comparator. One of the new tests found a bug in our implementation. References microsoft#24312

|

@alushnikov It seems you're quite motivated in improving ssim-cie94. Have you had a chance to continue experiments with looks-same? I'm not advocating for it, but given the expertise its maintainers have I believe it's worth giving it a chance. Ultimately, what users like myself desire is for Playwright to use the best tool for the job. @KuznetsovRoman It'd be nice to hear about the look-same algorithm's corner cases and drawbacks too. It's hard to believe that the algorithm is that good without any drawbacks given the complexity on the matter. Also would be nice to see the research that would confirm its superiority, otherwise it's easy to be sceptical. On the topic, I've found this talk where the speaker tells how they've omitted using complex color-matching algorithms altogether and got an improvement of about 5 times the speed. Perhaps naive, but if their solution is stable I believe the tradeoff would be justified. The speaker, I believe, works at Microsoft now. The talk is quite old, I wonder if it is still relevant https://youtu.be/ULwdj_Vr_WA?feature=shared&t=1942 cc @mourner |

Problem

Pixelmatch (which is being used to compare snapshots) has an issue (as i described in mapbox/pixelmatch#127)

And that is not the only one. Pixelmatch also has some sort of antialiasing detection, but its not configurable and not perfect either:

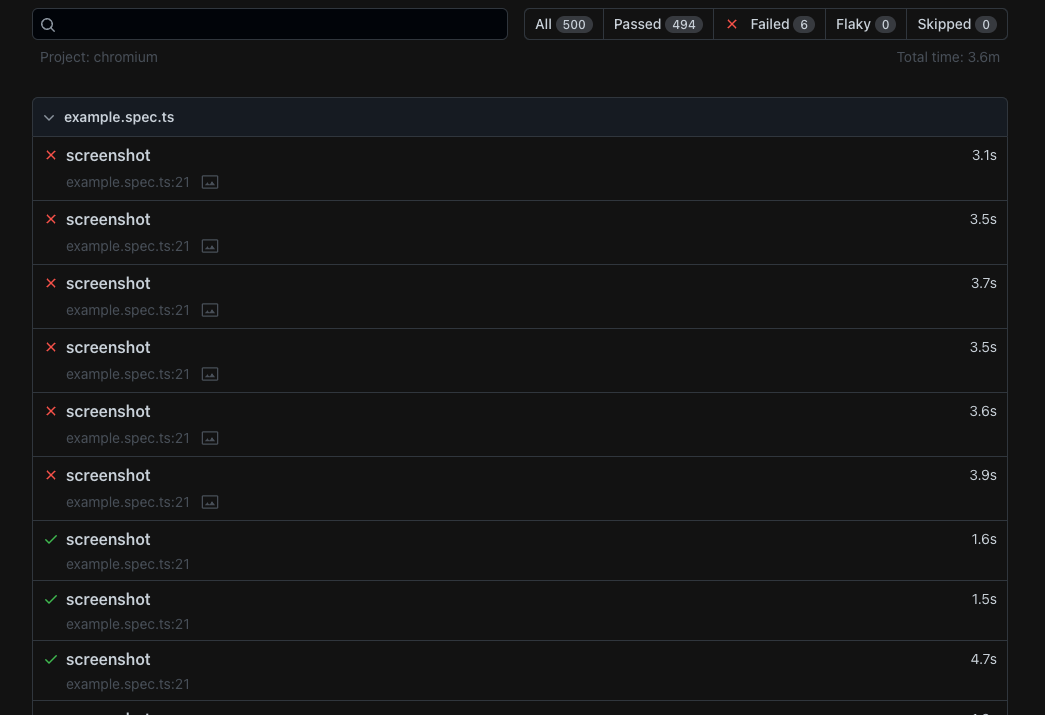

I launched playwright test 500 times and 6 of those failed due to browser rendering instability, thats 1.2%, which is a lot.

Test code src: https://pastebin.com/eWzMpKBW

Solution

I can solve both of these problems by integrating our package

looks-sameinstead ofpixelmatchinto Playwright.Tools comparison

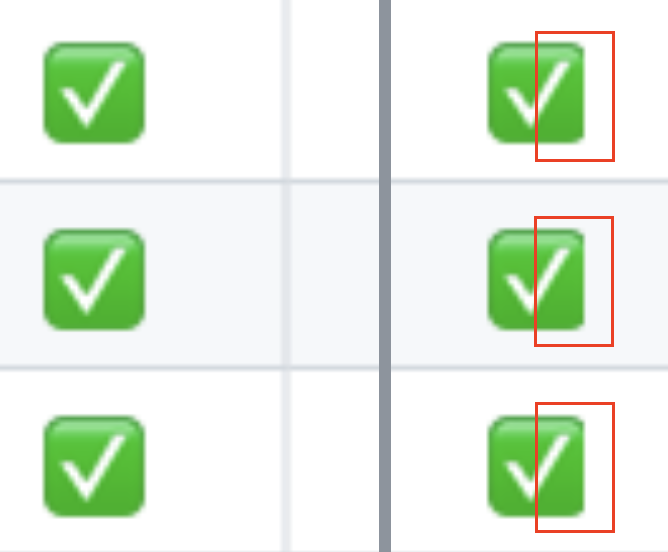

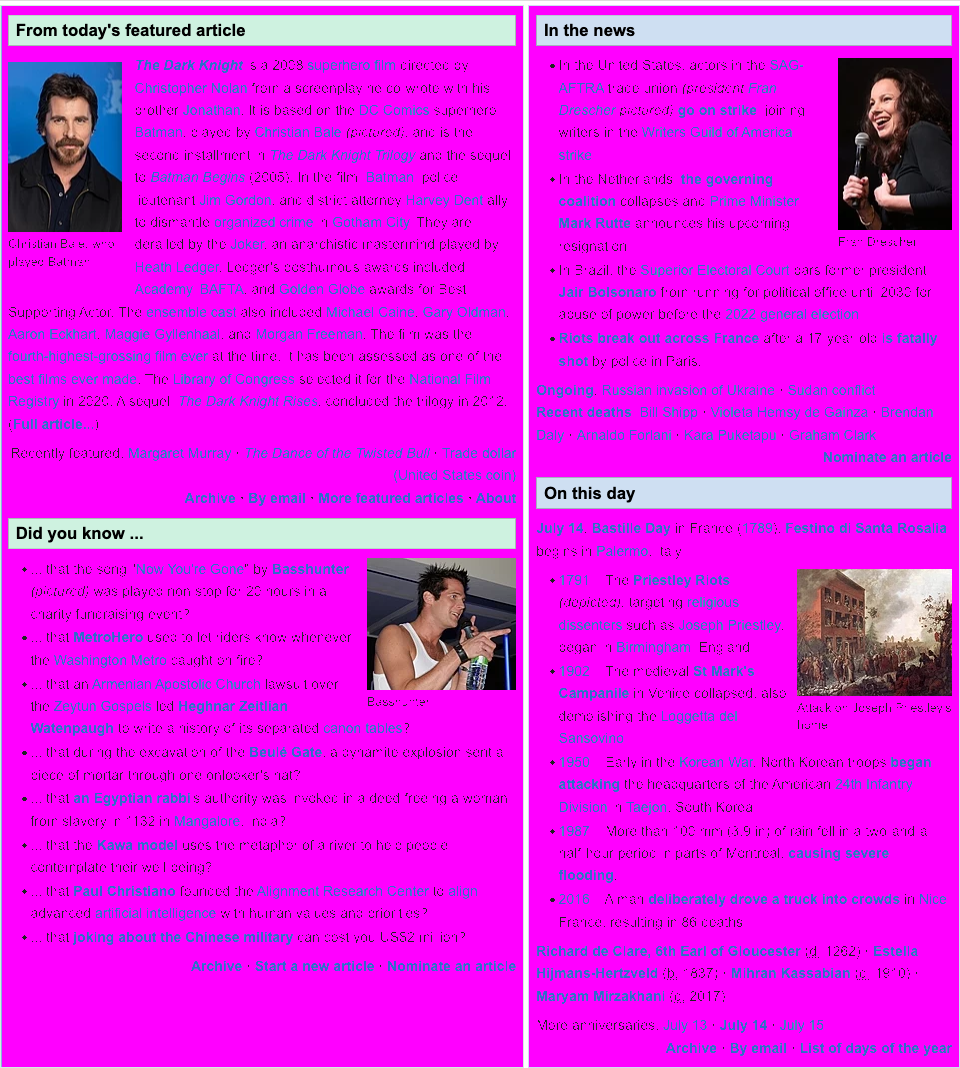

looks-samehas configurable CIEDE2000 tolerance (2.3 by default) instead ofpixelmatchthreshold, which takes into account human color perception.These colors from example1 (linked in issue above) would be considered as different if

tolerance < 33.5, so diff image (marked as pink) would be:At the same time,

looks-samewouldn't mark the difference from example2 (linked in issue above) withtolerance > 1.86looks-samehas configurableantialiasingTolerance, which would help with those 6/500 fails.It can detect the diff from above, but would ignore it with propper

antialiasingTolerance.Benchmarking

Case:

Compare two images (896x5069) and save diff image to file system.

Environment:

Apple M1 Pro, 32GB

Currently,

looks-sameis not that fast, but i managed to improve its performance, and thats what i got on my branch:equal buffers

indistinguishable difference (23166 / 4541824 pixels have #fefefe color instead of #ffffff)

distinguishable difference (5 red pixels on white background)

big difference (500x500 red square in the center)

huge difference (two 500x500 red squares in the center)

gigantic difference (four 500x500 red squares in the center)

Conclusion

looks-samepixelmatchignoreCaret,tolerance,antialiasingTolerancepixelmatchon average, unless the diff if really huge: (130ms constant time(896x5069) + 130 ms per each 250k different pixels)I can complete the job and make a Pull Request to use

looks-samein Playwright image comparison, but firstly i would like to clarify: are you interested in this?P.S. If you need benchmark code examples, i could provide them in PR after polishing the

looks-samecodeThe text was updated successfully, but these errors were encountered: