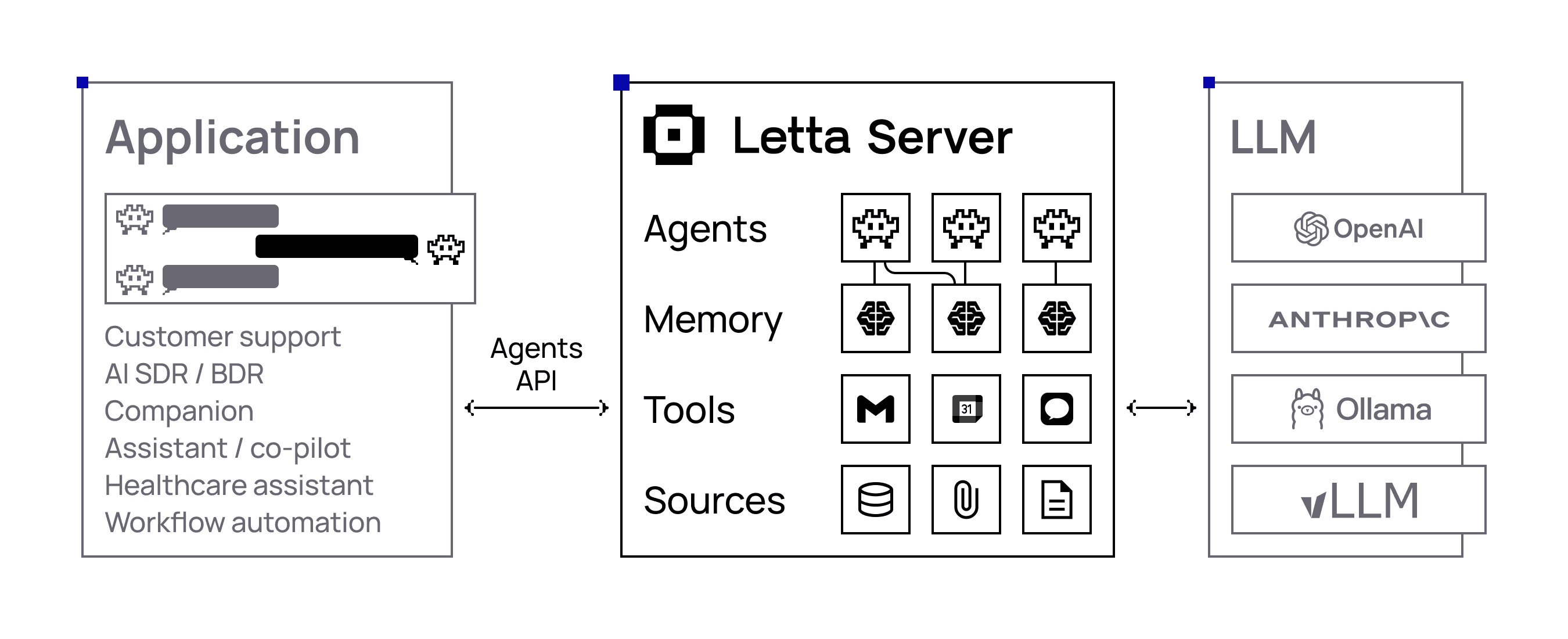

This is the Vercel AI SDK provider for Letta, the platform for building stateful AI agents with long-term memory. It lets you use Letta agents within the Vercel AI SDK ecosystem.

Letta is an open-source platform for building stateful agents with advanced memory and infinite context, using any model. It provides built-in persistence and memory management, with full support for custom tools and MCP (Model Context Protocol). Letta agents remember context across sessions, learn from interactions, maintain consistent personalities over time, and continue to improve their memories even while they sleep.

Check out our comprehensive Next.js example, which demonstrates:

- Real-time streaming conversations
- Agent memory persistence
- Message history management
- Error handling

Located at: Letta Chatbot Template

```bash
npm install @letta-ai/vercel-ai-sdk-provider
```

Create a `.env` file and add your API key:

```
LETTA_API_KEY=your_letta_cloud_apikey
LETTA_BASE_URL=https://api.letta.com
```

Then generate text with a Letta Cloud agent:

```typescript
import { lettaCloud } from '@letta-ai/vercel-ai-sdk-provider';
import { generateText } from 'ai';

const { text } = await generateText({
  model: lettaCloud('your-agent-id'),
  prompt: 'Write a vegetarian lasagna recipe for 4 people.',
});

console.log(text);
```

For local development with Letta running on `http://localhost:8283`:

```
LETTA_BASE_URL=http://localhost:8283
```

Then create the model with `lettaLocal`:

```typescript
import { lettaLocal } from '@letta-ai/vercel-ai-sdk-provider';
import { generateText } from 'ai';

const { text } = await generateText({
  model: lettaLocal('your-agent-id'),
  prompt: 'What did we discuss yesterday about the project?',
});

console.log(text);
```

For custom endpoints or configurations:

```typescript
import { createLetta } from '@letta-ai/vercel-ai-sdk-provider';
import { generateText } from 'ai';

const letta = createLetta({
  baseUrl: 'https://your-custom-letta-endpoint.com',
  token: 'your-access-token',
});

const { text } = await generateText({
  model: letta('your-agent-id'),
  prompt: 'Continue our conversation from last week.',
});
```

Streaming responses with `streamText`:

```typescript
import { lettaCloud } from '@letta-ai/vercel-ai-sdk-provider';
import { streamText } from 'ai';

const { textStream } = await streamText({
  model: lettaCloud('your-agent-id'),
  messages: [
    { role: 'user', content: 'Tell me a story about a robot learning to paint.' },
  ],
});

// Print each chunk of text as it arrives
for await (const textPart of textStream) {
  console.log(textPart);
}
```

Creating an agent from a template with the underlying Letta client, then using it with the AI SDK:

```typescript
import { lettaCloud } from '@letta-ai/vercel-ai-sdk-provider';
import { generateText } from 'ai';

// Create a new agent using the Letta client
const agent = await lettaCloud.client.templates.agents.create(
  'your-project-id',
  'your-template-name',
);

const agentId = agent.agents[0].id;
console.log('Created agent:', agentId);

// Now use the agent with the AI SDK
const { text } = await generateText({
  model: lettaCloud(agentId),
  prompt: "Hello! I'm excited to work with you.",
});

console.log(text);
```

Using `useChat` in a Next.js client component:

```tsx
'use client';

import { useChat } from 'ai/react';

export default function ChatComponent({ agentId }: { agentId: string }) {
  const { messages, input, handleInputChange, handleSubmit } = useChat({
    api: '/api/chat',
    // Send the agent ID with every request so the route handler knows which agent to use
    body: { agentId },
    initialMessages: [],
  });

  return (
    <div>
      <div>
        {messages.map(message => (
          <div key={message.id}>
            <strong>{message.role}:</strong> {message.content}
          </div>
        ))}
      </div>
      <form onSubmit={handleSubmit}>
        <input
          value={input}
          onChange={handleInputChange}
          placeholder="Say something..."
        />
        <button type="submit">Send</button>
      </form>
    </div>
  );
}
```

The client component only sends `agentId` in the request body. The provider is imported in the server route alone, so your API key never reaches the browser.

```typescript
// app/api/chat/route.ts
import { lettaCloud } from '@letta-ai/vercel-ai-sdk-provider';
import { streamText } from 'ai';

export async function POST(req: Request) {
  // `agentId` arrives via the `body` option passed to useChat
  const { messages, agentId } = await req.json();

  const result = await streamText({
    model: lettaCloud(agentId),
    messages,
  });

  return result.toDataStreamResponse();
}
```

Environment variables:

| Variable | Description | Default |
|---|---|---|
| `LETTA_API_KEY` | Your Letta API key (required for Letta Cloud) | - |
| `LETTA_BASE_URL` | The base URL for the Letta API | `https://api.letta.com` (Cloud) or `http://localhost:8283` (local) |
| `LETTA_DEFAULT_PROJECT_SLUG` | Default project slug for agent creation | `default-project` |
| `LETTA_DEFAULT_TEMPLATE_NAME` | Default template name for agent creation | - |
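Application code can make the defaults in the table explicit. The sketch below shows one way to do that with a hypothetical helper, `resolveLettaEnv`, which is not an export of `@letta-ai/vercel-ai-sdk-provider`. It reads the four variables and applies the documented defaults. Requiring the API key only for the Cloud target is an assumption drawn from the table's "required for Letta Cloud" note.

```typescript
// Hypothetical helper, NOT part of @letta-ai/vercel-ai-sdk-provider:
// resolves the Letta environment variables and applies the defaults from the table.
type LettaTarget = 'cloud' | 'local';

interface LettaEnvConfig {
  apiKey?: string;
  baseUrl: string;
  projectSlug: string;
  templateName?: string;
}

// Default base URLs for Letta Cloud and a local Letta server
const DEFAULT_BASE_URLS: Record<LettaTarget, string> = {
  cloud: 'https://api.letta.com',
  local: 'http://localhost:8283',
};

function resolveLettaEnv(
  env: Record<string, string | undefined>,
  target: LettaTarget,
): LettaEnvConfig {
  // Treat empty strings the same as unset variables
  const apiKey = env.LETTA_API_KEY || undefined;

  // Assumption: only Letta Cloud requires an API key
  if (target === 'cloud' && !apiKey) {
    throw new Error('LETTA_API_KEY is required for Letta Cloud');
  }

  return {
    apiKey,
    baseUrl: env.LETTA_BASE_URL || DEFAULT_BASE_URLS[target],
    projectSlug: env.LETTA_DEFAULT_PROJECT_SLUG || 'default-project',
    templateName: env.LETTA_DEFAULT_TEMPLATE_NAME || undefined,
  };
}
```

In a Node.js server you would pass `process.env`, for example `resolveLettaEnv(process.env, 'cloud')`. Taking the environment as a parameter also keeps the helper easy to test.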

Client options (`LettaClient.Options`):

```typescript
declare namespace LettaClient {
  interface Options {
    baseUrl?: string;   // Specify a custom URL to connect the client to
    token?: string;     // API token/key
    project?: string;   // Your project slug
    fetcher?: Function; // Custom fetch function
  }
}
```

Providers:

- `lettaCloud(agentId: string)`: pre-configured provider for Letta Cloud
- `lettaLocal(agentId: string)`: pre-configured provider for a local Letta instance
- `createLetta(options)`: create a custom provider instance

Utilities:

- `convertToAiSdkMessage(messages, options)`: convert Letta messages to AI SDK format
- `loadDefaultProject`: load the default project from the environment
- `loadDefaultTemplate`: load the default template from the environment
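`lettaCloud(agentId)` takes the agent ID as a plain string. A server route that reads that ID from a client request should therefore check it before creating the model. The following minimal sketch uses a hypothetical `parseChatRequest` helper, not part of the provider package. It assumes a request body of the form `{ agentId, messages }`, as in the Next.js route example, and checks only the basic shape.

```typescript
// Hypothetical helper, NOT part of @letta-ai/vercel-ai-sdk-provider:
// validates a chat request body before its agentId is passed to lettaCloud(agentId).
interface ChatRequest {
  agentId: string;
  messages: unknown[];
}

function parseChatRequest(body: unknown): ChatRequest {
  // The body must be a JSON object (typeof null is also 'object', so exclude it)
  if (typeof body !== 'object' || body === null) {
    throw new Error('Request body must be a JSON object');
  }

  const { agentId, messages } = body as Record<string, unknown>;

  // The provider functions take the agent ID as a string; reject empty values
  if (typeof agentId !== 'string' || agentId.trim() === '') {
    throw new Error('agentId must be a non-empty string');
  }

  // Only checks that messages is an array, not the shape of each message
  if (!Array.isArray(messages)) {
    throw new Error('messages must be an array');
  }

  return { agentId: agentId.trim(), messages };
}
```

In a route handler you might call `parseChatRequest(await req.json())` inside a `try` block and return a 400 response when it throws. Only then would you pass the validated `agentId` to `lettaCloud(agentId)`.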

Agent not found error:

```typescript
import { lettaCloud } from '@letta-ai/vercel-ai-sdk-provider';

// Ensure your agent ID is correct by listing the agents you can access
const agents = await lettaCloud.client.agents.list();
console.log('Available agents:', agents.map(a => a.id));
```

Authentication errors:

- Verify that `LETTA_API_KEY` is set correctly.
- Check that your API key has the necessary permissions.
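The two checks above can be combined into a single local preflight step. The following minimal sketch uses a hypothetical `diagnoseLettaSetup` helper, not part of the provider package. It assumes you have already collected the available agent IDs, for example from `lettaCloud.client.agents.list()`. It inspects values locally only, so it cannot confirm that a key is valid or has the right permissions.

```typescript
// Hypothetical troubleshooting helper, NOT part of @letta-ai/vercel-ai-sdk-provider.
// Returns a list of human-readable problems; an empty list means no local issues were found.
function diagnoseLettaSetup(
  apiKey: string | undefined,
  agentId: string,
  availableAgentIds: string[],
): string[] {
  const problems: string[] = [];

  if (!apiKey) {
    problems.push('LETTA_API_KEY is not set');
  } else if (apiKey !== apiKey.trim()) {
    // Surrounding whitespace makes the value differ from the issued key
    problems.push('LETTA_API_KEY has leading or trailing whitespace');
  }

  // The agent ID must match one of the IDs returned by the agent listing
  if (!availableAgentIds.includes(agentId)) {
    problems.push(
      `Agent "${agentId}" was not found among ${availableAgentIds.length} available agents`,
    );
  }

  return problems;
}
```

For example: `diagnoseLettaSetup(process.env.LETTA_API_KEY, 'your-agent-id', agents.map(a => a.id))`. Collecting all problems instead of throwing on the first one lets you report every issue at once.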

MIT License - see LICENSE for details.