This is the code for 3D reconstruction in the paper Neural 3D Mesh Renderer (CVPR 2018) by Hiroharu Kato, Yoshitaka Ushiku, and Tatsuya Harada.

Please install neural_renderer first.

    # install neural_renderer
    git clone https://github.com/hiroharu-kato/neural_renderer.git
    cd neural_renderer
    python setup.py install --user
    # or, sudo python setup.py install

First, you need to download the pre-trained models.

    bash download_models.sh

You can reconstruct a 3D model (`*.obj`) and multi-view images with the following commands.

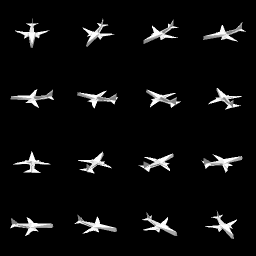

    python mesh_reconstruction/reconstruct.py -d ./data/models -eid singleclass_02691156 -i ./data/examples/airplane_in.png -oi ./data/examples/airplane_out.png -oo ./data/examples/airplane_out.obj

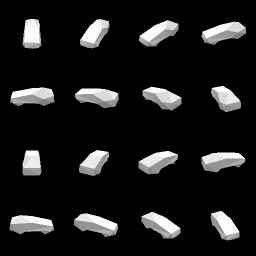

    python mesh_reconstruction/reconstruct.py -d ./data/models -eid singleclass_02958343 -i ./data/examples/car_in.png -oi ./data/examples/car_out.png -oo ./data/examples/car_out.obj

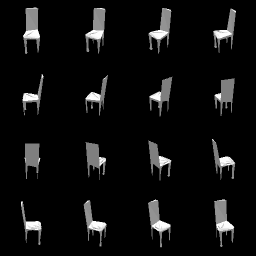

    python mesh_reconstruction/reconstruct.py -d ./data/models -eid singleclass_03001627 -i ./data/examples/chair_in.png -oi ./data/examples/chair_out.png -oo ./data/examples/chair_out.obj

You can evaluate the voxel IoU of a model on the test set with the following command.

    python mesh_reconstruction/test.py -d ./data/models -eid multiclass

The mean IoU of the pre-trained model is 0.5988, which differs slightly from the value reported in the paper (0.6031). This is mainly due to the random initialization of the networks.
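For readers unfamiliar with the metric, voxel IoU is the number of voxels occupied in both the predicted and the ground-truth grid divided by the number occupied in either. The following is a minimal pure-Python sketch of that definition, not the code in `mesh_reconstruction/test.py`. The function names `voxel_iou` and `mean_iou`, the 0.5 occupancy threshold, the flat-list grid representation, and the choice to average per sample are all assumptions made for illustration; the repository may binarize and average differently (for example, per class).

```python
def voxel_iou(pred, target, threshold=0.5):
    """Intersection over union of two voxel grids.

    Both grids are flat sequences of occupancy values of equal length.
    A voxel counts as occupied when its value exceeds `threshold`
    (an assumed convention, not taken from the repository).
    """
    if len(pred) != len(target):
        raise ValueError("voxel grids must have the same number of voxels")
    intersection = 0
    union = 0
    for p, t in zip(pred, target):
        p_occupied = p > threshold
        t_occupied = t > threshold
        if p_occupied and t_occupied:
            intersection += 1
        if p_occupied or t_occupied:
            union += 1
    # Assumed convention: two empty grids match perfectly.
    return intersection / union if union else 1.0


def mean_iou(pairs):
    """Average the IoU over an iterable of (pred, target) grid pairs."""
    scores = [voxel_iou(pred, target) for pred, target in pairs]
    if not scores:
        raise ValueError("no samples given")
    return sum(scores) / len(scores)


if __name__ == "__main__":
    samples = [
        ([1, 1, 0, 0], [1, 1, 0, 0]),  # identical grids: IoU 1.0
        ([1, 0, 0, 0], [1, 1, 0, 0]),  # one of two voxels matched: IoU 0.5
    ]
    print(mean_iou(samples))  # prints 0.75
```

A real voxel grid is three-dimensional; flattening it to one sequence does not change the result because IoU only counts voxels.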

First, you need to download the datasets rendered from the ShapeNet dataset.

    bash download_dataset.sh

This dataset was created by render.py using Blender.

You can train models with the following command.

    bash train.sh

This produces almost the same models as the pre-trained ones. Training takes about three days on a Tesla P100 GPU.

    @inproceedings{kato2018renderer,
        title={Neural 3D Mesh Renderer},
        author={Kato, Hiroharu and Ushiku, Yoshitaka and Harada, Tatsuya},
        booktitle={The IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
        year={2018}
    }