In this project we aim to caption an image using a combination of autoencoders and SVM. For this purpose we use a subset of the captioned MS-COCO dataset for training. This is achieved in three board stages:

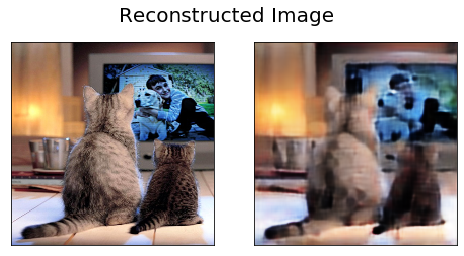

In this stage we use a Convolutional Autoencoder to compress the images into a smaller feature space. The autoencoder minimizes the original image (200px x 200px RGB) into a smaller feature space. It also minimizes the loss by reconstucting an image from the smaller feature space and applying gradient descent to readjust weights.

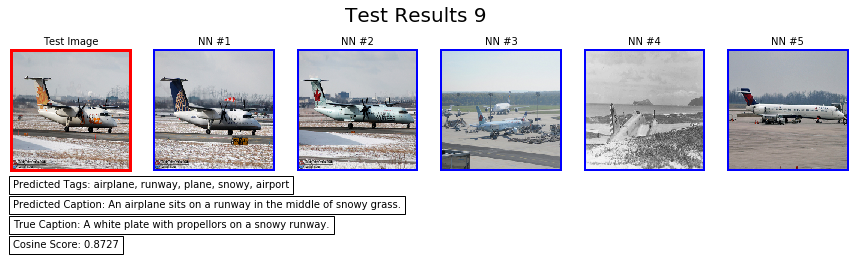

Once the autoencoder has been trained, the new features are extracted for the training and test set. An SVM model is then trained with the training data features and label predicions are made to determine the category of the test images.

Lastly, the nearest neighbors algorithms is used to find the K=5 nearest images within the predicted class. The most appropriate caption is determined from the captions of the 5 nearest neighbors identified within the class. Its done using semantic similarity analysis among the captions.

- AutoCaption.ipynb can we previewed but it will not contain all the visualizations (specifically the altair plots).

- Use AutoCaption_Rendered.html to preview all the visualizations.

-

Download the codebase.

-

Download the Fast Text 300D embeddings, unzip it and place the wiki-news-300d-1M.vec file in the 'models' directory. Link: https://dl.fbaipublicfiles.com/fasttext/vectors-english/wiki-news-300d-1M.vec.zip

-

Install all the python dependencies requirements using the requirements.txt

-

Open the AutoCaption.ipynb notebook using Jupyter.

-

Make sure that the 'download_coco' flag is set to True in the notebook and then execute it.