

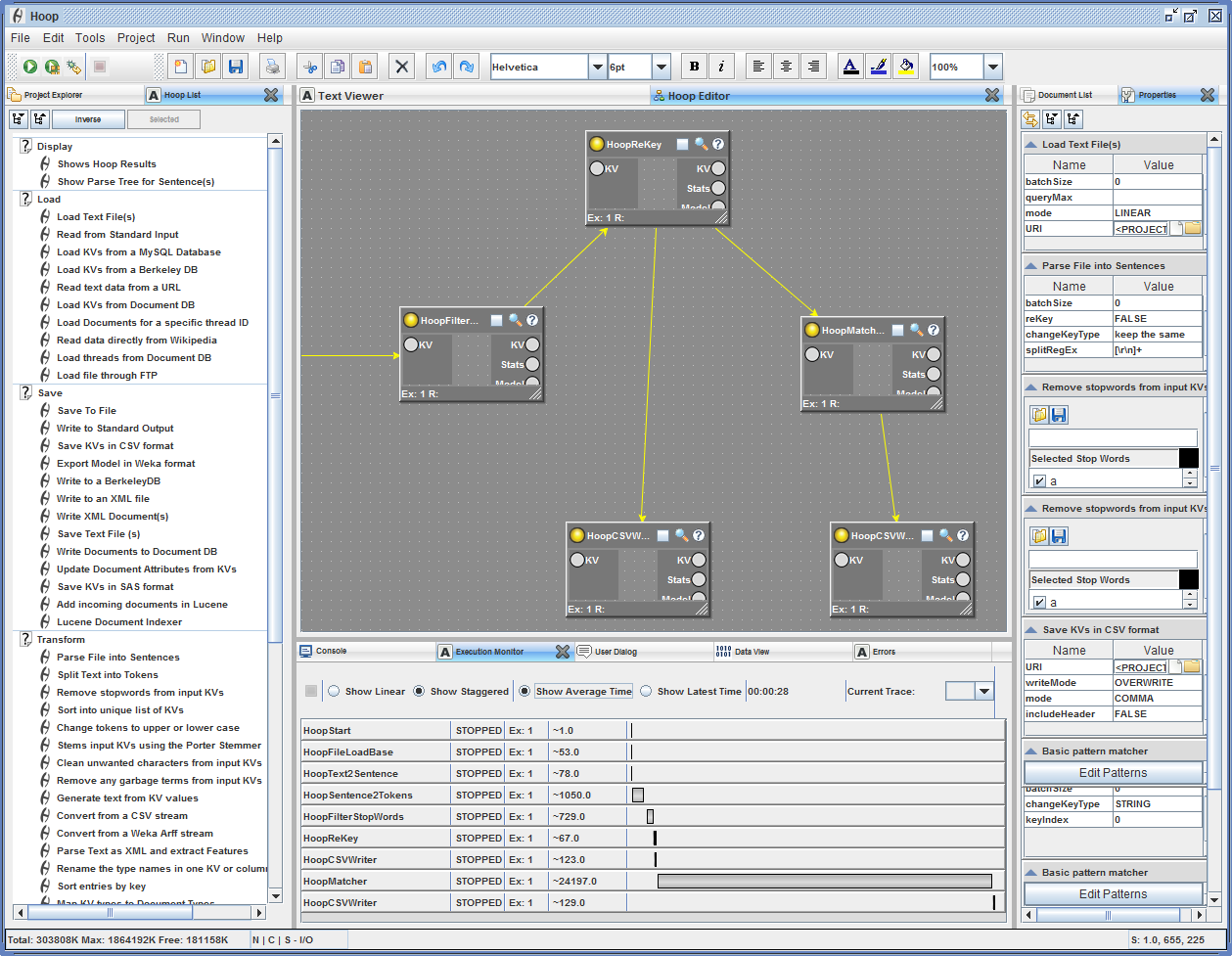

Hoop is a text mining and language exploration workbench written in Java. It is a sandbox for general-purpose text and language machine learning experiments that can be adapted to a wide variety of tasks. Hoop is composed of a collection of modules (hoops), each of which takes linguistic input from another hoop, processes it, and passes it on to the next. Combining hoops lets you build complex analysis systems.
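The hoop API is not documented on this page, so the following is only a minimal, hypothetical Java sketch of the chaining idea: every module consumes a list of items and emits a list of items, and a graph feeds each module's output into the next one. The names `Hoop`, `HoopGraph`, `TokenizerHoop`, `LowercaseHoop` and `StopwordHoop` are illustrative assumptions, not Hoop's actual classes, and a real Hoop graph is a general graph rather than the linear chain shown here.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.Set;

class HoopGraphSketch {
    public static void main(String[] args) {
        // Stitch three hypothetical hoops into a linear chain.
        HoopGraph graph = new HoopGraph()
            .connect(new TokenizerHoop())
            .connect(new LowercaseHoop())
            .connect(new StopwordHoop(Set.of("the", "a", "of")));
        System.out.println(graph.run(List.of("The parse of a TREC question")));
    }
}

/** Hypothetical stand-in for a Hoop module: consumes items, emits items. */
interface Hoop {
    List<String> process(List<String> input);
}

/** Splits each input text into whitespace-separated tokens. */
class TokenizerHoop implements Hoop {
    public List<String> process(List<String> input) {
        List<String> out = new ArrayList<>();
        for (String text : input) {
            for (String tok : text.trim().split("\\s+")) {
                if (!tok.isEmpty()) out.add(tok); // skip the empty token produced by blank input
            }
        }
        return out;
    }
}

/** Lower-cases every token. */
class LowercaseHoop implements Hoop {
    public List<String> process(List<String> input) {
        List<String> out = new ArrayList<>();
        for (String tok : input) out.add(tok.toLowerCase(Locale.ROOT));
        return out;
    }
}

/** Drops tokens that appear in a stopword set. */
class StopwordHoop implements Hoop {
    private final Set<String> stopwords;

    StopwordHoop(Set<String> stopwords) { this.stopwords = stopwords; }

    public List<String> process(List<String> input) {
        List<String> out = new ArrayList<>();
        for (String tok : input) if (!stopwords.contains(tok)) out.add(tok);
        return out;
    }
}

/** Chains hoops so that each one's output becomes the next one's input. */
class HoopGraph {
    private final List<Hoop> hoops = new ArrayList<>();

    HoopGraph connect(Hoop hoop) {
        hoops.add(hoop);
        return this;
    }

    List<String> run(List<String> input) {
        List<String> data = input;
        for (Hoop hoop : hoops) data = hoop.process(data);
        return data;
    }
}
```

Because every module shares one input/output contract, modules can be reordered or swapped without changing their neighbours, which is what makes drag-and-drop composition possible.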

1. A drag-and-drop editor that lets you stitch together custom tools and applications

2. A rich API and developer support for rapid text analysis prototyping

3. A large set of language-oriented and related libraries with a comprehensible API (see below)

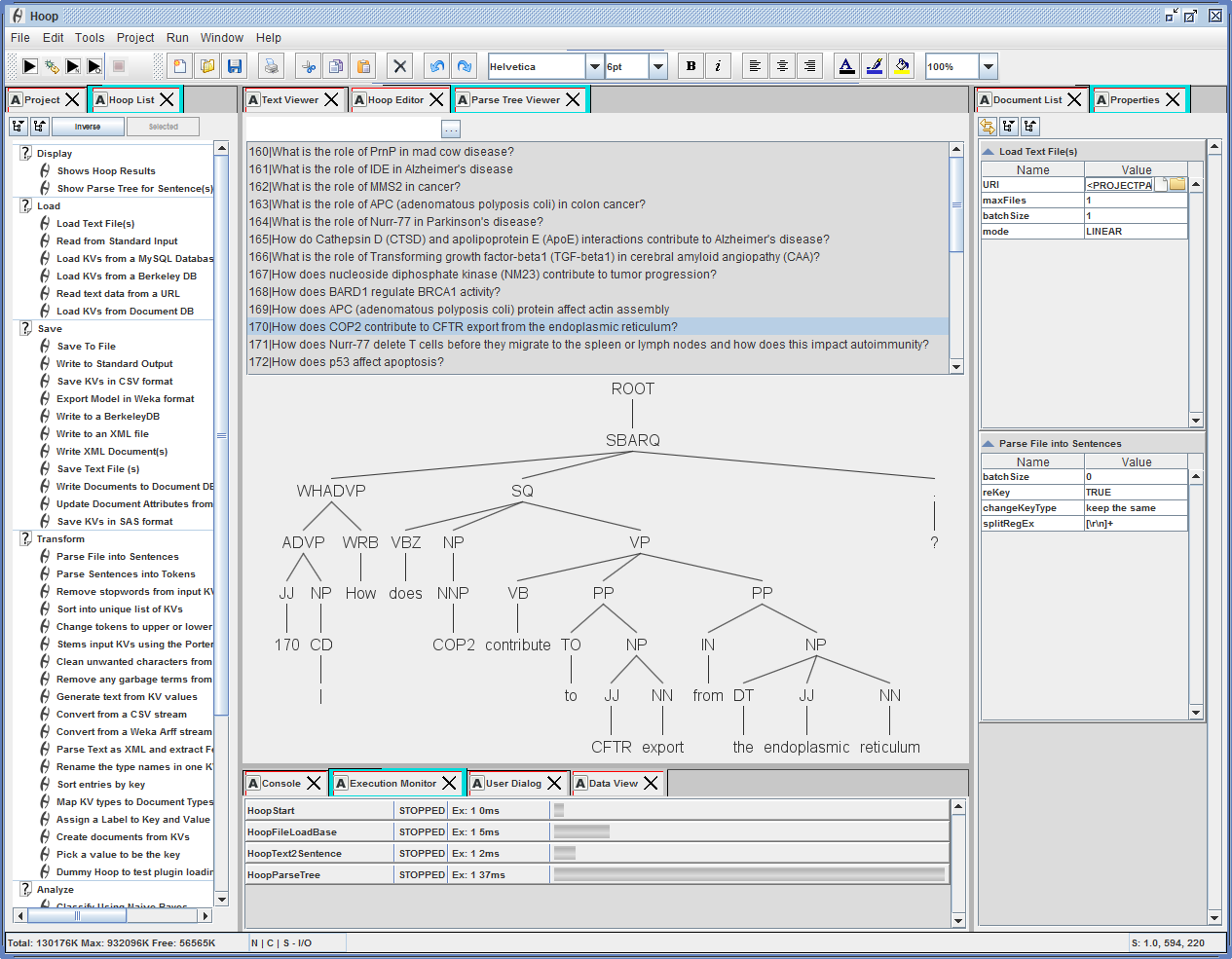

Many popular language processing tools are available natively from within the IDE. For example, the Hoop graph shown below parses and displays a text file containing TREC questions; its last hoop is a visualization wrapper for the Stanford NLP parse tree visualization panel.

In large, complex language analysis systems we often analyze our results statistically without checking whether the individual steps are correct. Worse, we tend not to look at the cases that parsers reject or that machine learning classifiers misclassify. Hoop provides a way to examine and interrogate each hoop in a transformation process or analysis step while it is doing its job. In short, Hoop aims to provide:

- Inspectability

Most systems only allow an after-action review of a completed pipeline (a CPE, or Collection Processing Engine, in UIMA terms). This makes it very difficult to inspect what data was produced in each step (the CASes) and what data was discarded. Hoop integrates an inspection system that can be activated at any time during or after a Hoop sequence runs. Clicking the magnifying glass in a selected hoop panel shows the data created in that step as well as the data that was discarded.

For more information, see the main Inspectability page.
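To make the idea concrete, here is a minimal, hypothetical sketch (not Hoop's implementation) of a processing step that keeps its own record of what it passed on and what it rejected, so that both lists can be examined during or after a run. `InspectableFilter` and its `produced()`/`discarded()` accessors are assumed names for illustration; the sketch is single-threaded.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.function.Predicate;

class InspectionSketch {
    public static void main(String[] args) {
        // Hypothetical step: keep only inputs that look like questions.
        InspectableFilter questions = new InspectableFilter(s -> s.endsWith("?"));
        List<String> out = questions.process(List.of(
            "What is the capital of France?",
            "Paris is the capital.",
            "Who wrote Hamlet?"));
        System.out.println("passed on: " + out);
        // The "magnifying glass": what this step kept and what it dropped.
        System.out.println("produced:  " + questions.produced());
        System.out.println("discarded: " + questions.discarded());
    }
}

/** Hypothetical filtering step that remembers both its output and its rejects. */
class InspectableFilter {
    private final Predicate<String> keep;
    private final List<String> produced = new ArrayList<>();
    private final List<String> discarded = new ArrayList<>();

    InspectableFilter(Predicate<String> keep) { this.keep = keep; }

    List<String> process(List<String> input) {
        List<String> out = new ArrayList<>();
        for (String item : input) {
            if (keep.test(item)) {
                out.add(item);
                produced.add(item);   // recorded as it happens, so it is inspectable mid-run
            } else {
                discarded.add(item);  // rejects are kept instead of silently dropped
            }
        }
        return out;
    }

    List<String> produced() { return Collections.unmodifiableList(produced); }

    List<String> discarded() { return Collections.unmodifiableList(discarded); }
}
```

Keeping the rejects costs memory, but it is what makes the misclassified or unparsed cases visible at all.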

- Explainability

In a complex system that runs on a cluster, where essentially all steps happen in parallel, it can be difficult if not impossible to understand exactly what is happening to the system and the data it processes. Hoop aims both to provide visualization tools that show how data is managed, manipulated, and pushed through the pipeline, and to make the results comprehensible through enhanced text visualizations (e.g., a document wall that highlights text based on likelihood estimates).

For more information, see the main Explainability page.

- Repeatability

In the first instance, the Hoop code should make it possible to repeat an experiment hundreds or thousands of times, possibly trying a different permutation of the pipeline each time. The ultimate goal, however, is a system that can run indefinitely, akin to online learning, but with a strong feedback loop that can reintegrate previously discarded data if the system detects faults in the assumptions it previously relied on.

For more information, see the main Repeatability page.
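The "repeat an experiment with different permutations of a pipeline" idea can be sketched as follows. This is a hypothetical illustration, not Hoop code: `Step` and `PermutationRunner` are assumed names, the three string steps are toy examples, and all steps are deterministic. A pipeline with stochastic steps would additionally need fixed random seeds for the repeat check to be meaningful.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.function.UnaryOperator;

class PermutationSketch {
    public static void main(String[] args) {
        List<Step> steps = List.of(
            new Step("lower", s -> s.toLowerCase(Locale.ROOT)),
            new Step("strip", s -> s.replaceAll("[^a-z ]", "")), // keep lowercase letters and spaces only
            new Step("trunc4", s -> s.length() > 4 ? s.substring(0, 4) : s));
        // 3 steps give 3! = 6 pipeline variants; each variant is run 100 times.
        Map<String, String> results = PermutationRunner.runAll(steps, "Who, Me?", 100);
        results.forEach((order, out) -> System.out.println(order + " => \"" + out + "\""));
    }
}

/** A named pipeline step (hypothetical, for illustration only). */
class Step {
    final String name;
    final UnaryOperator<String> fn;

    Step(String name, UnaryOperator<String> fn) {
        this.name = name;
        this.fn = fn;
    }
}

/** Runs every ordering of a set of steps and checks that each ordering is repeatable. */
class PermutationRunner {
    static List<List<Step>> permutations(List<Step> steps) {
        List<List<Step>> result = new ArrayList<>();
        permute(new ArrayList<>(), new ArrayList<>(steps), result);
        return result;
    }

    // Classic backtracking: move one remaining step into the prefix, recurse, then undo.
    private static void permute(List<Step> prefix, List<Step> remaining, List<List<Step>> result) {
        if (remaining.isEmpty()) {
            result.add(new ArrayList<>(prefix));
            return;
        }
        for (int i = 0; i < remaining.size(); i++) {
            Step s = remaining.remove(i);
            prefix.add(s);
            permute(prefix, remaining, result);
            prefix.remove(prefix.size() - 1);
            remaining.add(i, s);
        }
    }

    static String run(List<Step> order, String input) {
        String data = input;
        for (Step s : order) data = s.fn.apply(data);
        return data;
    }

    static String describe(List<Step> order) {
        List<String> names = new ArrayList<>();
        for (Step s : order) names.add(s.name);
        return String.join(" -> ", names);
    }

    static Map<String, String> runAll(List<Step> steps, String input, int repeats) {
        Map<String, String> results = new LinkedHashMap<>();
        for (List<Step> order : permutations(steps)) {
            String first = run(order, input);
            // Re-run the same configuration and fail loudly if it is not reproducible.
            for (int r = 1; r < repeats; r++) {
                if (!run(order, input).equals(first)) {
                    throw new IllegalStateException("non-repeatable run: " + describe(order));
                }
            }
            results.put(describe(order), first);
        }
        return results;
    }
}
```

Even this toy example shows why trying permutations matters: stripping before lower-casing deletes the capital letters, so the step order changes the result.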

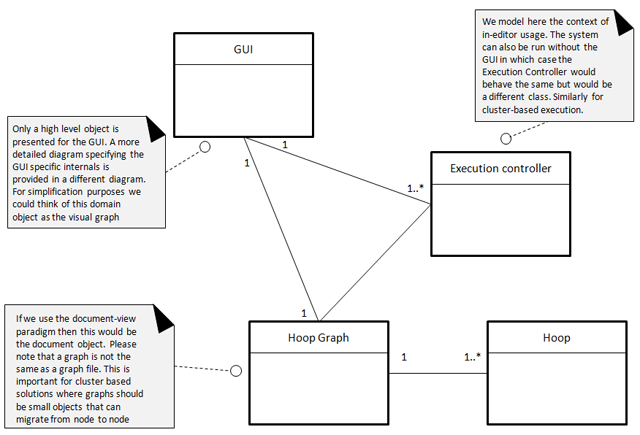

At a basic level, the Hoop linguistic workbench uses a Document/View/Controller architecture, but with a twist: the parts can be separated. For example, you can detach the Controller/Document part and run it on a cluster, or run only the Document/View part and use it purely for modeling tasks. The user documentation is included below, but it will also be provided separately for those who only want to use the tools as-is.
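One way such a detachable split can work is sketched below. This is a hypothetical illustration, not Hoop's actual classes: `AnalysisDocument`, `DocumentView` and `AnalysisController` are assumed names. The controller writes results into the document and knows nothing about views; the document notifies whichever views happen to be attached. With no views attached, the same controller/document pair runs headless, which is the kind of configuration you would deploy on a cluster node.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.UnaryOperator;

class HeadlessSketch {
    public static void main(String[] args) {
        // Headless run: controller + document, no views attached.
        AnalysisDocument headless = new AnalysisDocument();
        new AnalysisController(headless, String::trim).processAll(List.of("  a  ", " b"));
        System.out.println(headless.results());

        // Interactive run: same classes, plus a view that renders each update.
        AnalysisDocument interactive = new AnalysisDocument();
        interactive.addView(r -> System.out.println("view received: " + r));
        new AnalysisController(interactive, String::trim).processAll(List.of(" c "));
    }
}

/** Hypothetical view callback; a GUI panel would implement this. */
interface DocumentView {
    void resultAdded(String result);
}

/** Document (model): stores results and notifies any attached views. */
class AnalysisDocument {
    private final List<String> results = new ArrayList<>();
    private final List<DocumentView> views = new ArrayList<>();

    void addView(DocumentView view) { views.add(view); }

    void addResult(String result) {
        results.add(result);
        for (DocumentView view : views) view.resultAdded(result); // no-op when headless
    }

    List<String> results() { return List.copyOf(results); }
}

/** Controller: runs the processing step and writes into the document; it never touches views. */
class AnalysisController {
    private final AnalysisDocument doc;
    private final UnaryOperator<String> step;

    AnalysisController(AnalysisDocument doc, UnaryOperator<String> step) {
        this.doc = doc;
        this.step = step;
    }

    void processAll(List<String> inputs) {
        for (String input : inputs) doc.addResult(step.apply(input));
    }
}
```

Because the dependency only points from document to view through a small interface, removing the GUI does not require any change to the processing code.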

Originally written as support code for graduate classes and smaller narrative projects, the code is slowly growing into a larger text-based data mining framework.