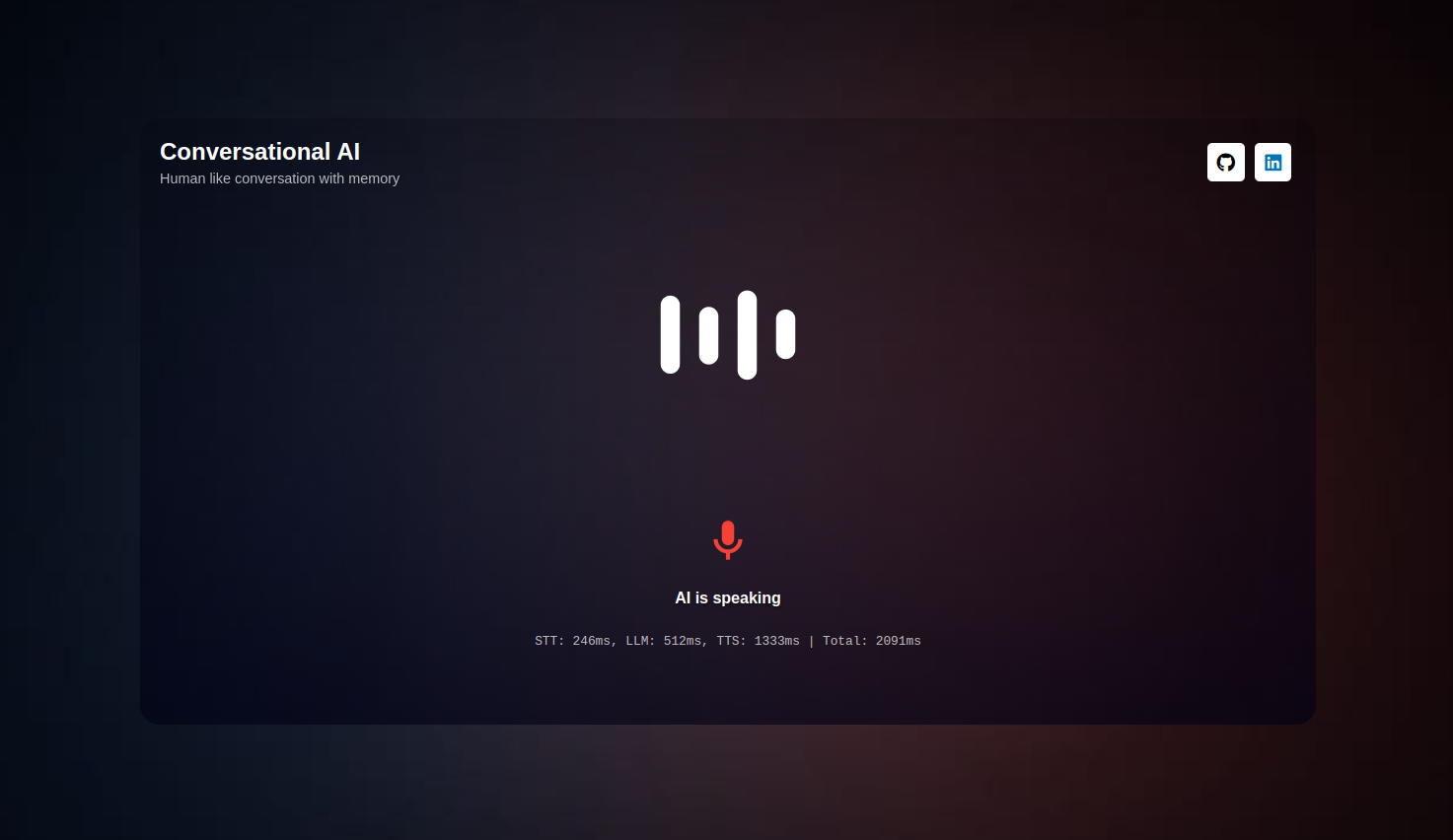

A real-time voice AI that enables human-like conversations, with conversational memory and support for interruptions. The project chains together models for Speech-to-Text (STT), a fast Groq-hosted LLM, and Text-to-Speech (TTS), all communicating over WebSocket with about 1900 ms latency.
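To make the STT → LLM → TTS flow with memory and interruptions more concrete, here is a minimal sketch in Python. It is not the project's actual code: `fake_stt`, `fake_llm`, `fake_tts`, and the `Conversation` class are hypothetical stand-ins, and `send` is assumed to be whatever coroutine pushes audio back over the WebSocket. The idea it illustrates is that each new user utterance cancels any reply still in progress, while the full message history is kept for context.

```python
import asyncio


# Hypothetical placeholders standing in for real STT / LLM / TTS services.
async def fake_stt(audio_chunk):
    await asyncio.sleep(0)  # yield control, as a network call would
    return audio_chunk.decode("utf-8")


async def fake_llm(history):
    await asyncio.sleep(0.05)  # simulated model latency
    return f"You said: {history[-1]['content']}"


async def fake_tts(text):
    await asyncio.sleep(0)
    return text.encode("utf-8")


class Conversation:
    """Keeps message history (memory) and at most one reply in flight."""

    def __init__(self):
        self.history = []
        self._reply_task = None

    async def _respond(self, send):
        reply = await fake_llm(self.history)
        audio = await fake_tts(reply)
        self.history.append({"role": "assistant", "content": reply})
        await send(audio)

    async def on_user_audio(self, audio_chunk, send):
        # Interruption: if the user speaks while a reply is pending, cancel it.
        if self._reply_task is not None and not self._reply_task.done():
            self._reply_task.cancel()
        text = await fake_stt(audio_chunk)
        self.history.append({"role": "user", "content": text})
        self._reply_task = asyncio.create_task(self._respond(send))
        return self._reply_task


async def demo():
    sent = []

    async def send(audio):  # stand-in for a WebSocket send
        sent.append(audio)

    convo = Conversation()
    first = await convo.on_user_audio(b"hello", send)
    second = await convo.on_user_audio(b"wait, stop", send)  # interrupts the first reply
    await asyncio.gather(first, second, return_exceptions=True)
    return convo.history, sent, first.cancelled()


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

In this sketch the second utterance arrives before the first reply is produced, so only the second reply is sent, while both user messages remain in the history. A real implementation would stream partial audio and handle cancellation mid-playback, which this sketch does not attempt.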

If you find this helpful, consider giving it a ⭐!

Note: This is an initial version. Future plans include integrating a low-latency Speech-to-Speech model from OpenAI.

Clone the project

    git clone https://github.com/JoyalAJohney/Conversational-AI.git

Go to the project directory

    cd Conversational-AI

Create a .env file with your API keys

    GROQ_API_KEY=
    DEEPGRAM_API_KEY=

Run the Docker container

    docker-compose up --build

Go to http://localhost:8000 to see the magic!
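If the container starts but the app fails to respond, an empty API key is a likely cause. The following optional sketch checks that the `.env` file defines non-empty values for both keys before you run Docker. The helper names `parse_env` and `missing_keys` are illustrative and not part of the project, and the parser only handles simple `KEY=value` lines.

```python
from pathlib import Path

REQUIRED_KEYS = ("GROQ_API_KEY", "DEEPGRAM_API_KEY")


def parse_env(text):
    """Parse simple KEY=value lines, skipping blanks and # comments."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_keys(text, required=REQUIRED_KEYS):
    """Return the required keys that are absent or empty."""
    values = parse_env(text)
    return [key for key in required if not values.get(key)]


if __name__ == "__main__":
    env_path = Path(".env")
    text = env_path.read_text() if env_path.exists() else ""
    missing = missing_keys(text)
    if missing:
        print("Missing keys: " + ", ".join(missing))
    else:
        print("All required keys are set.")
```

Save it under any name in the project directory and run it with Python from there, so that it reads the same `.env` file Docker Compose will use.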