PDL is a declarative language designed for developers to create reliable, composable LLM prompts and integrate them into software systems. It provides a structured way to specify prompt templates, enforce validation, and compose LLM calls with traditional rule-based systems.


A PDL program is written declaratively, in YAML. The pdl command-line tool interprets this program, accumulating messages and sending them to the models as specified by your program. PDL supports both hosted and local models. See here for instructions on how to install an Ollama model locally.

To install the pdl command-line tool:

pip install prompt-declaration-language

Check out AutoPDL, PDL's prompt optimizer tool (Spiess et al., 2025)! AutoPDL can optimize any part of a PDL program: not only few-shot examples and textual prompts, but also prompting patterns. It outputs an optimized PDL program.

For a tutorial on how to use AutoPDL, see AutoPDL.

The following program accumulates a single message ("write a hello world example…") and sends it to the ollama/granite-3.2:8b model:

text:
- "write a hello world example, and explain how to run it"
- model: ollama/granite-3.2:8b

To run this program:

pdl <path/to/example.pdl>

For more information on the pdl CLI, see here. To try the program in the screenshot on the right live, click here.

The screenshot on the right (above) shows PDL's graphical user interface. This GUI allows for interactive debugging and live programming. You can install it via brew install pdl on macOS; for other platforms, downloads are available here. You can also try out a web version of the GUI here.

To generate a trace for use in the GUI:

pdl --trace <file.json> <my-example.pdl>

Key features:

- LLM Integration: Compatible with any LLM, including IBM watsonx
- Prompt Engineering:
  - Template system for single/multi-shot prompting
  - Composition of multiple LLM calls
  - Integration with tools (code execution & APIs)
- Development Tools:
  - Type checking for model I/O
  - Python SDK
  - Chat API support
  - Live document visualization for debugging
- Control Flow: Variables, conditionals, loops, and functions
- I/O Operations: File/stdin reading, JSON parsing
- API Integration: Native REST API support (Python)
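The control-flow and type-checking features listed above can be exercised without calling a model at all. The program below is a minimal sketch, assuming the block syntax of recent PDL releases (data, function/return, call/args, for/repeat, if/then/else) and assuming str is an accepted type name for function parameters. The names names and greet are purely illustrative. Because it makes no model calls, it should run with the pdl command alone.

```yaml
description: Control flow sketch (hypothetical example; names are illustrative)
defs:
  # A variable holding plain data; no model call is involved
  names:
    data: [Alice, Bob, Carol]
  # A function whose parameter is declared with a type (assumed type name: str)
  greet:
    function:
      name: str
    return: "Hello, ${ name }!\n"
text:
# Loop over the list, calling the function once per element
- for:
    n: ${ names }
  repeat:
    call: ${ greet }
    args:
      name: ${ n }
# Branch on a Jinja expression evaluated against the variable above
- if: ${ names | length > 2 }
  then: "That was a big group.\n"
  else: "That was a small group.\n"
```

Each iteration of the for loop calls greet, so the output should contain one greeting per name, followed by the line selected by the if block.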

PDL requires Python 3.11+ (Windows users should use WSL).

# Basic installation
pip install prompt-declaration-language

# Development installation with examples
pip install 'prompt-declaration-language[examples]'

You can run PDL with local models through Ollama, or with models hosted by a cloud service. See here for instructions on how to install an Ollama model locally.

If you use watsonx:

export WX_URL="https://{region}.ml.cloud.ibm.com"
export WX_API_KEY="your-api-key"
export WATSONX_PROJECT_ID="your-project-id"

If you use Replicate:

export REPLICATE_API_TOKEN="your-token"

For syntax highlighting and validation in VSCode, install the YAML Language Support by Red Hat extension and add the following settings:

// .vscode/settings.json
{
  "yaml.schemas": {
    "https://ibm.github.io/prompt-declaration-language/dist/pdl-schema.json": "*.pdl"
  },
  "files.associations": {
    "*.pdl": "yaml"
  }
}

The following example reads external content from data.yaml and uses watsonx as the LLM provider:

description: Template with variables
defs:
  user_input:
    read: ../code/data.yaml
    parser: yaml
text:
- model: watsonx/ibm/granite-34b-code-instruct
  input: |
    Process this input: ${user_input}
    Format the output as JSON.

Code execution:

description: Code execution example

text:
- "\nFind a random number between 1 and 20\n"
- def: N
  lang: python
  code: |
    import random
    # (In PDL, set `result` to the output you wish for your code block.)
    result = random.randint(1, 20)
- "\nthe result is (${ N })\n"

Chat interactions:

description: chatbot

text:

- read:

def: user_input

message: "hi? [/bye to exit]\n"

contribute: [context]

- repeat:

text:

- model: ollama/granite-code:8b

- read:

def: user_input

message: "> "

contribute: [context]

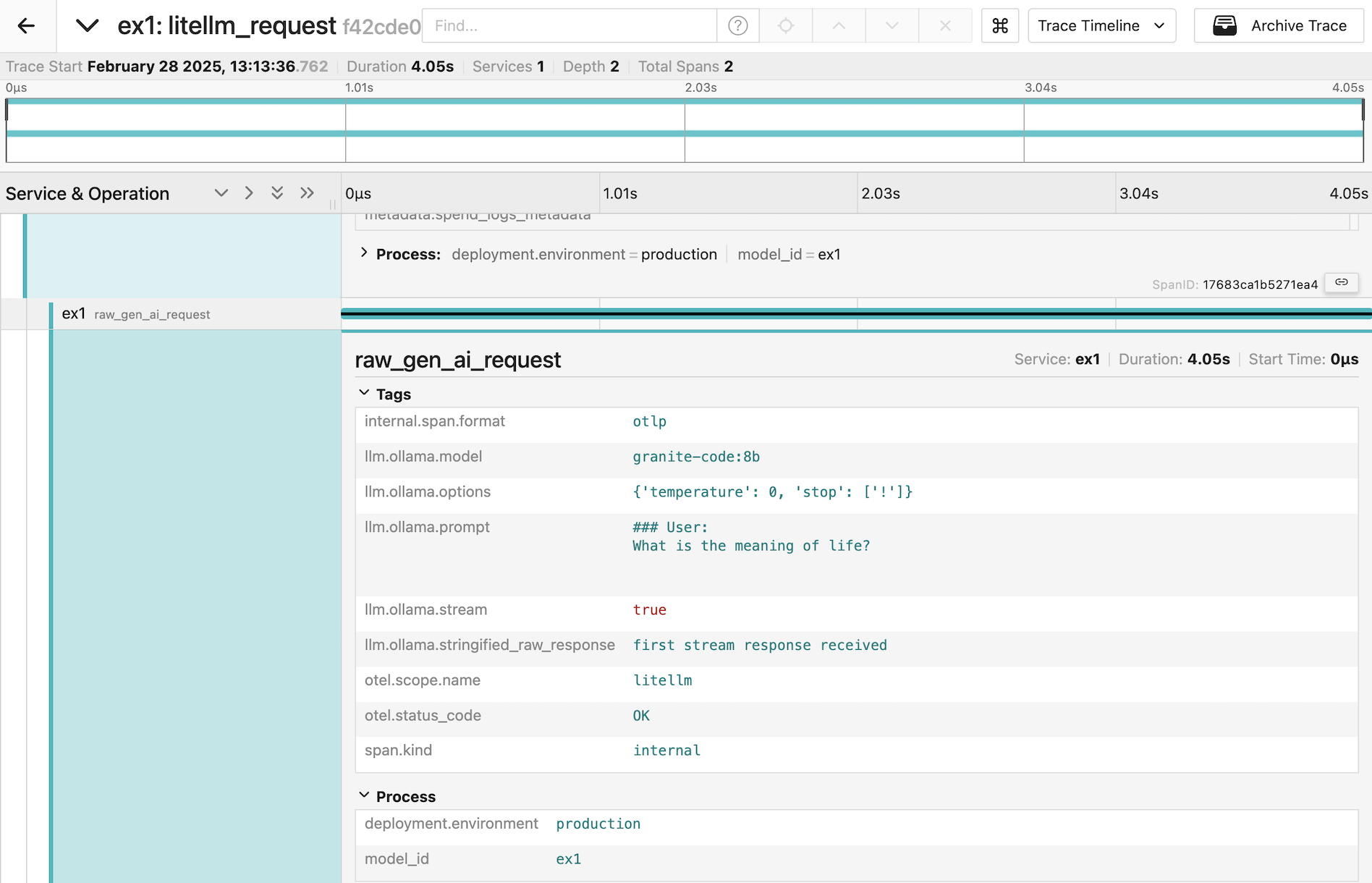

until: ${ user_input == '/bye'}PDL includes experimental support for gathering trace telemetry. This can be used for debugging or performance analysis, and to see the shape of prompts sent by LiteLLM to models.

For more information see here.

See the contribution guidelines for details on:

- Code style

- Testing requirements

- PR process

- Issue reporting