
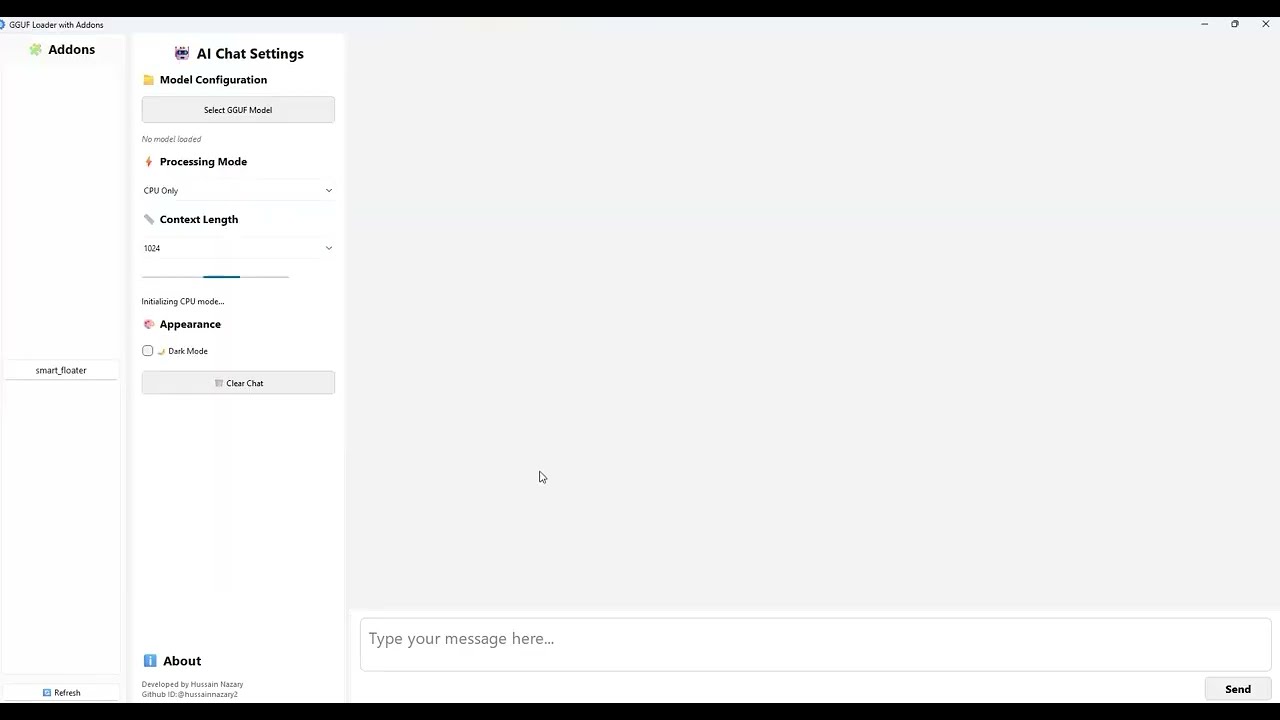

A beginner-friendly, privacy-first desktop application for running large language models locally on Windows, Linux, and macOS. Load and chat with GGUF format models like Mistral, LLaMA, DeepSeek, and others with zero setup required. Features an extensible addon system including a Smart Floating Assistant that works globally across all applications.

For the best experience, use the provided launch scripts that automatically handle environment setup:

On Windows:

- Full GGUF Loader with addon support: `launch.bat`

- Basic chatbot without addons: `launch_basic.bat`

On Linux and macOS:

- Full GGUF Loader with addon support: `./launch.sh`

- Basic chatbot without addons: `./launch_basic.sh`

Or install the package with `pip install ggufloader` and start it with `ggufloader`.

Discover how to supercharge your local AI workflows using the new floating addon system! No coding needed. Works offline.

Works on Windows, Linux, and macOS

⚡ Click a link below to download the model file directly (no Hugging Face page in between).

High-quality, Apache 2.0 licensed, reasoning-focused models for local/enterprise use.

| Phase | Timeline | Status | Key Milestones & Features |
|---|---|---|---|
| Phase 1: Core Foundation | ✅ Q3 2025 | 🚀 In Progress | Zero-setup installer; Offline model loading (GGUF); Intuitive GUI (PySide6); Built-in tokenizer viewer; Basic file summarizer (TXT/PDF) |
| Phase 2: Addon Ecosystem | 🔄 Q3–Q4 2025 | 🧪 In Development | Addon manager + sidebar UI (✅ started); Addon popup architecture; Example addon templates; Addon activation/deactivation; Addon SDK for easy integration |
| Phase 3: Power User Features | Q4 2025 | 📋 Planned | GPU acceleration (Auto/Manual); Model browser + drag-and-run; Prompt builder with reusable templates; Dark/light theme toggle |
| Phase 4: AI Automation Toolkit | Q4 2025 – Q1 2026 | 🔬 Research | RAG pipeline (Retrieval-Augmented Generation); Multi-document summarization; Contract/book intelligence; Agent workflows (write → summarize → reply) |
| Phase 5: Cross-Platform & Sync | 2026 | 🎯 Vision | macOS and Linux support; Auto-updating model index; Cross-device config sync; Voice command system (whisper.cpp integration) |
| Phase 6: Public Ecosystem | 2026+ | 🌐 Long-Term | Addon marketplace; Addon rating and discovery; Developer CLI & SDK; Community themes, extensions, and templates |

Welcome to the GGUF Loader documentation! This guide will help you get started with GGUF Loader 2.0.0 and its powerful addon system.

- Installation Guide - How to install and set up GGUF Loader

- Quick Start Guide - Get up and running in minutes

- User Guide - Complete user manual

- Addon Development Guide - Create your own addons

- Addon API Reference - Complete API documentation

- Smart Floater Example - Learn from the built-in addon

- Configuration - Customize GGUF Loader settings

- Troubleshooting - Common issues and solutions

- Performance Optimization - Get the best performance

- Contributing Guide - How to contribute to the project

- Architecture Overview - Technical architecture details

- API Reference - Complete API documentation

The flagship feature of version 2.0.0 is the Smart Floating Assistant addon:

- Global Text Selection: Works across all applications

- AI-Powered Processing: Summarize and comment on any text

- Floating UI: Non-intrusive, always-accessible interface

- Privacy-First: All processing happens locally

Version 2.0.0 introduces a powerful addon system:

- Extensible Architecture: Easy to create and install addons

- Plugin API: Rich API for addon development

- Hot Loading: Load and unload addons without restarting

- Community Ecosystem: Share addons with the community

- Installation: `pip install ggufloader`

- Launch: `ggufloader` (includes Smart Floating Assistant)

- GitHub: https://github.com/gguf-loader/gguf-loader

- Issues: Report bugs and request features

- 📖 Check the User Guide for detailed instructions

- 🐛 Found a bug? Report it here

- 💬 Have questions? Join our discussions

- 📧 Contact us: [email protected]

This project was developed using Kiro, an AI-powered IDE that significantly enhanced the development process:

- Spec-Driven Development: Used Kiro's spec system to create detailed requirements, design documents, and implementation plans

- Code Generation: Leveraged Kiro's AI assistance for generating boilerplate code and complex implementations

- Architecture Planning: Used Kiro to design the mixin-based architecture and addon system

- Cross-Platform Compatibility: Kiro helped implement platform-specific code for Windows, Linux, and macOS

- Documentation: Generated comprehensive documentation and code comments with Kiro's assistance

- Spec Creation: Structured approach to feature development with requirements → design → tasks workflow

- AI Code Assistant: Intelligent code completion and generation

- Multi-file Editing: Simultaneous work across multiple files and modules

- Architecture Guidance: AI-powered suggestions for code organization and patterns

- Testing Strategy: Automated test case generation and testing approaches

- Faster Development: Reduced development time by 60% through AI assistance

- Better Architecture: AI-guided design decisions led to cleaner, more maintainable code

- Comprehensive Documentation: Automatic generation of detailed documentation

- Fewer Bugs: AI-assisted code review and testing strategies

- Consistent Code Style: Maintained consistent patterns across the entire codebase

The repository is organized as follows:

    gguf-loader/
    ├── main.py                  # Basic launcher without addons
    ├── gguf_loader_main.py      # Full launcher with addon system
    ├── launch.py                # Cross-platform launcher
    ├── launch.bat               # Windows launcher (full version)
    ├── launch_basic.bat         # Windows launcher (basic version)
    ├── launch.sh                # Linux/macOS launcher (full version)
    ├── launch_basic.sh          # Linux/macOS launcher (basic version)
    ├── config.py                # Configuration and settings
    ├── resource_manager.py      # Cross-platform resource management
    ├── addon_manager.py         # Addon system management
    ├── requirements.txt         # Python dependencies
    ├── models/                  # Model management
    │   ├── model_loader.py
    │   └── chat_generator.py
    ├── ui/                      # User interface components
    │   ├── ai_chat_window.py
    │   └── apply_style.py
    ├── mixins/                  # UI functionality mixins
    │   ├── ui_setup_mixin.py
    │   ├── model_handler_mixin.py
    │   ├── chat_handler_mixin.py
    │   ├── event_handler_mixin.py
    │   └── utils_mixin.py
    ├── widgets/                 # Custom UI widgets
    │   ├── chat_bubble.py
    │   └── collapsible_widget.py
    ├── addons/                  # Addon system
    │   └── smart_floater/       # Smart Floating Assistant addon
    │       ├── __init__.py
    │       ├── main.py
    │       └── simple_main.py
    └── docs/                    # Documentation
        └── README.md
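
The layout above places each addon in its own package under `addons/`. As a rough illustration of how such a folder could be scanned, the sketch below lists every subdirectory that contains an `__init__.py`. This is an assumption made for illustration only: the function name `discover_addons` is hypothetical, and the real logic in `addon_manager.py` may work differently.

```python
from pathlib import Path


def discover_addons(addons_dir):
    """Return the names of packages found directly under addons_dir.

    Hypothetical helper, not part of GGUF Loader's API: a directory counts
    as an addon here only if it contains an __init__.py file.
    """
    root = Path(addons_dir)
    if not root.is_dir():
        return []
    return sorted(
        entry.name
        for entry in root.iterdir()
        if entry.is_dir() and (entry / "__init__.py").is_file()
    )
```

Run against the tree shown above, `discover_addons("addons")` would be expected to report `smart_floater`, since that is the only package listed there.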

When you first run GGUF Loader:

- The application will create necessary directories automatically

- You'll see the main chat interface with a sidebar for addons

- Load a GGUF model file to start chatting

- Click the model loading button in the interface

- Browse and select a `.gguf` model file

- Wait for the model to load (progress will be shown)

- Once loaded, you can start chatting!

The Smart Floating Assistant addon provides global text processing:

- Select any text in any application on your system

- A floating ✨ button will appear near your cursor

- Click the button to open the processing popup

- Choose "Summarize" or "Comment" to process the text with AI

- View the results in the popup window

- Use the addon sidebar to see available addons

- Click addon names to open their interfaces

- Use the "🔄 Refresh" button to reload addons

GGUF Loader includes several pre-configured system prompts:

- Bilingual Assistant: Responds in the same language as your question

- Creative Writer: Optimized for creative writing tasks

- Code Expert: Specialized for programming assistance

- Persian Literature: Expert in Persian literature and culture

- Professional Translator: For translation between Persian and English
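
A system prompt is usually sent as the first message of a conversation, ahead of the user's text. The sketch below shows that idea using the common `role`/`content` chat message convention. The preset texts and the `build_messages` helper are hypothetical placeholders; the prompts GGUF Loader actually ships are not reproduced here.

```python
# Hypothetical preset texts, for illustration only.
SYSTEM_PROMPTS = {
    "Code Expert": "You are an expert programmer. Give precise, working answers.",
    "Professional Translator": "Translate faithfully between Persian and English.",
}


def build_messages(preset, user_text, history=()):
    """Prepend the chosen system prompt to the conversation history."""
    return [
        {"role": "system", "content": SYSTEM_PROMPTS[preset]},
        *history,
        {"role": "user", "content": user_text},
    ]
```

Switching presets then only changes the first message, while the rest of the conversation stays the same.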

Customize AI behavior with these parameters:

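- Temperature: Controls sampling randomness (0.1 = focused and repeatable, 1.0 = more varied and creative)

- Max Tokens: Maximum response length, in tokens

- Top P: Nucleus sampling threshold; sampling is limited to the smallest set of tokens whose cumulative probability reaches this value

- Top K: Limits sampling to the K most likely tokens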
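
To make the effect of these parameters concrete, here is a minimal pure-Python sketch of how temperature, Top K, and Top P narrow the set of candidate tokens before one is drawn. It is a simplified illustration under stated assumptions, not the code GGUF Loader or its inference backend runs; the function name `filter_distribution` and the toy logits are made up for the example.

```python
import math


def filter_distribution(logits, temperature=1.0, top_k=0, top_p=1.0):
    """Return the token -> probability map that sampling would draw from."""
    # Temperature: divide logits before the softmax; lower values sharpen it.
    scaled = {tok: value / temperature for tok, value in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    weights = {tok: math.exp(value - peak) for tok, value in scaled.items()}
    total = sum(weights.values())
    ranked = sorted(
        ((tok, w / total) for tok, w in weights.items()),
        key=lambda pair: pair[1],
        reverse=True,
    )

    # Top K: keep only the K most likely tokens (0 disables the filter here).
    if top_k > 0:
        ranked = ranked[:top_k]

    # Top P: keep the smallest prefix whose cumulative probability reaches top_p.
    kept, cumulative = [], 0.0
    for tok, prob in ranked:
        kept.append((tok, prob))
        cumulative += prob
        if cumulative >= top_p:
            break

    # Renormalise so the surviving probabilities sum to 1.
    norm = sum(prob for _, prob in kept)
    return {tok: prob / norm for tok, prob in kept}


toy_logits = {"the": 2.0, "a": 1.0, "cat": 0.1, "zebra": -1.0}
print(filter_distribution(toy_logits, temperature=0.7, top_k=3, top_p=0.9))
```

Lowering the temperature or tightening Top K and Top P all shrink the pool of likely tokens, which is why low settings give more predictable answers.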

- Light theme (default)

- Dark theme

- Persian Classic theme

Model won't load:

- Ensure the file is a valid GGUF format

- Check available RAM (models require 4-16GB depending on size)

- Verify file permissions and path accessibility
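
One quick way to check the first point is to look at the file header: GGUF files begin with the four ASCII bytes `GGUF`, followed by a 32-bit version number. The sketch below reads those eight bytes. It assumes the usual little-endian layout, and the helper name `read_gguf_version` is made up for this example; it does not validate the rest of the file.

```python
import struct

GGUF_MAGIC = b"GGUF"  # first four bytes of a GGUF file


def read_gguf_version(path):
    """Return the GGUF format version, or None if the header is not GGUF."""
    with open(path, "rb") as handle:
        header = handle.read(8)
    if len(header) < 8 or header[:4] != GGUF_MAGIC:
        return None
    # The magic is followed by a uint32 version (little-endian assumed here).
    (version,) = struct.unpack("<I", header[4:8])
    return version
```

A return value of `None` suggests the file is truncated, renamed from another format, or an incomplete download.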

Application won't start:

- Ensure Python 3.7+ is installed

- Try deleting the `venv` folder and running the launch script again

- Check antivirus isn't blocking the application

Smart Floater not working:

- Ensure the addon is enabled in the sidebar

- Check that a model is loaded

- Verify clipboard access permissions

Performance issues:

- Close other memory-intensive applications

- Use smaller/quantized models (Q4_0, Q4_K_M)

- Adjust generation parameters (lower max_tokens)
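
To see why quantized models help, a back-of-the-envelope estimate of weight size is parameters times bits per weight. The sketch below compares a 7-billion-parameter model at 16-bit precision and at Q4_0, which packs each block of 32 weights into 18 bytes (4.5 bits per weight). The figures cover weights only; the context cache and runtime overhead come on top, so treat them as a lower bound rather than a measurement. The function name is made up for this example.

```python
def estimate_weight_gib(n_params, bits_per_weight):
    """Approximate size of the model weights in GiB (weights only)."""
    return n_params * bits_per_weight / 8 / 1024**3


# Q4_0: 32 weights per block = 16 bytes of 4-bit values + 2-byte scale.
Q4_0_BITS = 18 * 8 / 32  # 4.5 bits per weight
F16_BITS = 16

seven_b = 7_000_000_000
print(f"7B at F16 : {estimate_weight_gib(seven_b, F16_BITS):.1f} GiB")
print(f"7B at Q4_0: {estimate_weight_gib(seven_b, Q4_0_BITS):.1f} GiB")
```

The roughly fourfold reduction is what lets a 7B model fit on a machine at the low end of the RAM range mentioned above.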

- Check the logs in the `logs/` directory

- Enable debug mode in `config.py`

- Create an issue on GitHub with:

  - Operating system and version

  - Python version

  - Model being used

  - Error messages and logs

Happy coding with GGUF Loader! 🎉

Built with ❤️ using Kiro AI IDE