Try it online! | API Docs | Community Forum

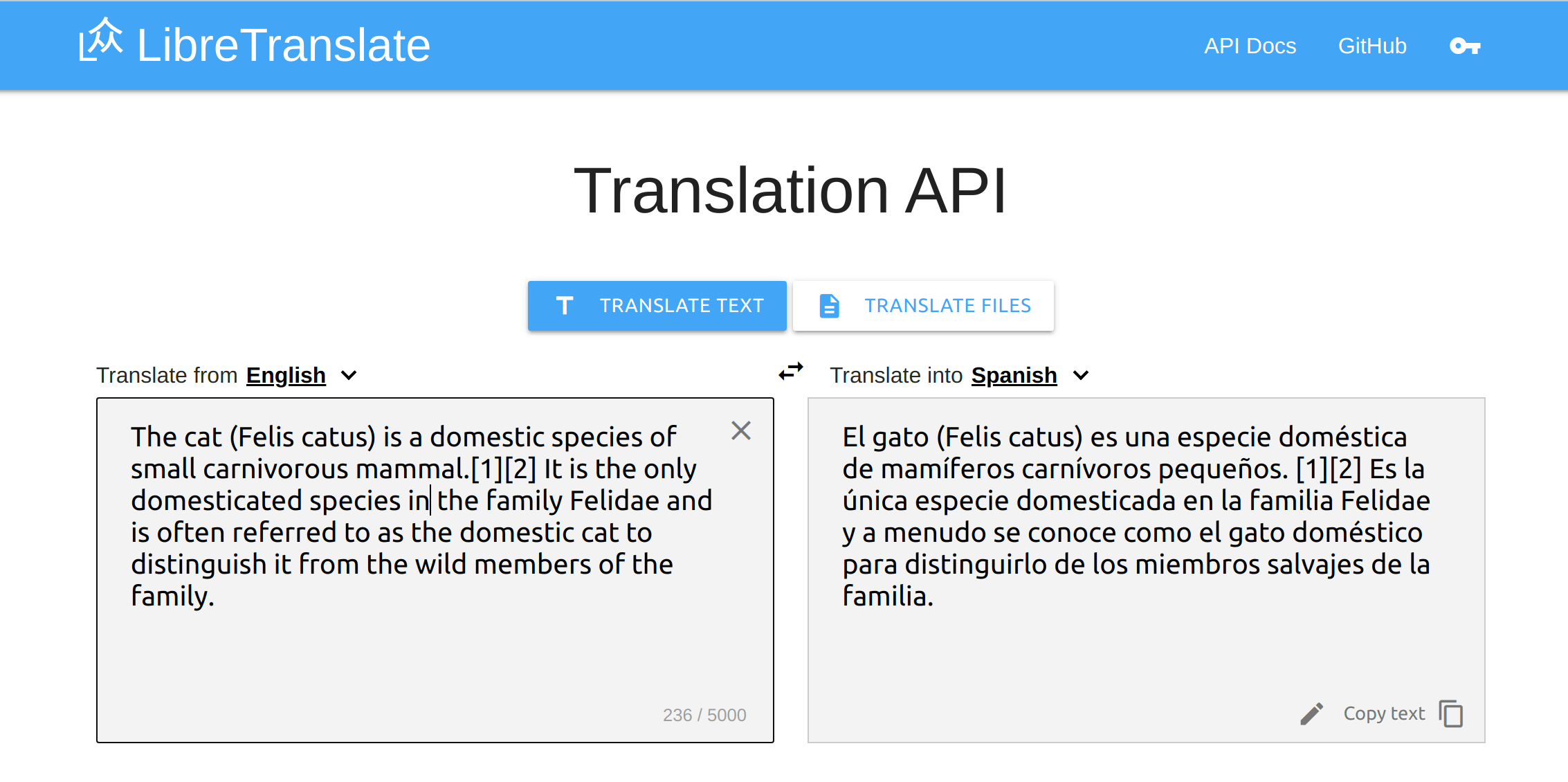

Free and Open Source Machine Translation API, entirely self-hosted. Unlike other APIs, it doesn't rely on proprietary providers such as Google or Azure to perform translations. Instead, its translation engine is powered by the open source Argos Translate library.

Request:

```javascript
const res = await fetch("https://libretranslate.com/translate", {
  method: "POST",
  body: JSON.stringify({
    q: "Hello!",
    source: "en",
    target: "es"
  }),
  headers: { "Content-Type": "application/json" }
});

console.log(await res.json());
```

Response:

```json
{
  "translatedText": "¡Hola!"
}
```

List of language codes: https://libretranslate.com/languages
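The request pattern above can be wrapped in a small reusable helper. This is a minimal sketch, not an official client: `translate` and `buildTranslateBody` are hypothetical names, it assumes a runtime with a global `fetch` (Node.js 18+ or a browser), and it assumes that error responses carry an `error` field in their JSON body.

```javascript
// Minimal sketch of a reusable helper around POST /translate (hypothetical names,
// not an official client). Assumes a global `fetch` (Node.js 18+ or a browser);
// a custom fetch implementation can be injected via `options.fetch`.

const DEFAULT_BASE_URL = "https://libretranslate.com";

// Build the JSON body. `q` may be a single string or an array of strings.
function buildTranslateBody(q, source, target, options = {}) {
  const body = { q, source, target };
  if (options.format) body.format = options.format; // "text" or "html"
  if (options.alternatives) body.alternatives = options.alternatives;
  if (options.apiKey) body.api_key = options.apiKey; // optional per-key quota
  return body;
}

async function translate(q, source, target, options = {}) {
  const baseUrl = options.baseUrl ?? DEFAULT_BASE_URL;
  const fetchImpl = options.fetch ?? fetch;
  const res = await fetchImpl(`${baseUrl}/translate`, {
    method: "POST",
    body: JSON.stringify(buildTranslateBody(q, source, target, options)),
    headers: { "Content-Type": "application/json" },
  });
  // Tolerate non-JSON bodies (e.g. an HTML error page from a reverse proxy).
  const data = await res.json().catch(() => ({}));
  if (!res.ok) {
    // Assumption: error responses carry a JSON `error` field.
    throw new Error(`LibreTranslate request failed (${res.status}): ${data.error ?? "unknown error"}`);
  }
  return data;
}
```

For example, `translate(["Hello", "world"], "en", "es", { apiKey: "YOUR_API_KEY" })` would send a batch request authenticated with a key (`YOUR_API_KEY` is a placeholder), and passing `{ baseUrl: "http://localhost:5000" }` targets a self-hosted instance instead.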

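To detect the source language automatically, set `source` to `"auto"`.

Request:

```javascript
const res = await fetch("https://libretranslate.com/translate", {
  method: "POST",
  body: JSON.stringify({
    q: "Ciao!",
    source: "auto",
    target: "en"
  }),
  headers: { "Content-Type": "application/json" }
});

console.log(await res.json());
```

Response:

```json
{
  "detectedLanguage": {
    "confidence": 83,
    "language": "it"
  },
  "translatedText": "Bye!"
}
```

To translate HTML while preserving its markup, set `format` to `"html"`.

Request: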

```javascript
const res = await fetch("https://libretranslate.com/translate", {
  method: "POST",
  body: JSON.stringify({
    q: '<p class="green">Hello!</p>',
    source: "en",
    target: "es",
    format: "html"
  }),
  headers: { "Content-Type": "application/json" }
});

console.log(await res.json());
```

Response:

```json
{
  "translatedText": "<p class=\"green\">¡Hola!</p>"
}
```

To request alternative translations, set `alternatives` to the number of alternatives you want.

Request:

```javascript
const res = await fetch("https://libretranslate.com/translate", {
  method: "POST",
  body: JSON.stringify({
    q: "Hello",
    source: "en",
    target: "it",
    format: "text",
    alternatives: 3
  }),
  headers: { "Content-Type": "application/json" }
});

console.log(await res.json());
```

Response:

```json
{
  "alternatives": [
    "Salve",
    "Pronto"
  ],
  "translatedText": "Ciao"
}
```

You can run your own API server with just a few lines of setup!

Make sure you have Python installed (3.8 or higher is recommended), then simply run:

```bash
pip install libretranslate
libretranslate [args]
```

Then open a web browser to http://localhost:5000
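Once a server is running, a client can check which languages it offers before sending translation requests (useful, for instance, when the server was started with a restricted language set). The sketch below is an illustration under assumptions: `fetchLanguages` and `supportsPair` are hypothetical helpers, and it assumes `GET /languages` returns an array of `{ code, name, targets }` objects, falling back to "any listed language" when `targets` is missing.

```javascript
// Minimal sketch: query a LibreTranslate server for its languages and check
// whether a source -> target pair is offered. Assumes GET /languages returns
// an array of { code, name, targets } objects (`targets` may be absent on
// some versions). Assumes a global `fetch` unless one is injected.

async function fetchLanguages(baseUrl = "http://localhost:5000", fetchImpl = fetch) {
  const res = await fetchImpl(`${baseUrl}/languages`);
  if (!res.ok) throw new Error(`GET /languages failed with status ${res.status}`);
  return res.json();
}

// Returns true if `source` -> `target` appears to be supported.
function supportsPair(languages, source, target) {
  const src = languages.find((l) => l.code === source);
  if (!src) return false;
  // Without a `targets` list, fall back to "target is a known language".
  if (!Array.isArray(src.targets)) return languages.some((l) => l.code === target);
  return src.targets.includes(target);
}
```

A typical call is `fetchLanguages().then((langs) => supportsPair(langs, "en", "es"))` against a local instance on the default port.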

On Ubuntu 20.04 you can also use the install script available at https://github.com/argosopentech/LibreTranslate-init

You can also run the application with Docker. On Linux/macOS:

```bash
./run.sh [args]
```

On Windows:

```bat
run.bat [args]
```

See CONTRIBUTING.md for information on how to build and run the project yourself.

You can use hardware acceleration to speed up translations on a GPU machine with CUDA 11.2 and nvidia-docker installed.

Run this version with:

```bash
docker compose -f docker-compose.cuda.yml up -d --build
```

Arguments passed to the process or set via environment variables are split into two kinds:

- Settings or runtime flags, used to toggle specific run modes or disable parts of the application. These act as toggles: adding the flag enables the behavior, and removing it restores the default.

- Configuration parameters, used to set various limits and configure the application. These require a value to be passed; if the argument is omitted, the default value is used.

| Argument | Description | Default Setting | Env. name |
|---|---|---|---|
| `--debug` | Enable debug environment | Disabled | `LT_DEBUG` |
| `--ssl` | Whether to enable SSL | Disabled | `LT_SSL` |
| `--api-keys` | Enable API keys database for per-client rate limits when `--req-limit` is reached | Don't use API keys | `LT_API_KEYS` |
| `--require-api-key-origin` | Require use of an API key for programmatic access to the API, unless the request origin matches this domain | No restrictions on domain origin | `LT_REQUIRE_API_KEY_ORIGIN` |
| `--require-api-key-secret` | Require use of an API key for programmatic access to the API, unless the client also sends a matching secret | No secrets required | `LT_REQUIRE_API_KEY_SECRET` |
| `--suggestions` | Allow user suggestions | Disabled | `LT_SUGGESTIONS` |
| `--disable-files-translation` | Disable file translation | File translation allowed | `LT_DISABLE_FILES_TRANSLATION` |
| `--disable-web-ui` | Disable web UI | Web UI enabled | `LT_DISABLE_WEB_UI` |
| `--update-models` | Update language models at startup | Only if no models are found | `LT_UPDATE_MODELS` |
| `--metrics` | Enable the `/metrics` endpoint for exporting Prometheus usage metrics | Disabled | `LT_METRICS` |

| Argument | Description | Default Parameter | Env. name |
|---|---|---|---|
| `--host` | Set host to bind the server to | `127.0.0.1` | `LT_HOST` |
| `--port` | Set port to bind the server to | `5000` | `LT_PORT` |
| `--char-limit` | Set character limit | No limit | `LT_CHAR_LIMIT` |
| `--req-limit` | Set maximum number of requests per minute per client (outside of limits set by API keys) | No limit | `LT_REQ_LIMIT` |
| `--req-limit-storage` | Storage URI to use for request limit data storage. See Flask-Limiter | `memory://` | `LT_REQ_LIMIT_STORAGE` |
| `--req-time-cost` | Considers a time cost (in seconds) for request limiting purposes. If a request takes 10 seconds and this value is set to 5, the request cost is either 2 or the actual request cost (whichever is greater). | No time cost | `LT_REQ_TIME_COST` |
| `--batch-limit` | Set maximum number of texts to translate in a batch request | No limit | `LT_BATCH_LIMIT` |
| `--ga-id` | Enable Google Analytics on the API client page by providing an ID | Empty (no tracking) | `LT_GA_ID` |
| `--frontend-language-source` | Set frontend default language (source) | `auto` | `LT_FRONTEND_LANGUAGE_SOURCE` |
| `--frontend-language-target` | Set frontend default language (target) | `locale` (match site's locale) | `LT_FRONTEND_LANGUAGE_TARGET` |
| `--frontend-timeout` | Set frontend translation timeout | `500` | `LT_FRONTEND_TIMEOUT` |
| `--api-keys-db-path` | Use a specific path inside the container for the local database. Can be absolute or relative | `db/api_keys.db` | `LT_API_KEYS_DB_PATH` |
| `--api-keys-remote` | Use this remote endpoint to query for valid API keys instead of using the local database | Empty (use local db instead) | `LT_API_KEYS_REMOTE` |
| `--get-api-key-link` | Show a link in the UI that directs users to where they can get an API key | Empty (no link shown on web UI) | `LT_GET_API_KEY_LINK` |
| `--shared-storage` | Shared storage URI to use for multi-process data sharing (e.g. when using Gunicorn) | `memory://` | `LT_SHARED_STORAGE` |
| `--secondary` | Mark this instance as a secondary instance to avoid conflicts with the primary node in multi-node setups | Primary node | `LT_SECONDARY` |
| `--load-only` | Set available languages | Empty (use all from argostranslate) | `LT_LOAD_ONLY` |
| `--threads` | Set number of threads | `4` | `LT_THREADS` |
| `--metrics-auth-token` | Protect the `/metrics` endpoint by allowing only clients that have a valid Authorization Bearer token | Empty (no auth required) | `LT_METRICS_AUTH_TOKEN` |
| `--url-prefix` | Add prefix to URL: example.com:5000/url-prefix/ | `/` | `LT_URL_PREFIX` |

- Each argument has an equivalent environment variable that can be used instead. Environment variables override the default values but have lower priority than command-line arguments, and are particularly useful with Docker. The environment variable name is the upper-snake-case version of the argument's name with an `LT_` prefix (for example, `--req-limit` becomes `LT_REQ_LIMIT`).

- To require an API key for every request, set `--req-limit` to `0` and add the `--api-keys` flag. Requests made without a valid API key will be rejected.

- Setting `--update-models` will update models regardless of whether updates are available or not.
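The naming rule and precedence described above can be modelled in a few lines. This is an illustrative sketch, not LibreTranslate's actual argument parser: `envNameFor` and `resolveSetting` are hypothetical names, and values are returned as raw strings, without the type conversion a real parser performs.

```javascript
// Illustrative sketch (hypothetical helpers, not LibreTranslate's parser).

// Documented naming rule: drop the leading dashes, upper-snake-case the
// argument name and add the LT_ prefix.
function envNameFor(flag) {
  return "LT_" + flag.replace(/^-+/, "").replace(/-/g, "_").toUpperCase();
}

// Documented precedence: command-line argument > environment variable > default.
// `cliArgs` maps flags to values; `env` is an object such as process.env.
function resolveSetting(flag, cliArgs, env, defaultValue) {
  if (Object.prototype.hasOwnProperty.call(cliArgs, flag)) return cliArgs[flag];
  const envValue = env[envNameFor(flag)];
  if (envValue !== undefined) return envValue; // raw string, not type-converted
  return defaultValue;
}
```

For example, `envNameFor("--api-keys-db-path")` yields `LT_API_KEYS_DB_PATH`, matching the table above.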

To update the software, if you installed with pip:

```bash
pip install -U libretranslate
```

If you're using Docker:

```bash
docker pull libretranslate/libretranslate
```

To update the language models, start the program with the `--update-models` argument, for example `libretranslate --update-models` or `./run.sh --update-models`.

Alternatively, you can run the `scripts/install_models.py` script.

To run LibreTranslate with Gunicorn:

```bash
pip install gunicorn
gunicorn --bind 0.0.0.0:5000 'wsgi:app'
```

You can pass application arguments directly to Gunicorn via:

```bash
gunicorn --bind 0.0.0.0:5000 'wsgi:app(api_keys=True)'
```

For Kubernetes deployments, see the Medium article by JM Robles and the improved k8s.yaml by @rasos.

Based on @rasos' work, you can now install LibreTranslate on Kubernetes using Helm.

A Helm chart is now available in the helm-chart repository, where you can find more details.

You can quickly install LibreTranslate on Kubernetes using Helm with the following commands:

```bash
helm repo add libretranslate https://libretranslate.github.io/helm-chart/
helm repo update
helm search repo libretranslate
helm install libretranslate libretranslate/libretranslate --namespace libretranslate --create-namespace
```

LibreTranslate supports per-user limit quotas: for example, you can issue API keys to users so that they can enjoy higher request limits per minute (if you also set `--req-limit`). By default, all users are rate-limited based on `--req-limit`, but passing an optional `api_key` parameter to the REST endpoints allows a user to enjoy higher request limits. You can also specify, on a per-key basis, character limits that bypass the default `--char-limit` value.
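The following sketch models how these limits interact, purely as an illustration: `effectiveLimits`, `requestCost` and `isAllowed` are hypothetical helpers, a `Map` stands in for the API keys database, "no limit" would be represented as `Infinity`, and rounding the `--req-time-cost` quotient down to a whole number is an assumption.

```javascript
// Illustrative model only (hypothetical names, not LibreTranslate's code).
// keyDb maps an API key to { reqLimit, charLimit }, as issued for example by
// `ltmanage keys add 120 --char-limit 5000`. "No limit" = Infinity.

function effectiveLimits(apiKey, keyDb, defaults) {
  const entry = apiKey ? keyDb.get(apiKey) : undefined;
  return {
    // A valid key replaces the default --req-limit (requests per minute).
    reqLimit: entry ? entry.reqLimit : defaults.reqLimit,
    // A per-key character limit bypasses the default --char-limit.
    charLimit: entry && entry.charLimit != null ? entry.charLimit : defaults.charLimit,
  };
}

// --req-time-cost: cost is max(actual cost, duration / timeCost).
// Assumption: the time-based quotient is rounded down to a whole number.
function requestCost(actualCost, durationSeconds, timeCost) {
  if (!timeCost || timeCost <= 0) return actualCost; // feature disabled
  return Math.max(actualCost, Math.floor(durationSeconds / timeCost));
}

// With --req-limit 0 and no valid key, every request is rejected.
function isAllowed(usedThisMinute, cost, reqLimit) {
  return usedThisMinute + cost <= reqLimit;
}
```

The case from the table, a 10-second request with `--req-time-cost 5`, gives `requestCost(1, 10, 5) === 2`.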

To use API keys, simply start LibreTranslate with the `--api-keys` option. If you changed the API keys database path with the `--api-keys-db-path` option, you must pass the same path with the same flag when using the `ltmanage keys` command.

To issue a new API key with a limit of 120 requests per minute:

```bash
ltmanage keys add 120
```

To issue a new API key with a limit of 120 requests per minute and a maximum of 5,000 characters per request:

```bash
ltmanage keys add 120 --char-limit 5000
```

If you changed the API keys database path:

```bash
ltmanage keys --api-keys-db-path path/to/db/dbName.db add 120
```

To remove a key:

```bash
ltmanage keys remove <api-key>
```

To view existing keys:

```bash
ltmanage keys
```

LibreTranslate has Prometheus exporter capabilities when you pass the `--metrics` argument at startup (disabled by default). When metrics are enabled, a `/metrics` endpoint is mounted on the instance:

```
# HELP libretranslate_http_requests_in_flight Multiprocess metric
# TYPE libretranslate_http_requests_in_flight gauge
libretranslate_http_requests_in_flight{api_key="",endpoint="/translate",request_ip="127.0.0.1"} 0.0
# HELP libretranslate_http_request_duration_seconds Multiprocess metric
# TYPE libretranslate_http_request_duration_seconds summary
libretranslate_http_request_duration_seconds_count{api_key="",endpoint="/translate",request_ip="127.0.0.1",status="200"} 0.0
libretranslate_http_request_duration_seconds_sum{api_key="",endpoint="/translate",request_ip="127.0.0.1",status="200"} 0.0
```

You can then configure prometheus.yml to read the metrics:

```yaml
scrape_configs:
  - job_name: "libretranslate"

    # Needed only if you use --metrics-auth-token
    #authorization:
      #credentials: "mytoken"

    static_configs:
      - targets: ["localhost:5000"]
```

To secure the `/metrics` endpoint, you can also use `--metrics-auth-token mytoken`.
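A monitoring script can also read the endpoint directly. This minimal sketch assumes a global `fetch` (Node.js 18+), sends the `--metrics-auth-token` value as a Bearer token, and uses a deliberately simplified parser (hypothetical `parseMetrics` and `fetchMetrics` helpers) that handles only plain `name{labels} value` lines; for real monitoring, Prometheus itself is the better tool.

```javascript
// Minimal sketch (hypothetical helpers): fetch /metrics, optionally with a
// Bearer token, and parse simple Prometheus text-format samples.
// Simplified: no escaped quotes or commas inside label values, no timestamps.

function parseMetrics(text) {
  const samples = [];
  for (const raw of text.split("\n")) {
    const line = raw.trim();
    if (!line || line.startsWith("#")) continue; // skip blank and HELP/TYPE lines
    const m = line.match(/^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)$/);
    if (!m) continue;
    const labels = {};
    for (const pair of (m[2] ?? "").split(",")) {
      const kv = pair.match(/^\s*([a-zA-Z_][a-zA-Z0-9_]*)="(.*)"\s*$/);
      if (kv) labels[kv[1]] = kv[2];
    }
    samples.push({ name: m[1], labels, value: Number(m[3]) });
  }
  return samples;
}

async function fetchMetrics(baseUrl, token, fetchImpl = fetch) {
  // Token corresponds to the value passed with --metrics-auth-token.
  const headers = token ? { Authorization: `Bearer ${token}` } : {};
  const res = await fetchImpl(`${baseUrl}/metrics`, { headers });
  if (!res.ok) throw new Error(`GET /metrics failed with status ${res.status}`);
  return parseMetrics(await res.text());
}
```

For example, `fetchMetrics("http://localhost:5000", "mytoken")` would resolve to an array of `{ name, labels, value }` objects such as the `libretranslate_http_requests_in_flight` gauge shown above.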

If you use Gunicorn, make sure to create a directory for storing multiprocess metrics data and set `PROMETHEUS_MULTIPROC_DIR`:

```bash
mkdir -p /tmp/prometheus_data
# Clear stale data from previous runs (-f avoids an error when the directory is empty)
rm -f /tmp/prometheus_data/*
export PROMETHEUS_MULTIPROC_DIR=/tmp/prometheus_data
gunicorn -c scripts/gunicorn_conf.py --bind 0.0.0.0:5000 'wsgi:app(metrics=True)'
```

The following language bindings are available for the LibreTranslate API:

- Rust: https://github.com/DefunctLizard/libretranslate-rs

- Node.js: https://github.com/franciscop/translate

- TypeScript: https://github.com/tderflinger/libretranslate-ts

- .Net: https://github.com/sigaloid/LibreTranslate.Net

- Go: https://github.com/SnakeSel/libretranslate

- Python: https://github.com/argosopentech/LibreTranslate-py

- PHP: https://github.com/jefs42/libretranslate

- C++: https://github.com/argosopentech/LibreTranslate-cpp

- Swift: https://github.com/wacumov/libretranslate

- Unix: https://github.com/argosopentech/LibreTranslate-sh

- Shell: https://github.com/Hayao0819/Hayao-Tools/tree/master/libretranslate-sh

- Java: https://github.com/suuft/libretranslate-java

- Ruby: https://github.com/noesya/libretranslate

- R: https://github.com/myanesp/libretranslateR

You can use the official Discourse translator plugin to translate Discourse topics with LibreTranslate. To install it, modify `/var/discourse/containers/app.yml`:

```yaml
## Plugins go here
## see https://meta.discourse.org/t/19157 for details
hooks:
  after_code:
    - exec:
        cd: $home/plugins
        cmd:
          - git clone https://github.com/discourse/docker_manager.git
          - git clone https://github.com/discourse/discourse-translator
          ...
```

Then run `./launcher rebuild app`. From Discourse's admin panel, select "LibreTranslate" as the translation provider and set the relevant endpoint configuration.

See it in action on this page.

- LibreTranslator is an Android app available on the Play Store and in the F-Droid store that uses the LibreTranslate API.

- LiTranslate is an iOS app available on the App Store that uses the LibreTranslate API.

- minbrowser is a web browser with integrated LibreTranslate support.

- A LibreTranslate Firefox addon is currently a work in progress.

Below is a list of public LibreTranslate instances; some require an API key. If you want to add a new URL, please open a pull request.

| URL | API Key Required | Links |
|---|---|---|
| libretranslate.com | ✔️ | [ Get API Key ] [ Service Status ] |
| translate.terraprint.co | - | |
| trans.zillyhuhn.com | - | |
| translate.lotigara.ru | - | |

Tor and I2P instances:

| URL |
|---|
| lt.vernccvbvyi5qhfzyqengccj7lkove6bjot2xhh5kajhwvidqafczrad.onion |
| lt.vern.i2p |

You have two options to create new language models:

Most of the training data is from Opus, which is an open source parallel corpus. Check also NLLU

The LibreTranslate Web UI is available in all the languages that LibreTranslate can translate to. It can also (roughly) translate itself! Some languages might not appear in the UI because they haven't been reviewed by a human yet. You can enable all languages by turning on `--debug` mode.
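The visibility rule just described can be summarized as a simple filter. This is a hypothetical helper for illustration, not the code LibreTranslate uses; it assumes each locale's `meta.json` (with its `name` and `reviewed` fields) has already been loaded into an object keyed by language code.

```javascript
// Illustrative sketch (hypothetical helper): a UI language is listed only if
// its meta.json has "reviewed": true, unless debug mode shows everything.
function visibleUiLanguages(metas, debug = false) {
  return Object.entries(metas)
    .filter(([, meta]) => debug || meta.reviewed === true)
    .map(([code, meta]) => ({ code, name: meta.name }));
}
```

For instance, with `{ fi: { name: "Finnish", reviewed: false } }` the Finnish entry is hidden unless `debug` is `true`.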

To help improve or review the UI translations:

- Go to https://hosted.weblate.org/projects/libretranslate/app/#translations. All changes are automatically pushed to this repository.

- Once all strings have been reviewed/edited, open a pull request that changes `libretranslate/locales/{code}/meta.json`, setting `reviewed` from `false` to `true`:

```json
{
  "name": "<Language>",
  "reviewed": true
}
```

| Language | Reviewed | Weblate Link |
|---|---|---|
| Arabic | | Edit |
| Azerbaijani | | Edit |
| Chinese | | Edit |
| Chinese (Traditional) | | Edit |
| Czech | ✔️ | Edit |
| Danish | | Edit |
| Dutch | | Edit |
| English | ✔️ | Edit |
| Esperanto | ✔️ | Edit |
| Finnish | | Edit |
| French | ✔️ | Edit |
| German | ✔️ | Edit |
| Greek | | Edit |
| Hebrew | | Edit |
| Hindi | | Edit |
| Hungarian | | Edit |
| Indonesian | | Edit |
| Irish | | Edit |
| Italian | ✔️ | Edit |
| Japanese | | Edit |
| Kabyle | | Edit |
| Korean | ✔️ | Edit |
| Occitan | | Edit |
| Persian | | Edit |
| Polish | | Edit |
| Portuguese | ✔️ | Edit |
| Russian | ✔️ | Edit |
| Slovak | | Edit |
| Spanish | ✔️ | Edit |
| Swedish | | Edit |
| Turkish | | Edit |
| Ukrainian | ✔️ | Edit |
| Vietnamese | | Edit |

Help us by opening a pull request!

- Language bindings for every computer language

- Improved translations

Any other idea is welcome also.

Using the API server at libretranslate.com for your application in production is possible, but only if you buy an API key. You can, of course, always run LibreTranslate for free on your own server.

By default, language models are loaded from the argos-index. Sometimes we deploy models on libretranslate.com that haven't been added to the argos-index yet, such as those converted from OPUS (thread).

Language models are stored in `$HOME/.local/share/argos-translate/packages`. On Windows that's `C:\Users\youruser\.local\share\argos-translate\packages`.

LibreTranslate can run behind a reverse proxy. Here are config examples for Apache2, Caddy and NGINX that redirect a subdomain (with an HTTPS certificate) to LibreTranslate running in a Docker container on localhost.

```bash
sudo docker run -ti --rm -p 127.0.0.1:5000:5000 libretranslate/libretranslate
```

You can remove `127.0.0.1` from the above command if you want to be able to access it from domain.tld:5000, in addition to subdomain.domain.tld (this can be helpful to determine whether an issue lies with Apache2 or with the Docker container).

Add `--restart unless-stopped` if you want this container to start on boot unless manually stopped; in that case drop `--rm`, since Docker does not allow combining it with that restart policy.

Apache config

Replace [YOUR_DOMAIN] with your full domain; for example, translate.domain.tld or libretranslate.domain.tld.

Remove # on the ErrorLog and CustomLog lines to log requests.

```apache
#Libretranslate

#Redirect http to https
<VirtualHost *:80>
    ServerName http://[YOUR_DOMAIN]
    # The trailing slash keeps request paths intact (e.g. /translate -> https://[YOUR_DOMAIN]/translate)
    Redirect / https://[YOUR_DOMAIN]/

    # ErrorLog ${APACHE_LOG_DIR}/error.log
    # CustomLog ${APACHE_LOG_DIR}/tr-access.log combined
</VirtualHost>

#https
<VirtualHost *:443>
    ServerName https://[YOUR_DOMAIN]

    ProxyPass / http://127.0.0.1:5000/
    ProxyPassReverse / http://127.0.0.1:5000/
    ProxyPreserveHost On

    SSLEngine on
    SSLCertificateFile /etc/letsencrypt/live/[YOUR_DOMAIN]/fullchain.pem
    SSLCertificateKeyFile /etc/letsencrypt/live/[YOUR_DOMAIN]/privkey.pem
    SSLCertificateChainFile /etc/letsencrypt/live/[YOUR_DOMAIN]/fullchain.pem

    # ErrorLog ${APACHE_LOG_DIR}/tr-error.log
    # CustomLog ${APACHE_LOG_DIR}/tr-access.log combined
</VirtualHost>
```

Add this to an existing site config, or to a new file such as `/etc/apache2/sites-available/new-site.conf`, and run `sudo a2ensite new-site.conf`. The `proxy`, `proxy_http` and `ssl` modules must be enabled (for example with `sudo a2enmod proxy proxy_http ssl`), and Apache must be reloaded afterwards (for example with `sudo systemctl reload apache2`).

To get an HTTPS certificate for the subdomain, install certbot (snap), run `sudo certbot certonly --manual --preferred-challenges dns` and enter your information (with subdomain.domain.tld as the domain). Add a DNS TXT record with your domain registrar when asked. This will save your certificate and key to `/etc/letsencrypt/live/{subdomain.domain.tld}/`. Alternatively, comment out the SSL lines if you don't want to use HTTPS.

Caddy config

Replace [YOUR_DOMAIN] with your full domain; for example, translate.domain.tld or libretranslate.domain.tld.

```
#Libretranslate
[YOUR_DOMAIN] {
  reverse_proxy localhost:5000
}
```

Add this to an existing Caddyfile, or save it as `Caddyfile` in any directory and run `sudo caddy reload` in that same directory.

NGINX config

Replace [YOUR_DOMAIN] with your full domain; for example, translate.domain.tld or libretranslate.domain.tld.

Remove # on the access_log and error_log lines to disable logging.

```nginx
server {
  listen 80;
  server_name [YOUR_DOMAIN];
  return 301 https://$server_name$request_uri;
}

server {
  listen 443 http2 ssl;
  server_name [YOUR_DOMAIN];

  #access_log off;
  # Note: "error_log off" would log to a file named "off"; this discards errors instead
  #error_log /dev/null crit;

  # SSL Section
  ssl_certificate /etc/letsencrypt/live/[YOUR_DOMAIN]/fullchain.pem;
  ssl_certificate_key /etc/letsencrypt/live/[YOUR_DOMAIN]/privkey.pem;

  ssl_protocols TLSv1.2 TLSv1.3;

  # Using the recommended cipher suite from: https://wiki.mozilla.org/Security/Server_Side_TLS
  ssl_ciphers 'ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:DHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384';

  ssl_session_timeout 10m;
  ssl_session_cache shared:MozSSL:10m;  # about 40000 sessions
  ssl_session_tickets off;

  # Specifies a curve for ECDHE ciphers.
  ssl_ecdh_curve prime256v1;
  # Server should determine the ciphers, not the client
  ssl_prefer_server_ciphers on;

  # Header section
  add_header Strict-Transport-Security "max-age=31536000; includeSubDomains; preload" always;
  add_header Referrer-Policy "strict-origin" always;

  add_header X-Frame-Options "SAMEORIGIN" always;
  add_header X-XSS-Protection "1; mode=block" always;
  add_header X-Content-Type-Options "nosniff" always;
  add_header X-Download-Options "noopen" always;
  add_header X-Robots-Tag "none" always;

  add_header Feature-Policy "microphone 'none'; camera 'none'; geolocation 'none';" always;
  # Newer header but not everywhere supported
  add_header Permissions-Policy "microphone=(), camera=(), geolocation=()" always;

  # Remove X-Powered-By, which is an information leak
  # (proxy_hide_header applies to proxy_pass responses; fastcgi_hide_header only affects FastCGI)
  proxy_hide_header X-Powered-By;

  # Do not send nginx server header
  server_tokens off;

  # GZIP Section
  gzip on;
  gzip_disable "msie6";

  gzip_vary on;
  gzip_proxied any;
  gzip_comp_level 6;
  gzip_buffers 16 8k;
  gzip_http_version 1.1;
  gzip_min_length 256;
  gzip_types text/xml text/javascript font/ttf font/eot font/otf application/x-javascript application/atom+xml application/javascript application/json application/manifest+json application/rss+xml application/x-web-app-manifest+json application/xhtml+xml application/xml image/svg+xml image/x-icon text/css text/plain;

  location / {
    proxy_pass http://127.0.0.1:5000/;
    proxy_set_header Host $http_host;
    proxy_set_header X-Real-IP $remote_addr;
    proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    proxy_set_header X-Forwarded-Proto $scheme;
    client_max_body_size 0;
  }
}
```

Add this to an existing NGINX config, or save it as `libretranslate` in the `/etc/nginx/sites-enabled` directory, and run `sudo nginx -s reload`.

Batch translations are supported: pass an array of strings instead of a string to the `q` field:

```javascript
const res = await fetch("https://libretranslate.com/translate", {
  method: "POST",
  body: JSON.stringify({
    q: ["Hello", "world"],
    source: "en",
    target: "es"
  }),
  headers: { "Content-Type": "application/json" }
});

console.log(await res.json());
// {
//   "translatedText": [
//     "Hola",
//     "mundo"
//   ]
// }
```

We welcome contributions! Here are some ideas:

- Train a new language model using Locomotive. For example, we want to train improved neural networks for German and many other languages.

- Can you beat the performance of our language models? Train a new one and let's compare it. To submit your model, make a post on the community forum with a link to download your .argosmodel file and some sample text that your model has translated.

- Pick an issue to work on.

This work is largely possible thanks to Argos Translate, which powers the translation engine.