Open manipulator application packages.

2019 R-BIZ ROBOTIS Open Manipulator Challenge.

Auturbo team potman

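First install the OpenManipulator-X ROS packages and applications by following the ROBOTIS e-Manual:

http://emanual.robotis.com/docs/en/platform/openmanipulator_x/ros_setup/#install-ros-packages

http://emanual.robotis.com/docs/en/platform/openmanipulator_x/ros_applications/#installation-1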

$ sudo apt-get install ros-kinetic-jsk-recognition

$ sudo apt-get install ros-kinetic-jsk-topic-tools

$ sudo apt-get install ros-kinetic-libsiftfast

$ sudo apt-get install ros-kinetic-laser-assembler

$ sudo apt-get install ros-kinetic-octomap-server

$ sudo apt-get install ros-kinetic-nodelet

$ sudo apt-get install ros-kinetic-depth-image-proc

$ cd ~/catkin_ws/src/

$ git clone https://github.com/jsk-ros-pkg/jsk_common.git

$ cd ~/catkin_ws && catkin_make

$ cd ~/catkin_ws/src/

$ git clone --recursive https://github.com/minwoominwoominwoo7/darknet_ros.git

$ git clone https://github.com/AuTURBO/open_manipulator_potman.git

$ git clone https://github.com/minwoominwoominwoo7/open_manipulator_gazebo_potman.git

$ cd ~/catkin_ws && catkin_make

For voice and button control, install the following application on your phone.

https://github.com/minwoominwoominwoo7/ros-app-connectedphone/blob/master/android_pubCommandVoice-debug.apk

For face tracking, install the following two applications on your phone.

https://github.com/minwoominwoominwoo7/ros-app-connectedphone/blob/master/android_pubFaceTracker-debug.apk

https://github.com/minwoominwoominwoo7/facetracker-app-connectedphone/blob/master/facetracker.apk

facetracker.apk performs face detection and broadcasts the coordinates of the detected face to android_pubFaceTracker-debug.apk.

android_pubFaceTracker-debug.apk is a ROS Android application.

It sends the face detection data to the ROS PC over Wi-Fi.

roslaunch open_manipulator_controller open_manipulator_controller.launch

roslaunch open_manipulator_potman yolo_jsk_pose.launch camera_model:=realsense_d435

roslaunch open_manipulator_potman potman.launch

roslaunch open_manipulator_potman key_command.launch

Step1. Tap the android_pubCommandVoice-debug.apk icon.

Step2. Enter the IP address of the PC running the ROS core, then press the connection button.

Step3. Control the open manipulator by voice or with the buttons in the application.

You can also control the open manipulator without the application by typing keys in the terminal running key_command.launch on the PC.

Step1. Tap the android_pubFaceTracker-debug.apk icon.

Step2. Enter the IP address of the PC running the ROS core, then press the connection button.

Step3. Press the Home key so the application keeps running in the background. Never press the Back key.

Step4. Tap the facetracker.apk icon.

Only the largest face detected by the application is displayed on the camera preview screen.

There are 9 modes. You can select them from the PC (the terminal running key_command.launch) or from the phone (voice control or buttons).

The robot arm (open manipulator) is moved through fixed joint positions that are read from a saved file.

We use the Open Manipulator Control Package to drive the open manipulator in joint-control mode.

Instructions for saving a joint movement file are at the bottom of the page.
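Replaying a movement file can be sketched as below. This is only an illustration: the file layout (one waypoint per line, values separated by commas or spaces) and the helper name `load_joint_waypoints` are assumptions, not the format actually written by this project, so check the real files before relying on it.

```python
import io


def load_joint_waypoints(stream):
    """Parse one waypoint per line (hypothetical layout, not the project's confirmed format)."""
    waypoints = []
    for raw in stream:
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comment lines
        # Accept either commas or whitespace between values.
        values = [float(token) for token in line.replace(",", " ").split()]
        waypoints.append(values)
    return waypoints


# Hypothetical content: four joint angles (rad) and a gripper value per line.
sample = io.StringIO(
    "0.0, -1.05, 0.35, 0.70, 0.01\n"
    "0.5, -0.20, 0.10, 0.30, -0.01\n"
)
waypoints = load_joint_waypoints(sample)
for index, waypoint in enumerate(waypoints):
    print("waypoint", index, waypoint)
```

In a real node, each parsed waypoint would then be sent in order to the controller's joint-space interface, with a pause between waypoints so the arm can reach each pose.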

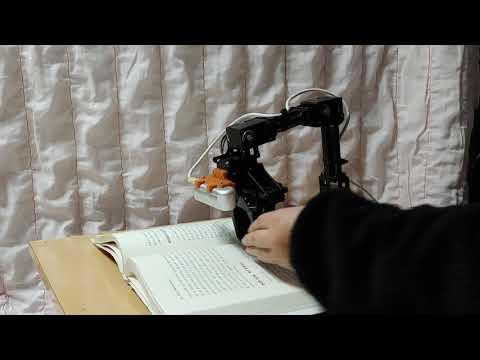

Click image to link to YouTube video.

Used Packages

- We use the Darknet ROS Package to detect the cup object. The model used is YOLOv3.

- We use the JSK Package to calculate the 3D position.

- We use the Open Manipulator Control Package to control the open manipulator by inverse kinematics.
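The step from a 2D detection to a 3D target can be illustrated with the standard pinhole camera model. The sketch below is not the JSK implementation. The function names, the camera intrinsics, and the bounding box values are all hypothetical and chosen only for illustration.

```python
def bbox_center(xmin, ymin, xmax, ymax):
    """Return the pixel at the center of a detection bounding box."""
    return (xmin + xmax) / 2.0, (ymin + ymax) / 2.0


def deproject(u, v, depth_m, fx, fy, cx, cy):
    """Convert pixel (u, v) with depth in meters to a 3D point in the camera frame."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return x, y, depth_m


# Hypothetical intrinsics for a 640x480 image; read the real values from the
# camera's calibration (for example its camera_info topic).
FX, FY, CX, CY = 600.0, 600.0, 320.0, 240.0

# Hypothetical bounding box of a detected cup, and its depth reading in meters.
u, v = bbox_center(300, 200, 380, 320)
point = deproject(u, v, 0.5, FX, FY, CX, CY)
print(point)
```

The resulting point is expressed in the camera frame. It still has to be transformed into the manipulator's base frame before it can serve as an inverse kinematics target.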

The robot arm (open manipulator) is moved through fixed joint positions that are read from a saved file.

We use the Open Manipulator Control Package to drive the open manipulator in joint-control mode.

Instructions for saving a joint movement file are at the bottom of the page.

Click image to link to YouTube video.

Face detection uses LargestFaceFocusingProcessor together with com.google.android.gms.vision.face.FaceDetector.

https://developers.google.com/android/reference/com/google/android/gms/vision/face/LargestFaceFocusingProcessor
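The idea of following only the largest face can be sketched as a simple selection by bounding box area. This is a simplified illustration, not the Android implementation: the real processor keeps tracking the face it first selected for as long as that face stays visible. The dictionary layout and the `largest_face` helper are hypothetical.

```python
def largest_face(faces):
    """Return the face with the largest bounding box area, or None if there are none."""
    if not faces:
        return None
    return max(faces, key=lambda face: face["width"] * face["height"])


# Hypothetical detections: position and size in pixels.
faces = [
    {"id": 1, "x": 40, "y": 60, "width": 80, "height": 90},
    {"id": 2, "x": 200, "y": 50, "width": 120, "height": 130},
]

target = largest_face(faces)
if target is not None:
    # The center of the selected face is what a tracker would follow.
    center_x = target["x"] + target["width"] / 2.0
    center_y = target["y"] + target["height"] / 2.0
    print(target["id"], center_x, center_y)
```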

Click image to link to YouTube video.

roslaunch open_manipulator_gazebo_potman open_manipulator_gazebo_potman.launch

roslaunch open_manipulator_controller open_manipulator_controller.launch use_platform:=false

roslaunch open_manipulator_potman yolo_jsk_pose.launch camera_model:=realsense_d435 use_platform:=false

roslaunch open_manipulator_potman potman.launch use_platform:=false

roslaunch open_manipulator_potman key_command.launch

Click image to link to YouTube video.

- Start Pour and Serve Food (mode 3) are fixed movements loaded from files.

- Start Pour uses https://github.com/AuTURBO/open_manipulator_potman/blob/master/cfg/output_pour.txt

- Serve Food uses https://github.com/AuTURBO/open_manipulator_potman/blob/master/cfg/output_serve.txt

You can create a movement file with open_manipulator_save_and_load:

https://github.com/minwoominwoominwoo7/open_manipulator_save_and_load
