This project is part of the semester project for the course of Robotics & Vision II of the Master in Advanced robotics at SDU (Project report)(Video). It covers the pose estimation section in which the goal is to detect the object of interest in a scene point cloud in order for the UR arm to pick it. The scene point cloud is provided by a Intel Realsense D435 stereo-camera.

The pose estimation proces has multiple stages, (As shown in the picture below). For a reasoning about the different steps, please check the project report.

This project has been tested in Ubuntu 20.04.1 LTS and ROS Neotic.

Since this program was integrated in a bigger project, there are some dependencies to the RobWork library. Mostly regarding operations with Transformation matrices.

Additional steps for the camera installation.

We need ROS for the communication between the camera and the pose estimaton nodes.

-

Install ROS package for RGB-D devices:

$ sudo apt-get install ros-noetic-rgbd-launch - Build catkin workspace in the repository folder:

$ cd /path/to/repository/folder

$ catkin_make In order to run the pose estimation process, first we have to source the catkin workspace:

$ source devel/setup.bash The program offers two main options, we can get the point cloud from the camera via ROS or we can load a point cloud (.pcd) saved in disk.

$ rosrun vision pose_estimation -h

This program estimates the position of the piece in the scene.

Options:

-h,--help Show this help message

-s,--save Save file eg: -c -s test.pcd

-l,--load Load file eg: -l Pointcloud_samples/test.pcd

-c,--camera enable ros node and real time camera If we want to use the ROS option, first we have to initialize the roscore.

$ roscoreThen we can launch the camera node that will publish the point cloud data.

$ roslaunch realsense2_camera rs_rgbd.launch

The last step consists on starting the pose estimation node. This node will subscribe to the camera node in order to get the scene information. The scene will be visualized, showing a live update of the pointcloud. Once we have the scene ready, we can close the visualizer. This will save the last scene shown in it and the program will proceed with the pose estimation.

$ rosrun vision pose_estimation -cWe can test the load functionality with:

$ rosrun vision pose_estimation -l utilities/scene_sample.pcdIn case we want to estimate the pose of a different object, we need to go to /src/vision/src*pose_estimation.cpp line 85 and change the object path with the path to the point cloud (.pcd) with the new object of interest.

85 utilities.load_object_of_interest(object, "./utilities/object.pcd");After updating the code, we have to rebuild the catkin workspace.

The whole process should go as follows:

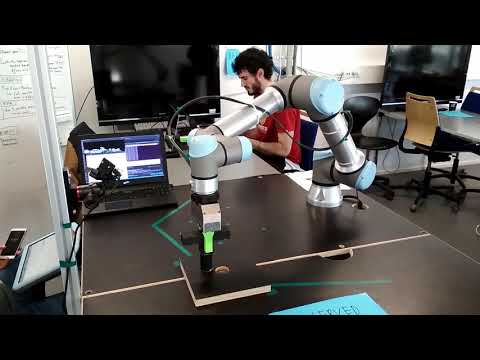

Video of the whole ROVI II project performing the pose estimation plus the forced based insertion: