This repository implements filtering-based localization methods that fuse data from sensors such as wheel encoders, GPS, IMU, and cameras. The goal is accurate robot localization in both indoor and outdoor environments. It targets differential-drive wheeled mobile robots but can be extended to other robot configurations as well.

- Integration of multiple sensors for robust localization.

- Utilization of Docker for streamlined deployment and reproducibility.

- Developed using ROS 2 for enhanced compatibility and flexibility.

The current demo showcases the localization system in action, visualized using Gazebo, a powerful physics-based simulation tool.

You should already have Docker and Docker Compose installed on your system. If you are using the VSCode option, you also need VSCode with the Remote Containers plugin.
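A quick way to confirm the command-line prerequisites is to check that they are on your `PATH`. The sketch below is only an illustration: `check_tool` is a hypothetical helper name, not part of this repo, and it only tests that the commands exist, not that their versions are suitable.

```shell
#!/bin/sh
# Hypothetical helper: report whether a command is available on PATH.
check_tool() {
    if command -v "$1" >/dev/null 2>&1; then
        echo "found: $1"
    else
        echo "missing: $1"
        return 1
    fi
}

# Tools this README lists as prerequisites for the Docker workflow.
for tool in docker docker-compose; do
    check_tool "$tool" || true
done
```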

- Click on "Code" and copy the link to the repository

- Clone it to your local system

- Copy the path to the `ros_ws` directory you just cloned

- Paste the copied path into the `volumes` section of the `docker-compose.yml` file
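If you are unsure what the full path to the cloned directory is, the shell can print it for you. This is a minimal sketch, assuming the clone is named `ros_ws` and sits in your current directory; `abs_path` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: print the absolute path of a directory (default: ./ros_ws).
abs_path() {
    (cd "${1:-ros_ws}" && pwd)
}

# Print the path to paste into docker-compose.yml, if the clone is found here.
if [ -d ros_ws ]; then
    abs_path ros_ws
else
    echo "ros_ws not found in $(pwd); pass its location to abs_path" >&2
fi
```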

After updating `docker-compose.yml`, run the following steps:

- Give display permissions: `xhost +`

- Change your directory to the cloned repo, that is, `ros_ws`

- Build the Docker image: `docker-compose build`

- Create and run the container: `docker-compose up`

Running the above commands starts the simulation and shows it in the Gazebo window. At the end of the simulation, all the plots are displayed.

To close the simulation, go to the terminal where you ran the last command and press `Ctrl+C`.
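The Docker Compose steps above (display permissions, changing directory, build, run) can be collected into one small wrapper script. This is a sketch, not a script shipped with this repo: the names `run`, `launch_demo`, `DRY_RUN`, and `REPO_DIR` are assumptions made for illustration. By default it only prints the commands so you can review them first.

```shell
#!/bin/sh
# Hypothetical wrapper; the commands themselves are the ones listed in this README.
REPO_DIR="${REPO_DIR:-ros_ws}"   # assumed location of the cloned repo

# Print each command; execute it only when DRY_RUN is set to something other than 1.
run() {
    echo "+ $*"
    if [ "${DRY_RUN:-1}" != "1" ]; then
        "$@"
    fi
}

launch_demo() {
    run xhost +                  # give display permissions
    run cd "$REPO_DIR"           # move into the cloned repo
    run docker-compose build     # build the image
    run docker-compose up        # create and run the container
}

launch_demo
```

Saved under a name of your choice, it performs the real steps when started with `DRY_RUN=0` in the environment.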


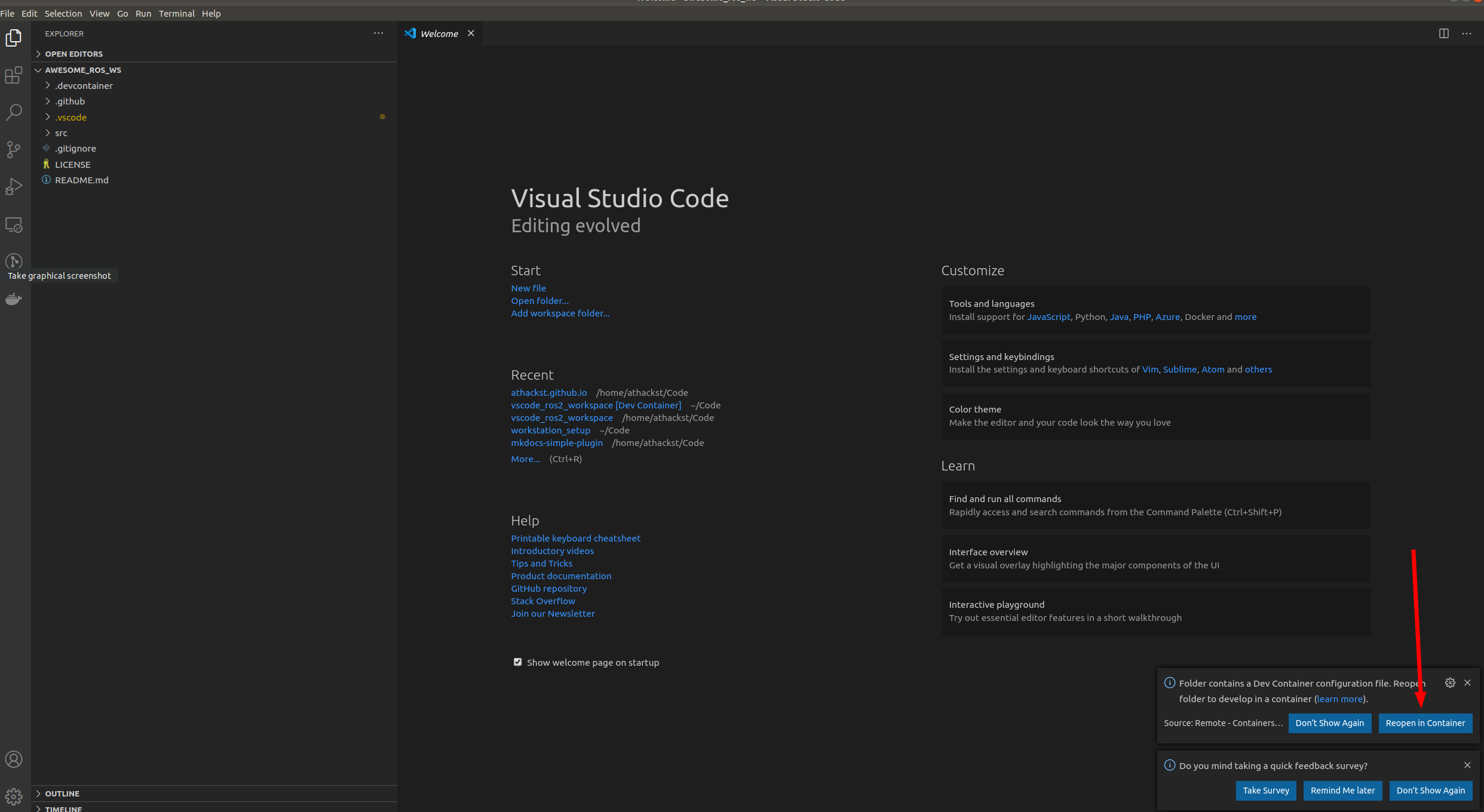

Now that you've cloned the repo onto your computer, you can open it in VSCode (File->Open Folder).

- When you open it for the first time, you should see a little popup that asks if you would like to open it in a container. Say yes!

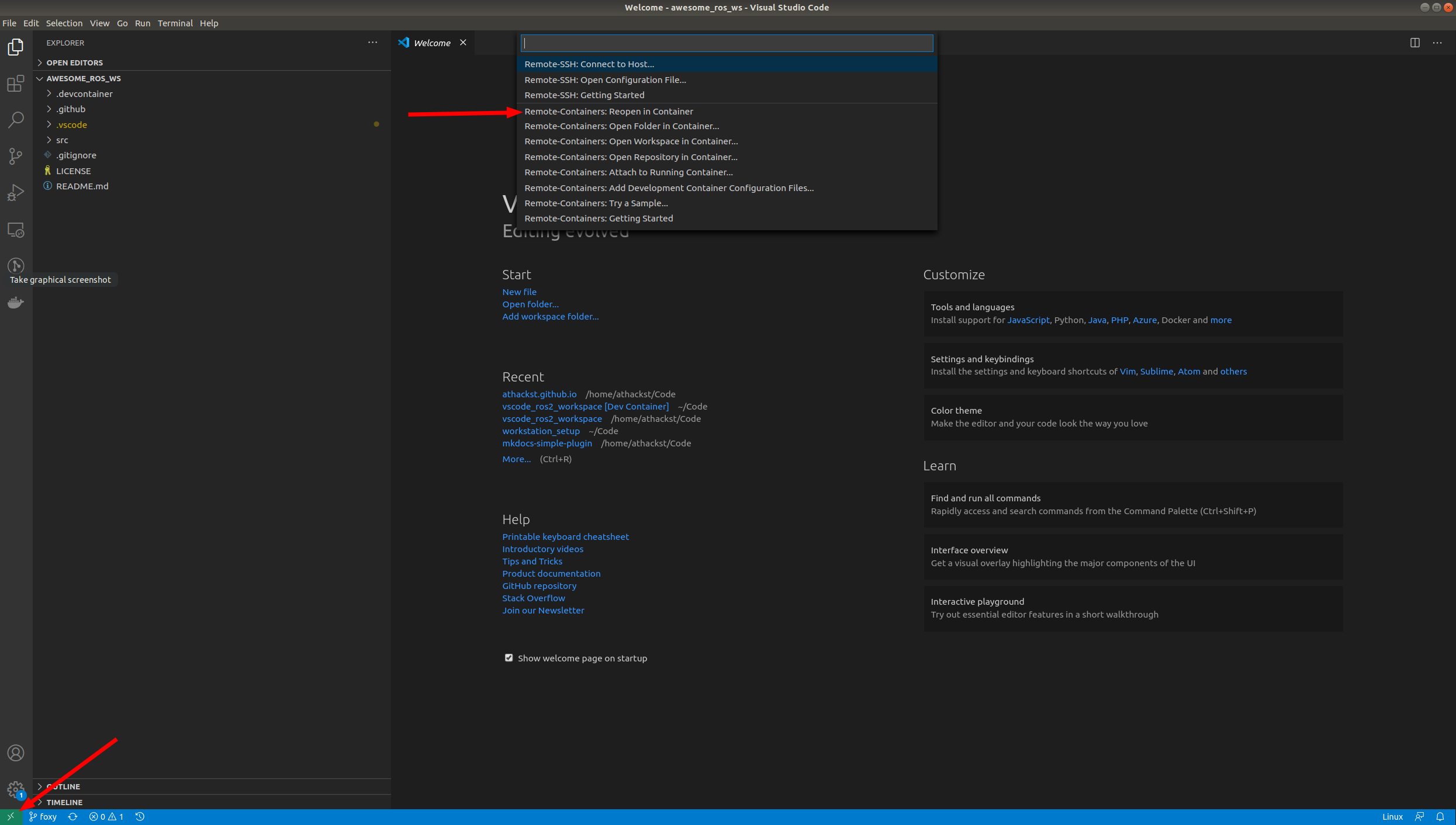

- If you don't see the pop-up, click on the little green square in the bottom left corner, which should bring up the container dialog

- In the dialog, select "Remote Containers: Reopen in container"

-

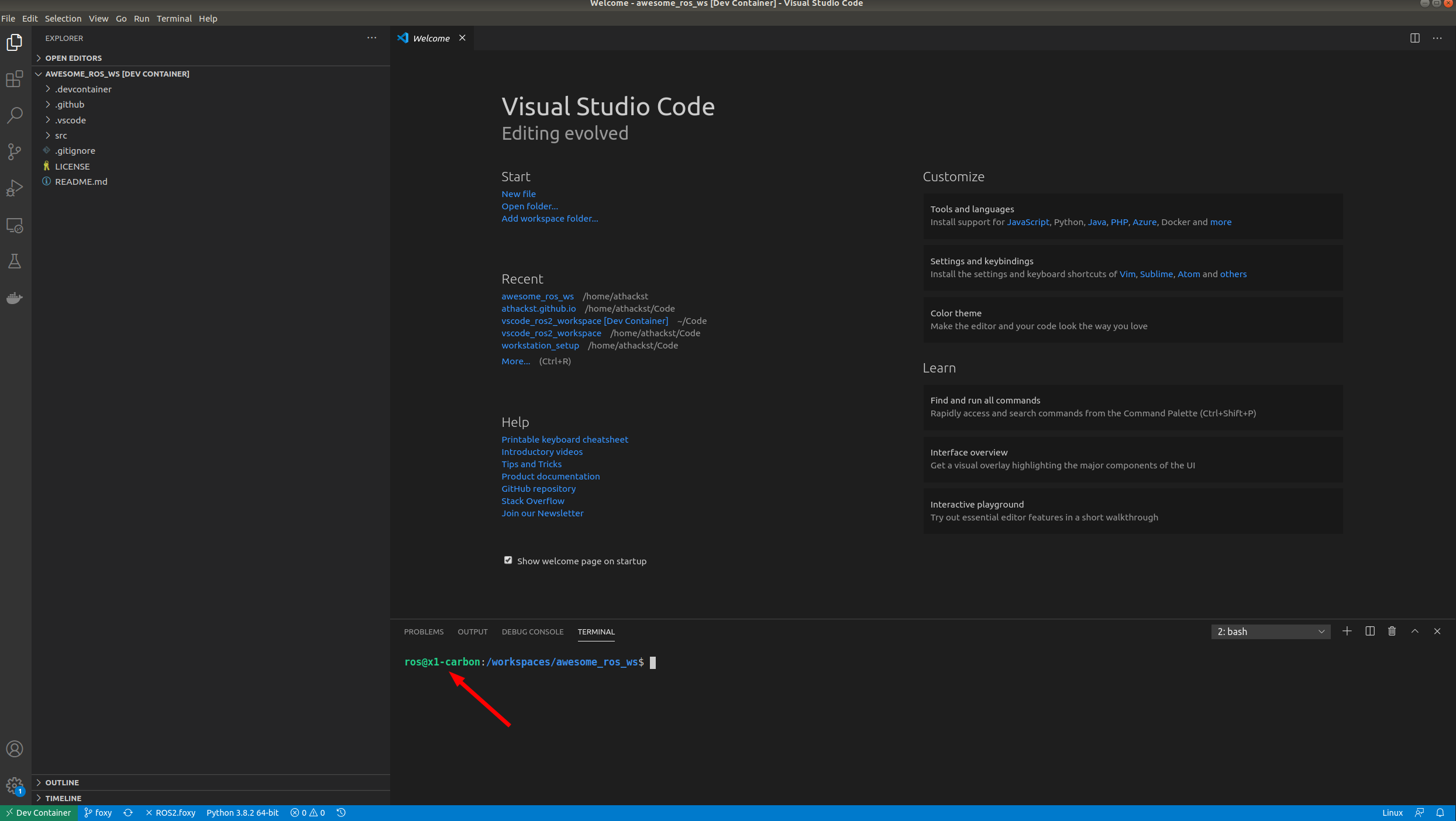

VSCode will build the dockerfile inside of

.devcontainerfor you. If you open a terminal inside VSCode (Terminal->New Terminal), you should see that your username has been changed toros, and the bottom left green corner should say "Dev Container"

- Since you will be building this Docker environment for the first time and some large files are installed, it will take a few minutes to build.

- Once the Docker image is built and the container is ready, open a terminal by clicking Terminal in the top bar and selecting New Terminal.

- After the terminal opens at the bottom, run the following command: `./run_demo.sh`
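If the shell answers `./run_demo.sh` with "Permission denied", the script may be missing its execute bit. The sketch below shows one way to check and fix that, assuming `run_demo.sh` is in the current directory; `ensure_executable` is a hypothetical helper name, not something provided by this repo.

```shell
#!/bin/sh
# Hypothetical helper: add the execute bit to a script if it is missing.
ensure_executable() {
    if [ ! -f "$1" ]; then
        echo "not found: $1" >&2
        return 1
    fi
    [ -x "$1" ] || chmod +x "$1"
    echo "executable: $1"
}

# Assumes run_demo.sh is in the current directory, as in the step above.
ensure_executable ./run_demo.sh || true
```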

- On running these commands, the Gazebo window will appear, showing the robot in the Gazebo town world along with 2 walls at the center, which denote the indoor and outdoor environments.

- The robot will follow a rectangular trajectory using a PID-based controller.

- It will pass between those 2 walls, where the GPS signal is dropped, and the robot will still localize itself using the rest of the sensors.

- After it completes the rectangular trajectory, it will display plots of the trajectory followed, based on the positions estimated by the localization algorithm.

- In the plots you can see that the robot loses the GPS signal when `x = 5.0` and `-8.0 < y < -2.0`. At these coordinates the estimated position of the robot drifts slightly off track and then comes back on track using the localization algorithm. Compared with either pure sensor data or pure wheel odometry data, sensor-fusion-based localization appears to have higher accuracy.

- Currently I am working on adding a camera sensor to this robot in order to use visual odometry along with the other sensors when GPS data is not available.

- Once it is developed, it will be published in this repo.