diff --git a/.github/workflows/build.yml b/.github/workflows/build.yml

index e9b1ef7..400b86d 100644

--- a/.github/workflows/build.yml

+++ b/.github/workflows/build.yml

@@ -4,7 +4,7 @@ on:

push:

branches: master

pull_request:

- branches: "*"

+ branches: '*'

jobs:

build:

@@ -70,7 +70,7 @@ jobs:

strategy:

fail-fast: false

matrix:

- python-version: ["3.8", "3.9", "3.10"]

+ python-version: ['3.8', '3.9', '3.10']

steps:

- name: Checkout

@@ -91,15 +91,24 @@ jobs:

python -m pip install tljh_repo2docker*.whl

npm -g install configurable-http-proxy

- - name: Run Tests

+ - name: Run local build backend tests

+ working-directory: tljh_repo2docker/tests

run: |

- python -m pytest --cov

+ python -m pytest local_build --cov

+

+ - name: Run binderhub build backend tests

+ working-directory: tljh_repo2docker/tests

+ run: |

+ python -m pytest binderhub_build --cov

integration-tests:

name: Integration tests

needs: build

runs-on: ubuntu-latest

-

+ strategy:

+ fail-fast: false

+ matrix:

+ build-backend: ['local', 'binderhub']

env:

PLAYWRIGHT_BROWSERS_PATH: ${{ github.workspace }}/pw-browsers

@@ -139,10 +148,10 @@ jobs:

run: npx playwright install chromium

working-directory: ui-tests

- - name: Execute integration tests

+ - name: Execute integration tests with ${{ matrix.build-backend }} build backend

working-directory: ui-tests

run: |

- npx playwright test

+ npm run test:${{ matrix.build-backend }}

- name: Upload Playwright Test report

if: always()

@@ -150,5 +159,6 @@ jobs:

with:

name: tljh-playwright-tests

path: |

- ui-tests/test-results

+ ui-tests/local-test-results

+ ui-tests/binderhub-test-results

ui-tests/playwright-report

diff --git a/.gitignore b/.gitignore

index 0f8645b..41869a8 100644

--- a/.gitignore

+++ b/.gitignore

@@ -30,3 +30,4 @@ lib/

# Hatch version

_version.py

+*.sqlite

\ No newline at end of file

diff --git a/CONTRIBUTING.md b/CONTRIBUTING.md

index 459c00f..59add32 100644

--- a/CONTRIBUTING.md

+++ b/CONTRIBUTING.md

@@ -46,10 +46,16 @@ docker pull quay.io/jupyterhub/repo2docker:main

## Run

-Finally, start `jupyterhub` with the config in `debug` mode:

+Finally, start `jupyterhub` with the local build backend:

```bash

-python -m jupyterhub -f jupyterhub_config.py --debug

+python -m jupyterhub -f ui-tests/jupyterhub_config_local.py --debug

+```

+

+or with the `binderhub` build backend:

+

+```bash

+python -m jupyterhub -f ui-tests/jupyterhub_config_binderhub.py --debug

```

Open https://localhost:8000 in a web browser.

diff --git a/README.md b/README.md

index 2df4ef6..6479de2 100644

--- a/README.md

+++ b/README.md

@@ -2,7 +2,7 @@

-TLJH plugin providing a JupyterHub service to build and use Docker images as user environments. The Docker images are built using [`repo2docker`](https://repo2docker.readthedocs.io/en/latest/).

+A TLJH plugin that provides a JupyterHub service to build and use Docker images as user environments. The Docker images can be built locally using [`repo2docker`](https://repo2docker.readthedocs.io/en/latest/) or via the [`binderhub`](https://binderhub.readthedocs.io/en/latest/) service.

## Requirements

@@ -46,8 +46,10 @@ The available settings for this service are:

- `default_memory_limit`: Default memory limit of a user server; defaults to `None`

- `default_cpu_limit`: Default CPU limit of a user server; defaults to `None`

- `machine_profiles`: Instead of entering directly the CPU and Memory value, `tljh-repo2docker` can be configured with pre-defined machine profiles and users can only choose from the available option; defaults to `[]`

+- `binderhub_url`: The optional URL of the `binderhub` service. If it is available, `tljh-repo2docker` will use this service to build images.

+- `db_url`: The connection string of the database. `tljh-repo2docker` needs a database to store the image metadata. By default, it will create a `sqlite` database in the starting directory of the service. To use other databases (`PostgreSQL` or `MySQL`), users need to specify the connection string via this config and install the additional drivers (`asyncpg` or `aiomysql`).

-This service requires the following scopes : `read:users`, `admin:servers` and `read:roles:users`. Here is an example of registering `tljh_repo2docker`'s service with JupyterHub

+This service requires the following scopes: `read:users`, `admin:servers` and `read:roles:users`. If the `binderhub` service is used, `access:services!service=binder` is also needed. Here is an example of registering `tljh_repo2docker`'s service with JupyterHub:

```python

# jupyterhub_config.py

@@ -78,7 +80,12 @@ c.JupyterHub.load_roles = [

{

"description": "Role for tljh_repo2docker service",

"name": "tljh-repo2docker-service",

- "scopes": ["read:users", "admin:servers", "read:roles:users"],

+ "scopes": [

+ "read:users",

+ "read:roles:users",

+ "admin:servers",

+ "access:services!service=binder",

+ ],

"services": ["tljh_repo2docker"],

},

{

@@ -147,25 +154,30 @@ c.JupyterHub.load_roles = [

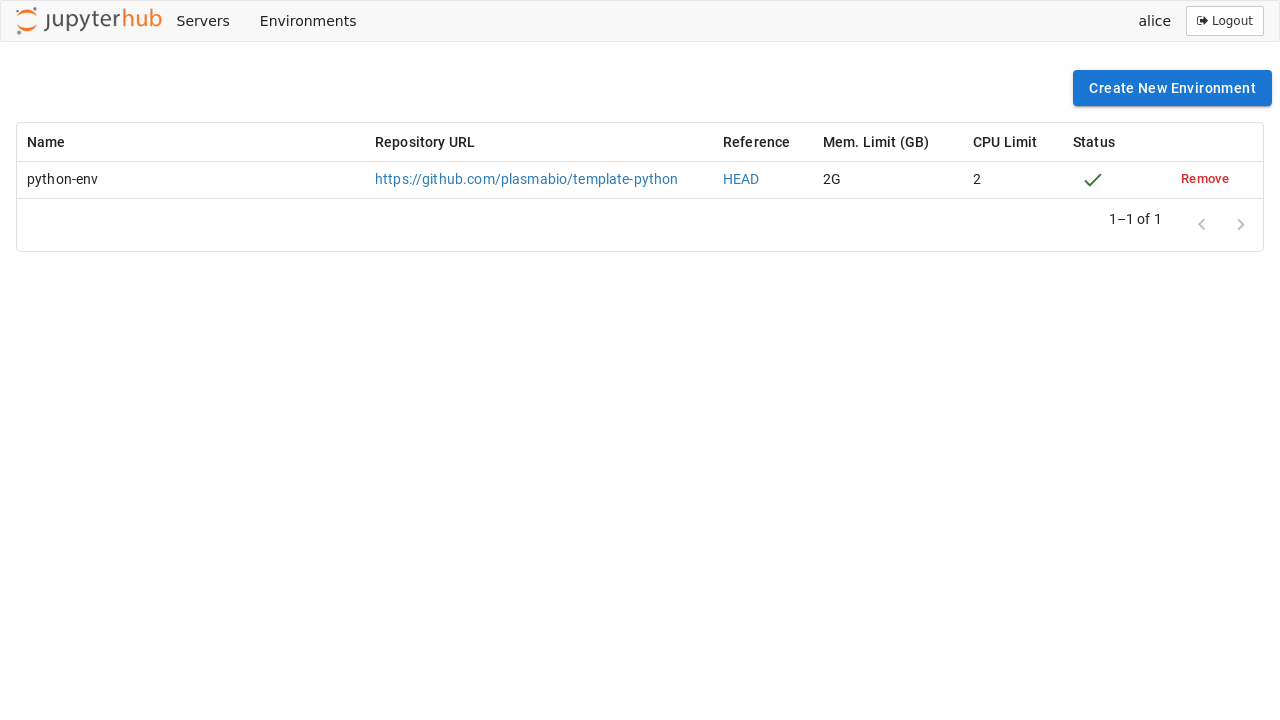

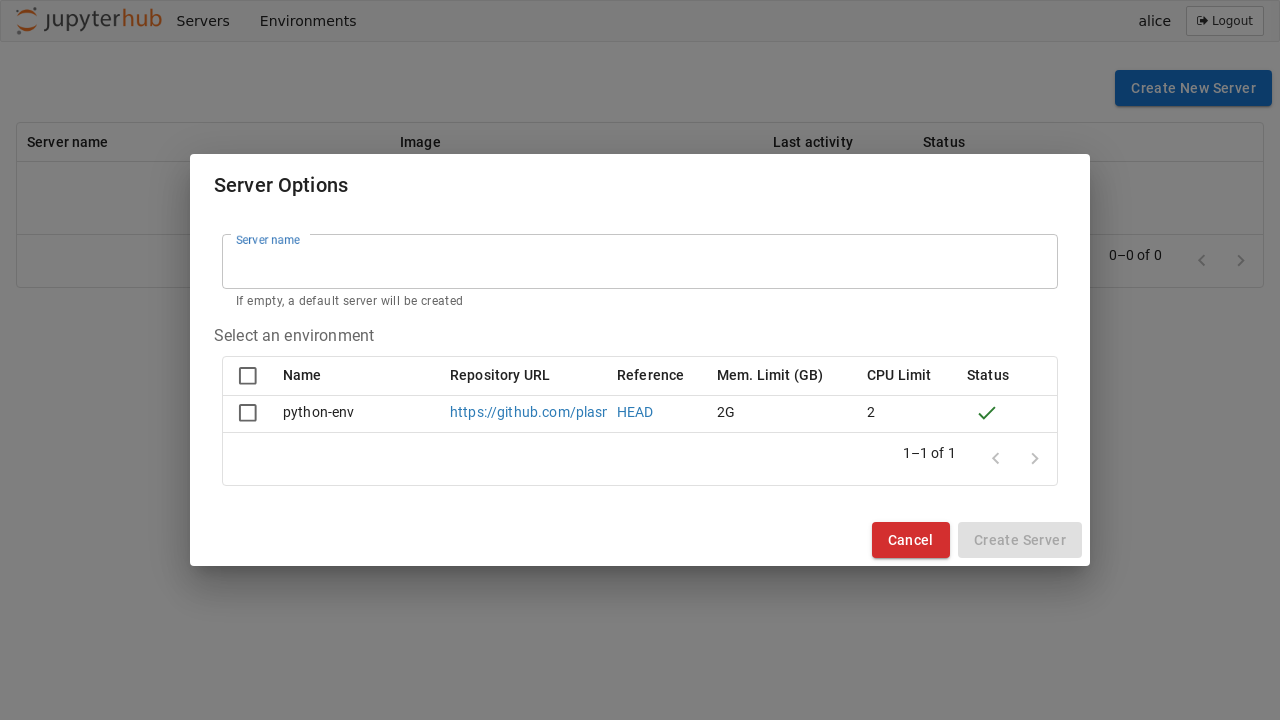

The _Environments_ page shows the list of built environments, as well as the ones currently being built:

-

+

### Add a new environment

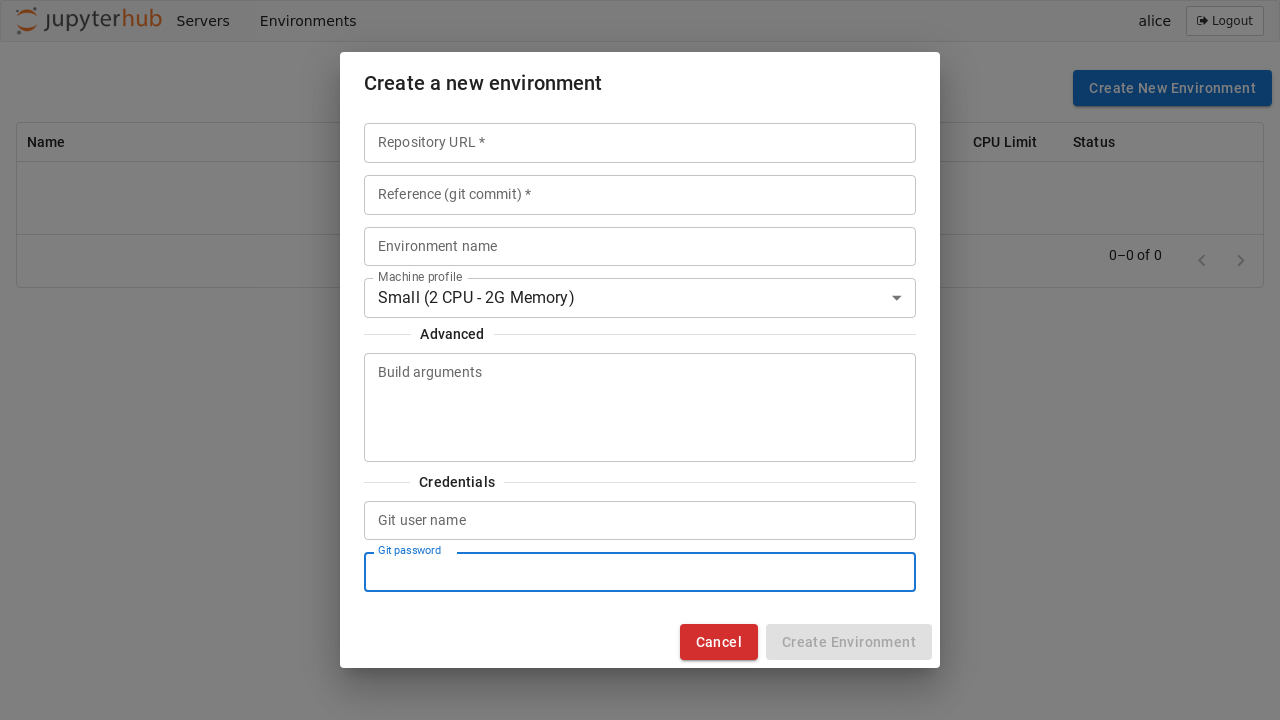

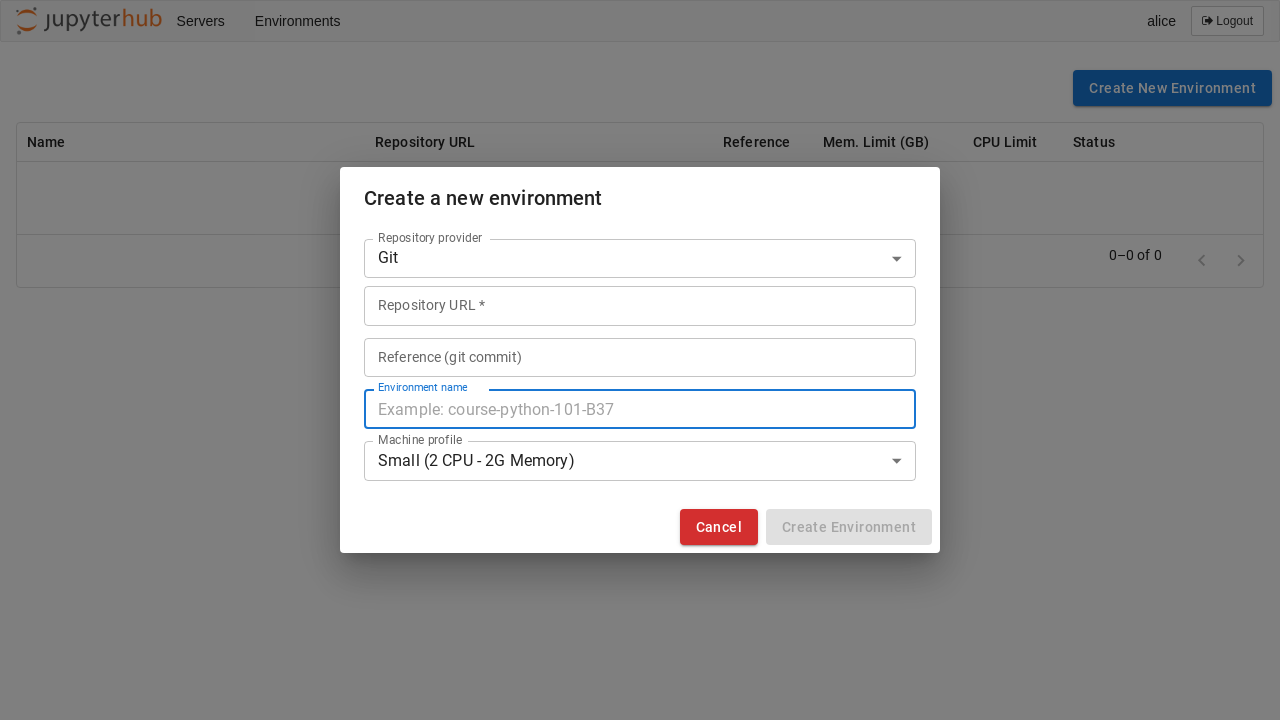

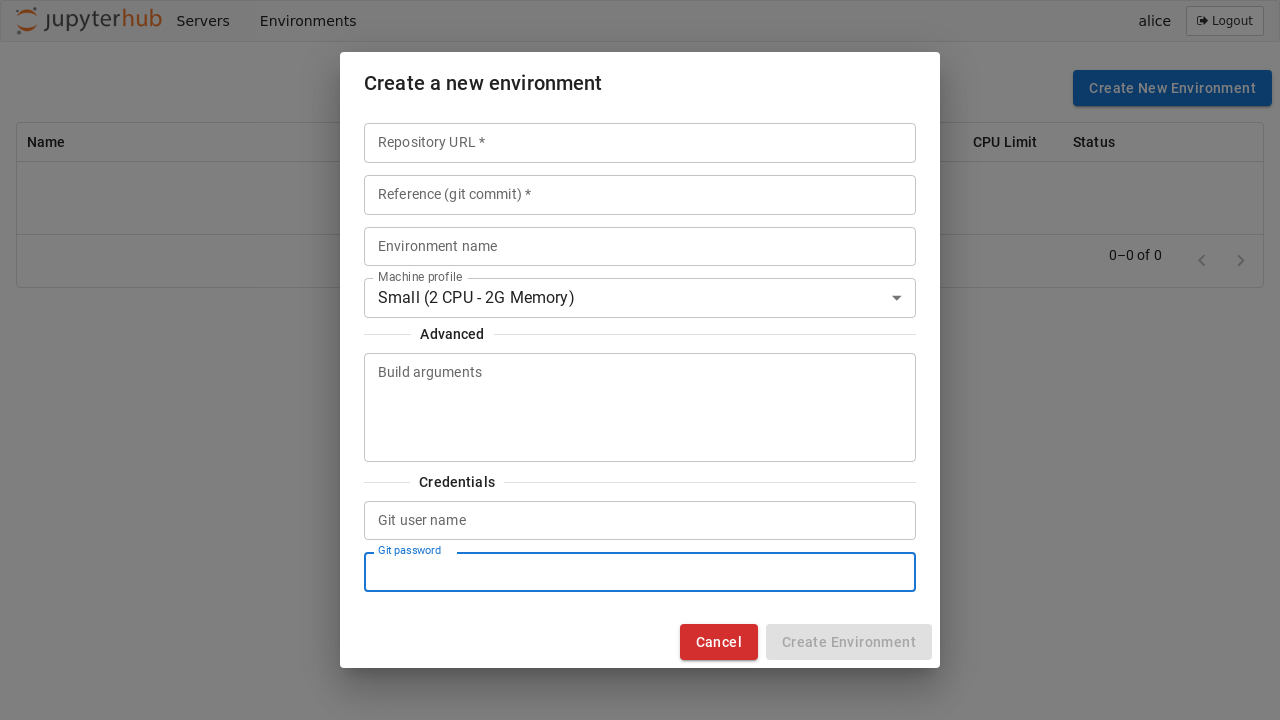

Just like on [Binder](https://mybinder.org), new environments can be added by clicking on the _Add New_ button and providing a URL to the repository. Optional names, memory, and CPU limits can also be set for the environment:

-

+

+

+> [!NOTE]

+> If the build backend is the `binderhub` service, users need to select the [repository provider](https://binderhub.readthedocs.io/en/latest/developer/repoproviders.html) and cannot specify custom build arguments.

+

+

### Follow the build logs

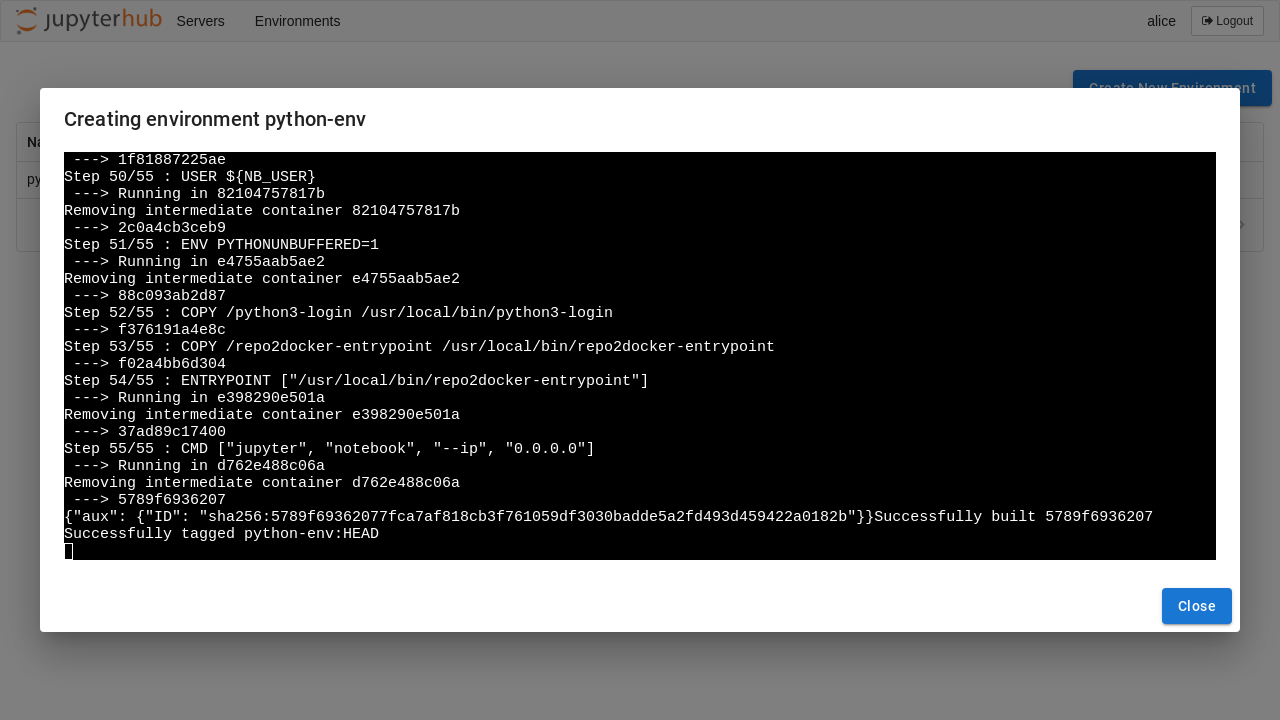

Clicking on the _Logs_ button will open a new dialog with the build logs:

-

+

### Select an environment

Once ready, the environments can be selected from the JupyterHub spawn page:

-

+

### Private Repositories

@@ -173,12 +185,15 @@ Once ready, the environments can be selected from the JupyterHub spawn page:

It is possible to provide the `username` and `password` in the `Credentials` section of the form:

-

+

On GitHub and GitLab, a user might have to first create an access token with `read` access to use as the password:

+> [!NOTE]

+> The `binderhub` build backend does not support configuring private repository credentials from the interface.

+

### Machine profiles

Instead of entering directly the CPU and Memory value, `tljh-repo2docker` can be configured with pre-defined machine profiles and users can only choose from the available options. The following configuration will add 3 machines with labels Small, Medium and Large to the profile list:

diff --git a/dev-requirements.txt b/dev-requirements.txt

index 5a9414a..77d4592 100644

--- a/dev-requirements.txt

+++ b/dev-requirements.txt

@@ -1,5 +1,7 @@

git+https://github.com/jupyterhub/the-littlest-jupyterhub@1.0.0

+git+https://github.com/jupyterhub/binderhub.git@main

jupyterhub>=4,<5

+alembic>=1.13.0,<1.14

pytest

pytest-aiohttp

pytest-asyncio

diff --git a/pyproject.toml b/pyproject.toml

index 8a3f29f..240a096 100644

--- a/pyproject.toml

+++ b/pyproject.toml

@@ -7,13 +7,21 @@ dependencies = [

"aiodocker~=0.19",

"dockerspawner~=12.1",

"jupyter_client>=6.1,<8",

- "httpx"

+ "httpx",

+ "sqlalchemy>=2",

+ "pydantic>=2,<3",

+ "alembic>=1.13,<2",

+ "jupyter-repo2docker>=2024,<2025",

+ "aiosqlite~=0.19.0"

]

dynamic = ["version"]

license = {file = "LICENSE"}

name = "tljh-repo2docker"

readme = "README.md"

+[project.scripts]

+tljh_repo2docker_upgrade_db = "tljh_repo2docker.dbutil:main"

+

[project.entry-points.tljh]

tljh_repo2docker = "tljh_repo2docker"

@@ -56,10 +64,7 @@ source_dir = "src"

version_cmd = "hatch version"

[tool.jupyter-releaser.hooks]

-before-build-npm = [

- "npm install",

- "npm run build:prod",

-]

+before-build-npm = ["npm install", "npm run build:prod"]

before-build-python = ["npm run clean"]

before-bump-version = ["python -m pip install hatch"]

diff --git a/src/environments/App.tsx b/src/environments/App.tsx

index 6a3779c..5a301ef 100644

--- a/src/environments/App.tsx

+++ b/src/environments/App.tsx

@@ -16,6 +16,8 @@ export interface IAppProps {

default_cpu_limit: string;

default_mem_limit: string;

machine_profiles: IMachineProfile[];

+ use_binderhub: boolean;

+ repo_providers?: { label: string; value: string }[];

}

export default function App(props: IAppProps) {

const jhData = useJupyterhub();

@@ -35,6 +37,8 @@ export default function App(props: IAppProps) {

default_cpu_limit={props.default_cpu_limit}

default_mem_limit={props.default_mem_limit}

machine_profiles={props.machine_profiles}

+ use_binderhub={props.use_binderhub}

+ repo_providers={props.repo_providers}

/>

diff --git a/src/environments/EnvironmentList.tsx b/src/environments/EnvironmentList.tsx

index 25aff38..296ad7f 100644

--- a/src/environments/EnvironmentList.tsx

+++ b/src/environments/EnvironmentList.tsx

@@ -59,7 +59,7 @@ const columns: GridColDef[] = [

) : params.value === 'building' ? (

) : null;

}

@@ -75,7 +75,7 @@ const columns: GridColDef[] = [

return (

);

}

diff --git a/src/environments/LogDialog.tsx b/src/environments/LogDialog.tsx

index 45e95ca..845acfd 100644

--- a/src/environments/LogDialog.tsx

+++ b/src/environments/LogDialog.tsx

@@ -65,13 +65,13 @@ function _EnvironmentLogButton(props: IEnvironmentLogButton) {

eventSource.onmessage = event => {

const data = JSON.parse(event.data);

+ terminal.write(data.message);

+ fitAddon.fit();

if (data.phase === 'built') {

eventSource.close();

setBuilt(true);

return;

}

- terminal.write(data.message);

- fitAddon.fit();

};

}

}, [jhData, props.image]);

diff --git a/src/environments/NewEnvironmentDialog.tsx b/src/environments/NewEnvironmentDialog.tsx

index a36c9e3..335b666 100644

--- a/src/environments/NewEnvironmentDialog.tsx

+++ b/src/environments/NewEnvironmentDialog.tsx

@@ -13,7 +13,14 @@ import {

Select,

Typography

} from '@mui/material';

-import { Fragment, memo, useCallback, useMemo, useState } from 'react';

+import {

+ Fragment,

+ memo,

+ useCallback,

+ useEffect,

+ useMemo,

+ useState

+} from 'react';

import { useAxios } from '../common/AxiosContext';

import { SmallTextField } from '../common/SmallTextField';

@@ -28,9 +35,12 @@ export interface INewEnvironmentDialogProps {

default_cpu_limit: string;

default_mem_limit: string;

machine_profiles: IMachineProfile[];

+ use_binderhub: boolean;

+ repo_providers?: { label: string; value: string }[];

}

interface IFormValues {

+ provider?: string;

repo?: string;

ref?: string;

name?: string;

@@ -74,11 +84,53 @@ function _NewEnvironmentDialog(props: INewEnvironmentDialogProps) {

[setFormValues]

);

const validated = useMemo(() => {

- return Boolean(formValues.repo) && Boolean(formValues.ref);

- }, [formValues]);

+ return Boolean(formValues.repo);

+ }, [formValues.repo]);

const [selectedProfile, setSelectedProfile] = useState(0);

+ const [selectedProvider, setSelectedProvider] = useState(0);

+

+ const onMachineProfileChange = useCallback(

+ (value?: string | number) => {

+ if (value !== undefined) {

+ const index = parseInt(value + '');

+ const selected = props.machine_profiles[index];

+ if (selected !== undefined) {

+ updateFormValue('cpu', selected.cpu + '');

+ updateFormValue('memory', selected.memory + '');

+ setSelectedProfile(index);

+ }

+ }

+ },

+ [props.machine_profiles, updateFormValue]

+ );

+ const onRepoProviderChange = useCallback(

+ (value?: string | number) => {

+ if (value !== undefined) {

+ const index = parseInt(value + '');

+ const selected = props.repo_providers?.[index];

+ if (selected !== undefined) {

+ updateFormValue('provider', selected.value);

+ setSelectedProvider(index);

+ }

+ }

+ },

+ [props.repo_providers, updateFormValue]

+ );

+ useEffect(() => {

+ if (props.machine_profiles.length > 0) {

+ onMachineProfileChange(0);

+ }

+ if (props.repo_providers && props.repo_providers.length > 0) {

+ onRepoProviderChange(0);

+ }

+ }, [

+ props.machine_profiles,

+ props.repo_providers,

+ onMachineProfileChange,

+ onRepoProviderChange

+ ]);

const MemoryCpuSelector = useMemo(() => {

return (

@@ -120,16 +172,7 @@ function _NewEnvironmentDialog(props: INewEnvironmentDialogProps) {

value={selectedProfile}

label="Machine profile"

size="small"

- onChange={e => {

- const value = e.target.value;

- if (value) {

- const index = parseInt(value + '');

- const selected = props.machine_profiles[index];

- updateFormValue('cpu', selected.cpu + '');

- updateFormValue('memory', selected.memory + '');

- setSelectedProfile(index);

- }

- }}

+ onChange={e => onMachineProfileChange(e.target.value)}

>

{props.machine_profiles.map((it, idx) => {

return (

@@ -141,7 +184,8 @@ function _NewEnvironmentDialog(props: INewEnvironmentDialogProps) {

);

- }, [updateFormValue, props.machine_profiles, selectedProfile]);

+ }, [props.machine_profiles, selectedProfile, onMachineProfileChange]);

+

return (

@@ -167,6 +211,7 @@ function _NewEnvironmentDialog(props: INewEnvironmentDialogProps) {

.replace('https://', '')

.replace(/\//g, '-')

.replace(/\./g, '-');

+ data.ref = data.ref && data.ref.length > 0 ? data.ref : 'HEAD';

data.cpu = data.cpu ?? '2';

data.memory = data.memory ?? '2';

data.username = data.username ?? '';

@@ -186,6 +231,29 @@ function _NewEnvironmentDialog(props: INewEnvironmentDialogProps) {

>

Create a new environment

+ {props.use_binderhub && props.repo_providers && (

+

+

+ Repository provider

+

+

+

+ )}

updateFormValue('ref', e.target.value)}

value={formValues.ref ?? ''}

/>

@@ -221,56 +290,64 @@ function _NewEnvironmentDialog(props: INewEnvironmentDialogProps) {

{props.machine_profiles.length > 0

? MachineProfileSelector

: MemoryCpuSelector}

-

-

- Advanced

-

-

- updateFormValue('buildargs', e.target.value)}

- />

-

-

- Credentials

-

-

- updateFormValue('username', e.target.value)}

- />

-

+ {!props.use_binderhub && (

+

+

+

+ Advanced

+

+

+ updateFormValue('buildargs', e.target.value)}

+ />

+

+ )}

+ {!props.use_binderhub && (

+

+

+

+ Credentials

+

+

+ updateFormValue('username', e.target.value)}

+ />

+

+

+ )}