
Potential memory leak with each call inside runBlocking #114


After some trial and error, I found that the problem is probably linked to the `invokeOnCompletion` handler in `bindScopeCancellationToCall` in CallExts.kt, because the memory leak disappears when that handler is commented out.


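According to the documentation of `invokeOnCompletion`, the returned `DisposableHandle` can be used to dispose of the handler's registration and release its memory when the handler is no longer needed; otherwise, the references to the handlers are released only when the job completes.

Since the job can last much longer than a kroto+ call, I think kroto+ must dispose of the `invokeOnCompletion` handler once the gRPC call has completed to avoid this memory leak. This handler is registered for all gRPC call types in kroto+, and none of them are cleaned up.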
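To make the mechanism concrete, here is a simplified, stdlib-only Kotlin model of the behavior described above. `FakeJob`, `Handle`, and `simulateCall` are hypothetical stand-ins, not the real kotlinx.coroutines classes (the real `Job` uses a lock-free node list and passes a `Throwable?` to its handlers). The model only shows two things: handlers registered on a long-lived job accumulate once per call unless the returned handle is disposed, and completing the job releases all of them at once.

```kotlin
// Simplified model: FakeJob and Handle are hypothetical stand-ins for
// kotlinx.coroutines' Job and DisposableHandle, not the real classes.
fun interface Handle {
    fun dispose()
}

class FakeJob {
    // Each registration gets its own node, compared by identity.
    private class Node(val handler: () -> Unit)

    private val nodes = mutableListOf<Node>()
    var isCompleted = false
        private set

    val handlerCount: Int get() = nodes.size

    fun invokeOnCompletion(handler: () -> Unit): Handle {
        if (isCompleted) {
            handler()
            return Handle {}
        }
        val node = Node(handler)
        nodes += node
        // Disposing removes the node, so the job no longer references the handler.
        return Handle { nodes.remove(node) }
    }

    fun complete() {
        isCompleted = true
        val snapshot = nodes.toList()
        nodes.clear() // completion releases every registered handler
        snapshot.forEach { it.handler() }
    }
}

// Hypothetical stand-in for one gRPC call bound to a long-lived scope job.
fun simulateCall(job: FakeJob, disposeAfterCall: Boolean) {
    val handle = job.invokeOnCompletion { /* would cancel the call */ }
    // ... the call runs to completion here ...
    if (disposeAfterCall) handle.dispose()
}

fun main() {
    val leaky = FakeJob()
    repeat(10_000) { simulateCall(leaky, disposeAfterCall = false) }
    println(leaky.handlerCount) // 10000: one retained handler per completed call

    val fixed = FakeJob()
    repeat(10_000) { simulateCall(fixed, disposeAfterCall = true) }
    println(fixed.handlerCount) // 0

    leaky.complete() // analogous to leaving the runBlocking block
    println(leaky.handlerCount) // 0
}
```

In this model the leaky job's memory grows linearly with the number of calls, which matches the heap-dump observation in the original report, while disposing after each call keeps it bounded.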


First, I want to say a big thanks for opening this issue and providing so much detail. After looking everything over, I think the impact is limited to clients that execute many calls within a single long-lived coroutine. Server implementations are not affected, since each server RPC request creates a new job. The source of this issue, as you stated, is that we do not check after call completion whether the completion handler should be disposed of. It looks like the solution will involve updating the client extensions to add a call-completion callback that checks whether the scope's job has completed yet and, if it has not, disposes of the handler. Thoughts?
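The proposed fix can be sketched with a similar stdlib-only model, assuming a call object that notifies listeners when it closes. `MiniJob`, `MiniCall`, `Registration`, and `bindJobToCallSketch` are hypothetical names, not kroto+ or grpc-java APIs; in the real client extensions the close notification would presumably come from the gRPC call's listener. The callback disposes of the registration only while the job is still active, because a completed job has already released its handlers.

```kotlin
// Hypothetical sketch: MiniJob, MiniCall, Registration, and bindJobToCallSketch
// are illustrative stand-ins, not kroto+ or grpc-java APIs.
fun interface Registration {
    fun dispose()
}

class MiniJob {
    private class Node(val onComplete: () -> Unit)

    private val nodes = mutableListOf<Node>()
    var isCompleted = false
        private set

    val handlerCount: Int get() = nodes.size

    fun invokeOnCompletion(onComplete: () -> Unit): Registration {
        val node = Node(onComplete)
        nodes += node
        return Registration { nodes.remove(node) }
    }

    fun complete() {
        isCompleted = true
        val snapshot = nodes.toList()
        nodes.clear() // a completed job drops all handler references
        snapshot.forEach { it.onComplete() }
    }
}

// Stand-in for a client call that notifies listeners when it closes.
class MiniCall {
    private val closeListeners = mutableListOf<() -> Unit>()
    var isCancelled = false
        private set

    fun onClose(listener: () -> Unit) {
        closeListeners += listener
    }

    fun cancel() {
        isCancelled = true
    }

    fun close() {
        closeListeners.forEach { it() }
    }
}

// In this simplified model, any completion of the job cancels a still-open call;
// once the call closes first, the job's handler is unregistered.
fun bindJobToCallSketch(job: MiniJob, call: MiniCall) {
    val registration = job.invokeOnCompletion { call.cancel() }
    call.onClose {
        // A completed job has already released its handlers; an active one has not.
        if (!job.isCompleted) registration.dispose()
    }
}

fun main() {
    val job = MiniJob() // long-lived, like the job of a runBlocking in main
    repeat(10_000) {
        val call = MiniCall()
        bindJobToCallSketch(job, call)
        call.close() // the call finishes normally
    }
    println(job.handlerCount) // 0

    val openCall = MiniCall()
    bindJobToCallSketch(job, openCall)
    job.complete()
    println(openCall.isCancelled) // true
}
```

The last part of `main` checks that the binding still does its original job: a call that is open when the scope's job completes is cancelled, while calls that already closed leave nothing behind.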


Thank you @marcoferrer for confirming the issue.


It looks like there is a memory leak in kroto+ when many unary calls are made inside a `runBlocking` block, reproduced here: https://github.com/blachris/kroto-plus/commit/223417b1a05294a0387d13f421c0fa8aec4c477d

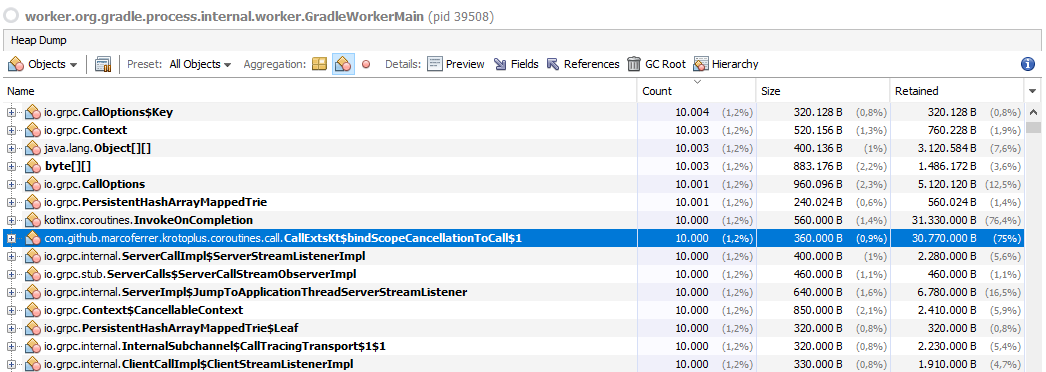

If you take a heap dump before exiting the `runBlocking` block, you will see a number of instances that scale exactly with the number of calls made, for example here, after 10,000 completed sequential calls.

It seems there is an unbroken chain of references through `InvokeOnCompletion` across every single call made. After the `runBlocking` block exits, the entire clutter is cleaned up, so as a workaround one can regularly exit and re-enter `runBlocking`. However, I often see the pattern of wrapping a `main` function in `runBlocking`, which will reliably run into this problem over time.

Could you please check whether this issue can be avoided in kroto+, or whether it is caused by Kotlin's `runBlocking`?
