block within <pre>, so we wish to replace parent node.

+ [].push.apply(mermaids, document.getElementsByClassName('language-mermaid'));

+ for (let i = 0; i < mermaids.length; i++) {

+ // Convert the <pre><code> block to a <div> and add `mermaid` class so that Mermaid will parse it.

+ let mermaidCodeElement = mermaids[i];

+ let newElement = document.createElement('div');

+ newElement.innerHTML = mermaidCodeElement.innerHTML;

+ newElement.classList.add('mermaid');

+ if (render) {

+ window.mermaid.mermaidAPI.render(`mermaid-${i}`, newElement.textContent, function (svgCode) {

+ newElement.innerHTML = svgCode;

+ });

+ }

+ mermaidCodeElement.parentNode.replaceWith(newElement);

+ }

+ console.debug(`Processed ${mermaids.length} Mermaid code blocks`);

+}

+

+/**

+ * @param {Element} parent

+ * @param {Element} child

+ */

+function scrollParentToChild(parent, child) {

+ // Where is the parent on the page?

+ const parentRect = parent.getBoundingClientRect();

+

+ // What can the client see?

+ const parentViewableArea = {

+ height: parent.clientHeight,

+ width: parent.clientWidth,

+ };

+

+ // Where is the child?

+ const childRect = child.getBoundingClientRect();

+

+ // Is the child in view?

+ const isChildInView =

+ childRect.top >= parentRect.top && childRect.bottom <= parentRect.top + parentViewableArea.height;

+

+ // If the child isn't in view, attempt to scroll the parent to it.

+ if (!isChildInView) {

+ // Scroll by offset relative to parent.

+ parent.scrollTop = childRect.top + parent.scrollTop - parentRect.top;

+ }

+}

+

+export {fixMermaid, scrollParentToChild};
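
The visibility test in `scrollParentToChild` can be exercised without a DOM by passing plain rectangle objects. The sketch below is a hypothetical extraction: the name `computeScrollTop` and the plain-object inputs are assumptions for illustration and are not part of the committed file. It mirrors the same comparison and offset arithmetic.

```javascript
// Hypothetical DOM-free version of the check in scrollParentToChild.
// parentRect/childRect stand in for getBoundingClientRect() results ({top, bottom}).
function computeScrollTop(parentRect, childRect, clientHeight, scrollTop) {
  const isChildInView =
    childRect.top >= parentRect.top && childRect.bottom <= parentRect.top + clientHeight;
  // Leave the scroll position alone when the child is already visible.
  if (isChildInView) return scrollTop;
  // Otherwise align the child's top edge with the parent's top edge.
  return childRect.top + scrollTop - parentRect.top;
}

// Child fully inside the 200px-tall viewable area: position unchanged.
const unchanged = computeScrollTop({top: 100, bottom: 300}, {top: 150, bottom: 180}, 200, 40);
// Child below the viewable area: scroll so that it sits at the top.
const scrolled = computeScrollTop({top: 100, bottom: 300}, {top: 350, bottom: 380}, 200, 40);
console.log(unchanged, scrolled);
```

Keeping the arithmetic in a pure function like this is one way to unit-test the ToC scrolling behaviour; the committed function reads the same values directly from the elements.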

diff --git a/js/wowchemy.js b/js/wowchemy.js

new file mode 100644

index 00000000..2a4bb3a7

--- /dev/null

+++ b/js/wowchemy.js

@@ -0,0 +1,345 @@

+

+/*************************************************

+ * Wowchemy

+ * https://github.com/wowchemy/wowchemy-hugo-modules

+ *

+ * Core JS functions and initialization.

+ **************************************************/

+

+// Stripped-down version, only kept filtering

+

+/* ---------------------------------------------------------------------------

+ * Filter publications.

+ * --------------------------------------------------------------------------- */

+

+// Active publication filters.

+let pubFilters = {};

+

+// Search term.

+let searchRegex;

+

+// Filter values (concatenated).

+let filterValues;

+

+// Publication container.

+let $grid_pubs = $('#container-publications');

+

+// Initialise Isotope publication layout if required.

+if ($grid_pubs.length) {

+ $grid_pubs.isotope({

+ itemSelector: '.isotope-item',

+ percentPosition: true,

+ masonry: {

+ // Use Bootstrap compatible grid layout.

+ columnWidth: '.grid-sizer',

+ },

+ filter: function () {

+ let $this = $(this);

+ let searchResults = searchRegex ? $this.text().match(searchRegex) : true;

+ let filterResults = filterValues ? $this.is(filterValues) : true;

+ return searchResults && filterResults;

+ },

+ });

+

+ // Filter by search term.

+ let $quickSearch = $('.filter-search').keyup(

+ debounce(function () {

+ searchRegex = new RegExp($quickSearch.val(), 'gi');

+ $grid_pubs.isotope();

+ }),

+ );

+

+ $('.pub-filters').on('change', function () {

+ let $this = $(this);

+

+ // Get group key.

+ let filterGroup = $this[0].getAttribute('data-filter-group');

+

+ // Set filter for group.

+ pubFilters[filterGroup] = this.value;

+

+ // Combine filters.

+ filterValues = concatValues(pubFilters);

+

+ // Activate filters.

+ $grid_pubs.isotope();

+

+ // If filtering by publication type, update the URL hash to enable direct linking to results.

+ if (filterGroup === 'pubtype') {

+ // Set hash URL to current filter.

+ let url = $(this).val();

+ if (url.substr(0, 9) === '.pubtype-') {

+ window.location.hash = url.substr(9);

+ } else {

+ window.location.hash = '';

+ }

+ }

+ });

+}

+

+// Debounce input to prevent spamming filter requests.

+function debounce(fn, threshold) {

+ let timeout;

+ threshold = threshold || 100;

+ return function debounced() {

+ clearTimeout(timeout);

+ let args = arguments;

+ let _this = this;

+

+ function delayed() {

+ fn.apply(_this, args);

+ }

+

+ timeout = setTimeout(delayed, threshold);

+ };

+}

+

+// Flatten object by concatenating values.

+function concatValues(obj) {

+ let value = '';

+ for (let prop in obj) {

+ value += obj[prop];

+ }

+ return value;

+}

+

+// Filter publications according to hash in URL.

+function filter_publications() {

+ // Check for Isotope publication layout.

+ if (!$grid_pubs.length) return;

+

+ let urlHash = window.location.hash.replace('#', '');

+ let filterValue = '*';

+

+ // Check if hash is numeric.

+ if (urlHash != '' && !isNaN(urlHash)) {

+ filterValue = '.pubtype-' + urlHash;

+ }

+

+ // Set filter.

+ let filterGroup = 'pubtype';

+ pubFilters[filterGroup] = filterValue;

+ filterValues = concatValues(pubFilters);

+

+ // Activate filters.

+ $grid_pubs.isotope();

+

+ // Set selected option.

+ $('.pubtype-select').val(filterValue);

+}

+

+/* ---------------------------------------------------------------------------

+ * On window loaded.

+ * --------------------------------------------------------------------------- */

+

+$(window).on('load', function () {

+ // Re-initialize Scrollspy with dynamic navbar height offset.

+ fixScrollspy();

+

+ // Detect instances of the Portfolio widget.

+ let isotopeInstances = document.querySelectorAll('.projects-container');

+ let isotopeInstancesCount = isotopeInstances.length;

+

+ // Fix ScrollSpy highlighting previous Book page ToC link for some anchors.

+ // Check if isotopeInstancesCount>0 as that case performs its own scrollToAnchor.

+ if (window.location.hash && isotopeInstancesCount === 0) {

+ scrollToAnchor(decodeURIComponent(window.location.hash), 0);

+ }

+

+ // Scroll Book page's active ToC sidebar link into view.

+ // Action after calling scrollToAnchor to fix Scrollspy highlighting otherwise wrong link may have active class.

+ let child = document.querySelector('.docs-toc .nav-link.active');

+ let parent = document.querySelector('.docs-toc');

+ if (child && parent) {

+ scrollParentToChild(parent, child);

+ }

+

+ // Enable images to be zoomed.

+ let zoomOptions = {};

+ if (document.body.classList.contains('dark')) {

+ zoomOptions.background = 'rgba(0,0,0,0.9)';

+ } else {

+ zoomOptions.background = 'rgba(255,255,255,0.9)';

+ }

+ // mediumZoom('[data-zoomable]', zoomOptions);

+

+ // Init Isotope Layout Engine for instances of the Portfolio widget.

+ let isotopeCounter = 0;

+ isotopeInstances.forEach(function (isotopeInstance, index) {

+ console.debug(`Loading Isotope instance ${index}`);

+

+ // Isotope instance

+ let iso;

+

+ // Get the layout for this Isotope instance

+ let isoSection = isotopeInstance.closest('section');

+ let layout = '';

+ if (isoSection.querySelector('.isotope').classList.contains('js-layout-row')) {

+ layout = 'fitRows';

+ } else {

+ layout = 'masonry';

+ }

+

+ // Get default filter (if any) for this instance

+ let defaultFilter = isoSection.querySelector('.default-project-filter');

+ let filterText = '*';

+ if (defaultFilter !== null) {

+ filterText = defaultFilter.textContent;

+ }

+ console.debug(`Default Isotope filter: ${filterText}`);

+

+ // Init Isotope instance once its images have loaded.

+ imagesLoaded(isotopeInstance, function () {

+ iso = new Isotope(isotopeInstance, {

+ itemSelector: '.isotope-item',

+ layoutMode: layout,

+ masonry: {

+ gutter: 20,

+ },

+ filter: filterText,

+ });

+

+ // Filter Isotope items when a toolbar filter button is clicked.

+ let isoFilterButtons = isoSection.querySelectorAll('.project-filters a');

+ isoFilterButtons.forEach((button) =>

+ button.addEventListener('click', (e) => {

+ e.preventDefault();

+ let selector = button.getAttribute('data-filter');

+

+ // Apply filter

+ console.debug(`Updating Isotope filter to ${selector}`);

+ iso.arrange({filter: selector});

+

+ // Update active toolbar filter button

+ button.classList.remove('active');

+ button.classList.add('active');

+ let buttonSiblings = getSiblings(button);

+ buttonSiblings.forEach((buttonSibling) => {

+ buttonSibling.classList.remove('active');

+ buttonSibling.classList.remove('all');

+ });

+ }),

+ );

+

+ // Check if all Isotope instances have loaded.

+ incrementIsotopeCounter();

+ });

+ });

+

+ // Hook to perform actions once all Isotope instances have loaded.

+ function incrementIsotopeCounter() {

+ isotopeCounter++;

+ if (isotopeCounter === isotopeInstancesCount) {

+ console.debug(`All Portfolio Isotope instances loaded.`);

+ // Once all Isotope instances and their images have loaded, scroll to hash (if set).

+ // Prevents scrolling to the wrong location due to the dynamic height of Isotope instances.

+ // Each Isotope instance height is affected by applying filters and loading images.

+ // Without this logic, the scroll location can appear correct, but actually a few pixels out and hence Scrollspy

+ // can highlight the wrong nav link.

+ if (window.location.hash) {

+ scrollToAnchor(decodeURIComponent(window.location.hash), 0);

+ }

+ }

+ }

+

+ // Enable publication filter for publication index page.

+ if ($('.pub-filters-select').length) {

+ filter_publications();

+ // Useful for changing hash manually (e.g. in development):

+ // window.addEventListener('hashchange', filter_publications, false);

+ }

+

+ // Load citation modal on 'Cite' click.

+ $('.js-cite-modal').click(function (e) {

+ e.preventDefault();

+ let filename = $(this).attr('data-filename');

+ let modal = $('#modal');

+ modal.find('.modal-body code').load(filename, function (response, status, xhr) {

+ if (status == 'error') {

+ let msg = 'Error: ';

+ $('#modal-error').html(msg + xhr.status + ' ' + xhr.statusText);

+ } else {

+ $('.js-download-cite').attr('href', filename);

+ }

+ });

+ modal.modal('show');

+ });

+

+ // Copy citation text on 'Copy' click.

+ $('.js-copy-cite').click(function (e) {

+ e.preventDefault();

+ // Get selection.

+ let range = document.createRange();

+ let code_node = document.querySelector('#modal .modal-body');

+ range.selectNode(code_node);

+ window.getSelection().addRange(range);

+ try {

+ // Execute the copy command.

+ document.execCommand('copy');

+ } catch (e) {

+ console.log('Error: citation copy failed.');

+ }

+ // Remove selection.

+ window.getSelection().removeRange(range);

+ });

+

+ // Initialise Google Maps if necessary.

+ // initMap();

+

+ // Print latest version of GitHub projects.

+ // // let githubReleaseSelector = '.js-github-release';

+ // // if ($(githubReleaseSelector).length > 0) {

+ // // printLatestRelease(githubReleaseSelector, $(githubReleaseSelector).data('repo'));

+ // // }

+

+ // // Parse Wowchemy keyboard shortcuts.

+ // document.addEventListener('keyup', (event) => {

+ // if (event.code === 'Escape') {

+ // const body = document.body;

+ // if (body.classList.contains('searching')) {

+ // // Close search dialog.

+ // toggleSearchDialog();

+ // }

+ // }

+ // // Use `key` to check for slash. Otherwise, with `code` we need to check for modifiers.

+ // if (event.key === '/') {

+ // let focusedElement =

+ // (document.hasFocus() &&

+ // document.activeElement !== document.body &&

+ // document.activeElement !== document.documentElement &&

+ // document.activeElement) ||

+ // null;

+ // let isInputFocused = focusedElement instanceof HTMLInputElement || focusedElement instanceof HTMLTextAreaElement;

+ // if (searchEnabled && !isInputFocused) {

+ // // Open search dialog.

+ // event.preventDefault();

+ // toggleSearchDialog();

+ // }

+ // }

+ // });

+

+ // // Search event handler

+ // // Check that built-in search or Algolia enabled.

+ // if (searchEnabled) {

+ // // On search icon click toggle search dialog.

+ // $('.js-search').click(function (e) {

+ // e.preventDefault();

+ // toggleSearchDialog();

+ // });

+ // }

+

+ // Init. author notes (tooltips).

+ $('[data-toggle="tooltip"]').tooltip();

+});

+

+

+// Make Scrollspy responsive.

+function fixScrollspy() {

+ let $body = $('body');

+ let data = $body.data('bs.scrollspy');

+ if (data) {

+ data._config.offset = getNavBarHeight();

+ $body.data('bs.scrollspy', data);

+ $body.scrollspy('refresh');

+ }

+}
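
The publication filter above builds one Isotope selector by concatenating the selector stored for each filter group, and maps a numeric URL hash to a `.pubtype-N` class. The sketch below restates that logic as pure functions so it can be checked in isolation. `selectorFromHash` is a hypothetical helper name introduced here, and the `year` group and `.year-2024` class are made-up example values.

```javascript
// Same concatenation as concatValues in the diff: join every group's selector.
function concatValues(obj) {
  let value = '';
  for (const prop in obj) {
    value += obj[prop];
  }
  return value;
}

// Hypothetical helper mirroring filter_publications: numeric hash -> class selector.
function selectorFromHash(hash) {
  const urlHash = hash.replace('#', '');
  return urlHash !== '' && !isNaN(urlHash) ? '.pubtype-' + urlHash : '*';
}

// Two filter groups combine into a compound selector matching both classes.
const filters = {pubtype: selectorFromHash('#2'), year: '.year-2024'};
const combined = concatValues(filters);
console.log(combined);
```

Because the per-group selectors are simply concatenated, an item must match every active group at once; a group left at `*` contributes the universal selector and does not narrow the result.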

diff --git a/ldbc-graphalytics-0/index.html b/ldbc-graphalytics-0/index.html

new file mode 100644

index 00000000..66b5ac71

--- /dev/null

+++ b/ldbc-graphalytics-0/index.html

@@ -0,0 +1,10 @@

+

+

+

+

https://ldbcouncil.org/benchmarks/graphalytics/

+

+

+

+

+

+

diff --git a/leadership/index.html b/leadership/index.html

new file mode 100644

index 00000000..e8ef8318

--- /dev/null

+++ b/leadership/index.html

@@ -0,0 +1,397 @@

+

+

+

+

+

Leadership

+

Leadership

+

Board of Directors

+

Contact: info AT ldbcouncil DOT org

+

+

+

+

Administration and Task Forces

+

\ No newline at end of file

diff --git a/licensing/LICENSE.txt b/licensing/LICENSE.txt

new file mode 100644

index 00000000..75b52484

--- /dev/null

+++ b/licensing/LICENSE.txt

@@ -0,0 +1,202 @@

+

+ Apache License

+ Version 2.0, January 2004

+ http://www.apache.org/licenses/

+

+ TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

+

+ 1. Definitions.

+

+ "License" shall mean the terms and conditions for use, reproduction,

+ and distribution as defined by Sections 1 through 9 of this document.

+

+ "Licensor" shall mean the copyright owner or entity authorized by

+ the copyright owner that is granting the License.

+

+ "Legal Entity" shall mean the union of the acting entity and all

+ other entities that control, are controlled by, or are under common

+ control with that entity. For the purposes of this definition,

+ "control" means (i) the power, direct or indirect, to cause the

+ direction or management of such entity, whether by contract or

+ otherwise, or (ii) ownership of fifty percent (50%) or more of the

+ outstanding shares, or (iii) beneficial ownership of such entity.

+

+ "You" (or "Your") shall mean an individual or Legal Entity

+ exercising permissions granted by this License.

+

+ "Source" form shall mean the preferred form for making modifications,

+ including but not limited to software source code, documentation

+ source, and configuration files.

+

+ "Object" form shall mean any form resulting from mechanical

+ transformation or translation of a Source form, including but

+ not limited to compiled object code, generated documentation,

+ and conversions to other media types.

+

+ "Work" shall mean the work of authorship, whether in Source or

+ Object form, made available under the License, as indicated by a

+ copyright notice that is included in or attached to the work

+ (an example is provided in the Appendix below).

+

+ "Derivative Works" shall mean any work, whether in Source or Object

+ form, that is based on (or derived from) the Work and for which the

+ editorial revisions, annotations, elaborations, or other modifications

+ represent, as a whole, an original work of authorship. For the purposes

+ of this License, Derivative Works shall not include works that remain

+ separable from, or merely link (or bind by name) to the interfaces of,

+ the Work and Derivative Works thereof.

+

+ "Contribution" shall mean any work of authorship, including

+ the original version of the Work and any modifications or additions

+ to that Work or Derivative Works thereof, that is intentionally

+ submitted to Licensor for inclusion in the Work by the copyright owner

+ or by an individual or Legal Entity authorized to submit on behalf of

+ the copyright owner. For the purposes of this definition, "submitted"

+ means any form of electronic, verbal, or written communication sent

+ to the Licensor or its representatives, including but not limited to

+ communication on electronic mailing lists, source code control systems,

+ and issue tracking systems that are managed by, or on behalf of, the

+ Licensor for the purpose of discussing and improving the Work, but

+ excluding communication that is conspicuously marked or otherwise

+ designated in writing by the copyright owner as "Not a Contribution."

+

+ "Contributor" shall mean Licensor and any individual or Legal Entity

+ on behalf of whom a Contribution has been received by Licensor and

+ subsequently incorporated within the Work.

+

+ 2. Grant of Copyright License. Subject to the terms and conditions of

+ this License, each Contributor hereby grants to You a perpetual,

+ worldwide, non-exclusive, no-charge, royalty-free, irrevocable

+ copyright license to reproduce, prepare Derivative Works of,

+ publicly display, publicly perform, sublicense, and distribute the

+ Work and such Derivative Works in Source or Object form.

+

+ 3. Grant of Patent License. Subject to the terms and conditions of

+ this License, each Contributor hereby grants to You a perpetual,

+ worldwide, non-exclusive, no-charge, royalty-free, irrevocable

+ (except as stated in this section) patent license to make, have made,

+ use, offer to sell, sell, import, and otherwise transfer the Work,

+ where such license applies only to those patent claims licensable

+ by such Contributor that are necessarily infringed by their

+ Contribution(s) alone or by combination of their Contribution(s)

+ with the Work to which such Contribution(s) was submitted. If You

+ institute patent litigation against any entity (including a

+ cross-claim or counterclaim in a lawsuit) alleging that the Work

+ or a Contribution incorporated within the Work constitutes direct

+ or contributory patent infringement, then any patent licenses

+ granted to You under this License for that Work shall terminate

+ as of the date such litigation is filed.

+

+ 4. Redistribution. You may reproduce and distribute copies of the

+ Work or Derivative Works thereof in any medium, with or without

+ modifications, and in Source or Object form, provided that You

+ meet the following conditions:

+

+ (a) You must give any other recipients of the Work or

+ Derivative Works a copy of this License; and

+

+ (b) You must cause any modified files to carry prominent notices

+ stating that You changed the files; and

+

+ (c) You must retain, in the Source form of any Derivative Works

+ that You distribute, all copyright, patent, trademark, and

+ attribution notices from the Source form of the Work,

+ excluding those notices that do not pertain to any part of

+ the Derivative Works; and

+

+ (d) If the Work includes a "NOTICE" text file as part of its

+ distribution, then any Derivative Works that You distribute must

+ include a readable copy of the attribution notices contained

+ within such NOTICE file, excluding those notices that do not

+ pertain to any part of the Derivative Works, in at least one

+ of the following places: within a NOTICE text file distributed

+ as part of the Derivative Works; within the Source form or

+ documentation, if provided along with the Derivative Works; or,

+ within a display generated by the Derivative Works, if and

+ wherever such third-party notices normally appear. The contents

+ of the NOTICE file are for informational purposes only and

+ do not modify the License. You may add Your own attribution

+ notices within Derivative Works that You distribute, alongside

+ or as an addendum to the NOTICE text from the Work, provided

+ that such additional attribution notices cannot be construed

+ as modifying the License.

+

+ You may add Your own copyright statement to Your modifications and

+ may provide additional or different license terms and conditions

+ for use, reproduction, or distribution of Your modifications, or

+ for any such Derivative Works as a whole, provided Your use,

+ reproduction, and distribution of the Work otherwise complies with

+ the conditions stated in this License.

+

+ 5. Submission of Contributions. Unless You explicitly state otherwise,

+ any Contribution intentionally submitted for inclusion in the Work

+ by You to the Licensor shall be under the terms and conditions of

+ this License, without any additional terms or conditions.

+ Notwithstanding the above, nothing herein shall supersede or modify

+ the terms of any separate license agreement you may have executed

+ with Licensor regarding such Contributions.

+

+ 6. Trademarks. This License does not grant permission to use the trade

+ names, trademarks, service marks, or product names of the Licensor,

+ except as required for reasonable and customary use in describing the

+ origin of the Work and reproducing the content of the NOTICE file.

+

+ 7. Disclaimer of Warranty. Unless required by applicable law or

+ agreed to in writing, Licensor provides the Work (and each

+ Contributor provides its Contributions) on an "AS IS" BASIS,

+ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

+ implied, including, without limitation, any warranties or conditions

+ of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

+ PARTICULAR PURPOSE. You are solely responsible for determining the

+ appropriateness of using or redistributing the Work and assume any

+ risks associated with Your exercise of permissions under this License.

+

+ 8. Limitation of Liability. In no event and under no legal theory,

+ whether in tort (including negligence), contract, or otherwise,

+ unless required by applicable law (such as deliberate and grossly

+ negligent acts) or agreed to in writing, shall any Contributor be

+ liable to You for damages, including any direct, indirect, special,

+ incidental, or consequential damages of any character arising as a

+ result of this License or out of the use or inability to use the

+ Work (including but not limited to damages for loss of goodwill,

+ work stoppage, computer failure or malfunction, or any and all

+ other commercial damages or losses), even if such Contributor

+ has been advised of the possibility of such damages.

+

+ 9. Accepting Warranty or Additional Liability. While redistributing

+ the Work or Derivative Works thereof, You may choose to offer,

+ and charge a fee for, acceptance of support, warranty, indemnity,

+ or other liability obligations and/or rights consistent with this

+ License. However, in accepting such obligations, You may act only

+ on Your own behalf and on Your sole responsibility, not on behalf

+ of any other Contributor, and only if You agree to indemnify,

+ defend, and hold each Contributor harmless for any liability

+ incurred by, or claims asserted against, such Contributor by reason

+ of your accepting any such warranty or additional liability.

+

+ END OF TERMS AND CONDITIONS

+

+ APPENDIX: How to apply the Apache License to your work.

+

+ To apply the Apache License to your work, attach the following

+ boilerplate notice, with the fields enclosed by brackets "[]"

+ replaced with your own identifying information. (Don't include

+ the brackets!) The text should be enclosed in the appropriate

+ comment syntax for the file format. We also recommend that a

+ file or class name and description of purpose be included on the

+ same "printed page" as the copyright notice for easier

+ identification within third-party archives.

+

+ Copyright [yyyy] [name of copyright owner]

+

+ Licensed under the Apache License, Version 2.0 (the "License");

+ you may not use this file except in compliance with the License.

+ You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+ Unless required by applicable law or agreed to in writing, software

+ distributed under the License is distributed on an "AS IS" BASIS,

+ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ See the License for the specific language governing permissions and

+ limitations under the License.

diff --git a/licensing/NOTICE.txt b/licensing/NOTICE.txt

new file mode 100644

index 00000000..d2fbb4a6

--- /dev/null

+++ b/licensing/NOTICE.txt

@@ -0,0 +1,13 @@

+ Copyright [2020-]2022 Linked Data Benchmark Council

+

+ Licensed under the Apache License, Version 2.0 (the "License");

+ you may not use this file except in compliance with the License.

+ You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+ Unless required by applicable law or agreed to in writing, software

+ distributed under the License is distributed on an "AS IS" BASIS,

+ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ See the License for the specific language governing permissions and

+ limitations under the License.

\ No newline at end of file

diff --git a/licensing/ldbc-data-set-license.txt b/licensing/ldbc-data-set-license.txt

new file mode 100644

index 00000000..6da393e7

--- /dev/null

+++ b/licensing/ldbc-data-set-license.txt

@@ -0,0 +1,5 @@

+# LDBC Data set license (2022/03/15)

+

+LDBC BENCHMARK is a registered trademark of Linked Data Benchmark Council (LDBC). LDBC wishes to avoid mistaken or false claims that performance test results are LDBC benchmark test results. We are working on developing a policy on fair use of the term "LDBC benchmark" when reporting performance test results, backed up by the grant of trademark licences, or a commitment not to pursue those who follow our fair use policies for trademark infringement.

+

+In the interim, you are not licensed to refer to any presentation of the results of running benchmark tests using these data sets using the words "LDBC benchmark" or anything that is likely to be reasonably construed as being equivalent in meaning to the words "LDBC benchmark". However, if you contact LDBC at info@ldbcouncil.org then we will discuss with you a form of words to describe or characterize any test results you produce in a way that accurately states the relationship of any report, presentation or other publication of those results to LDBC benchmark results, and which will avoid the possibility of you infringing our trademark inadvertently.

diff --git a/organizational-members/index.html b/organizational-members/index.html

new file mode 100644

index 00000000..b4671dc9

--- /dev/null

+++ b/organizational-members/index.html

@@ -0,0 +1,560 @@

+

+

+

+

+

Organizational Members

+

Organizational Members

+

Sponsor companies

+

+

+

+

Ant Group

+

+

+

Beijing Volcano Engine Technology Co.

+

+

+

+

+

+

+

+

Companies

+

Non-commercial institutes

+

\ No newline at end of file

diff --git a/pages/index.html b/pages/index.html

new file mode 100644

index 00000000..0c9d8ae0

--- /dev/null

+++ b/pages/index.html

@@ -0,0 +1,777 @@

+

+

+

+

+

Pages

+

Pages

+

Tags:

+ BENCHMARK

+ , SNB

+

+

+

+

+

We are happy to announce new audited results for the SNB Interactive workload, achieved by the open-source GraphScope Flex system.

+

The current audit of the system has broken several records:

+

+- It achieved 130.1k ops/s on scale factor 100, compared to the previous record of 48.8k ops/s.

+- It achieved 131.3k ops/s on scale factor 300, compared to the previous record of 48.3k ops/s.

+- It is the first system to successfully complete the benchmark on …

+

+

+

+

+

+

+

+

+

+

+

Following the publication of ISO/IEC GQL (graph query language) in April 2024, LDBC today launches open-source language engineering tools to help implementers, and assist in generation of code examples and tests for the GQL language. See this announcement from Alastair Green, Vice-chair of LDBC.

+

These tools are the work of the LDBC GQL Implementation Working Group, headed up by Michael Burbidge. Damian Wileński and Dominik Tomaszuk have worked …

+

+

+

+

+

+

+

+

+

+

+

Tags:

+ FINBENCH

+

+

+

+

+

We are delighted to announce the official release of the initial version (v0.1.0) of Financial Benchmark (FinBench).

+

The Financial Benchmark (FinBench) project defines a graph database benchmark targeting financial scenarios such as anti-fraud and risk control. It is maintained by the LDBC FinBench Task Force. The benchmark has one workload currently, Transaction Workload, capturing OLTP scenario with complex read queries that access the …

+

+

+

+

+

+

+

+

+

+

+

Tags:

+ DATAGEN

+ , SNB

+

+

+

+

+

+

+

+

+

+

+

+

Tags:

+ DATAGEN

+ , SNB

+

+

+

+

+

LDBC SNB provides a data generator, which produces synthetic datasets, mimicking a social network’s activity during a period of time. Datagen is defined by the characteristics of realism, scalability, determinism and usability. More than two years have elapsed since my last technical update on LDBC SNB Datagen, in which I discussed the reasons for moving the code to Apache Spark from the MapReduce-based Apache Hadoop implementation and the …

+

\ No newline at end of file

diff --git a/pages/index.xml b/pages/index.xml

new file mode 100644

index 00000000..bc7b14ac

--- /dev/null

+++ b/pages/index.xml

@@ -0,0 +1,5468 @@

+

+

+

+ Pages on Linked Data Benchmark Council

+ https://ldbcouncil.org/pages/

+ Recent content in Pages on Linked Data Benchmark Council

+ Hugo -- gohugo.io

+ en-us

+ © Copyright LDBC 2024

+ Fri, 03 May 2024 00:00:00 +0000

+ -

+ Eighteenth TUC Meeting

+ https://ldbcouncil.org/event/eighteenth-tuc-meeting/

+ Fri, 30 Aug 2024 09:00:00 -0800

+

+ https://ldbcouncil.org/event/eighteenth-tuc-meeting/

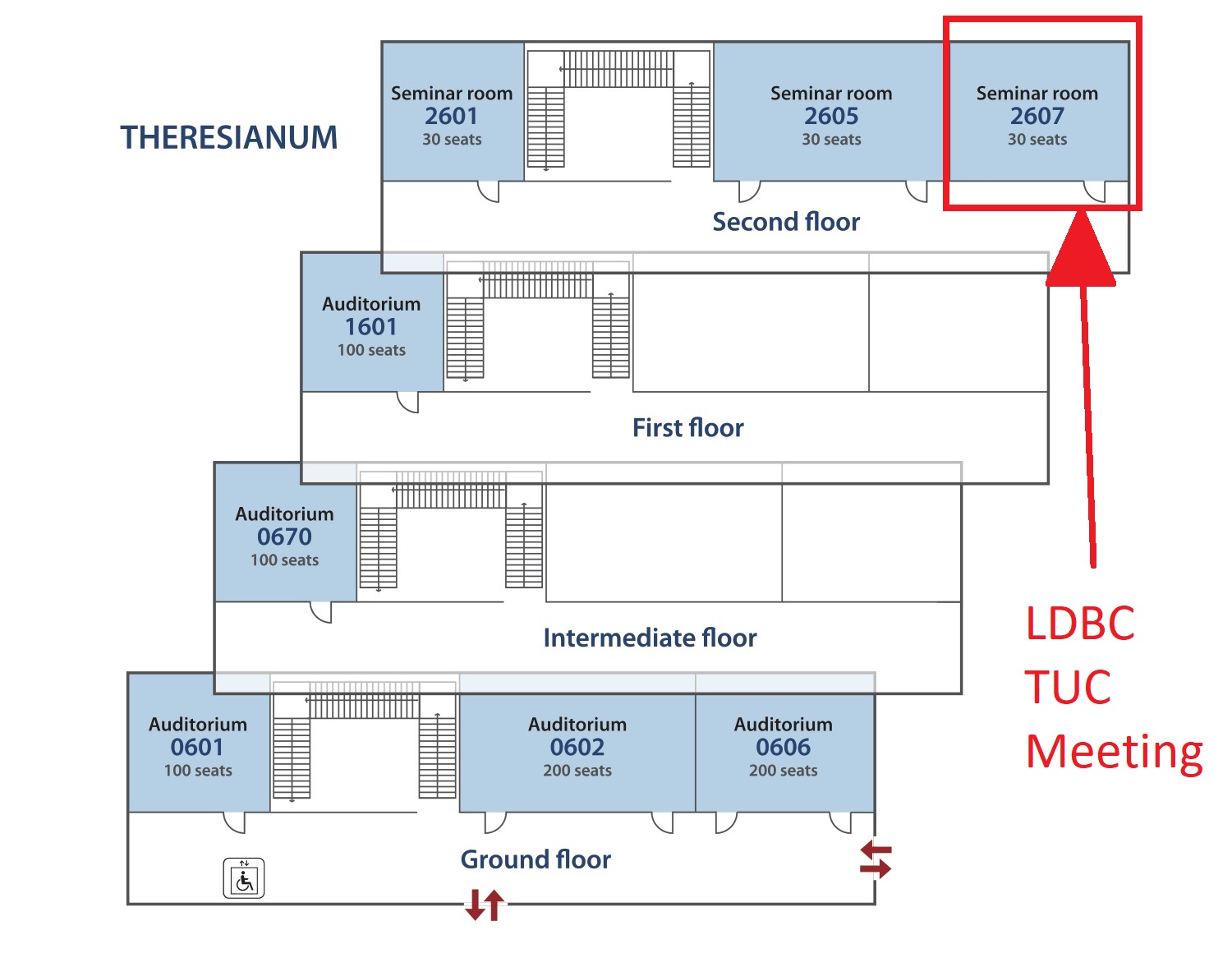

+ <p><strong>Organizers:</strong> Shipeng Qi (AntGroup), Wenyuan Yu (Alibaba Demo), Yan Zhou (CreateLink)</p>

+<p>LDBC is hosting a <strong>two-day</strong> hybrid workshop, co-located in <strong>Guangzhou</strong> with <a href="https://vldb.org/2024/">VLDB 2024</a> on <strong>August 30-31 (Friday-Saturday)</strong>.</p>

+<p>The program consists of 10- and 15-minute talks followed by a Q&A session. The talks will be recorded and made available online. <strong>If you would like to participate please register using <a href="https://forms.gle/aVPrrcxXpSwrWPnh6">our form</a>.</strong></p>

+<h3 id="program">Program</h3>

+<p><strong>All times are in PDT.</strong></p>

+<h4 id="august-30-friday">August 30, Friday</h4>

+<p><strong>Location:</strong> <a href="https://www.langhamhotels.com/en/the-langham/guangzhou/">Langham Place</a>, Guangzhou, <strong>room 1</strong>,<br>

+co-located with VLDB (No. 630-638 Xingang Dong Road, Haizhu District, Guangzhou, China). See the map <a href="https://maps.app.goo.gl/86jD3Dy9Aa7bwLs36">here</a>.</p>

+<p><strong>Agenda:</strong> TBA</p>

+<h4 id="august-31-saturday">August 31, Saturday</h4>

+<p><strong>Location:</strong> Alibaba Center, Guangzhou (No. 88 Dingxin Road, Haizhu District, Guangzhou, China), near the VLDB venue at Langham Place. See the map <a href="https://maps.app.goo.gl/HgEVafZMRmrzUsgW8">here</a>.</p>

+<p><strong>Agenda:</strong> TBA</p>

+<h4 id="tuc-event-locations">TUC event locations</h4>

+<p>A <a href="https://www.google.com/maps/d/u/0/edit?mid=19_fi4fV-3-PZkNWCCcmhU86ct2EZXbgo">map of the LDBC TUC events</a> we have hosted so far.</p>

+

+

+

+ -

+ Seventeenth TUC Meeting

+ https://ldbcouncil.org/event/seventeenth-tuc-meeting/

+ Sun, 09 Jun 2024 09:00:00 -0400

+

+ https://ldbcouncil.org/event/seventeenth-tuc-meeting/

+ <p><strong>Organizers:</strong> Renzo Angles, Sebastián Ferrada</p>

+<p>LDBC is hosting a one-day in-person workshop, co-located in <strong>Santiago de Chile</strong> with <a href="https://2024.sigmod.org/venue.shtml">SIGMOD 2024</a> on <strong>June 9 (Sunday)</strong>.</p>

+<p>The workshop will be held in the <strong>Hotel Plaza El Bosque Ebro</strong> (<a href="https://www.plazaelbosque.cl">https://www.plazaelbosque.cl</a>), which is two blocks away from SIGMOD’s venue. See the map <a href="https://maps.app.goo.gl/78oiw3zo2pH3gy5R6">here</a>.</p>

+<p><strong>If you would like to participate, please register using <a href="https://forms.gle/XXgaQfwBZAMMZJb78">this form</a>.</strong></p>

+<h3 id="program">Program</h3>

+<p><strong>All times are in Chile time (GMT-4).</strong></p>

+<p><strong>Each speaker will have 20 minutes for exposition plus 5 minutes for questions.</strong></p>

+<table>

+<thead>

+<tr>

+<th>Time</th>

+<th>Speaker</th>

+<th>Title</th>

+</tr>

+</thead>

+<tbody>

+<tr>

+<td>09:00</td>

+<td>Welcome</td>

+<td>“Canelo” saloon</td>

+</tr>

+<tr>

+<td>09:30</td>

+<td>Alastair Green (LDBC Vice-chair)</td>

+<td>Status of the LDBC Extended GQL Schema Working Group</td>

+</tr>

+<tr>

+<td>10:00</td>

+<td>Hannes Voigt (Neo4j)</td>

+<td>Inside the Standardization Machine Room: How ISO/IEC 39075:2024 – GQL was produced</td>

+</tr>

+<tr>

+<td>10:30</td>

+<td>Calin Iorgulescu (Oracle)</td>

+<td>PGX.D: Distributed graph processing engine</td>

+</tr>

+<tr>

+<td>11:00</td>

+<td>Coffee break</td>

+<td></td>

+</tr>

+<tr>

+<td>11:30</td>

+<td>Ricky Sun (Ultipa, Inc.)</td>

+<td>A Unified Graph Framework with SCC (Storage-Compute Coupled) and HDC (High-Density Computing) Clustering</td>

+</tr>

+<tr>

+<td>12:00</td>

+<td>Daan de Graaf (TU Eindhoven)</td>

+<td>Algorithm Support in a Graph Database, Done Right</td>

+</tr>

+<tr>

+<td>12:30</td>

+<td>Angela Bonifati (Lyon 1 University and IUF, France)</td>

+<td>Transforming Property Graphs</td>

+</tr>

+<tr>

+<td>13:00</td>

+<td>Brunch</td>

+<td></td>

+</tr>

+<tr>

+<td>14:00</td>

+<td>Juan Sequeda (data.world)</td>

+<td>A Benchmark to Understand the Role of Knowledge Graphs on Large Language Model’s Accuracy for Question Answering on Enterprise SQL Databases</td>

+</tr>

+<tr>

+<td>14:30</td>

+<td>Olaf Hartig (Linköping University)</td>

+<td>FedShop: A Benchmark for Testing the Scalability of SPARQL Federation Engines</td>

+</tr>

+<tr>

+<td>15:00</td>

+<td>Olaf Hartig (Amazon)</td>

+<td>Datatypes for Lists and Maps in RDF Literals</td>

+</tr>

+<tr>

+<td>15:30</td>

+<td>Peter Boncz (CWI and MotherDuck)</td>

+<td>The state of DuckPGQ</td>

+</tr>

+<tr>

+<td>16:00</td>

+<td>Coffee break</td>

+<td></td>

+</tr>

+<tr>

+<td>16:30</td>

+<td>Juan Reutter (IMFD and PUC Chile)</td>

+<td>MillenniumDB: A Persistent, Open-Source, Graph Database</td>

+</tr>

+<tr>

+<td>17:00</td>

+<td>Carlos Rojas (IMFD)</td>

+<td>WDBench: A Wikidata Graph Query Benchmark</td>

+</tr>

+<tr>

+<td>17:30</td>

+<td>Sebastián Ferrada (IMFD and Univ. de Chile)</td>

+<td>An algebra for evaluating path queries</td>

+</tr>

+<tr>

+<td>19:30</td>

+<td>Dinner</td>

+<td></td>

+</tr>

+</tbody>

+</table>

+

+

+

+ -

+ Record-Breaking SNB Interactive Results for GraphScope

+ https://ldbcouncil.org/post/record-breaking-snb-interactive-results-for-graphscope/

+ Sun, 26 May 2024 00:00:00 +0000

+

+ https://ldbcouncil.org/post/record-breaking-snb-interactive-results-for-graphscope/

+ <p>We are happy to announce new <a href="https://ldbcouncil.org/benchmarks/snb-interactive/">audited results for the SNB Interactive workload</a>, achieved by the open-source <a href="https://github.com/alibaba/GraphScope">GraphScope Flex</a> system.</p>

+<p>The current audit of the system has broken several records:</p>

+<ul>

+<li>It achieved 130.1k ops/s on scale factor 100, compared to the previous record of 48.8k ops/s.</li>

+<li>It achieved 131.3k ops/s on scale factor 300, compared to the previous record of 48.3k ops/s.</li>

+<li>It is the first system to successfully complete the benchmark on scale factor 1000, where it achieved a throughput of 127.8k ops/s.</li>

+</ul>

+<p>The audit was commissioned by <a href="https://www.alibabacloud.com/">Alibaba Cloud</a> and was conducted by <a href="https://www.linkedin.com/in/arnau-prat-a70283bb/">Dr. Arnau Prat-Pérez</a>, one of the original authors of the SNB Interactive benchmark. The queries were implemented as C++ stored procedures and the benchmark was executed on Alibaba Cloud’s infrastructure. The <a href="https://ldbcouncil.org/benchmarks/snb/LDBC_SNB_I_20240514_SF100-300-1000_graphscope-executive_summary.pdf">executive summary</a>, <a href="https://ldbcouncil.org/benchmarks/snb/LDBC_SNB_I_20240514_SF100-300-1000_graphscope.pdf">full disclosure report</a>, and <a href="https://pub-383410a98aef4cb686f0c7601eddd25f.r2.dev/audits/LDBC_SNB_I_20240514_SF100-300-1000_graphscope-attachments.tar.gz">supplementary package</a> describe the benchmark’s steps and include instructions for reproduction.</p>

+<p>LDBC would like to congratulate the GraphScope Flex team on their record-breaking results.</p>

+<div align="center"><img src="https://ldbcouncil.org/images/graphscope.svg" width="200"></div>

+

+

+

+ -

+ Launching open-source language tools for ISO/IEC GQL

+ https://ldbcouncil.org/post/ldbc-announces-open-source-gql-tools/

+ Thu, 09 May 2024 00:00:00 +0000

+

+ https://ldbcouncil.org/post/ldbc-announces-open-source-gql-tools/

+ <p>Following the publication of ISO/IEC GQL (graph query language) in April 2024, LDBC today launches open-source language engineering tools to help implementers and to assist in the generation of code examples and tests for the GQL language. See this <a href="https://ldbcouncil.org/pages/opengql-announce">announcement from Alastair Green, Vice-chair of LDBC</a>.</p>

+<p>These tools are the work of the <strong>LDBC GQL Implementation Working Group</strong>, headed up by Michael Burbidge. Damian Wileński and Dominik Tomaszuk have worked with Michael to create these artefacts based on his ANTLR grammar for GQL.</p>

+

+

+

+ -

+ Announcing the Official Release of LDBC Financial Benchmark v0.1.0

+ https://ldbcouncil.org/post/announcing-the-official-release-of-ldbc-financial-benchmark/

+ Tue, 27 Jun 2023 00:00:00 +0000

+

+ https://ldbcouncil.org/post/announcing-the-official-release-of-ldbc-financial-benchmark/

+ <p>We are delighted to announce the official release of the initial version (v0.1.0) of <a href="https://ldbcouncil.org/benchmarks/finbench/">Financial Benchmark (FinBench)</a>.</p>

+<p>The Financial Benchmark (FinBench) project defines a graph database benchmark targeting financial scenarios such as anti-fraud and risk control. It is maintained by the <a href="https://ldbcouncil.org/benchmarks/finbench/ldbc-finbench-work-charter.pdf">LDBC FinBench Task Force</a>. The benchmark currently has one workload, the <strong>Transaction Workload</strong>, which captures an OLTP scenario with complex read queries that access the neighbourhood of a given node in the graph and write queries that continuously insert or delete data in the graph.</p>

+<p>Compared to LDBC SNB, FinBench differs in application scenarios, data patterns, and workloads, resulting in different schema characteristics, latency bounds, path filters, etc. For a brief overview, see the <a href="https://ldbcouncil.org/benchmarks/finbench/finbench-talk-16th-tuc.pdf">slides</a> from the 16th TUC. The <a href="https://arxiv.org/pdf/2306.15975.pdf">Financial Benchmark’s specification</a> can be found on arXiv.</p>

+<p>The release of the initial version of FinBench (v0.1.0) was approved by LDBC on June 23, 2023. It marks a good beginning for FinBench. Going forward, the FinBench Task Force will continue to polish the benchmark.</p>

+<p>If you are interested in joining the FinBench Task Force, please reach out at info at ldbcouncil.org or qishipeng.qsp at antgroup.com.</p>

+

+

+

+ -

+ Sixteenth TUC Meeting

+ https://ldbcouncil.org/event/sixteenth-tuc-meeting/

+ Fri, 23 Jun 2023 09:00:00 -0800

+

+ https://ldbcouncil.org/event/sixteenth-tuc-meeting/

+ <p><strong>Organizers:</strong> Oskar van Rest, Alastair Green, Gábor Szárnyas</p>

+<p>LDBC is hosting a <strong>two-day</strong> hybrid workshop, co-located with <a href="https://2023.sigmod.org/venue.shtml">SIGMOD 2023</a> on <strong>June 23-24 (Friday-Saturday)</strong>.</p>

+<p>The program consists of 10- and 15-minute talks followed by a Q&A session. The talks will be recorded and made available online. <strong>If you would like to participate, please register using <a href="https://forms.gle/T6bwVHzK9V5FaKyR9">our form</a>.</strong></p>

+<p>LDBC will host a <strong>social event</strong> on Friday at the <a href="https://www.blackbottleseattle.com/">Black Bottle gastrotavern</a> in Belltown: <a href="https://goo.gl/maps/hQzBRR2nerZEQExw7">2600 1st Ave (on the corner of Vine), Seattle, WA 98121</a>.</p>

+<p>In addition, AWS will host a <strong>Happy Hour</strong> (rooftop grill with beverages) on Saturday on the Amazon Nitro South building’s 8th floor deck: <a href="https://goo.gl/maps/md5kWUHaNUGhR9JB7">2205 8th Ave, Seattle, WA 98121</a>.</p>

+<h3 id="program">Program</h3>

+<p><strong>All times are in PDT.</strong></p>

+<h4 id="friday">Friday</h4>

+<p><strong>Location:</strong> Hyatt Regency Bellevue on Seattle’s Eastside, <strong>room Grand K</strong>, co-located with SIGMOD (<a href="https://www.hyatt.com/en-US/hotel/washington/hyatt-regency-bellevue-on-seattles-eastside/belle">900 Bellevue Way NE, Bellevue, WA 98004-4272</a>)</p>

+<table>

+<thead>

+<tr>

+<th>start</th>

+<th>finish</th>

+<th>speaker</th>

+<th>title</th>

+</tr>

+</thead>

+<tbody>

+<tr>

+<td>08:30</td>

+<td>08:45</td>

+<td>Oskar van Rest (Oracle)</td>

+<td>LDBC – State of the union – <a href="https://pub-383410a98aef4cb686f0c7601eddd25f.r2.dev/event/sixteenth-tuc-meeting/attachments/oskar-van-rest-ldbc-state-of-the-union.pdf">slides</a>, <a href="https://youtu.be/Frk7ITssaSY">video</a></td>

+</tr>

+<tr>

+<td>08:50</td>

+<td>09:05</td>

+<td>Keith Hare (JCC / WG3)</td>

+<td>An update on the GQL & SQL/PGQ standards efforts – <a href="https://pub-383410a98aef4cb686f0c7601eddd25f.r2.dev/event/sixteenth-tuc-meeting/attachments/keith-hare-an-update-on-the-gql-and-sql-pgq-standards-efforts.pdf">slides</a>, <a href="https://youtu.be/LQYkal_0j6E">video</a></td>

+</tr>

+<tr>

+<td>09:10</td>

+<td>09:25</td>

+<td>Stefan Plantikow (Neo4j / WG3)</td>

+<td>GQL - Introduction to a new query language standard – <a href="https://pub-383410a98aef4cb686f0c7601eddd25f.r2.dev/event/sixteenth-tuc-meeting/attachments/stefan-plantikow-gql-v1.pdf">slides</a></td>

+</tr>

+<tr>

+<td>09:30</td>

+<td>09:45</td>

+<td>Leonid Libkin (University of Edinburgh & RelationalAI)</td>

+<td>Formalizing GQL – <a href="https://pub-383410a98aef4cb686f0c7601eddd25f.r2.dev/event/sixteenth-tuc-meeting/attachments/leonid-libkin-formalizing-gql.pdf">slides</a>, <a href="https://youtu.be/YZE1a00h1I4">video</a></td>

+</tr>

+<tr>

+<td>09:50</td>

+<td>10:05</td>

+<td>Semen Panenkov (JetBrains Research)</td>

+<td>Mechanizing the GQL semantics in Coq – <a href="https://pub-383410a98aef4cb686f0c7601eddd25f.r2.dev/event/sixteenth-tuc-meeting/attachments/semyon-panenkov-gql-in-coq.pdf">slides</a>, <a href="https://youtu.be/5xBGohqWCzo">video</a></td>

+</tr>

+<tr>

+<td>10:10</td>

+<td>10:25</td>

+<td>Oskar van Rest (Oracle)</td>

+<td>SQL Property Graphs in Oracle Database and Oracle Graph Server (PGX) – <a href="https://pub-383410a98aef4cb686f0c7601eddd25f.r2.dev/event/sixteenth-tuc-meeting/attachments/oskar-van-rest-sql-property-graphs-in-oracle-database-and-oracle-graph-server-pgx.pdf">slides</a>, <a href="https://youtu.be/owM9WiQubpg">video</a></td>

+</tr>

+<tr>

+<td>10:30</td>

+<td>11:00</td>

+<td><em>coffee break</em></td>

+<td></td>

+</tr>

+<tr>

+<td>11:00</td>

+<td>11:15</td>

+<td>Alastair Green (JCC)</td>

+<td>LDBC’s organizational changes and fair use policies – <a href="https://pub-383410a98aef4cb686f0c7601eddd25f.r2.dev/event/sixteenth-tuc-meeting/attachments/alastair-green-ldbc-corporate-restructuring-and-fair-use-policies.pdf">slides</a></td>

+</tr>

+<tr>

+<td>11:20</td>

+<td>11:35</td>

+<td>Ioana Manolescu (INRIA)</td>

+<td>Integrating Connection Search in Graph Queries – <a href="https://pub-383410a98aef4cb686f0c7601eddd25f.r2.dev/event/sixteenth-tuc-meeting/attachments/ioana-manolescu-integrating-connection-search-in-graph-queries.pdf">slides</a>, <a href="https://youtu.be/LQPnmcrkUpY">video</a></td>

+</tr>

+<tr>

+<td>11:40</td>

+<td>11:55</td>

+<td>Maciej Besta (ETH Zurich)</td>

+<td>Neural Graph Databases with Graph Neural Networks – <a href="https://youtu.be/ce5qNievRNs">video</a></td>

+</tr>

+<tr>

+<td>12:00</td>

+<td>12:10</td>

+<td>Longbin Lai (Alibaba Damo Academy)</td>

+<td>To Revisit Benchmarking Graph Analytics – <a href="https://pub-383410a98aef4cb686f0c7601eddd25f.r2.dev/event/sixteenth-tuc-meeting/attachments/longbin-lai-benchmark-ldbc.pdf">slides</a>, <a href="https://youtu.be/s9Vtt-6t_FI">video</a></td>

+</tr>

+<tr>

+<td>12:15</td>

+<td>13:30</td>

+<td><em>lunch</em></td>

+<td></td>

+</tr>

+<tr>

+<td>13:30</td>

+<td>13:45</td>

+<td>Yuanyuan Tian (Gray Systems Lab, Microsoft)</td>

+<td>The World of Graph Databases from An Industry Perspective – <a href="https://pub-383410a98aef4cb686f0c7601eddd25f.r2.dev/event/sixteenth-tuc-meeting/attachments/yuanyuan-tian-world-of-graph-databases.pdf">slides</a>, <a href="https://youtu.be/AZuP_b95GPM">video</a></td>

+</tr>

+<tr>

+<td>13:50</td>

+<td>14:05</td>

+<td>Alin Deutsch (UC San Diego & TigerGraph)</td>

+<td>TigerGraph’s Parallel Computation Model – <a href="https://pub-383410a98aef4cb686f0c7601eddd25f.r2.dev/event/sixteenth-tuc-meeting/attachments/alin-deutsch-tigergraphs-computation-model.pdf">slides</a>, <a href="https://youtu.be/vcxdieJB80Y">video</a></td>

+</tr>

+<tr>

+<td>14:10</td>

+<td>14:25</td>

+<td>Chen Zhang (CreateLink)</td>

+<td>Applications of a Native Distributed Graph Database in the Financial Industry – <a href="https://youtu.be/GCCT79Sps9I">video</a></td>

+</tr>

+<tr>

+<td>14:30</td>

+<td>14:45</td>

+<td>Ricky Sun (Ultipa)</td>

+<td>Design of highly scalable graph database systems – <a href="https://pub-383410a98aef4cb686f0c7601eddd25f.r2.dev/event/sixteenth-tuc-meeting/attachments/ricky-sun-ultipa.pdf">slides</a>, <a href="https://youtu.be/Sg1F64O4vGM">video</a></td>

+</tr>

+<tr>

+<td>14:50</td>

+<td>15:30</td>

+<td><em>coffee break</em></td>

+<td></td>

+</tr>

+<tr>

+<td>15:30</td>

+<td>15:45</td>

+<td>Heng Lin (Ant Group)</td>

+<td>The LDBC SNB implementation in TuGraph – <a href="https://pub-383410a98aef4cb686f0c7601eddd25f.r2.dev/event/sixteenth-tuc-meeting/attachments/heng-lin-the-ldbc-snb-implementation-in-tugraph.pdf">slides</a>, <a href="https://youtu.be/fy8AuVerwnY">video</a></td>

+</tr>

+<tr>

+<td>15:50</td>

+<td>16:05</td>

+<td>Shipeng Qi (Ant Group)</td>

+<td>FinBench: The new LDBC benchmark targeting financial scenario – <a href="https://pub-383410a98aef4cb686f0c7601eddd25f.r2.dev/event/sixteenth-tuc-meeting/attachments/shipeng-qi-finbench.pdf">slides</a>, <a href="https://youtu.be/0xLZadDOfZk">video</a></td>

+</tr>

+<tr>

+<td>16:10</td>

+<td>17:00</td>

+<td>host: Heng Lin (Ant Group), panelists: Longbin Lai (Alibaba Damo Academy), Ricky Sun (Ultipa), Gabor Szarnyas (CWI), Yuanyuan Tian (Gray Systems Lab, Microsoft)</td>

+<td>FinBench panel – <a href="https://pub-383410a98aef4cb686f0c7601eddd25f.r2.dev/event/sixteenth-tuc-meeting/attachments/heng-lin-finbench-panel.pdf">slides</a></td>

+</tr>

+<tr>

+<td>19:00</td>

+<td>22:00</td>

+<td><em>dinner</em></td>

+<td><em><a href="https://www.blackbottleseattle.com/">Black Bottle gastrotavern</a> in Belltown: <a href="https://goo.gl/maps/hQzBRR2nerZEQExw7">2600 1st Ave (on the corner of Vine), Seattle, WA 98121</a></em></td>

+</tr>

+</tbody>

+</table>

+<h4 id="saturday">Saturday</h4>

+<p><strong>Location:</strong> Amazon Nitro South building, <strong>room 03.204</strong> (<a href="https://goo.gl/maps/md5kWUHaNUGhR9JB7">2205 8th Ave, Seattle, WA 98121</a>)</p>

+<table>

+<thead>

+<tr>

+<th>start</th>

+<th>finish</th>

+<th>speaker</th>

+<th>title</th>

+</tr>

+</thead>

+<tbody>

+<tr>

+<td>09:00</td>

+<td>09:45</td>

+<td>Brad Bebee (AWS)</td>

+<td>Customers don’t want a graph database, so why are we still here? – <a href="https://pub-383410a98aef4cb686f0c7601eddd25f.r2.dev/event/sixteenth-tuc-meeting/attachments/brad-bebee-tuc-keynote.pdf">slides</a>, <a href="https://youtu.be/bJlkpDC--fM">video</a></td>

+</tr>

+<tr>

+<td>10:00</td>

+<td>10:15</td>

+<td>Muhammad Attahir Jibril (TU Ilmenau)</td>

+<td>Fast and Efficient Update Handling for Graph H2TAP – <a href="https://pub-383410a98aef4cb686f0c7601eddd25f.r2.dev/event/sixteenth-tuc-meeting/attachments/muhammad-attahir-jibril-fast-and-efficient-update-handling-for-graph-h2tap.pdf">slides</a>, <a href="https://youtu.be/e8ZAszBsXV0">video</a></td>

+</tr>

+<tr>

+<td>10:20</td>

+<td>11:00</td>

+<td><em>coffee break</em></td>

+<td></td>

+</tr>

+<tr>

+<td>11:00</td>

+<td>11:15</td>

+<td>Gabor Szarnyas (CWI)</td>

+<td>LDBC Social Network Benchmark and Graphalytics – <a href="https://pub-383410a98aef4cb686f0c7601eddd25f.r2.dev/event/sixteenth-tuc-meeting/attachments/gabor-szarnyas-ldbc-social-network-benchmark-and-graphalytics.pdf">slides</a></td>

+</tr>

+<tr>

+<td>11:20</td>

+<td>11:30</td>

+<td>Atanas Kiryakov and Tomas Kovachev (Ontotext)</td>

+<td>GraphDB – Benchmarking against LDBC SNB & SPB – <a href="https://pub-383410a98aef4cb686f0c7601eddd25f.r2.dev/event/sixteenth-tuc-meeting/attachments/tomas-kovatchev-atanas-kiryakov-benchmarking-graphdb-with-snb-and-spb.pdf">slides</a>, <a href="https://youtu.be/U6OPpNFOWqg">video</a></td>

+</tr>

+<tr>

+<td>11:35</td>

+<td>11:50</td>

+<td>Roi Lipman (Redis Labs)</td>

+<td>Delta sparse matrices within RedisGraph – <a href="https://pub-383410a98aef4cb686f0c7601eddd25f.r2.dev/event/sixteenth-tuc-meeting/attachments/roi-lipman-delta-matrix.pdf">slides</a>, <a href="https://youtu.be/qfKsplV4Ihk">video</a></td>

+</tr>

+<tr>

+<td>11:55</td>

+<td>12:05</td>

+<td>Rathijit Sen (Microsoft)</td>

+<td>Microarchitectural Analysis of Graph BI Queries on RDBMS – <a href="https://pub-383410a98aef4cb686f0c7601eddd25f.r2.dev/event/sixteenth-tuc-meeting/attachments/rathijit-sen-microarchitectural-analysis.pdf">slides</a>, <a href="https://youtu.be/55B8CkH09js">video</a></td>

+</tr>

+<tr>

+<td>12:10</td>

+<td>13:30</td>

+<td><em>lunch</em></td>

+<td><em>on your own</em></td>

+</tr>

+<tr>

+<td>13:30</td>

+<td>13:45</td>

+<td>Alastair Green (JCC)</td>

+<td>LEX – LDBC Extended GQL Schema – <a href="https://pub-383410a98aef4cb686f0c7601eddd25f.r2.dev/event/sixteenth-tuc-meeting/attachments/alastair-green-lex.pdf">slides</a>, <a href="https://youtu.be/DVpeb4Ce9Uw">video</a></td>

+</tr>

+<tr>

+<td>13:50</td>

+<td>14:05</td>

+<td>Ora Lassila (AWS)</td>

+<td>Why limit yourself to {RDF, LPG} when you can do {RDF, LPG}, too – <a href="https://pub-383410a98aef4cb686f0c7601eddd25f.r2.dev/event/sixteenth-tuc-meeting/attachments/ora-lassila-why-limit-yourself-to-lpg-when-you-can-do-rdf-too.pdf">slides</a>, <a href="https://youtu.be/7uAInoUwdds">video</a></td>

+</tr>

+<tr>

+<td>14:10</td>

+<td>14:25</td>

+<td>Jan Hidders (Birkbeck, University of London)</td>

+<td>PG-Schema: a proposal for a schema language for property graphs – <a href="https://pub-383410a98aef4cb686f0c7601eddd25f.r2.dev/event/sixteenth-tuc-meeting/attachments/jan-hidders-pg-schema.pdf">slides</a>, <a href="https://youtu.be/yQNL8hBTE4M">video</a></td>

+</tr>

+<tr>

+<td>14:30</td>

+<td>14:45</td>

+<td>Max de Marzi (RageDB and RelationalAI)</td>

+<td>RageDB: Building a Graph Database in Anger – <a href="https://pub-383410a98aef4cb686f0c7601eddd25f.r2.dev/event/sixteenth-tuc-meeting/attachments/max-de-marzi-ragedb-building-a-graph-database-in-anger.pdf">slides</a>, <a href="https://youtu.be/LBbF8aslYFE">video</a></td>

+</tr>

+<tr>

+<td>14:50</td>

+<td>15:30</td>

+<td><em>coffee break</em></td>

+<td></td>

+</tr>

+<tr>

+<td>15:30</td>

+<td>15:45</td>

+<td>Umit Catalyurek (AWS)</td>

+<td>HPC Graph Analytics on the OneGraph Model – <a href="https://pub-383410a98aef4cb686f0c7601eddd25f.r2.dev/event/sixteenth-tuc-meeting/attachments/umit-catalyurek-onegraph-hpc.pdf">slides</a>, <a href="https://youtu.be/64tv5LA6Wr8">video</a></td>

+</tr>

+<tr>

+<td>15:50</td>

+<td>16:05</td>

+<td>David J. Haglin (Trovares)</td>

+<td>How LDBC impacts Trovares – <a href="https://pub-383410a98aef4cb686f0c7601eddd25f.r2.dev/event/sixteenth-tuc-meeting/attachments/david-haglin-trovares.pdf">slides</a>, <a href="">video</a></td>

+</tr>

+<tr>

+<td>16:10</td>

+<td>16:25</td>

+<td>Wenyuan Yu (Alibaba Damo Academy)</td>

+<td>GraphScope Flex: A Graph Computing Stack with LEGO-Like Modularity – <a href="https://pub-383410a98aef4cb686f0c7601eddd25f.r2.dev/event/sixteenth-tuc-meeting/attachments/wenyuan-yu-graphscope-flex.pdf">slides</a>, <a href="https://youtu.be/cRikoyDmMks">video</a></td>

+</tr>

+<tr>

+<td>16:30</td>

+<td>16:40</td>

+<td>Scott McMillan (Carnegie Mellon University)</td>

+<td>Graph processing using GraphBLAS – <a href="https://pub-383410a98aef4cb686f0c7601eddd25f.r2.dev/event/sixteenth-tuc-meeting/attachments/scott-mcmillan-graph-processing-using-graphblas.pdf">slides</a>, <a href="https://youtu.be/yb4hGBhUzQQ">video</a></td>

+</tr>

+<tr>

+<td>16:45</td>

+<td>16:55</td>

+<td>Tim Mattson (Intel)</td>

+<td>Graphs (GraphBLAS) and storage (TileDB) as Sparse Linear algebra – <a href="https://pub-383410a98aef4cb686f0c7601eddd25f.r2.dev/event/sixteenth-tuc-meeting/attachments/tim-mattson-graphblas-and-tiledb.pdf">slides</a></td>

+</tr>

+<tr>

+<td>17:00</td>

+<td>20:00</td>

+<td><em>happy hour (rooftop grill with beverages)</em></td>

+<td><em>on the Nitro South building’s 8th floor deck</em></td>

+</tr>

+</tbody>

+</table>

+<h4 id="tuc-event-locations">TUC event locations</h4>

+<p>A <a href="https://www.google.com/maps/d/u/0/edit?mid=19_fi4fV-3-PZkNWCCcmhU86ct2EZXbgo">map of the LDBC TUC events</a> we have hosted so far.</p>

+

+

+

+ -

+ LDBC SNB – Early 2023 updates

+ https://ldbcouncil.org/post/ldbc-snb-early-2023-updates/

+ Wed, 15 Feb 2023 00:00:00 +0000

+

+ https://ldbcouncil.org/post/ldbc-snb-early-2023-updates/

+ <p>2023 has been an eventful year for us so far. Here is a summary of our recent activities.</p>

+<ol>

+<li>

+<p>Our paper <a href="https://ldbcouncil.org/docs/papers/ldbc-snb-bi-vldb-2022.pdf">The LDBC Social Network Benchmark: Business Intelligence Workload</a> was published in PVLDB.</p>

+</li>

+<li>

+<p>David Püroja just completed his MSc thesis on creating a design towards <a href="https://ldbcouncil.org/docs/papers/msc-thesis-david-puroja-snb-interactive-v2-2023.pdf">SNB Interactive v2</a> at CWI’s Database Architectures group. David and I gave a deep-dive talk at the FOSDEM conference’s graph developer room titled <a href="https://fosdem.org/2023/schedule/event/graph_ldbc/">The LDBC Social Network Benchmark</a> (<a href="https://www.youtube.com/watch?v=YNF6z6gtXY4">YouTube mirror</a>).</p>

+</li>

+<li>

+<p>I gave a lightning talk at FOSDEM’s HPC developer room titled <a href="https://www.youtube.com/watch?v=q26DHnQFw54">The LDBC Benchmark Suite</a> (<a href="https://www.youtube.com/watch?v=q26DHnQFw54">YouTube mirror</a>).</p>

+</li>

+<li>

+<p>Our auditors have successfully benchmarked a number of systems:</p>

+<ul>

+<li>SPB with the Ontotext GraphDB system for the SF3 and SF5 data sets (auditor: Pjotr Scholtze)</li>

+<li>SNB Interactive with the Ontotext GraphDB system for the SF30 data set (auditor: David Püroja)</li>

+<li>SNB Interactive with the TuGraph system running in the Aliyun cloud for the SF30, SF100, and SF300 data sets (auditor: Márton Búr)</li>

+</ul>

+</li>

+</ol>

+<p>The results and the full disclosure reports are available under the <a href="https://ldbcouncil.org/benchmarks/spb/">SPB</a> and <a href="https://ldbcouncil.org/benchmarks/snb/">SNB benchmark pages</a>.</p>

+

+

+

+ -

+ LDBC SNB Datagen – The winding path to SF100K

+ https://ldbcouncil.org/post/ldbc-snb-datagen-the-winding-path-to-sf100k/

+ Tue, 13 Sep 2022 00:00:00 +0000

+

+ https://ldbcouncil.org/post/ldbc-snb-datagen-the-winding-path-to-sf100k/

+ <p>LDBC SNB provides a data generator, which produces synthetic datasets, mimicking a social network’s activity during a period of time. Datagen is defined by the characteristics of realism, scalability, determinism and usability. More than two years have elapsed since my <a href="https://ldbcouncil.org/post/speeding-up-ldbc-snb-datagen/">last technical update</a> on LDBC SNB Datagen, in which I discussed the reasons for moving the code to Apache Spark from the MapReduce-based Apache Hadoop implementation and the challenges I faced during the migration. Since then, we have reached several goals: we refactored the serializers to use Spark’s high-level writers in order to support the popular Parquet data format and to enable running on spot nodes; brought back factor generation; implemented support for the novel BI benchmark; and optimized the runtime to generate SF30K on 20 i3.4xlarge machines on AWS.</p>

+<h1 id="moving-to-sparksql">Moving to SparkSQL</h1>

+<p>We planned to move parts of the code to SparkSQL, an optimized runtime framework for tabular data. We hypothesized that this would benefit us on multiple fronts: SparkSQL offers an efficient batch analytics runtime, with higher-level abstractions that are simpler to understand and work with, and we could easily add support for serializing to Parquet based on SparkSQL’s capabilities.</p>

+<blockquote>

+<p>Spark SQL is a Spark module for structured data processing. It provides a programming abstraction called DataFrames and can also act as a distributed SQL query engine. Spark SQL includes a cost-based optimizer, columnar storage, and code generation to make queries fast.</p>

+</blockquote>

+<p>Dealing with the dataset generator proved quite tricky, because it samples from various hand-written distributions and dictionaries, and contains complex domain logic, for which SparkSQL is unsuitable. We assessed that the best we could do was to wrap entire entity generation procedures in UDFs (user-defined SQL functions). However, several of these generators return entity trees<sup id="fnref:1"><a href="#fn:1" class="footnote-ref" role="doc-noteref">1</a></sup>, which are spread across multiple tables by the serializer, and these would have needed to be split up. Further complicating matters, we would also have had to find a way to coordinate the inner random generators’ state between the UDFs to ensure deterministic execution. Given these obstacles, and since we could not find much benefit in using SparkSQL here, we ultimately decided to leave entity generation as it is. We limited the SparkSQL refactor to the following areas:</p>

+<ol>

+<li>table manipulations related to shaping the output into the supported layouts and data types as set forth in the specification;</li>

+<li>deriving the Interactive and BI datasets;</li>

+<li>and generating the factor tables, which contain analytic information, such as population per country, number of friendships between city pairs, number of messages per day, etc., used by the substitution parameter generator to ensure predictable query runtimes.</li>

+</ol>

+<p>We refer to points (1.) and (2.) collectively as dataset transformation, and to (3.) as factor generation. Initially, these had been part of the generator; they were extracted as part of this refactor, which resulted in a cleaner, more maintainable design.</p>
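+<p>To make the idea of a factor table more concrete, here is a minimal sketch of one of the examples mentioned above, the number of messages per day. It is only an illustration: Datagen computes its factor tables with SparkSQL over the generated data, whereas this sketch uses plain Scala collections so that it runs without Spark. The <code>Message</code> record, the <code>FactorSketch</code> object and the <code>messagesPerDay</code> function are hypothetical names that do not appear in the Datagen code base.</p>

```scala
// Hypothetical, heavily simplified message record (assumption for illustration);
// the real Datagen schema has many more attributes.
final case class Message(id: Long, creationDay: String, countryId: Int)

object FactorSketch {
  // Factor table "number of messages per day":
  // group the messages by day, count each group, and sort by day for stable output.
  def messagesPerDay(messages: Seq[Message]): Seq[(String, Int)] =
    messages
      .groupBy(_.creationDay)
      .map { case (day, ms) => day -> ms.size }
      .toSeq
      .sortBy(_._1)
}
```

+<p>A parameter generator could then consult such a table to pick substitution parameters (for example, days with a similar number of messages), which is how factor tables help keep query runtimes predictable.</p>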

+<p><img src="datagen_df_0.png" alt="Datagen stages"></p>

+<p>The diagram above shows the components at a high level. The generator outputs a dataset called IR (intermediate representation), which is immediately written to disk. The IR is then the input to the dataset transformation and factor generation stages, which generate the final dataset and the factor tables, respectively. We are aware that writing out the IR adds considerable runtime overhead and doubles the disk requirements in the worst case. However, we found no simple way to avoid it, as the generator produces entity trees, which are incompatible with the flat, tabular, column-oriented layout of SparkSQL. On the positive side, this design enables us to reuse the generator output for multiple transformations and to add new factor tables without regenerating the data.</p>

+<p>I’ll skip describing the social network graph dataset generator (i.e. stage 1) in any more detail, apart from its serializer, as that was the only part involved in the current refactor. If you are interested in more details, you may look up the <a href="https://ldbcouncil.org/post/speeding-up-ldbc-snb-datagen/">previous blogpost in the series</a> or the <a href="https://arxiv.org/abs/2001.02299">Interactive benchmark specification</a>.</p>

+<h1 id="transformation-pipeline">Transformation pipeline</h1>

+<p>The dataset transformation stage picks up where generation finished, and applies an array of pluggable transformations:</p>

+<ul>

+<li>explodes edges and / or attributes into separate tables,</li>

+<li>subsets the snapshot part and creates insert / delete batches for the BI workload,</li>

+<li>subsets the snapshot part for the Interactive workload,</li>

+<li>applies formatting-related options such as date-time representation,</li>

+<li>serializes the data to a Spark-supported format (CSV, Parquet).</li>

+</ul>

+<p>We utilize a flexible data pipeline that operates on the graph.</p>

+<div class="highlight"><pre tabindex="0" style="color:#f8f8f2;background-color:#272822;-moz-tab-size:4;-o-tab-size:4;tab-size:4;"><code class="language-scala" data-lang="scala"><span style="display:flex;"><span><span style="color:#66d9ef">trait</span> <span style="color:#a6e22e">Transform</span><span style="color:#f92672">[</span><span style="color:#66d9ef">M1</span> <span style="color:#66d9ef"><:</span> <span style="color:#66d9ef">Mode</span>, <span style="color:#66d9ef">M2</span> <span style="color:#66d9ef"><:</span> <span style="color:#66d9ef">Mode</span><span style="color:#f92672">]</span> <span style="color:#a6e22e">extends</span> <span style="color:#f92672">(</span><span style="color:#a6e22e">Graph</span><span style="color:#f92672">[</span><span style="color:#66d9ef">M1</span><span style="color:#f92672">]</span> <span style="color:#66d9ef">=></span> <span style="color:#a6e22e">Graph</span><span style="color:#f92672">[</span><span style="color:#66d9ef">M2</span><span style="color:#f92672">])</span> <span style="color:#f92672">{</span>

+</span></span><span style="display:flex;"><span> <span style="color:#66d9ef">type</span> <span style="color:#66d9ef">In</span> <span style="color:#f92672">=</span> <span style="color:#a6e22e">Graph</span><span style="color:#f92672">[</span><span style="color:#66d9ef">M1</span><span style="color:#f92672">]</span>

+</span></span><span style="display:flex;"><span> <span style="color:#66d9ef">type</span> <span style="color:#66d9ef">Out</span> <span style="color:#f92672">=</span> <span style="color:#a6e22e">Graph</span><span style="color:#f92672">[</span><span style="color:#66d9ef">M2</span><span style="color:#f92672">]</span>

+</span></span><span style="display:flex;"><span> <span style="color:#66d9ef">def</span> transform<span style="color:#f92672">(</span>input<span style="color:#66d9ef">:</span> <span style="color:#66d9ef">In</span><span style="color:#f92672">)</span><span style="color:#66d9ef">:</span> <span style="color:#66d9ef">Out</span>

+</span></span><span style="display:flex;"><span> <span style="color:#66d9ef">override</span> <span style="color:#66d9ef">def</span> apply<span style="color:#f92672">(</span>v<span style="color:#66d9ef">:</span> <span style="color:#66d9ef">Graph</span><span style="color:#f92672">[</span><span style="color:#66d9ef">M1</span><span style="color:#f92672">])</span><span style="color:#66d9ef">:</span> <span style="color:#66d9ef">Graph</span><span style="color:#f92672">[</span><span style="color:#66d9ef">M2</span><span style="color:#f92672">]</span> <span style="color:#66d9ef">=</span> transform<span style="color:#f92672">(</span>v<span style="color:#f92672">)</span>

+</span></span><span style="display:flex;"><span><span style="color:#f92672">}</span>

+</span></span></code></pre></div><p>The <code>Transform</code> trait encodes a pure (side-effect-free) function between graphs that is polymorphic in the graph's mode, so transformation pipelines can be expressed with ordinary function composition in a type-safe manner. Let’s look at some of the transformations we have.</p>
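+<p>Before the concrete transformations, here is a minimal, self-contained sketch of what "ordinary function composition" could look like. Only the <code>Transform</code> trait is taken from the listing above; the simplified <code>Mode</code> and <code>Graph</code> definitions, the <code>batchPeriod</code> field, and the <code>DropEmpty</code>, <code>ToBi</code> and <code>PipelineSketch</code> names are assumptions made up for illustration and are not the real model classes.</p>
+<div class="highlight"><pre tabindex="0"><code class="language-scala" data-lang="scala">// Hypothetical stand-ins for the real model types; only their shape matters here.
+sealed trait Mode
+object Mode {
+  case object Raw extends Mode
+  // Assumption: the single field is invented for this sketch.
+  final case class BI(batchPeriod: String) extends Mode
+}
+
+// Assumption: a graph is reduced to a list of entity names.
+final case class Graph[M &lt;: Mode](mode: M, entities: List[String])
+
+// Same trait as in the listing above.
+trait Transform[M1 &lt;: Mode, M2 &lt;: Mode] extends (Graph[M1] =&gt; Graph[M2]) {
+  type In = Graph[M1]
+  type Out = Graph[M2]
+  def transform(input: In): Out
+  override def apply(v: Graph[M1]): Graph[M2] = transform(v)
+}
+
+// Hypothetical Raw-to-Raw stage: drops entities with an empty name.
+object DropEmpty extends Transform[Mode.Raw.type, Mode.Raw.type] {
+  override def transform(input: In): Out =
+    Graph[Mode.Raw.type](Mode.Raw, input.entities.filter(_.nonEmpty))
+}
+
+// Hypothetical Raw-to-BI stage: only re-tags the graph with the target mode.
+final case class ToBi(mode: Mode.BI) extends Transform[Mode.Raw.type, Mode.BI] {
+  override def transform(input: In): Out =
+    Graph[Mode.BI](mode, input.entities)
+}
+
+object PipelineSketch {
+  // andThen is inherited from Function1, so stages chain like plain functions.
+  val pipeline: Graph[Mode.Raw.type] =&gt; Graph[Mode.BI] =
+    DropEmpty.andThen(ToBi(Mode.BI("day")))
+
+  def main(args: Array[String]): Unit = {
+    val raw = Graph[Mode.Raw.type](Mode.Raw, List("Person", "", "Forum"))
+    println(pipeline(raw).entities) // prints List(Person, Forum)
+  }
+}
+</code></pre></div>
+<p>Because each stage carries its input and output mode in its type, a mis-ordered chain such as <code>ToBi(...).andThen(DropEmpty)</code> is rejected by the compiler in this sketch: a <code>Graph[Mode.BI]</code> is not a <code>Graph[Mode.Raw.type]</code>.</p>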

+<div class="highlight"><pre tabindex="0" style="color:#f8f8f2;background-color:#272822;-moz-tab-size:4;-o-tab-size:4;tab-size:4;"><code class="language-scala" data-lang="scala"><span style="display:flex;"><span><span style="color:#66d9ef">case</span> <span style="color:#66d9ef">class</span> <span style="color:#a6e22e">RawToBiTransform</span><span style="color:#f92672">(</span>mode<span style="color:#66d9ef">:</span> <span style="color:#66d9ef">BI</span><span style="color:#f92672">,</span> simulationStart<span style="color:#66d9ef">:</span> <span style="color:#66d9ef">Long</span><span style="color:#f92672">,</span> simulationEnd<span style="color:#66d9ef">:</span> <span style="color:#66d9ef">Long</span><span style="color:#f92672">,</span> keepImplicitDeletes<span style="color:#66d9ef">:</span> <span style="color:#66d9ef">Boolean</span><span style="color:#f92672">)</span>

+</span></span><span style="display:flex;"><span> <span style="color:#66d9ef">extends</span> <span style="color:#a6e22e">Transform</span><span style="color:#f92672">[</span><span style="color:#66d9ef">Mode.Raw.</span><span style="color:#66d9ef">type</span>, <span style="color:#66d9ef">Mode.BI</span><span style="color:#f92672">]</span> <span style="color:#f92672">{</span>

+</span></span><span style="display:flex;"><span> <span style="color:#66d9ef">override</span> <span style="color:#66d9ef">def</span> transform<span style="color:#f92672">(</span>input<span style="color:#66d9ef">:</span> <span style="color:#66d9ef">In</span><span style="color:#f92672">)</span><span style="color:#66d9ef">:</span> <span style="color:#66d9ef">Out</span> <span style="color:#f92672">=</span> <span style="color:#f92672">???</span>

+</span></span><span style="display:flex;"><span><span style="color:#f92672">}</span>

+</span></span><span style="display:flex;"><span>

+</span></span><span style="display:flex;"><span><span style="color:#66d9ef">case</span> <span style="color:#66d9ef">class</span> <span style="color:#a6e22e">RawToInteractiveTransform</span><span style="color:#f92672">(</span>mode<span style="color:#66d9ef">:</span> <span style="color:#66d9ef">Mode.Interactive</span><span style="color:#f92672">,</span> simulationStart<span style="color:#66d9ef">:</span> <span style="color:#66d9ef">Long</span><span style="color:#f92672">,</span> simulationEnd<span style="color:#66d9ef">:</span> <span style="color:#66d9ef">Long</span><span style="color:#f92672">)</span>

+</span></span><span style="display:flex;"><span> <span style="color:#66d9ef">extends</span> <span style="color:#a6e22e">Transform</span><span style="color:#f92672">[</span><span style="color:#66d9ef">Mode.Raw.</span><span style="color:#66d9ef">type</span>, <span style="color:#66d9ef">Mode.Interactive</span><span style="color:#f92672">]</span> <span style="color:#f92672">{</span>

+</span></span><span style="display:flex;"><span> <span style="color:#66d9ef">override</span> <span style="color:#66d9ef">def</span> transform<span style="color:#f92672">(</span>input<span style="color:#66d9ef">:</span> <span style="color:#66d9ef">In</span><span style="color:#f92672">)</span><span style="color:#66d9ef">:</span> <span style="color:#66d9ef">Out</span> <span style="color:#f92672">=</span> <span style="color:#f92672">???</span>

+</span></span><span style="display:flex;"><span><span style="color:#f92672">}</span>

+</span></span><span style="display:flex;"><span>

+</span></span><span style="display:flex;"><span><span style="color:#66d9ef">object</span> <span style="color:#a6e22e">ExplodeEdges</span> <span style="color:#66d9ef">extends</span> <span style="color:#a6e22e">Transform</span><span style="color:#f92672">[</span><span style="color:#66d9ef">Mode.Raw.</span><span style="color:#66d9ef">type</span>, <span style="color:#66d9ef">Mode.Raw.</span><span style="color:#66d9ef">type</span><span style="color:#f92672">]</span> <span style="color:#f92672">{</span>