Releases: dstackai/dstack

0.10.7

Services

Until now, dstack has supported dev-environment and task as configuration types. With the 0.10.7 update, we introduce service, a dedicated configuration type for serving.

Usage example:

    type: service
    gateway: ${{ secrets.GATEWAY_ADDRESS }}
    image: ghcr.io/huggingface/text-generation-inference:0.9.3
    port: 8000
    commands:
      - text-generation-launcher --hostname 0.0.0.0 --port 8000 --trust-remote-code

The gateway property represents the address of a special cloud instance that wraps the running service with a public endpoint.
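To make the `${{ secrets.GATEWAY_ADDRESS }}` placeholder concrete, here is a minimal Python sketch of how such a reference could be resolved against a dictionary of secrets. It is an illustration only, not dstack's actual implementation: the function name `interpolate_secrets`, the regular expression, and the example address are all assumptions.

```python
import re

# Matches placeholders of the form ${{ secrets.NAME }} (hypothetical pattern).
_SECRET_REF = re.compile(r"\$\{\{\s*secrets\.([A-Za-z_][A-Za-z0-9_]*)\s*\}\}")


def interpolate_secrets(text: str, secrets: dict[str, str]) -> str:
    """Replace every ${{ secrets.NAME }} in text with the matching secret value."""

    def _resolve(match: re.Match) -> str:
        name = match.group(1)
        if name not in secrets:
            # Fail loudly rather than leaving an unresolved placeholder behind.
            raise KeyError(f"secret {name!r} is not defined")
        return secrets[name]

    return _SECRET_REF.sub(_resolve, text)


if __name__ == "__main__":
    # 203.0.113.10 is a documentation-only example address, not a real gateway.
    line = "gateway: ${{ secrets.GATEWAY_ADDRESS }}"
    print(interpolate_secrets(line, {"GATEWAY_ADDRESS": "203.0.113.10"}))
```

Raising on an unknown secret name is a design choice of this sketch: a silently unresolved placeholder would otherwise surface much later as a confusing connection error.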

Gateways

Before you can run a service, you have to configure a gateway.

First, you have to create a gateway in a project of your choice using the dstack gateway create command:

    dstack gateway create

Once the gateway is up, the command will print its address. Go ahead and create a secret with this address.

    dstack secrets add GATEWAY_ADDRESS <gateway address>

That's it! Now you can run your service using the dstack run command, which deploys the service and forwards the traffic to the gateway, thereby providing you with a public endpoint.

This initial support for services is the first step towards providing multi-cloud and cost-effective inference. In the near future, we plan to make it more functional and easier to use.

What's changed

- Support restarting local and GCP runs by @r4victor in #590

- Add API for listing all runs by @r4victor in #591

- The `dstack init` should handle invalid Git credentials by @peterschmidt85 in #594

- Support custom run names by @r4victor in #595

- `dstack` doesn't work if the repo contains a submodule with SSH URL by @peterschmidt85 in #598

- Always use cuda images for instances with GPU by @r4victor in #602

- Introduce gateways for services publication by @Egor-S in #596

- Small ports refactoring by @Egor-S in #603

- #588 Created All run list page. by @olgenn in #607

- Improve gateway security, show verbose errors by @Egor-S in #608

- Do not require sshd in task configuration for custom docker images by @Egor-S in #609

- #588 all runs list by @olgenn in #611

- [Bug]: Doesn't run a dev environment if `code` is not configured in `PATH` by @peterschmidt85 in #613

- Allow to create gateways in AWS and Azure by @Egor-S in #614

- [Bug]: `dstack init` doesn't work if `.git/config` or `~/.gitconfig` doesn't have the `user` section by @peterschmidt85 in #617

- [Bug]: `dstack config` doesn't work if `~/.dstack` doesn't exist by @peterschmidt85 in #618

- Migrate to Gen2 images for Azure, add A100 support by @Egor-S in #619

- Add time.Sleep() in /logsws handler by @r4victor in #623

- Services & gateway docs by @Egor-S in #620

- Replace localhost with gateway hostname in service logs by @Egor-S in #624

Changelog: 0.10.6...0.10.7

0.10.6

Port mapping

Any task that is running on dstack can expose ports. Here's an example:

    type: task
    ports:
      - 7860
    commands:
      - pip install -r requirements.txt
      - gradio app.py

When you run it with dstack run, by default, dstack forwards the traffic from the specified port to the same port on your local machine.

With this update, you now have the option to override the local machine's port for traffic forwarding.

    dstack run . -f serve.dstack.yml --port 3000:7860

This command forwards the traffic to port 3000 on your local machine.
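As a rough illustration of the `LOCAL:REMOTE` notation used with `--port`, the following Python sketch splits such a value into a pair of port numbers and falls back to the same port on both sides when only one number is given. The function name `parse_port_mapping` is hypothetical, and this is not how dstack itself parses the option.

```python
def parse_port_mapping(spec: str) -> tuple[int, int]:
    """Parse "LOCAL:REMOTE" or a bare "PORT" into (local_port, remote_port)."""
    local, sep, remote = spec.partition(":")
    if not sep:
        # A bare port maps to the same port on the local machine.
        remote = local
    local_port, remote_port = int(local), int(remote)
    for port in (local_port, remote_port):
        if not 0 < port < 65536:
            raise ValueError(f"port out of range: {port}")
    return local_port, remote_port


if __name__ == "__main__":
    print(parse_port_mapping("3000:7860"))  # local 3000, remote 7860
    print(parse_port_mapping("7860"))       # same port on both sides
```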

If you specify a port on your local machine already taken by another process, dstack will notify you before provisioning cloud resources.
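One common way to detect a taken local port before doing any expensive work is to try binding a socket to it. The sketch below shows that general technique with Python's standard `socket` module; the helper name `is_local_port_free` is an assumption, and dstack's own check may work differently.

```python
import socket


def is_local_port_free(port: int, host: str = "127.0.0.1") -> bool:
    """Return True if a TCP socket can be bound to host:port right now."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError:
            # Typically "address already in use" or a permission error.
            return False
        return True


if __name__ == "__main__":
    port = 3000
    state = "free" if is_local_port_free(port) else "already taken"
    print(f"local port {port} is {state}")
```

A check like this is only a snapshot: another process can still grab the port between the check and the moment forwarding starts, so it reduces wasted provisioning rather than eliminating the failure case.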

Max duration

Previously, if you started a dev environment or task with dstack and forgot about it, it would keep running indefinitely. Now, you can use the max_duration property in .dstack/profiles.yml to set a maximum time for workloads.

Example:

    profiles:
      - name: gcp-t4
        project: gcp
        resources:
          memory: 24GB
          gpu:
            name: T4
        max_duration: 2h

With this profile, dstack will automatically stop the workload after 2 hours.

If you don't specify max_duration, dstack defaults to 6h for dev environments and 72h for tasks.

To disable max duration, you can set it to off.
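The rules above (an explicit value, a per-type default, and off) can be summarized in a short Python sketch. The function name `resolve_max_duration` is hypothetical, and only the `h` suffix is confirmed by these notes; the other unit suffixes are assumptions made for illustration, not dstack's documented grammar.

```python
from typing import Optional

# Defaults quoted in the release notes, expressed in seconds.
DEFAULT_MAX_DURATION = {"dev-environment": 6 * 3600, "task": 72 * 3600}

# Only "h" appears in the release notes; "s", "m" and "d" are assumed here.
_UNIT_SECONDS = {"s": 1, "m": 60, "h": 3600, "d": 86400}


def resolve_max_duration(value: Optional[str], config_type: str) -> Optional[int]:
    """Return the limit in seconds, or None when the limit is disabled."""
    if value is None:
        # No max_duration in the profile: fall back to the per-type default.
        return DEFAULT_MAX_DURATION[config_type]
    if value == "off":
        return None
    number, unit = value[:-1], value[-1:]
    if unit not in _UNIT_SECONDS or not number.isdigit():
        raise ValueError(f"unsupported duration: {value!r}")
    return int(number) * _UNIT_SECONDS[unit]


if __name__ == "__main__":
    print(resolve_max_duration("2h", "task"))   # explicit limit
    print(resolve_max_duration(None, "task"))   # default for tasks
    print(resolve_max_duration("off", "task"))  # limit disabled
```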

Imagine the amount of money your team can save with this minor configuration.

More supported GPUs

With the CUDA version updated to 11.8, dstack now supports additional GPU types, including NVIDIA T4 and NVIDIA L4. These GPUs are highly efficient for LLM development, offering excellent performance at low costs!

If you are using a custom Docker image, you can now utilize a CUDA version up to 12.2.

Last but not least, the K80 GPU is no longer supported.

Examples

Make sure to check the new page with examples.

The documentation is updated to reflect the changes in the release.

What's changed

- Elaborate error message on unmatched requirements for local backend by @r4victor in #563

- Handle no permissions for listing aws buckets by @r4victor in #566

- Support max_duration by @r4victor in #571

- Fix aws not using default region without default creds by @r4victor in #572

- Add --ports to `dstack run` by @Egor-S in #573

- Add T4 support for GCP by @Egor-S in #575

- Close #537 Added field extra_regions for aws backend by @olgenn in #577

- Reserve ports before creating instance by @Egor-S in #578

- Upgrade instance images by @Egor-S in #581

- Update CUDA version to `11.8` by @peterschmidt85 in #584

- Use identity ports mapping by default by @Egor-S in #587

Changelog: 0.10.5...0.10.6

0.10.5

Lambda

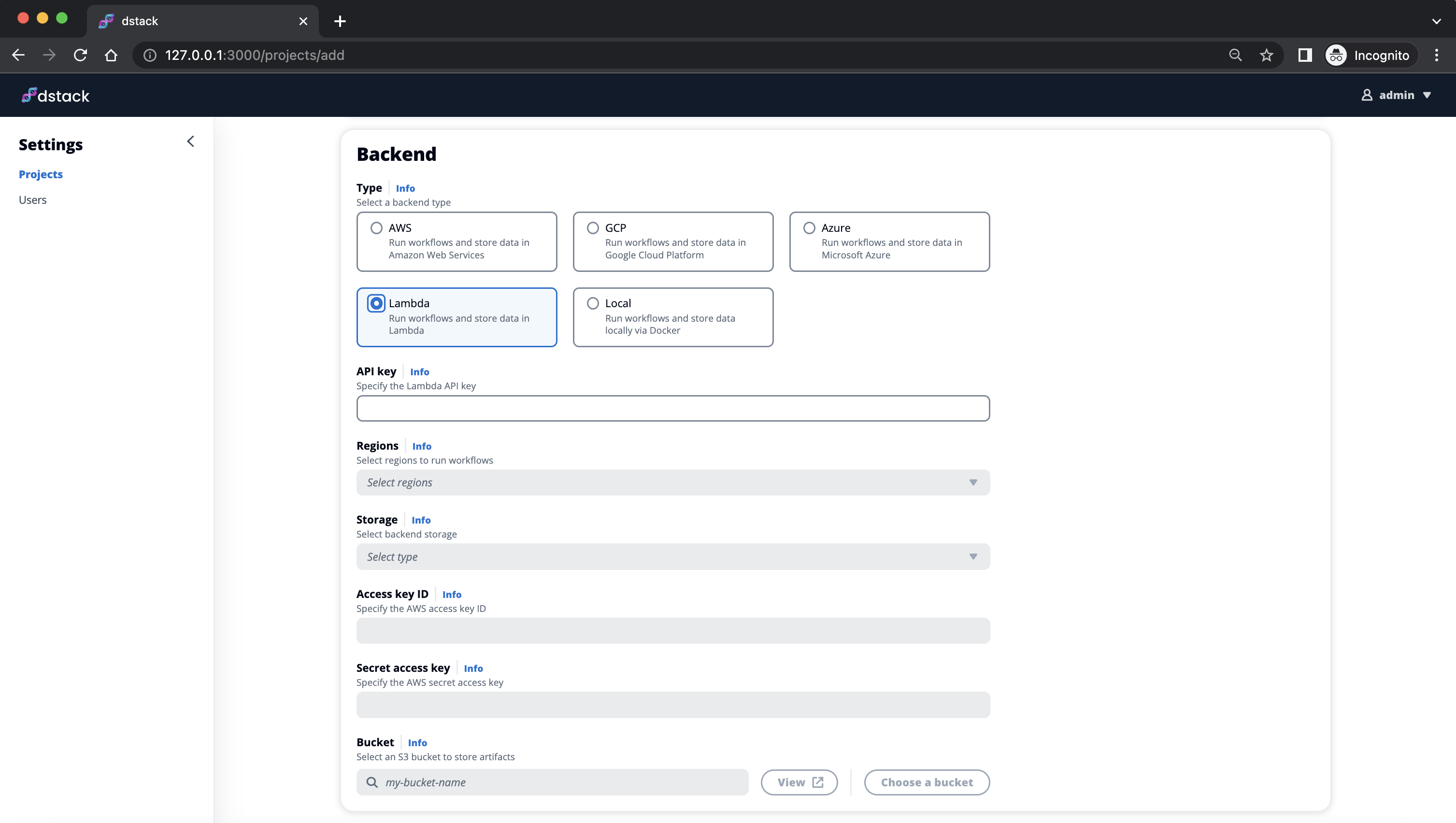

The Lambda Cloud integration has significantly improved with this release. We've added the possibility to create Lambda Cloud projects via the user interface.

All you need to do is provide your Lambda Cloud API key and specify an S3 bucket and AWS credentials for storing state and artifacts.

Check the docs for more details.

Custom Docker images

By default, dstack uses its own base Docker images to run dev environments and tasks. These base images come pre-configured with Python, Conda, and essential CUDA drivers. However, there may be times when you need additional dependencies that you don't want to install every time you run your dev environment or task.

To address this, dstack now allows specifying custom Docker images. Here's an example:

    type: task
    image: ghcr.io/huggingface/text-generation-inference:0.9
    env:
      - MODEL_ID=tiiuae/falcon-7b
    ports:
      - 3000
    commands:
      - text-generation-launcher --hostname 0.0.0.0 --port 3000 --trust-remote-code

Note: Dev environments require the Docker image to have `openssh-server` pre-installed.

What's Changed

- Refactor providers, add custom docker images support by @Egor-S in #544

- Use nvidia docker runtime for build if available by @Egor-S in #552

- Allow env variables interpolation by @Egor-S in #553

- Display docker pull progress by @Egor-S in #554

- Handle backend creds becoming invalid by @r4victor in #556

- Add PATH to .profile by @Egor-S in #557

- Close #529 Supported lambda backend type in UI by @olgenn in #558

- #529 Fixed labels by @olgenn in #560

- 529 fixes after review by @olgenn in #562

Changelog: 0.10.4...0.10.5

0.10.4

What's changed

- [Bug]: Provisioning on Lambda Cloud doesn't work #542 by @peterschmidt85 in #543

- [Bug]: Cannot create an AWS project #545 by @peterschmidt85 in #546

Changelog: 0.10.3...0.10.4

dstack 0.10.3: A preview of Lambda Cloud support

With the 0.10.3 update, dstack now allows provisioning infrastructure in Lambda Cloud while storing state and artifacts in an S3 bucket.

See the Reference for detailed instructions on how to configure a project that uses Lambda Cloud.

Note, there are a few limitations in the preview:

- Since Lambda Cloud does not have its own object storage, dstack requires you to specify an S3 bucket, along with AWS credentials for storing state and artifacts.

- At the moment, a Lambda project cannot be created via the UI; you can only create one through an API request.

In other news, we have pre-configured the base Docker image with the required Conda channel, enabling you to install additional CUDA tools like nvcc using conda install cuda. Note that you only need this for building a custom CUDA kernel; otherwise, the essential CUDA drivers are already pre-installed and the extra tools are not necessary.

The documentation and examples are updated to reflect the changes.

Give it a try and share feedback

Go ahead and install the update, give it a spin, and share your feedback in our Slack community.

What's Changed

- Updated fields in run details page by @olgenn in #515

- Update gcp.md by @axitkhurana in #517

- Warn user about long git diff by @Egor-S in #518

- Close #523 Implemented building run status by @olgenn in #525

- Implement Lambda Labs backend by @r4victor in #528

- Restore cache functionality by @Egor-S in #527

- Runner storages refactoring by @Egor-S in #532

- Refactor backends by @r4victor in #533

- Add multi-region compute support for AWS by @r4victor in #536

- Check if the build exists before starting an instance by @Egor-S in #534

- Pre-configure the `nvidia/label/cuda-11.4.3` channel for `conda` in the CUDA image by @peterschmidt85 in #541

New Contributors

- @axitkhurana made their first contribution in #517

Changelog: 0.10.2...0.10.3

0.9.1

Azure

First and foremost, dstack now enables running dev environments, workflows, and apps with Azure.

All you need to do is create the corresponding project via the UI and provide your Azure credentials.

For detailed instructions on setting up dstack for Azure, refer to the documentation.

User interface

Secondly, you can now browse the logs and artifacts of any run through the user interface.

Documentation

Last but not least, with the update, we have reworked the documentation to provide a greater emphasis on specific use cases.

0.2

GCP

With the release of dstack 0.2, you can now configure GCP as a remote. All the features previously available for AWS, except real-time artifacts, are now available for GCP as well.

To use GCP with dstack, you will require a service account.

For more details on how to configure GCP, refer to the documentation.

Once you have created a service account, run the dstack config command. After that, you're good to go! Use the --remote flag with the dstack run command to execute workflows in GCP; dstack will automatically create and destroy cloud instances based on resource requirements, including cost strategies such as using spot instances.