
Description

To reproduce this issue, create an external table in Hive that is stored by Druid and try to query it from Superset.

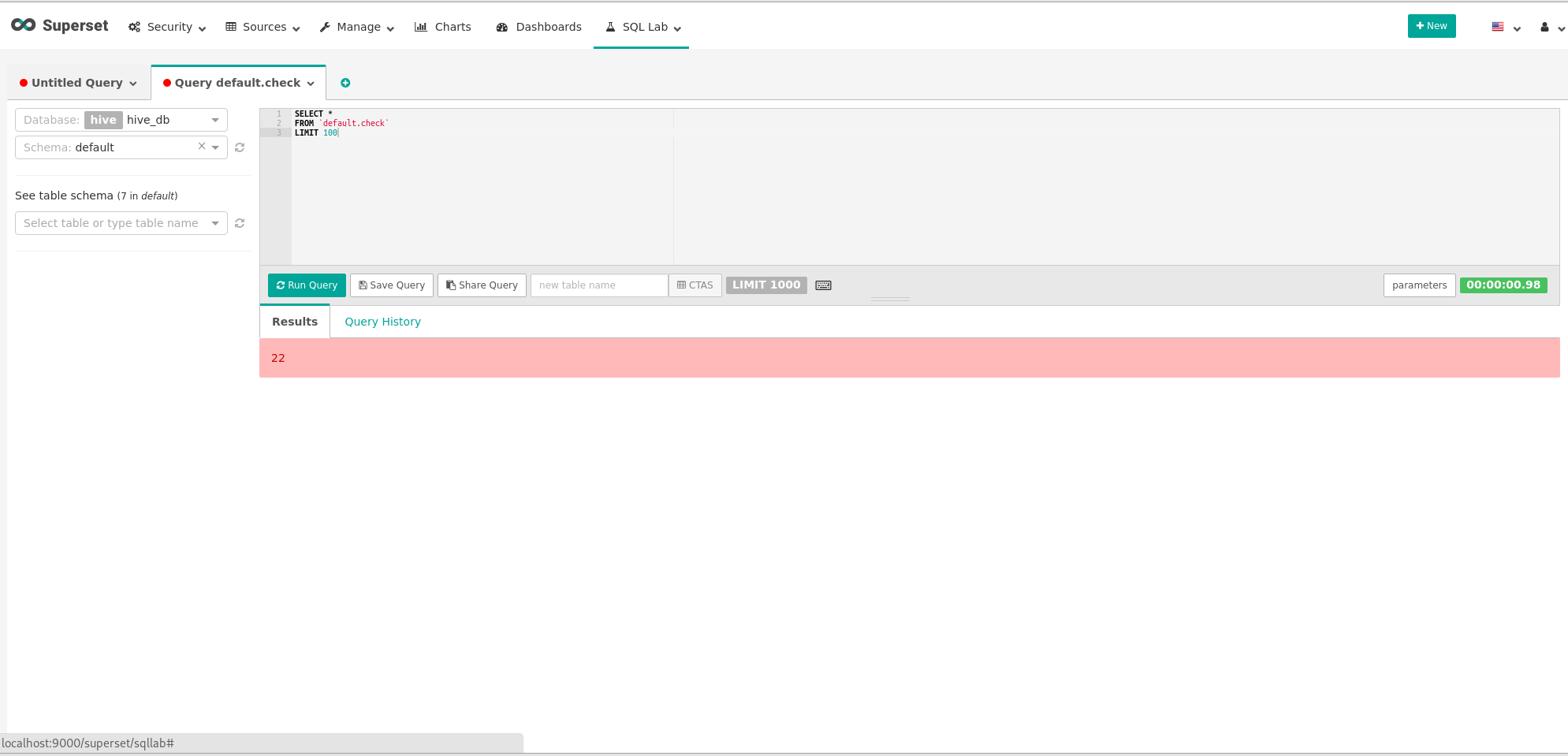

In SQL Lab you will see something like this:

The real error is here:

```
Traceback (most recent call last):
  File "/home/tvorogme/projects/incubator-superset/superset/sql_lab.py", line 196, in execute_sql_statement
    data = db_engine_spec.fetch_data(cursor, query.limit)
  File "/home/tvorogme/projects/incubator-superset/superset/db_engine_specs.py", line 1400, in fetch_data
    return super(HiveEngineSpec, cls).fetch_data(cursor, limit)
  File "/home/tvorogme/projects/incubator-superset/superset/db_engine_specs.py", line 160, in fetch_data
    return cursor.fetchall()
  File "/usr/lib/python3.7/site-packages/pyhive/common.py", line 136, in fetchall
    return list(iter(self.fetchone, None))
  File "/usr/lib/python3.7/site-packages/pyhive/common.py", line 105, in fetchone
    self._fetch_while(lambda: not self._data and self._state != self._STATE_FINISHED)
  File "/usr/lib/python3.7/site-packages/pyhive/common.py", line 45, in _fetch_while
    self._fetch_more()
  File "/usr/lib/python3.7/site-packages/pyhive/hive.py", line 389, in _fetch_more
    schema = self.description
  File "/usr/lib/python3.7/site-packages/pyhive/hive.py", line 320, in description
    type_code = ttypes.TTypeId._VALUES_TO_NAMES[type_id]
KeyError: 22
```

The problem is in the connector, PyHive.

Let's look at what `ttypes.TTypeId._VALUES_TO_NAMES` contains:

```python
{0: 'BOOLEAN_TYPE', 1: 'TINYINT_TYPE', 2: 'SMALLINT_TYPE', 3: 'INT_TYPE',
 4: 'BIGINT_TYPE', 5: 'FLOAT_TYPE', 6: 'DOUBLE_TYPE', 7: 'STRING_TYPE',
 8: 'TIMESTAMP_TYPE', 9: 'BINARY_TYPE', 10: 'ARRAY_TYPE', 11: 'MAP_TYPE',
 12: 'STRUCT_TYPE', 13: 'UNION_TYPE', 14: 'USER_DEFINED_TYPE', 15: 'DECIMAL_TYPE',
 16: 'NULL_TYPE', 17: 'DATE_TYPE', 18: 'VARCHAR_TYPE', 19: 'CHAR_TYPE',
 20: 'INTERVAL_YEAR_MONTH_TYPE', 21: 'INTERVAL_DAY_TIME_TYPE'}
```
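There is no entry for 22, so the plain `[type_id]` lookup has to fail. Here is a minimal sketch that reproduces this without PyHive: it copies the mapping above into an ordinary dict and repeats the same indexing. The names `HIVE_TYPE_NAMES` and `lookup_type_name` are made up for this example.

```python
# Copy of ttypes.TTypeId._VALUES_TO_NAMES as printed above (ids 0..21 only).
HIVE_TYPE_NAMES = {
    0: 'BOOLEAN_TYPE', 1: 'TINYINT_TYPE', 2: 'SMALLINT_TYPE', 3: 'INT_TYPE',
    4: 'BIGINT_TYPE', 5: 'FLOAT_TYPE', 6: 'DOUBLE_TYPE', 7: 'STRING_TYPE',
    8: 'TIMESTAMP_TYPE', 9: 'BINARY_TYPE', 10: 'ARRAY_TYPE', 11: 'MAP_TYPE',
    12: 'STRUCT_TYPE', 13: 'UNION_TYPE', 14: 'USER_DEFINED_TYPE',
    15: 'DECIMAL_TYPE', 16: 'NULL_TYPE', 17: 'DATE_TYPE', 18: 'VARCHAR_TYPE',
    19: 'CHAR_TYPE', 20: 'INTERVAL_YEAR_MONTH_TYPE', 21: 'INTERVAL_DAY_TIME_TYPE',
}


def lookup_type_name(type_id):
    """Same style of lookup as pyhive/hive.py: plain indexing, so unknown ids raise KeyError."""
    return HIVE_TYPE_NAMES[type_id]


if __name__ == '__main__':
    print(lookup_type_name(8))  # TIMESTAMP_TYPE
    try:
        lookup_type_name(22)  # the id the server sends for the failing column
    except KeyError as exc:
        print('KeyError:', exc)  # KeyError: 22
```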

OK. Now we need to find out where `type_id` comes from:

```python
# from the description property in pyhive/hive.py
primary_type_entry = col.typeDesc.types[0]
type_id = primary_type_entry.primitiveEntry.type
```

If you check which column this is, it turns out to be `__time`, the Druid timestamp column.
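To spot such columns without stepping through a debugger, a small helper can walk the same fields that `description` reads (`columnName` and `typeDesc.types[0].primitiveEntry.type`). This is a sketch only: the Thrift column descriptors are replaced by `SimpleNamespace` stand-ins, and `unknown_type_columns` and `fake_column` are hypothetical names. With a real connection you would presumably pass the `schema.columns` list from a `GetResultSetMetadata` response, which is what PyHive iterates over, but that is an assumption about PyHive internals.

```python
from types import SimpleNamespace


def unknown_type_columns(columns, known_type_ids):
    """Return (column name, type id) pairs whose primitive type id is unknown.

    Reads col.columnName and col.typeDesc.types[0].primitiveEntry.type,
    the same fields PyHive's description property uses.
    """
    unknown = []
    for col in columns:
        primary_type_entry = col.typeDesc.types[0]
        if primary_type_entry.primitiveEntry is None:
            # Complex types carry no primitive entry; PyHive treats them as strings.
            continue
        type_id = primary_type_entry.primitiveEntry.type
        if type_id not in known_type_ids:
            unknown.append((col.columnName, type_id))
    return unknown


def fake_column(name, type_id):
    """Hypothetical stand-in for a Thrift TColumnDesc with one primitive type."""
    entry = SimpleNamespace(primitiveEntry=SimpleNamespace(type=type_id))
    return SimpleNamespace(columnName=name, typeDesc=SimpleNamespace(types=[entry]))


if __name__ == '__main__':
    known = set(range(22))  # ids 0..21, as in the mapping above
    cols = [fake_column('__time', 22), fake_column('page', 7)]
    print(unknown_type_columns(cols, known))  # [('__time', 22)]
```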

So I have created an issue in PyHive.

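The easy workaround for now is to replace line 313 in `pyhive/hive.py` (the `type_code = ...` line inside `description`; the exact line number depends on your PyHive version)

from

```python
type_code = ttypes.TTypeId._VALUES_TO_NAMES[type_id]
```

to

```python
type_code = ttypes.TTypeId._VALUES_TO_NAMES[type_id if type_id != 22 else 18]
```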

18 is `VARCHAR_TYPE` (see the mapping above), so `__time` is simply returned as a string and the query works.
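If you would rather not edit files under site-packages (the change is lost on every PyHive upgrade), the same mapping could be added at runtime, since `_VALUES_TO_NAMES` is a plain dict on the Thrift-generated `TTypeId` class. This sketch assumes that `TCLIService.ttypes` is the module `pyhive/hive.py` imports, and that the patch runs once in every process that executes Hive queries (for example from `superset_config.py`); `register_missing_type` is a hypothetical helper. Like the one-line edit, it reports id 22 as `VARCHAR_TYPE`. Newer Hive versions appear to define 22 as `TIMESTAMPLOCALTZ_TYPE` (timestamp with local time zone), which the table bundled with PyHive predates.

```python
def register_missing_type(names, type_id=22, alias_id=18):
    """Map an unknown Hive type id to the name of a known one, in place.

    With the defaults, id 22 is reported as VARCHAR_TYPE (id 18), matching
    the one-line edit. setdefault leaves the table untouched if the id is
    already known, e.g. after a PyHive upgrade that adds it.
    """
    names.setdefault(type_id, names[alias_id])
    return names


try:
    # Module imported by pyhive/hive.py (assumed; installed together with PyHive).
    from TCLIService import ttypes
except ImportError:
    ttypes = None

if ttypes is not None:
    register_missing_type(ttypes.TTypeId._VALUES_TO_NAMES)
```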

P.S. I know this is not a Superset problem, but I guess many Superset users use PyHive, so I just wanted to share my workaround.