`.

- - Checkout [`phidata/cookbook/tools/youtube_tools`](https://github.com/phidatahq/phidata/blob/main/cookbook/tools/youtube_tools.py) for an example.

+ - Check out [`agno/cookbook/tools/youtube_tools`](https://github.com/agno-agi/agno/blob/main/cookbook/tools/youtube_tools.py) for an example.

5. Important: Format and validate your code by running `./scripts/format.sh` and `./scripts/validate.sh`.

6. Submit a pull request.

-Message us on [Discord](https://discord.gg/4MtYHHrgA8) or post on [Discourse](https://community.phidata.com/) if you have any questions or need help with credits.

+Message us on [Discord](https://discord.gg/4MtYHHrgA8) or post on [Discourse](https://community.agno.com/) if you have any questions or need help with credits.

## 📚 Resources

-- Documentation

+- Documentation

- Discord

-- Discourse

+- Discourse

## 📝 License

diff --git a/README.md b/README.md

index 569b649211..3fd5886d45 100644

--- a/README.md

+++ b/README.md

@@ -1,156 +1,188 @@

-

- phidata

-

-

-

-

-  +

+

+

+## Overview

+

+[Agno](https://docs.agno.com) is a lightweight framework for building multi-modal Agents.

+

+## Simple, Fast, and Agnostic

-

-

-Build multi-modal Agents with memory, knowledge, tools and reasoning.

-

+Agno is designed with three core principles:

-

+- **Simplicity**: No graphs, chains, or convoluted patterns, just pure Python.

+- **Uncompromising Performance**: Blazing fast agents with a minimal memory footprint.

+- **Truly Agnostic**: Any model, any provider, any modality. Future-proof agents.

-## What is phidata?

+## Key features

-**Phidata is a framework for building multi-modal agents**, use phidata to:

+Here's why you should build Agents with Agno:

-- **Build multi-modal agents with memory, knowledge, tools and reasoning.**

-- **Build teams of agents that can work together to solve problems.**

-- **Chat with your agents using a beautiful Agent UI.**

+- **Lightning Fast**: Agent creation is 6000x faster than LangGraph (see [performance](#performance)).

+- **Model Agnostic**: Use any model, any provider, no lock-in.

+- **Multi Modal**: Native support for text, image, audio and video.

+- **Multi Agent**: Delegate tasks across a team of specialized agents.

+- **Memory Management**: Store user sessions and agent state in a database.

+- **Knowledge Stores**: Use vector databases for Agentic RAG or dynamic few-shot learning.

+- **Structured Outputs**: Make Agents respond with structured data.

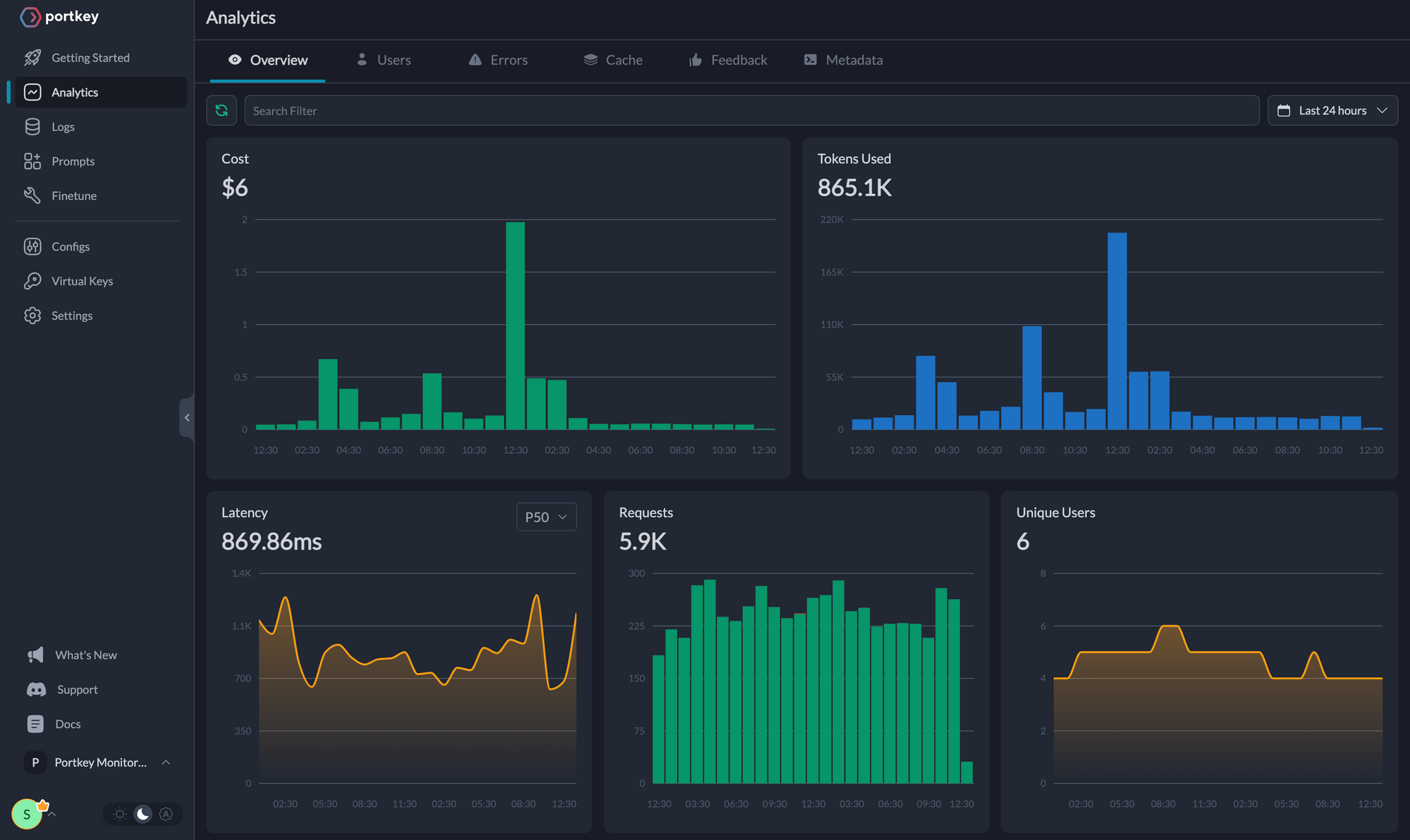

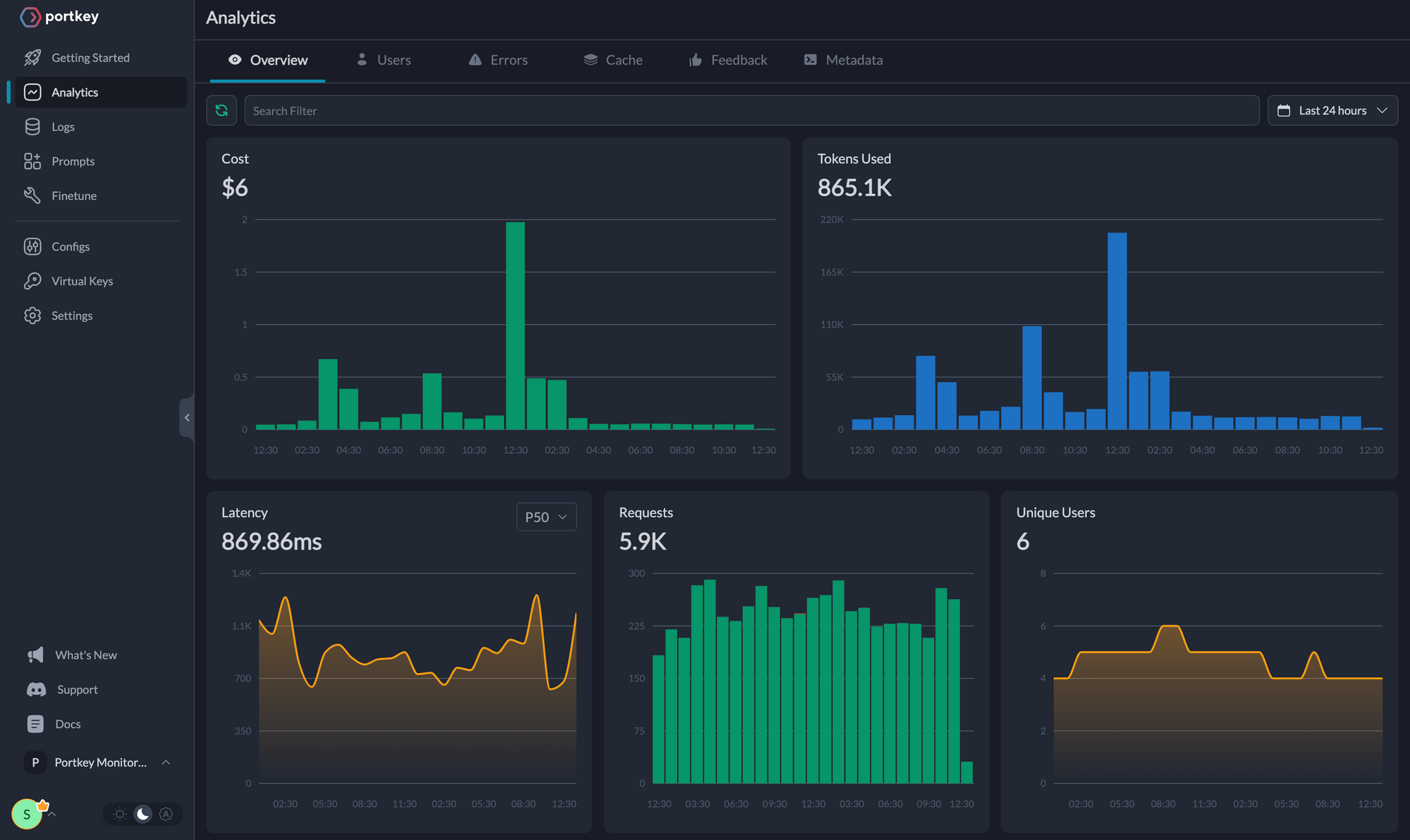

+- **Monitoring**: Track agent sessions and performance in real time on [app.agno.com](https://app.agno.com).

-## Install

+

+## Installation

```shell

-pip install -U phidata

+pip install -U agno

```

-## Key Features

+## What are Agents?

-- [Simple & Elegant](#simple--elegant)

-- [Powerful & Flexible](#powerful--flexible)

-- [Multi-Modal by default](#multi-modal-by-default)

-- [Multi-Agent orchestration](#multi-agent-orchestration)

-- [A beautiful Agent UI to chat with your agents](#a-beautiful-agent-ui-to-chat-with-your-agents)

-- [Agentic RAG built-in](#agentic-rag)

-- [Structured Outputs](#structured-outputs)

-- [Reasoning Agents](#reasoning-agents-experimental)

-- [Monitoring & Debugging built-in](#monitoring--debugging)

-- [Demo Agents](#demo-agents)

+Agents are autonomous programs that use language models to achieve tasks. They solve problems by running tools and by drawing on knowledge and memory to improve their responses.

-## Simple & Elegant

+Instead of a rigid binary definition, let's think of Agents in terms of agency and autonomy.

-Phidata Agents are simple and elegant, resulting in minimal, beautiful code.

+- **Level 0**: Agents with no tools (basic inference tasks).

+- **Level 1**: Agents with tools for autonomous task execution.

+- **Level 2**: Agents with knowledge, combining memory and reasoning.

+- **Level 3**: Teams of agents collaborating on complex workflows.

-For example, you can create a web search agent in 10 lines of code, create a file `web_search.py`

+## Example - Basic Agent

```python

-from phi.agent import Agent

-from phi.model.openai import OpenAIChat

-from phi.tools.duckduckgo import DuckDuckGo

+from agno.agent import Agent

+from agno.models.openai import OpenAIChat

-web_agent = Agent(

+agent = Agent(

model=OpenAIChat(id="gpt-4o"),

- tools=[DuckDuckGo()],

- instructions=["Always include sources"],

- show_tool_calls=True,

- markdown=True,

+ description="You are an enthusiastic news reporter with a flair for storytelling!",

+ markdown=True

)

-web_agent.print_response("Tell me about OpenAI Sora?", stream=True)

+agent.print_response("Tell me about a breaking news story from New York.", stream=True)

```

-Install libraries, export your `OPENAI_API_KEY` and run the Agent:

+To run the agent, install dependencies and export your `OPENAI_API_KEY`.

```shell

-pip install phidata openai duckduckgo-search

+pip install agno openai

export OPENAI_API_KEY=sk-xxxx

-python web_search.py

+python basic_agent.py

```

-## Powerful & Flexible

+[View this example in the cookbook](./cookbook/getting_started/01_basic_agent.py)

-Phidata agents can use multiple tools and follow instructions to achieve complex tasks.

+## Example - Agent with tools

-For example, you can create a finance agent with tools to query financial data, create a file `finance_agent.py`

+This basic agent will obviously make up a story, so let's give it a tool to search the web.

```python

-from phi.agent import Agent

-from phi.model.openai import OpenAIChat

-from phi.tools.yfinance import YFinanceTools

+from agno.agent import Agent

+from agno.models.openai import OpenAIChat

+from agno.tools.duckduckgo import DuckDuckGoTools

-finance_agent = Agent(

- name="Finance Agent",

+agent = Agent(

model=OpenAIChat(id="gpt-4o"),

- tools=[YFinanceTools(stock_price=True, analyst_recommendations=True, company_info=True, company_news=True)],

- instructions=["Use tables to display data"],

+ description="You are an enthusiastic news reporter with a flair for storytelling!",

+ tools=[DuckDuckGoTools()],

show_tool_calls=True,

- markdown=True,

+ markdown=True

)

-finance_agent.print_response("Summarize analyst recommendations for NVDA", stream=True)

+agent.print_response("Tell me about a breaking news story from New York.", stream=True)

```

-Install libraries and run the Agent:

+Install dependencies and run the Agent:

```shell

-pip install yfinance

+pip install duckduckgo-search

-python finance_agent.py

+python agent_with_tools.py

```

-## Multi-Modal by default

+Now you should see a much more relevant result.

+

+[View this example in the cookbook](./cookbook/getting_started/02_agent_with_tools.py)
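To make the tool loop concrete, here is a minimal, self-contained sketch of what happens when an agent has tools: the model either answers or requests a tool call, and the runtime executes the tool and feeds the result back. It does not use Agno; `stub_model`, `search_web`, and `run_agent` are hypothetical stand-ins (no real LLM or network calls), so it only illustrates the general pattern, not Agno's internal implementation.

```python
from typing import Callable, Dict, List, Optional


def search_web(query: str) -> str:
    # Hypothetical stand-in for a web search tool; returns a canned string offline.
    return f"[stub search result for '{query}']"


# Registry of tools the "model" is allowed to call, keyed by name.
TOOLS: Dict[str, Callable[[str], str]] = {"search_web": search_web}


def stub_model(messages: List[dict]) -> dict:
    # Hypothetical stand-in for an LLM: request a search first, then answer
    # from the most recent tool result.
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        return {"tool": "search_web", "args": messages[0]["content"]}
    return {"answer": f"Here is what I found: {tool_results[-1]['content']}"}


def run_agent(prompt: str, max_steps: int = 5) -> Optional[str]:
    messages: List[dict] = [{"role": "user", "content": prompt}]
    for _ in range(max_steps):
        reply = stub_model(messages)
        if "answer" in reply:
            return reply["answer"]
        # Execute the requested tool and feed its output back to the model.
        result = TOOLS[reply["tool"]](reply["args"])
        messages.append({"role": "tool", "content": result})
    return None  # no final answer within max_steps


print(run_agent("breaking news New York"))
```

The `max_steps` cap is a common safeguard so a model that keeps requesting tools cannot loop forever.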

-Phidata agents support text, images, audio and video.

+## Example - Agent with knowledge

-For example, you can create an image agent that can understand images and make tool calls as needed, create a file `image_agent.py`

+Agents can store knowledge in a vector database and use it for RAG or dynamic few-shot learning.

+

+**Agno agents use Agentic RAG** by default, which means they will search their knowledge base for the specific information they need to achieve their task.

```python

-from phi.agent import Agent

-from phi.model.openai import OpenAIChat

-from phi.tools.duckduckgo import DuckDuckGo

+from agno.agent import Agent

+from agno.models.openai import OpenAIChat

+from agno.embedder.openai import OpenAIEmbedder

+from agno.tools.duckduckgo import DuckDuckGoTools

+from agno.knowledge.pdf_url import PDFUrlKnowledgeBase

+from agno.vectordb.lancedb import LanceDb, SearchType

agent = Agent(

model=OpenAIChat(id="gpt-4o"),

- tools=[DuckDuckGo()],

- markdown=True,

+ description="You are a Thai cuisine expert!",

+ instructions=[

+ "Search your knowledge base for Thai recipes.",

+ "If the question is better suited for the web, search the web to fill in gaps.",

+ "Prefer the information in your knowledge base over the web results."

+ ],

+ knowledge=PDFUrlKnowledgeBase(

+ urls=["https://agno-public.s3.amazonaws.com/recipes/ThaiRecipes.pdf"],

+ vector_db=LanceDb(

+ uri="tmp/lancedb",

+ table_name="recipes",

+ search_type=SearchType.hybrid,

+ embedder=OpenAIEmbedder(id="text-embedding-3-small"),

+ ),

+ ),

+ tools=[DuckDuckGoTools()],

+ show_tool_calls=True,

+ markdown=True

)

-agent.print_response(

- "Tell me about this image and give me the latest news about it.",

- images=["https://upload.wikimedia.org/wikipedia/commons/b/bf/Krakow_-_Kosciol_Mariacki.jpg"],

- stream=True,

-)

+# Comment out after the first run, once the knowledge base is loaded

+if agent.knowledge is not None:

+ agent.knowledge.load()

+

+agent.print_response("How do I make chicken and galangal in coconut milk soup", stream=True)

+agent.print_response("What is the history of Thai curry?", stream=True)

```

-Run the Agent:

+Install dependencies and run the Agent:

```shell

-python image_agent.py

+pip install lancedb tantivy pypdf duckduckgo-search

+

+python agent_with_knowledge.py

```

-## Multi-Agent orchestration

+[View this example in the cookbook](./cookbook/getting_started/03_agent_with_knowledge.py)
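As a rough illustration of the retrieval half of Agentic RAG, the sketch below implements a search function an agent could call over a tiny in-memory knowledge base. It rests on stated assumptions: the documents are hypothetical one-line recipe summaries, and ranking uses simple word overlap instead of the embeddings and hybrid search that LanceDB performs. In Agentic RAG the model decides when to call such a function and with what query; only the returned chunks enter the prompt.

```python
from typing import List, Set

# Hypothetical knowledge base; a real setup would chunk the PDF and store
# embeddings in a vector database such as LanceDB.
KNOWLEDGE: List[str] = [
    "Tom Kha Gai: chicken and galangal simmered in coconut milk with lemongrass.",
    "Pad Thai: stir-fried rice noodles with tamarind, egg and peanuts.",
    "Green curry: coconut milk curry made with green chili paste and Thai basil.",
]


def tokenize(text: str) -> Set[str]:
    # Lowercase words with surrounding punctuation removed.
    return {word.strip(".,:?!").lower() for word in text.split()}


def search_knowledge_base(query: str, k: int = 1) -> List[str]:
    """Return the k documents sharing the most words with the query.

    Word overlap is a stand-in for vector or hybrid search.
    """
    query_words = tokenize(query)
    ranked = sorted(
        KNOWLEDGE,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


# The agent would call this as a tool; only the returned chunk enters the prompt.
print(search_knowledge_base("How do I make chicken and galangal in coconut milk soup"))
```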

-Phidata agents can work together as a team to achieve complex tasks, create a file `agent_team.py`

+## Example - Multi Agent Teams

+

+Agents work best when they have a singular purpose, a narrow scope and a small number of tools. When the number of tools grows beyond what the language model can handle or the tools belong to different categories, use a team of agents to spread the load.

```python

-from phi.agent import Agent

-from phi.model.openai import OpenAIChat

-from phi.tools.duckduckgo import DuckDuckGo

-from phi.tools.yfinance import YFinanceTools

+from agno.agent import Agent

+from agno.models.openai import OpenAIChat

+from agno.tools.duckduckgo import DuckDuckGoTools

+from agno.tools.yfinance import YFinanceTools

web_agent = Agent(

name="Web Agent",

role="Search the web for information",

model=OpenAIChat(id="gpt-4o"),

- tools=[DuckDuckGo()],

- instructions=["Always include sources"],

+ tools=[DuckDuckGoTools()],

+ instructions="Always include sources",

show_tool_calls=True,

markdown=True,

)

@@ -160,7 +192,7 @@ finance_agent = Agent(

role="Get financial data",

model=OpenAIChat(id="gpt-4o"),

tools=[YFinanceTools(stock_price=True, analyst_recommendations=True, company_info=True)],

- instructions=["Use tables to display data"],

+ instructions="Use tables to display data",

show_tool_calls=True,

markdown=True,

)

@@ -173,381 +205,113 @@ agent_team = Agent(

markdown=True,

)

-agent_team.print_response("Summarize analyst recommendations and share the latest news for NVDA", stream=True)

+agent_team.print_response("What's the market outlook and financial performance of AI semiconductor companies?", stream=True)

```

-Run the Agent team:

+Install dependencies and run the Agent team:

```shell

-python agent_team.py

-```

-

-## A beautiful Agent UI to chat with your agents

+pip install duckduckgo-search yfinance

-Phidata provides a beautiful UI for interacting with your agents. Let's take it for a spin, create a file `playground.py`

-

-

-

-> [!NOTE]

-> Phidata does not store any data, all agent data is stored locally in a sqlite database.

-

-```python

-from phi.agent import Agent

-from phi.model.openai import OpenAIChat

-from phi.storage.agent.sqlite import SqlAgentStorage

-from phi.tools.duckduckgo import DuckDuckGo

-from phi.tools.yfinance import YFinanceTools

-from phi.playground import Playground, serve_playground_app

-

-web_agent = Agent(

- name="Web Agent",

- model=OpenAIChat(id="gpt-4o"),

- tools=[DuckDuckGo()],

- instructions=["Always include sources"],

- storage=SqlAgentStorage(table_name="web_agent", db_file="agents.db"),

- add_history_to_messages=True,

- markdown=True,

-)

-

-finance_agent = Agent(

- name="Finance Agent",

- model=OpenAIChat(id="gpt-4o"),

- tools=[YFinanceTools(stock_price=True, analyst_recommendations=True, company_info=True, company_news=True)],

- instructions=["Use tables to display data"],

- storage=SqlAgentStorage(table_name="finance_agent", db_file="agents.db"),

- add_history_to_messages=True,

- markdown=True,

-)

-

-app = Playground(agents=[finance_agent, web_agent]).get_app()

-

-if __name__ == "__main__":

- serve_playground_app("playground:app", reload=True)

-```

-

-

-Authenticate with phidata by running the following command:

-

-```shell

-phi auth

-```

-

-or by exporting the `PHI_API_KEY` for your workspace from [phidata.app](https://www.phidata.app)

-

-```bash

-export PHI_API_KEY=phi-***

-```

-

-Install dependencies and run the Agent Playground:

-

-```

-pip install 'fastapi[standard]' sqlalchemy

-

-python playground.py

-```

-

-- Open the link provided or navigate to `http://phidata.app/playground`

-- Select the `localhost:7777` endpoint and start chatting with your agents!

-

-

-

-## Agentic RAG

-

-We were the first to pioneer Agentic RAG using our Auto-RAG paradigm. With Agentic RAG (or auto-rag), the Agent can search its knowledge base (vector db) for the specific information it needs to achieve its task, instead of always inserting the "context" into the prompt.

-

-This saves tokens and improves response quality. Create a file `rag_agent.py`

-

-```python

-from phi.agent import Agent

-from phi.model.openai import OpenAIChat

-from phi.embedder.openai import OpenAIEmbedder

-from phi.knowledge.pdf import PDFUrlKnowledgeBase

-from phi.vectordb.lancedb import LanceDb, SearchType

-

-# Create a knowledge base from a PDF

-knowledge_base = PDFUrlKnowledgeBase(

- urls=["https://phi-public.s3.amazonaws.com/recipes/ThaiRecipes.pdf"],

- # Use LanceDB as the vector database

- vector_db=LanceDb(

- table_name="recipes",

- uri="tmp/lancedb",

- search_type=SearchType.vector,

- embedder=OpenAIEmbedder(model="text-embedding-3-small"),

- ),

-)

-# Comment out after first run as the knowledge base is loaded

-knowledge_base.load()

-

-agent = Agent(

- model=OpenAIChat(id="gpt-4o"),

- # Add the knowledge base to the agent

- knowledge=knowledge_base,

- show_tool_calls=True,

- markdown=True,

-)

-agent.print_response("How do I make chicken and galangal in coconut milk soup", stream=True)

+python agent_team.py

```

-Install libraries and run the Agent:

+[View this example in the cookbook](./cookbook/getting_started/05_agent_team.py)
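The delegation idea can be sketched without any LLM. Below, a hypothetical keyword router plays the team leader and forwards a task to each member whose hints match it. Agno's team leader delegates using the language model and each member's `role`, so `Member`, `keywords`, and `delegate` are illustrative assumptions, not Agno APIs.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Member:
    name: str
    keywords: List[str]           # hypothetical routing hints, standing in for `role`
    handle: Callable[[str], str]  # stand-in for the member's own model and tools


web_member = Member("Web Agent", ["news", "outlook"], lambda task: f"[web] {task}")
finance_member = Member(
    "Finance Agent", ["price", "financial", "analyst"], lambda task: f"[finance] {task}"
)


def delegate(task: str, members: List[Member]) -> List[str]:
    """Forward the task to every member whose keywords appear in it."""
    text = task.lower()
    chosen = [m for m in members if any(k in text for k in m.keywords)]
    if not chosen:
        return ["[leader] no specialist matched"]
    return [m.handle(task) for m in chosen]


for line in delegate(
    "What's the market outlook and financial performance of AI semiconductor companies?",
    [web_member, finance_member],
):
    print(line)
```

Keeping each member narrow (few keywords, few tools) mirrors the advice above: the router only needs to pick a specialist, and each specialist only needs to handle its own category.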

-```shell

-pip install lancedb tantivy pypdf sqlalchemy

+## Performance

-python rag_agent.py

-```

-

-## Structured Outputs

+Agno is specifically designed for building high-performance agentic systems:

-Agents can return their output in a structured format as a Pydantic model.

+- Agent instantiation: <5μs on average (5000x faster than LangGraph).

+- Memory footprint: <0.01 MiB on average (50x less memory than LangGraph).

-Create a file `structured_output.py`

-

-```python

-from typing import List

-from pydantic import BaseModel, Field

-from phi.agent import Agent

-from phi.model.openai import OpenAIChat

-

-# Define a Pydantic model to enforce the structure of the output

-class MovieScript(BaseModel):

- setting: str = Field(..., description="Provide a nice setting for a blockbuster movie.")

- ending: str = Field(..., description="Ending of the movie. If not available, provide a happy ending.")

- genre: str = Field(..., description="Genre of the movie. If not available, select action, thriller or romantic comedy.")

- name: str = Field(..., description="Give a name to this movie")

- characters: List[str] = Field(..., description="Name of characters for this movie.")

- storyline: str = Field(..., description="3 sentence storyline for the movie. Make it exciting!")

-

-# Agent that uses JSON mode

-json_mode_agent = Agent(

- model=OpenAIChat(id="gpt-4o"),

- description="You write movie scripts.",

- response_model=MovieScript,

-)

-# Agent that uses structured outputs

-structured_output_agent = Agent(

- model=OpenAIChat(id="gpt-4o"),

- description="You write movie scripts.",

- response_model=MovieScript,

- structured_outputs=True,

-)

+> Tested on an Apple M4 MacBook Pro.

-json_mode_agent.print_response("New York")

-structured_output_agent.print_response("New York")

-```

+While an Agent's performance is bottlenecked by inference, we must do everything possible to minimize execution time, reduce memory usage, and parallelize tool calls. These numbers may seem minimal, but they add up even at medium scale.

-- Run the `structured_output.py` file

+### Instantiation time

-```shell

-python structured_output.py

-```

+Let's measure the time it takes for an Agent with 1 tool to start up. We'll run the evaluation 1000 times to get a baseline measurement.

-- The output is an object of the `MovieScript` class, here's how it looks:

+Please run the evaluation yourself on your own machine; do not take these results at face value.

```shell

-MovieScript(

-│ setting='A bustling and vibrant New York City',

-│ ending='The protagonist saves the city and reconciles with their estranged family.',

-│ genre='action',

-│ name='City Pulse',

-│ characters=['Alex Mercer', 'Nina Castillo', 'Detective Mike Johnson'],

-│ storyline='In the heart of New York City, a former cop turned vigilante, Alex Mercer, teams up with a street-smart activist, Nina Castillo, to take down a corrupt political figure who threatens to destroy the city. As they navigate through the intricate web of power and deception, they uncover shocking truths that push them to the brink of their abilities. With time running out, they must race against the clock to save New York and confront their own demons.'

-)

-```

+# Setup virtual environment

+./scripts/perf_setup.sh

+# OR Install dependencies manually

+# pip install openai agno langgraph langchain_openai

-## Reasoning Agents (experimental)

+# Agno

+python evals/performance/instantiation_with_tool.py

-Reasoning helps agents work through a problem step-by-step, backtracking and correcting as needed. Create a file `reasoning_agent.py`.

-

-```python

-from phi.agent import Agent

-from phi.model.openai import OpenAIChat

-

-task = (

- "Three missionaries and three cannibals need to cross a river. "

- "They have a boat that can carry up to two people at a time. "

- "If, at any time, the cannibals outnumber the missionaries on either side of the river, the cannibals will eat the missionaries. "

- "How can all six people get across the river safely? Provide a step-by-step solution and show the solutions as an ascii diagram"

-)

-

-reasoning_agent = Agent(model=OpenAIChat(id="gpt-4o"), reasoning=True, markdown=True, structured_outputs=True)

-reasoning_agent.print_response(task, stream=True, show_full_reasoning=True)

+# LangGraph

+python evals/performance/other/langgraph_instantiation.py

```
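The methodology described here (average over 1000 instantiations, plus a memory measurement) can be approximated with the standard library alone. This is a sketch, not the repository's `evals/performance/instantiation_with_tool.py` script: `ToyAgent` and its dummy tool are hypothetical, `timeit` gives the average construction time, `tracemalloc` gives peak allocated memory, and `speedup` reproduces the kind of ratio reported for LangGraph versus Agno.

```python
import timeit
import tracemalloc


class ToyAgent:
    """Hypothetical lightweight agent: stores config, does no I/O at construction."""

    def __init__(self, model_id: str, tools: list) -> None:
        self.model_id = model_id
        self.tools = tools


def get_current_time() -> str:
    # Dummy tool; it is attached to the agent but never called here.
    return "12:00"


def make_agent() -> ToyAgent:
    return ToyAgent(model_id="gpt-4o", tools=[get_current_time])


RUNS = 1000

# Average wall-clock time per instantiation over RUNS runs.
avg_seconds = timeit.timeit(make_agent, number=RUNS) / RUNS

# Peak memory traced while building one agent.
tracemalloc.start()
agent = make_agent()
_, peak_bytes = tracemalloc.get_traced_memory()
tracemalloc.stop()

print(f"avg instantiation: {avg_seconds * 1e6:.2f} us, peak memory: {peak_bytes / 2**20:.4f} MiB")


def speedup(slow_avg: float, fast_avg: float) -> float:
    """Ratio of two average times, e.g. 0.020526 s / 0.000002 s."""
    return slow_avg / fast_avg
```

Absolute numbers depend on the machine and Python version, so compare two frameworks only when both are measured in the same environment.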

-Run the Reasoning Agent:

-

-```shell

-python reasoning_agent.py

-```

-

-> [!WARNING]

-> Reasoning is an experimental feature and will break ~20% of the time. **It is not a replacement for o1.**

->

-> It is an experiment fueled by curiosity, combining COT and tool use. Set your expectations very low for this initial release. For example: It will not be able to count ‘r’s in ‘strawberry’.

-

-## Demo Agents

+The following evaluation is run on an Apple M4 MacBook Pro, but we'll soon be moving it to a GitHub Actions runner for consistency.

-The Agent Playground includes a few demo agents that you can test with. If you have recommendations for other demo agents, please let us know in our [community forum](https://community.phidata.com/).

+LangGraph is on the right; **we start it first to give it a head start**.

-

+Agno is on the left. Notice how it finishes before LangGraph is halfway through the runtime measurement, and before LangGraph has even started the memory measurement. That's how fast Agno is.

-## Monitoring & Debugging

+https://github.com/user-attachments/assets/ba466d45-75dd-45ac-917b-0a56c5742e23

-### Monitoring

-

-Phidata comes with built-in monitoring. You can set `monitoring=True` on any agent to track sessions or set `PHI_MONITORING=true` in your environment.

-

-> [!NOTE]

-> Run `phi auth` to authenticate your local account or export the `PHI_API_KEY`

-

-```python

-from phi.agent import Agent

-

-agent = Agent(markdown=True, monitoring=True)

-agent.print_response("Share a 2 sentence horror story")

-```

-

-Run the agent and monitor the results on [phidata.app/sessions](https://www.phidata.app/sessions)

-

-```shell

-# You can also set the environment variable

-# export PHI_MONITORING=true

+Dividing the average time of a LangGraph Agent by the average time of an Agno Agent:

-python monitoring.py

```

-

-View the agent session on [phidata.app/sessions](https://www.phidata.app/sessions)

-

-

-

-### Debugging

-

-Phidata also includes a built-in debugger that will show debug logs in the terminal. You can set `debug_mode=True` on any agent to track sessions or set `PHI_DEBUG=true` in your environment.

-

-```python

-from phi.agent import Agent

-

-agent = Agent(markdown=True, debug_mode=True)

-agent.print_response("Share a 2 sentence horror story")

+0.020526s / 0.000002s ~ 10,263

```

-

-

-## Getting help

-

-- Read the docs at docs.phidata.com

-- Post your questions on the [community forum](https://community.phidata.com/)

-- Chat with us on discord

+In this particular run, **Agno Agent instantiation is roughly 10,000 times faster than LangGraph Agent instantiation**. Sure, the runtime will be dominated by inference, but these numbers add up as the number of Agents grows.

-## More examples

+Because LangGraph carries a lot of overhead, the numbers get worse as the number of tools and the number of Agents grow.

-### Agent that can write and run python code

+### Memory usage

-


-

-```python

-from phi.agent import Agent

-from phi.model.openai import OpenAIChat

-from phi.embedder.openai import OpenAIEmbedder

-from phi.knowledge.pdf import PDFUrlKnowledgeBase

-from phi.vectordb.lancedb import LanceDb, SearchType

-

-# Create a knowledge base from a PDF

-knowledge_base = PDFUrlKnowledgeBase(

- urls=["https://phi-public.s3.amazonaws.com/recipes/ThaiRecipes.pdf"],

- # Use LanceDB as the vector database

- vector_db=LanceDb(

- table_name="recipes",

- uri="tmp/lancedb",

- search_type=SearchType.vector,

- embedder=OpenAIEmbedder(model="text-embedding-3-small"),

- ),

-)

-# Comment out after first run as the knowledge base is loaded

-knowledge_base.load()

-

-agent = Agent(

- model=OpenAIChat(id="gpt-4o"),

- # Add the knowledge base to the agent

- knowledge=knowledge_base,

- show_tool_calls=True,

- markdown=True,

-)

-agent.print_response("How do I make chicken and galangal in coconut milk soup", stream=True)

+python agent_team.py

```

-Install libraries and run the Agent:

+[View this example in the cookbook](./cookbook/getting_started/05_agent_team.py)

-```shell

-pip install lancedb tantivy pypdf sqlalchemy

+## Performance

-python rag_agent.py

-```

-

-## Structured Outputs

+Agno is specifically designed for building high performance agentic systems:

-Agents can return their output in a structured format as a Pydantic model.

+- Agent instantiation: <5μs on average (5000x faster than LangGraph).

+- Memory footprint: <0.01MiB on average (50x less memory than LangGraph).

-Create a file `structured_output.py`

-

-```python

-from typing import List

-from pydantic import BaseModel, Field

-from phi.agent import Agent

-from phi.model.openai import OpenAIChat

-

-# Define a Pydantic model to enforce the structure of the output

-class MovieScript(BaseModel):

- setting: str = Field(..., description="Provide a nice setting for a blockbuster movie.")

- ending: str = Field(..., description="Ending of the movie. If not available, provide a happy ending.")

- genre: str = Field(..., description="Genre of the movie. If not available, select action, thriller or romantic comedy.")

- name: str = Field(..., description="Give a name to this movie")

- characters: List[str] = Field(..., description="Name of characters for this movie.")

- storyline: str = Field(..., description="3 sentence storyline for the movie. Make it exciting!")

-

-# Agent that uses JSON mode

-json_mode_agent = Agent(

- model=OpenAIChat(id="gpt-4o"),

- description="You write movie scripts.",

- response_model=MovieScript,

-)

-# Agent that uses structured outputs

-structured_output_agent = Agent(

- model=OpenAIChat(id="gpt-4o"),

- description="You write movie scripts.",

- response_model=MovieScript,

- structured_outputs=True,

-)

+> Tested on an Apple M4 MacBook Pro.

-json_mode_agent.print_response("New York")

-structured_output_agent.print_response("New York")

-```

+While an Agent's performance is bottlenecked by inference, we must do everything possible to minimize execution time, reduce memory usage, and parallelize tool calls. These numbers may seem minimal, but they add up even at medium scale.

-- Run the `structured_output.py` file

+### Instantiation time

-```shell

-python structured_output.py

-```

+Let's measure the time it takes for an Agent with 1 tool to start up. We'll run the evaluation 1000 times to get a baseline measurement.

-- The output is an object of the `MovieScript` class, here's how it looks:

+Please run the evaluation on your own machine; do not take these results at face value.

```shell

-MovieScript(

-│ setting='A bustling and vibrant New York City',

-│ ending='The protagonist saves the city and reconciles with their estranged family.',

-│ genre='action',

-│ name='City Pulse',

-│ characters=['Alex Mercer', 'Nina Castillo', 'Detective Mike Johnson'],

-│ storyline='In the heart of New York City, a former cop turned vigilante, Alex Mercer, teams up with a street-smart activist, Nina Castillo, to take down a corrupt political figure who threatens to destroy the city. As they navigate through the intricate web of power and deception, they uncover shocking truths that push them to the brink of their abilities. With time running out, they must race against the clock to save New York and confront their own demons.'

-)

-```

+# Setup virtual environment

+./scripts/perf_setup.sh

+# OR Install dependencies manually

+# pip install openai agno langgraph langchain_openai

-## Reasoning Agents (experimental)

+# Agno

+python evals/performance/instantiation_with_tool.py

-Reasoning helps agents work through a problem step-by-step, backtracking and correcting as needed. Create a file `reasoning_agent.py`.

-

-```python

-from phi.agent import Agent

-from phi.model.openai import OpenAIChat

-

-task = (

- "Three missionaries and three cannibals need to cross a river. "

- "They have a boat that can carry up to two people at a time. "

- "If, at any time, the cannibals outnumber the missionaries on either side of the river, the cannibals will eat the missionaries. "

- "How can all six people get across the river safely? Provide a step-by-step solution and show the solutions as an ascii diagram"

-)

-

-reasoning_agent = Agent(model=OpenAIChat(id="gpt-4o"), reasoning=True, markdown=True, structured_outputs=True)

-reasoning_agent.print_response(task, stream=True, show_full_reasoning=True)

+# LangGraph

+python evals/performance/other/langgraph_instantiation.py

```
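The core idea behind the instantiation benchmark can be sketched in a few lines. This is a minimal, hypothetical helper and not the code in `evals/performance/instantiation_with_tool.py`. It assumes you pass a zero-argument `factory` that builds one object (for example, one Agent), runs a few warm-up calls, then averages `time.perf_counter()` deltas over many runs.

```python
import time
from statistics import mean
from typing import Callable, List


def average_instantiation_seconds(
    factory: Callable[[], object], runs: int = 1000, warmup: int = 10
) -> float:
    """Return the mean wall-clock time (in seconds) of a single factory() call."""
    # Warm-up calls so one-off costs (imports, caches) don't skew the average.
    for _ in range(warmup):
        factory()

    timings: List[float] = []
    for _ in range(runs):
        start = time.perf_counter()
        factory()
        timings.append(time.perf_counter() - start)
    return mean(timings)


if __name__ == "__main__":
    # Stand-in factory; swap in something like `lambda: Agent(...)` to time an Agent.
    print(f"{average_instantiation_seconds(dict):.9f}s")
```

To time an Agent you would pass something like `lambda: Agent(model=OpenAIChat(id="gpt-4o"), tools=[...])`. Treat the repository's eval scripts as the reference measurement; this sketch only shows the shape of the loop.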

-Run the Reasoning Agent:

-

-```shell

-python reasoning_agent.py

-```

-

-> [!WARNING]

-> Reasoning is an experimental feature and will break ~20% of the time. **It is not a replacement for o1.**

->

-> It is an experiment fueled by curiosity, combining COT and tool use. Set your expectations very low for this initial release. For example: It will not be able to count ‘r’s in ‘strawberry’.

-

-## Demo Agents

+The following evaluation is run on an Apple M4 MacBook Pro, but we'll soon be moving this to a GitHub Actions runner for consistency.

-The Agent Playground includes a few demo agents that you can test with. If you have recommendations for other demo agents, please let us know in our [community forum](https://community.phidata.com/).

+LangGraph is on the right; **we start it first to give it a head start**.

-

+Agno is on the left. Notice how it finishes before LangGraph is halfway through the runtime measurement, and before LangGraph has even started the memory measurement. That's how fast Agno is.

-## Monitoring & Debugging

+https://github.com/user-attachments/assets/ba466d45-75dd-45ac-917b-0a56c5742e23

-### Monitoring

-

-Phidata comes with built-in monitoring. You can set `monitoring=True` on any agent to track sessions or set `PHI_MONITORING=true` in your environment.

-

-> [!NOTE]

-> Run `phi auth` to authenticate your local account or export the `PHI_API_KEY`

-

-```python

-from phi.agent import Agent

-

-agent = Agent(markdown=True, monitoring=True)

-agent.print_response("Share a 2 sentence horror story")

-```

-

-Run the agent and monitor the results on [phidata.app/sessions](https://www.phidata.app/sessions)

-

-```shell

-# You can also set the environment variable

-# export PHI_MONITORING=true

+Dividing the average instantiation time of a LangGraph Agent by the average instantiation time of an Agno Agent:

-python monitoring.py

```

-

-View the agent session on [phidata.app/sessions](https://www.phidata.app/sessions)

-

-

-

-### Debugging

-

-Phidata also includes a built-in debugger that will show debug logs in the terminal. You can set `debug_mode=True` on any agent to track sessions or set `PHI_DEBUG=true` in your environment.

-

-```python

-from phi.agent import Agent

-

-agent = Agent(markdown=True, debug_mode=True)

-agent.print_response("Share a 2 sentence horror story")

+0.020526s / 0.000002s ~ 10,263

```

-

-

-## Getting help

-

-- Read the docs at docs.phidata.com

-- Post your questions on the [community forum](https://community.phidata.com/)

-- Chat with us on discord

+In this particular run, **Agno Agent instantiation is roughly 10,000 times faster than LangGraph Agent instantiation**. Runtime will still be dominated by inference, but these numbers add up as the number of Agents grows.

-## More examples

+Because LangGraph carries significant overhead, the numbers will get worse as the number of tools and the number of Agents grow.

-### Agent that can write and run python code

+### Memory usage

-

+To measure memory usage, we use Python's built-in `tracemalloc` module. We first calculate a baseline by running an empty function, then run the Agent 1000 times and calculate the difference. This gives a reasonably isolated measurement of the Agent's memory usage.

-Show code

+We recommend running the evaluation yourself on your own machine, and digging into the code to see how it works. If we've made a mistake, please let us know.
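The baseline-and-difference approach described above can be sketched with `tracemalloc` as follows. This is a simplified, hypothetical helper, not the repository's eval code. It assumes "memory usage" means the bytes still allocated right after one call (while the returned object is alive), averaged over many runs, minus the same figure for an empty function.

```python
import tracemalloc
from typing import Callable


def _average_retained_bytes(func: Callable[[], object], runs: int) -> float:
    total = 0
    for _ in range(runs):
        tracemalloc.start()
        result = func()  # keep a reference so the object is alive when we read memory
        current, _peak = tracemalloc.get_traced_memory()
        tracemalloc.stop()  # stopping also clears the collected traces
        del result
        total += current
    return total / runs


def measure_memory_mib(func: Callable[[], object], runs: int = 1000) -> float:
    """Average MiB allocated by func() beyond an empty-function baseline."""

    def empty() -> None:
        return None

    baseline = _average_retained_bytes(empty, runs)
    measured = _average_retained_bytes(func, runs)
    return (measured - baseline) / (1024 * 1024)


if __name__ == "__main__":
    # Stand-in workload; swap in something like `lambda: Agent(...)` to measure an Agent.
    print(f"{measure_memory_mib(lambda: [0] * 100_000, runs=100):.6f} MiB")
```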

-The `PythonAgent` can achieve tasks by writing and running python code.

-

-- Create a file `python_agent.py`

-

-```python

-from phi.agent.python import PythonAgent

-from phi.model.openai import OpenAIChat

-from phi.file.local.csv import CsvFile

-

-python_agent = PythonAgent(

- model=OpenAIChat(id="gpt-4o"),

- files=[

- CsvFile(

- path="https://phidata-public.s3.amazonaws.com/demo_data/IMDB-Movie-Data.csv",

- description="Contains information about movies from IMDB.",

- )

- ],

- markdown=True,

- pip_install=True,

- show_tool_calls=True,

-)

+Dividing the average memory usage of a LangGraph Agent by the average memory usage of an Agno Agent:

-python_agent.print_response("What is the average rating of movies?")

```

-

-- Run the `python_agent.py`

-

-```shell

-python python_agent.py

+0.137273/0.002528 ~ 54.3

```

-

-

-### Agent that can analyze data using SQL

-

-

+**LangGraph Agents use ~50x more memory than Agno Agents**. In our opinion, memory usage is a much more important metric than instantiation time. Once thousands of Agents are running in production, these numbers directly affect the cost of running them.

-Show code

+### Conclusion

-The `DuckDbAgent` can perform data analysis using SQL.

+Agno agents are designed for performance. While we share some benchmarks against other frameworks, we should be mindful that these numbers are subjective, and that accuracy and reliability matter more than speed.

-- Create a file `data_analyst.py`

-

-```python

-import json

-from phi.model.openai import OpenAIChat

-from phi.agent.duckdb import DuckDbAgent

-

-data_analyst = DuckDbAgent(

- model=OpenAIChat(model="gpt-4o"),

- markdown=True,

- semantic_model=json.dumps(

- {

- "tables": [

- {

- "name": "movies",

- "description": "Contains information about movies from IMDB.",

- "path": "https://phidata-public.s3.amazonaws.com/demo_data/IMDB-Movie-Data.csv",

- }

- ]

- },

- indent=2,

- ),

-)

+We'll be publishing accuracy and reliability benchmarks running on GitHub Actions in the coming weeks. Because each framework is different and we can't tune their performance the way we tune Agno, future benchmarks will only compare Agno against itself.

-data_analyst.print_response(

- "Show me a histogram of ratings. "

- "Choose an appropriate bucket size but share how you chose it. "

- "Show me the result as a pretty ascii diagram",

- stream=True,

-)

-```

+## Cursor Setup

-- Install duckdb and run the `data_analyst.py` file

+When building Agno agents, using the Agno docs as a documentation source in Cursor is a great way to speed up your development.

-```shell

-pip install duckdb

+1. In Cursor, go to the settings or preferences section.

+2. Find the section to manage documentation sources.

+3. Add `https://docs.agno.com` to the list of documentation URLs.

+4. Save the changes.

-python data_analyst.py

-```

+Now, Cursor will have access to the Agno documentation.

-

+## Documentation, Community & More examples

-### Check out the [cookbook](https://github.com/phidatahq/phidata/tree/main/cookbook) for more examples.

+- Docs: docs.agno.com

+- Getting Started Examples: Getting Started Cookbook

+- All Examples: Cookbook

+- Community forum: community.agno.com

+- Chat: Discord

## Contributions

-We're an open-source project and welcome contributions, please read the [contributing guide](https://github.com/phidatahq/phidata/blob/main/CONTRIBUTING.md) for more information.

-

-## Request a feature

-

-- If you have a feature request, please open an issue or make a pull request.

-- If you have ideas on how we can improve, please create a discussion.

+We welcome contributions, read our [contributing guide](https://github.com/agno-agi/agno/blob/main/CONTRIBUTING.md) to get started.

## Telemetry

-Phidata logs which model an agent used so we can prioritize features for the most popular models.

-

-You can disable this by setting `PHI_TELEMETRY=false` in your environment.

+Agno logs which model an agent used so we can prioritize updates to the most popular providers. You can disable this by setting `AGNO_TELEMETRY=false` in your environment.

⬆️ Back to Top

diff --git a/agno.code-workspace b/agno.code-workspace

new file mode 100644

index 0000000000..1e3fd025ca

--- /dev/null

+++ b/agno.code-workspace

@@ -0,0 +1,15 @@

+{

+ "folders": [

+ {

+ "path": "."

+ }

+ ],

+ "settings": {

+ "python.analysis.extraPaths": [

+ "libs/agno",

+ "libs/infra/agno_docker",

+ "libs/infra/agno_aws"

+ ]

+ }

+}

+

diff --git a/cookbook/README.md b/cookbook/README.md

new file mode 100644

index 0000000000..cc24ca6286

--- /dev/null

+++ b/cookbook/README.md

@@ -0,0 +1,67 @@

+# Agno Cookbooks

+

+## Getting Started

+

+The getting started guide walks through the basics of building Agents with Agno. Recipes build on each other, introducing new concepts and capabilities.

+

+## Agent Concepts

+

+The concepts cookbook walks through the core concepts of Agno.

+

+- [Async](./agent_concepts/async)

+- [RAG](./agent_concepts/rag)

+- [Knowledge](./agent_concepts/knowledge)

+- [Memory](./agent_concepts/memory)

+- [Storage](./agent_concepts/storage)

+- [Tools](./agent_concepts/tools)

+- [Reasoning](./agent_concepts/reasoning)

+- [Vector DBs](./agent_concepts/vector_dbs)

+- [Multi-modal Agents](./agent_concepts/multimodal)

+- [Agent Teams](./agent_concepts/teams)

+- [Hybrid Search](./agent_concepts/hybrid_search)

+- [Agent Session](./agent_concepts/agent_session)

+- [Other](./agent_concepts/other)

+

+## Examples

+

+The examples cookbook contains real world examples of building agents with Agno.

+

+## Playground

+

+The playground cookbook contains examples of interacting with agents using the Agno Agent UI.

+

+## Workflows

+

+The workflows cookbook contains examples of building workflows with Agno.

+

+## Scripts

+

+A place to store setup scripts such as `run_pgvector.sh`.

+

+## Setup

+

+### Create and activate a virtual environment

+

+```shell

+python3 -m venv .venv

+source .venv/bin/activate

+```

+

+### Install libraries

+

+```shell

+pip install -U openai agno # And all other packages you might need

+```

+

+### Export your keys

+

+```shell

+export OPENAI_API_KEY=***

+export GOOGLE_API_KEY=***

+```

+

+## Run a cookbook

+

+```shell

+python cookbook/.../example.py

+```

diff --git a/cookbook/agent_concepts/README.md b/cookbook/agent_concepts/README.md

new file mode 100644

index 0000000000..b51e1cf238

--- /dev/null

+++ b/cookbook/agent_concepts/README.md

@@ -0,0 +1,78 @@

+# Agent Concepts

+

+Application of several agent concepts using Agno.

+

+## Overview

+

+### Async

+

+Async refers to agents built with `async def` support, allowing them to seamlessly integrate into asynchronous Python applications. While async agents are not inherently parallel, they allow better handling of I/O-bound operations, improving responsiveness in Python apps.

+

+Examples of async agents can be found under `/cookbook/agent_concepts/async/`
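To show what "not inherently parallel, but better for I/O-bound work" means, here is a minimal sketch using plain `asyncio`. The `fake_agent_run` coroutine is a hypothetical stand-in for an I/O-bound call such as `agent.arun(...)`. The three awaits overlap on a single thread, so the total time is close to the longest delay rather than the sum of all three.

```python
import asyncio
import time
from typing import List


async def fake_agent_run(name: str, delay: float) -> str:
    # Hypothetical stand-in for an I/O-bound call, e.g. waiting on a model API.
    await asyncio.sleep(delay)
    return f"{name} done"


async def run_concurrently() -> List[str]:
    # gather() schedules all coroutines on one event loop and preserves input order.
    return await asyncio.gather(
        fake_agent_run("web", 0.2),
        fake_agent_run("finance", 0.2),
        fake_agent_run("news", 0.2),
    )


if __name__ == "__main__":
    start = time.perf_counter()
    results = asyncio.run(run_concurrently())
    print(results, f"{time.perf_counter() - start:.2f}s")  # roughly 0.2s, not 0.6s
```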

+

+### Hybrid Search

+

+Hybrid Search combines multiple search paradigms—such as vector similarity search and traditional keyword-based search—to retrieve the most relevant results for a given query. This approach ensures that agents can find both semantically similar results and exact keyword matches, improving accuracy and context-awareness in diverse use cases.

+

+Hybrid search examples can be found under `/cookbook/agent_concepts/hybrid_search/`
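One common way to merge a vector-similarity ranking with a keyword ranking is reciprocal rank fusion (RRF), where each document scores the sum of `1 / (k + rank)` across rankings. The sketch below is a generic illustration of that technique only; the vector databases Agno integrates with may fuse results differently (for example, with weighted scores), and the document IDs are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List


def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> List[str]:
    """Fuse several ranked lists of document IDs into one list (best first)."""
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            # Documents ranked highly by either search get a larger contribution.
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


if __name__ == "__main__":
    vector_hits = ["a", "b", "c"]   # hypothetical semantic-search results
    keyword_hits = ["c", "a", "d"]  # hypothetical keyword-search results
    print(reciprocal_rank_fusion([vector_hits, keyword_hits]))
```

A document that appears near the top of both lists (here `a`) outranks one that is strong in only a single list.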

+

+### Knowledge

+

+Agents use a knowledge base to supplement their training data with domain expertise.

+Knowledge is stored in a vector database and provides agents with business context at query time, helping them respond in a context-aware manner.

+

+Examples of Agents with knowledge can be found under `/cookbook/agent_concepts/knowledge/`

+

+### Memory

+

+Agno provides 3 types of memory for Agents:

+

+1. Chat History: The message history of the session. Agno will store the sessions in a database for you, and retrieve them when you resume a session.

+2. User Memories: Notes and insights about the user, which help the model personalize its responses.

+3. Summaries: A summary of the conversation, which is added to the prompt when chat history gets too long.

+

+Examples of Agents using different memory types can be found under `/cookbook/agent_concepts/memory/`
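The idea behind summaries (item 3 above) can be illustrated with a small, hypothetical policy. Recent messages are kept verbatim, and once the history exceeds a limit, older turns are represented by a summary message. This is a sketch under that assumption, not Agno's actual memory implementation; `build_prompt_messages` and `max_history` are illustrative names.

```python
from typing import Dict, List, Optional

Message = Dict[str, str]


def build_prompt_messages(
    history: List[Message], summary: Optional[str], max_history: int = 6
) -> List[Message]:
    """Hypothetical policy: keep recent turns verbatim, summarize older ones."""
    if len(history) <= max_history or summary is None:
        return list(history)
    recent = history[-max_history:]
    summary_message = {
        "role": "system",
        "content": f"Summary of the earlier conversation: {summary}",
    }
    return [summary_message] + recent


if __name__ == "__main__":
    history = [{"role": "user", "content": f"message {i}"} for i in range(10)]
    for message in build_prompt_messages(history, "The user asked about soups.", max_history=4):
        print(message)
```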

+

+### Multimodal

+

+In addition to text, Agno agents support image, audio, and video inputs and can generate image and audio outputs.

+

+Examples with multimodal inputs and outputs using Agno can be found under `/cookbook/agent_concepts/multimodal/`

+

+### RAG

+

+RAG (Retrieval-Augmented Generation) combines external data sources with the model's generation process to produce context-aware, accurate, and relevant responses. It retrieves information from vector databases and can be combined with agent memory, such as chat history and summaries, to provide coherent and informed answers.

+

+Examples of agentic RAG can be found under `/cookbook/agent_concepts/rag/`

+

+### Reasoning

+

+Reasoning is an *experimental feature* that enables an Agent to think through a problem step-by-step before jumping into a response. The Agent works through different ideas, validating and correcting as needed. Once it reaches a final answer, it will validate and provide a response.

+

+Examples of agents showing their reasoning can be found under `/cookbook/agent_concepts/reasoning/`

+

+### Storage

+

+Agents use storage to persist sessions and session state by storing them in a database.

+

+Agents come with built-in memory, but it only lasts while the session is active. To continue conversations across sessions, we store agent sessions in a database such as SQLite or PostgreSQL.

+

+Examples of using storage with Agno agents can be found under `/cookbook/agent_concepts/storage/`
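Persisting sessions amounts to writing session state to a table keyed by session ID and reading it back later. The sketch below uses Python's standard `sqlite3` module with a hypothetical `agent_sessions` schema. It illustrates the idea and does not reproduce Agno's storage classes or their table layout.

```python
import json
import sqlite3
from typing import Any, Dict, Optional


def create_sessions_table(conn: sqlite3.Connection) -> None:
    # Hypothetical schema: one row per session, state serialized as JSON text.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS agent_sessions ("
        "session_id TEXT PRIMARY KEY, session_state TEXT NOT NULL)"
    )


def save_session(conn: sqlite3.Connection, session_id: str, state: Dict[str, Any]) -> None:
    # INSERT OR REPLACE overwrites any previous state stored for the same session_id.
    conn.execute(
        "INSERT OR REPLACE INTO agent_sessions (session_id, session_state) VALUES (?, ?)",
        (session_id, json.dumps(state)),
    )
    conn.commit()


def load_session(conn: sqlite3.Connection, session_id: str) -> Optional[Dict[str, Any]]:
    row = conn.execute(
        "SELECT session_state FROM agent_sessions WHERE session_id = ?", (session_id,)
    ).fetchone()
    return json.loads(row[0]) if row else None


if __name__ == "__main__":
    # ":memory:" keeps the demo self-contained; a file path such as "agents.db" persists across runs.
    conn = sqlite3.connect(":memory:")
    create_sessions_table(conn)
    save_session(conn, "session-1", {"messages": [{"role": "user", "content": "Hi"}]})
    print(load_session(conn, "session-1"))
```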

+

+### Teams

+

+Multiple agents can be combined to form a team and complete complicated tasks as a cohesive unit.

+

+Examples of using agent teams with Agno can be found under `/cookbook/agent_concepts/teams/`

+

+### Tools

+

+Agents use tools to take actions and interact with external systems. A tool is a function that an Agent can use to achieve a task. For example: searching the web, running SQL, sending an email or calling APIs.

+

+Examples of using tools with Agno agents can be found under `/cookbook/agent_concepts/tools/`
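Since a tool is just a function, a framework needs its name, docstring, and typed parameters to describe it to the model. The sketch below shows a hypothetical tool (`get_movie_rating`, backed by made-up in-memory data) and a hypothetical `describe_tool` helper that extracts that metadata with `inspect`. It is not how Agno builds its tool definitions internally.

```python
import inspect
from typing import Any, Callable, Dict


def get_movie_rating(title: str) -> str:
    """Look up the rating for a movie title."""
    ratings = {"Example Movie": 7.5}  # hypothetical in-memory data
    rating = ratings.get(title)
    return f"{title}: {rating}" if rating is not None else f"No rating found for {title}"


def describe_tool(func: Callable[..., Any]) -> Dict[str, Any]:
    """Collect the metadata a model typically needs to decide when to call a tool."""
    signature = inspect.signature(func)
    parameters = {
        name: getattr(param.annotation, "__name__", str(param.annotation))
        for name, param in signature.parameters.items()
    }
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "parameters": parameters,
    }


if __name__ == "__main__":
    print(describe_tool(get_movie_rating))
    print(get_movie_rating("Example Movie"))
```

With Agno, plain functions like this are passed to an Agent through `tools=[...]`; check the docs for your version for the exact behavior.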

+

+### Vector DBs

+

+Vector databases store information as embeddings and let us search for results similar to an input query, using cosine similarity or full-text search. These results are then provided to the Agent as context so it can respond in a context-aware manner using Retrieval-Augmented Generation (RAG).

+

+Examples of using vector databases with Agno can be found under `/cookbook/agent_concepts/vector_dbs/`
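The similarity search step can be illustrated with a tiny in-memory index. This sketch assumes toy two-dimensional "embeddings" and brute-force cosine similarity. Real vector databases use embeddings produced by an embedder model and approximate-nearest-neighbor indexes instead.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat zero vectors as dissimilar to everything
    return dot / (norm_a * norm_b)


def top_k(
    query: Sequence[float], index: Dict[str, List[float]], k: int = 2
) -> List[Tuple[str, float]]:
    """Brute-force search: score every stored vector, return the k most similar."""
    scored = [(doc_id, cosine_similarity(query, vector)) for doc_id, vector in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    # Hypothetical toy embeddings for three documents.
    index = {"soup": [1.0, 0.0], "curry": [0.7, 0.7], "dessert": [0.0, 1.0]}
    print(top_k([1.0, 0.1], index))
```

The top results would then be inserted into the Agent's context, which is the retrieval half of RAG.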

diff --git a/cookbook/agents/__init__.py b/cookbook/agent_concepts/__init__.py

similarity index 100%

rename from cookbook/agents/__init__.py

rename to cookbook/agent_concepts/__init__.py

diff --git a/cookbook/agents_101/__init__.py b/cookbook/agent_concepts/async/__init__.py

similarity index 100%

rename from cookbook/agents_101/__init__.py

rename to cookbook/agent_concepts/async/__init__.py

diff --git a/cookbook/agent_concepts/async/basic.py b/cookbook/agent_concepts/async/basic.py

new file mode 100644

index 0000000000..fc456ba462

--- /dev/null

+++ b/cookbook/agent_concepts/async/basic.py

@@ -0,0 +1,13 @@

+import asyncio

+

+from agno.agent import Agent

+from agno.models.openai import OpenAIChat

+

+agent = Agent(

+ model=OpenAIChat(id="gpt-4o"),

+ description="You help people with their health and fitness goals.",

+ instructions=["Recipes should be under 5 ingredients"],

+ markdown=True,

+)

+# Print a response to the CLI

+asyncio.run(agent.aprint_response("Share a breakfast recipe.", stream=True))

diff --git a/cookbook/agent_concepts/async/data_analyst.py b/cookbook/agent_concepts/async/data_analyst.py

new file mode 100644

index 0000000000..d419497faa

--- /dev/null

+++ b/cookbook/agent_concepts/async/data_analyst.py

@@ -0,0 +1,30 @@

+"""Run `pip install duckdb` to install dependencies."""

+

+import asyncio

+from textwrap import dedent

+

+from agno.agent import Agent

+from agno.models.openai import OpenAIChat

+from agno.tools.duckdb import DuckDbTools

+

+duckdb_tools = DuckDbTools(

+ create_tables=False, export_tables=False, summarize_tables=False

+)

+duckdb_tools.create_table_from_path(

+ path="https://agno-public.s3.amazonaws.com/demo_data/IMDB-Movie-Data.csv",

+ table="movies",

+)

+

+agent = Agent(

+ model=OpenAIChat(id="gpt-4o"),

+ tools=[duckdb_tools],

+ markdown=True,

+ show_tool_calls=True,

+ additional_context=dedent("""\

+ You have access to the following tables:

+ - movies: contains information about movies from IMDB.

+ """),

+)

+asyncio.run(

+ agent.aprint_response("What is the average rating of movies?", stream=False)

+)

diff --git a/cookbook/agent_concepts/async/gather_agents.py b/cookbook/agent_concepts/async/gather_agents.py

new file mode 100644

index 0000000000..8b33eff600

--- /dev/null

+++ b/cookbook/agent_concepts/async/gather_agents.py

@@ -0,0 +1,41 @@

+import asyncio

+

+from agno.agent import Agent

+from agno.models.openai import OpenAIChat

+from agno.tools.duckduckgo import DuckDuckGoTools

+from rich.pretty import pprint

+

+providers = ["openai", "anthropic", "ollama", "cohere", "google"]

+instructions = [

+ "Your task is to write a well researched report on AI providers.",

+ "The report should be unbiased and factual.",

+]

+

+

+async def get_reports():

+ tasks = []

+ for provider in providers:

+ agent = Agent(

+ model=OpenAIChat(id="gpt-4"),

+ instructions=instructions,

+ tools=[DuckDuckGoTools()],

+ )

+ tasks.append(

+ agent.arun(f"Write a report on the following AI provider: {provider}")

+ )

+

+ results = await asyncio.gather(*tasks)

+ return results

+

+

+async def main():

+ results = await get_reports()

+ for result in results:

+ print("************")

+ pprint(result.content)

+ print("************")

+ print("\n")

+

+

+if __name__ == "__main__":

+ asyncio.run(main())

diff --git a/cookbook/agent_concepts/async/reasoning.py b/cookbook/agent_concepts/async/reasoning.py

new file mode 100644

index 0000000000..013547c299

--- /dev/null

+++ b/cookbook/agent_concepts/async/reasoning.py

@@ -0,0 +1,22 @@

+import asyncio

+

+from agno.agent import Agent

+from agno.cli.console import console

+from agno.models.openai import OpenAIChat

+

+task = "9.11 and 9.9 -- which is bigger?"

+

+regular_agent = Agent(model=OpenAIChat(id="gpt-4o"), markdown=True)

+reasoning_agent = Agent(

+ model=OpenAIChat(id="gpt-4o"),

+ reasoning=True,

+ markdown=True,

+ structured_outputs=True,

+)

+

+console.rule("[bold green]Regular Agent[/bold green]")

+asyncio.run(regular_agent.aprint_response(task, stream=True))

+console.rule("[bold yellow]Reasoning Agent[/bold yellow]")

+asyncio.run(

+ reasoning_agent.aprint_response(task, stream=True, show_full_reasoning=True)

+)

diff --git a/cookbook/agent_concepts/async/structured_output.py b/cookbook/agent_concepts/async/structured_output.py

new file mode 100644

index 0000000000..db9bd57be4

--- /dev/null

+++ b/cookbook/agent_concepts/async/structured_output.py

@@ -0,0 +1,52 @@

+import asyncio

+from typing import List

+

+from agno.agent import Agent, RunResponse # noqa

+from agno.models.openai import OpenAIChat

+from pydantic import BaseModel, Field

+from rich.pretty import pprint # noqa

+

+

+class MovieScript(BaseModel):

+ setting: str = Field(

+ ..., description="Provide a nice setting for a blockbuster movie."

+ )

+ ending: str = Field(

+ ...,

+ description="Ending of the movie. If not available, provide a happy ending.",

+ )

+ genre: str = Field(

+ ...,

+ description="Genre of the movie. If not available, select action, thriller or romantic comedy.",

+ )

+ name: str = Field(..., description="Give a name to this movie")

+ characters: List[str] = Field(..., description="Name of characters for this movie.")

+ storyline: str = Field(

+ ..., description="3 sentence storyline for the movie. Make it exciting!"

+ )

+

+

+# Agent that uses JSON mode

+json_mode_agent = Agent(

+ model=OpenAIChat(id="gpt-4o"),

+ description="You write movie scripts.",

+ response_model=MovieScript,

+)

+

+# Agent that uses structured outputs

+structured_output_agent = Agent(

+ model=OpenAIChat(id="gpt-4o-2024-08-06"),

+ description="You write movie scripts.",

+ response_model=MovieScript,

+ structured_outputs=True,

+)

+

+

+# Get the response in a variable

+# json_mode_response: RunResponse = asyncio.run(json_mode_agent.arun("New York"))

+# pprint(json_mode_response.content)

+# structured_output_response: RunResponse = asyncio.run(structured_output_agent.arun("New York"))

+# pprint(structured_output_response.content)

+

+asyncio.run(json_mode_agent.aprint_response("New York"))

+asyncio.run(structured_output_agent.aprint_response("New York"))

diff --git a/cookbook/agent_concepts/async/tool_use.py b/cookbook/agent_concepts/async/tool_use.py

new file mode 100644

index 0000000000..5f92b3bfa2

--- /dev/null

+++ b/cookbook/agent_concepts/async/tool_use.py

@@ -0,0 +1,13 @@

+import asyncio

+

+from agno.agent import Agent

+from agno.models.openai import OpenAIChat

+from agno.tools.duckduckgo import DuckDuckGoTools

+

+agent = Agent(

+ model=OpenAIChat(id="gpt-4o"),

+ tools=[DuckDuckGoTools()],

+ show_tool_calls=True,

+ markdown=True,

+)

+asyncio.run(agent.aprint_response("What's happening in the UK and the USA?"))

diff --git a/cookbook/assistants/__init__.py b/cookbook/agent_concepts/hybrid_search/__init__.py

similarity index 100%

rename from cookbook/assistants/__init__.py

rename to cookbook/agent_concepts/hybrid_search/__init__.py

diff --git a/cookbook/agent_concepts/hybrid_search/lancedb/README.md b/cookbook/agent_concepts/hybrid_search/lancedb/README.md

new file mode 100644

index 0000000000..4938b9aba4

--- /dev/null

+++ b/cookbook/agent_concepts/hybrid_search/lancedb/README.md

@@ -0,0 +1,20 @@

+## LanceDB Hybrid Search

+

+### 1. Create a virtual environment

+

+```shell

+python3 -m venv ~/.venvs/aienv

+source ~/.venvs/aienv/bin/activate

+```

+

+### 2. Install libraries

+

+```shell

+pip install -U lancedb tantivy pypdf openai agno

+```

+

+### 3. Run LanceDB Hybrid Search Agent

+

+```shell

+python cookbook/agent_concepts/hybrid_search/lancedb/agent.py

+```

diff --git a/cookbook/assistants/advanced_rag/__init__.py b/cookbook/agent_concepts/hybrid_search/lancedb/__init__.py

similarity index 100%

rename from cookbook/assistants/advanced_rag/__init__.py

rename to cookbook/agent_concepts/hybrid_search/lancedb/__init__.py

diff --git a/cookbook/agent_concepts/hybrid_search/lancedb/agent.py b/cookbook/agent_concepts/hybrid_search/lancedb/agent.py

new file mode 100644

index 0000000000..563b185a16

--- /dev/null

+++ b/cookbook/agent_concepts/hybrid_search/lancedb/agent.py

@@ -0,0 +1,44 @@

+from typing import Optional

+

+import typer

+from agno.agent import Agent

+from agno.knowledge.pdf_url import PDFUrlKnowledgeBase

+from agno.vectordb.lancedb import LanceDb

+from agno.vectordb.search import SearchType

+from rich.prompt import Prompt

+

+# LanceDB Vector DB

+vector_db = LanceDb(

+ table_name="recipes",

+ uri="tmp/lancedb",

+ search_type=SearchType.hybrid,

+)

+

+# Knowledge Base

+knowledge_base = PDFUrlKnowledgeBase(

+ urls=["https://agno-public.s3.amazonaws.com/recipes/ThaiRecipes.pdf"],

+ vector_db=vector_db,

+)

+

+

+def lancedb_agent(user: str = "user"):

+ agent = Agent(

+ user_id=user,

+ knowledge=knowledge_base,

+ search_knowledge=True,

+ show_tool_calls=True,

+ debug_mode=True,

+ )

+

+ while True:

+ message = Prompt.ask(f"[bold] :sunglasses: {user} [/bold]")

+ if message in ("exit", "bye"):

+ break

+ agent.print_response(message)

+

+

+if __name__ == "__main__":

+ # Comment out after first run

+ knowledge_base.load(recreate=False)

+

+ typer.run(lancedb_agent)

diff --git a/cookbook/agent_concepts/hybrid_search/pgvector/README.md b/cookbook/agent_concepts/hybrid_search/pgvector/README.md

new file mode 100644

index 0000000000..29eb9b02b4

--- /dev/null

+++ b/cookbook/agent_concepts/hybrid_search/pgvector/README.md

@@ -0,0 +1,26 @@

+## Pgvector Hybrid Search

+

+### 1. Create a virtual environment

+

+```shell

+python3 -m venv ~/.venvs/aienv

+source ~/.venvs/aienv/bin/activate

+```

+

+### 2. Install libraries

+

+```shell

+pip install -U pgvector pypdf "psycopg[binary]" sqlalchemy openai agno

+```

+

+### 3. Run PgVector

+

+```shell

+./cookbook/scripts/run_pgvector.sh

+```

+

+### 4. Run PgVector Hybrid Search Agent

+

+```shell

+python cookbook/agent_concepts/hybrid_search/pgvector/agent.py

+```

diff --git a/cookbook/assistants/advanced_rag/hybrid_search/__init__.py b/cookbook/agent_concepts/hybrid_search/pgvector/__init__.py

similarity index 100%

rename from cookbook/assistants/advanced_rag/hybrid_search/__init__.py

rename to cookbook/agent_concepts/hybrid_search/pgvector/__init__.py

diff --git a/cookbook/agent_concepts/hybrid_search/pgvector/agent.py b/cookbook/agent_concepts/hybrid_search/pgvector/agent.py

new file mode 100644

index 0000000000..ece1dab42b

--- /dev/null

+++ b/cookbook/agent_concepts/hybrid_search/pgvector/agent.py

@@ -0,0 +1,27 @@

+from agno.agent import Agent

+from agno.knowledge.pdf_url import PDFUrlKnowledgeBase

+from agno.models.openai import OpenAIChat

+from agno.vectordb.pgvector import PgVector, SearchType

+

+db_url = "postgresql+psycopg://ai:ai@localhost:5532/ai"

+knowledge_base = PDFUrlKnowledgeBase(

+ urls=["https://agno-public.s3.amazonaws.com/recipes/ThaiRecipes.pdf"],

+ vector_db=PgVector(

+ table_name="recipes", db_url=db_url, search_type=SearchType.hybrid

+ ),

+)

+# Load the knowledge base: Comment out after first run

+knowledge_base.load(recreate=False)

+

+agent = Agent(

+ model=OpenAIChat(id="gpt-4o"),

+ knowledge=knowledge_base,

+ search_knowledge=True,

+ read_chat_history=True,

+ show_tool_calls=True,

+ markdown=True,

+)

+agent.print_response(

+ "How do I make chicken and galangal in coconut milk soup", stream=True

+)

+agent.print_response("What was my last question?", stream=True)
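The `db_url` above follows SQLAlchemy's `dialect+driver://user:password@host:port/database` format, so `postgresql+psycopg` selects the psycopg driver. Host port 5532 matches the `-p 5532:5432` mapping in the `docker run` command in the knowledge README, which publishes the container's Postgres port 5432 on the host. Here is a minimal standard-library sketch that splits the URL into those parts. The helper name `describe_db_url` is hypothetical.

```python
# Split the PgVector cookbook's SQLAlchemy-style URL into its components
# using only the standard library.
from urllib.parse import urlsplit


def describe_db_url(url: str) -> dict:
    """Return the dialect+driver, credentials, host, port and database name."""
    parts = urlsplit(url)
    return {
        "dialect_driver": parts.scheme,  # e.g. "postgresql+psycopg"
        "user": parts.username,
        "password": parts.password,
        "host": parts.hostname,
        "port": parts.port,  # parsed as an int
        "database": parts.path.lstrip("/"),
    }


print(describe_db_url("postgresql+psycopg://ai:ai@localhost:5532/ai"))
```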

diff --git a/cookbook/agent_concepts/hybrid_search/pinecone/README.md b/cookbook/agent_concepts/hybrid_search/pinecone/README.md

new file mode 100644

index 0000000000..3015993f0e

--- /dev/null

+++ b/cookbook/agent_concepts/hybrid_search/pinecone/README.md

@@ -0,0 +1,26 @@

+## Pinecone Hybrid Search Agent

+

+### 1. Create a virtual environment

+

+```shell

+python3 -m venv ~/.venvs/aienv

+source ~/.venvs/aienv/bin/activate

+```

+

+### 2. Install libraries

+

+```shell

+pip install -U pinecone pinecone-text pypdf openai agno

+```

+

+### 3. Set Pinecone API Key

+

+```shell

+export PINECONE_API_KEY=***

+```

+

+### 4. Run Pinecone Hybrid Search Agent

+

+```shell

+python cookbook/agent_concepts/hybrid_search/pinecone/agent.py

+```
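The Pinecone agent reads the key with `os.getenv("PINECONE_API_KEY")`, which returns `None` when the variable is unset. A missing key is then likely to surface later, inside the Pinecone client, rather than at startup. A small fail-fast check is one option. `require_env` is a hypothetical helper, not part of agno.

```python
import os


def require_env(name: str) -> str:
    """Return an environment variable's value, failing fast if it is missing or empty."""
    value = os.getenv(name)
    if not value:
        raise RuntimeError(f"{name} is not set; run `export {name}=...` first")
    return value
```

In `agent.py`, `api_key = require_env("PINECONE_API_KEY")` would take the place of the `os.getenv` call.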

diff --git a/cookbook/assistants/advanced_rag/image_search/__init__.py b/cookbook/agent_concepts/hybrid_search/pinecone/__init__.py

similarity index 100%

rename from cookbook/assistants/advanced_rag/image_search/__init__.py

rename to cookbook/agent_concepts/hybrid_search/pinecone/__init__.py

diff --git a/cookbook/agent_concepts/hybrid_search/pinecone/agent.py b/cookbook/agent_concepts/hybrid_search/pinecone/agent.py

new file mode 100644

index 0000000000..e8fec8540a

--- /dev/null

+++ b/cookbook/agent_concepts/hybrid_search/pinecone/agent.py

@@ -0,0 +1,52 @@

+import os

+from typing import Optional

+

+import nltk # type: ignore

+import typer

+from agno.agent import Agent

+from agno.knowledge.pdf_url import PDFUrlKnowledgeBase

+from agno.vectordb.pineconedb import PineconeDb

+from rich.prompt import Prompt

+

+nltk.download("punkt")

+nltk.download("punkt_tab")

+

+api_key = os.getenv("PINECONE_API_KEY")

+index_name = "thai-recipe-hybrid-search"

+

+vector_db = PineconeDb(

+ name=index_name,

+ dimension=1536,

+ metric="cosine",

+ spec={"serverless": {"cloud": "aws", "region": "us-east-1"}},

+ api_key=api_key,

+ use_hybrid_search=True,

+ hybrid_alpha=0.5,

+)

+

+knowledge_base = PDFUrlKnowledgeBase(

+ urls=["https://agno-public.s3.amazonaws.com/recipes/ThaiRecipes.pdf"],

+ vector_db=vector_db,

+)

+

+

+def pinecone_agent(user: str = "user"):

+ agent = Agent(

+ user_id=user,

+ knowledge=knowledge_base,

+ search_knowledge=True,

+ show_tool_calls=True,

+ )

+

+ while True:

+ message = Prompt.ask(f"[bold] :sunglasses: {user} [/bold]")

+ if message in ("exit", "bye"):

+ break

+ agent.print_response(message)

+

+

+if __name__ == "__main__":

+ # Comment out after first run

+ knowledge_base.load(recreate=False, upsert=True)

+

+ typer.run(pinecone_agent)
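`use_hybrid_search=True` with `hybrid_alpha=0.5` weights dense (semantic) and sparse (keyword) relevance equally. Pinecone's hybrid-search documentation describes this weighting as a convex combination. The dense query values are scaled by `alpha` and the sparse values by `1 - alpha`, so a dot-product score becomes `alpha * dense_score + (1 - alpha) * sparse_score`. The sketch below reproduces that arithmetic in plain Python. It assumes, without confirmation from this diff, that agno's `PineconeDb` weights queries the same way. The function names and vectors are hypothetical.

```python
# Sketch of Pinecone-style hybrid weighting. Assumption: mirrors Pinecone's
# documented convex combination, not agno's PineconeDb internals.
from typing import Dict, List, Tuple

# Sparse vectors in Pinecone's shape: {"indices": [...], "values": [...]}
SparseVector = Dict[str, list]


def hybrid_scale(
    dense: List[float], sparse: SparseVector, alpha: float
) -> Tuple[List[float], SparseVector]:
    """Scale dense values by alpha and sparse values by (1 - alpha)."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    scaled_dense = [v * alpha for v in dense]
    scaled_sparse = {
        "indices": list(sparse["indices"]),
        "values": [v * (1 - alpha) for v in sparse["values"]],
    }
    return scaled_dense, scaled_sparse


def dense_dot(a: List[float], b: List[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def sparse_dot(a: SparseVector, b: SparseVector) -> float:
    # Only indices present in both sparse vectors contribute.
    b_map = dict(zip(b["indices"], b["values"]))
    return sum(v * b_map.get(i, 0.0) for i, v in zip(a["indices"], a["values"]))


# Hypothetical query and document vectors.
query_dense, query_sparse = [1.0, 0.0], {"indices": [3, 7], "values": [2.0, 1.0]}
doc_dense, doc_sparse = [0.5, 0.5], {"indices": [3], "values": [1.0]}

q_dense, q_sparse = hybrid_scale(query_dense, query_sparse, alpha=0.5)
score = dense_dot(q_dense, doc_dense) + sparse_dot(q_sparse, doc_sparse)
print(score)  # 0.5 * 0.5 (dense) + 0.5 * 2.0 (sparse) = 1.25
```

Raising `alpha` toward 1.0 favours semantic similarity. Lowering it toward 0.0 favours exact keyword matches.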

diff --git a/cookbook/agent_concepts/knowledge/README.md b/cookbook/agent_concepts/knowledge/README.md

new file mode 100644

index 0000000000..e25e821232

--- /dev/null

+++ b/cookbook/agent_concepts/knowledge/README.md

@@ -0,0 +1,58 @@

+# Agent Knowledge

+

+A **Knowledge Base** is information that the Agent can search to improve its responses. This directory contains a series of cookbooks that demonstrate how to build a knowledge base for the Agent.

+

+> Note: Fork and clone this repository if needed.

+

+### 1. Create a virtual environment

+

+```shell

+python3 -m venv ~/.venvs/aienv

+source ~/.venvs/aienv/bin/activate

+```

+

+### 2. Install libraries

+

+```shell

+pip install -U pgvector "psycopg[binary]" sqlalchemy openai agno

+```

+

+### 3. Run PgVector

+

+> Install [Docker Desktop](https://docs.docker.com/desktop/install/mac-install/) first.

+

+- Run using a helper script

+

+```shell

+./cookbook/scripts/run_pgvector.sh

+```

+

+- OR run using the docker run command

+

+```shell

+docker run -d \

+ -e POSTGRES_DB=ai \

+ -e POSTGRES_USER=ai \

+ -e POSTGRES_PASSWORD=ai \

+ -e PGDATA=/var/lib/postgresql/data/pgdata \

+ -v pgvolume:/var/lib/postgresql/data \

+ -p 5532:5432 \

+ --name pgvector \

+ agnohq/pgvector:16

+```

+

+### 4. Test Knowledge Cookbooks

+

+For example, the PDF URL Knowledge Base:

+

+- Install libraries

+

+```shell

+pip install -U pypdf bs4

+```

+

+- Run the PDF URL script

+

+```shell

+python cookbook/agent_concepts/knowledge/pdf_url.py

+```
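Conceptually, `search_knowledge=True` lets the Agent search the knowledge base for relevant passages and use them in its answer. The toy class below illustrates only that search step. It uses simple word overlap instead of embeddings, whereas in these cookbooks the vector database (for example PgVector) ranks passages by vector similarity. `ToyKnowledgeBase` and its methods are hypothetical and not part of agno.

```python
# Toy stand-in for a knowledge base search: rank stored passages by how many
# words they share with the query. Assumption: real agno knowledge bases use
# embeddings in a vector DB; these names are hypothetical.
import re
from dataclasses import dataclass, field
from typing import List, Set


def _tokens(text: str) -> Set[str]:
    """Lowercase alphanumeric words in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


@dataclass
class ToyKnowledgeBase:
    documents: List[str] = field(default_factory=list)

    def add(self, text: str) -> None:
        self.documents.append(text)

    def search(self, query: str, num_documents: int = 2) -> List[str]:
        query_tokens = _tokens(query)
        scored = [(len(query_tokens & _tokens(doc)), doc) for doc in self.documents]
        # Keep documents sharing at least one word, best matches first.
        # sorted() is stable, so ties keep insertion order.
        ranked = sorted((s for s in scored if s[0] > 0), key=lambda s: -s[0])
        return [doc for _, doc in ranked[:num_documents]]


kb = ToyKnowledgeBase()
kb.add("Tom kha gai: chicken and galangal in coconut milk soup")
kb.add("Pad thai: stir-fried rice noodles")
kb.add("Green curry with chicken")
print(kb.search("chicken galangal soup"))
```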

diff --git a/cookbook/assistants/advanced_rag/pinecone_hybrid_search/__init__.py b/cookbook/agent_concepts/knowledge/__init__.py

similarity index 100%

rename from cookbook/assistants/advanced_rag/pinecone_hybrid_search/__init__.py

rename to cookbook/agent_concepts/knowledge/__init__.py

diff --git a/cookbook/agent_concepts/knowledge/arxiv_kb.py b/cookbook/agent_concepts/knowledge/arxiv_kb.py

new file mode 100644

index 0000000000..b07cfe2def

--- /dev/null