A data pipeline for analyzing Open edX data. This is a batch analysis engine that is capable of running complex data processing workflows.

Based on the official implements, replace hadoop tools from code, only use mongo+pandas+msyql implement analytics tasks for small scale user.

QQ Group:106781163

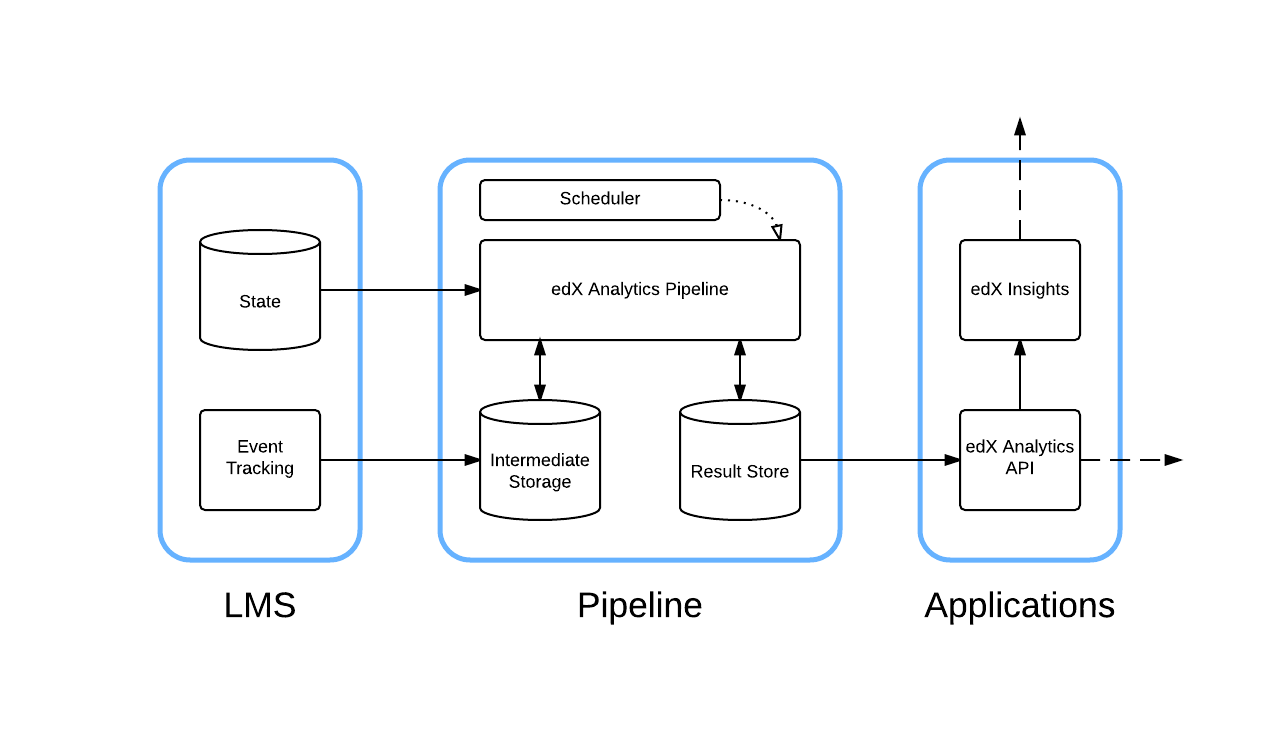

The data pipeline takes large amounts of raw data, analyzes it and produces higher value outputs that are used by various downstream tools.

The primary consumer of this data is Open edX Insights.

This tool uses spotify/luigi as the core of the workflow engine.

Data transformation and analysis is performed with the assistance of the following third party tools (among others):

The data pipeline is designed to be invoked on a periodic basis by an external scheduler. This can be cron, jenkins or any other system that can periodically run shell commands.

Here is a simplified, high level, view of the architecture:

We call this environment the "analyticstack". It contains many of the services needed to develop new features for Insights and the data pipeline.

A few of the services included are:

- LMS (edx-platform)

- Studio (edx-platform)

- Insights (edx-analytics-dashboard)

- Analytics API (edx-analytics-data-api)

We currently have a separate development from the core edx-platform devstack because the data pipeline depends on several services that dramatically increase the footprint of the virtual machine. Given that a small fraction of Open edX contributors are looking to develop features that leverage the data pipeline, we chose to build a variant of the devstack that includes them. In the future we hope to adopt OEP-5 which would allow developers to mix and match the services they are using for development at a much more granular level. In the meantime, you will need to do some juggling if you are also running a traditional Open edX devstack to ensure that both it and the analyticstack are not trying to run at the same time (they compete for the same ports).

If you are running a generic Open edX devstack, navigate to the directory that contains the Vagrantfile for it and run vagrant halt.

Please follow the analyticstack installation guide.

For small installations, you may want to use our single instance installation guide.

For larger installations, we do not have a similarly detailed guide, you can start with our installation guide.

Contributions are very welcome, but for legal reasons, you must submit a signed individual contributor's agreement before we can accept your contribution. See our CONTRIBUTING file for more information -- it also contains guidelines for how to maintain high code quality, which will make your contribution more likely to be accepted.

#!/bin/bash

LMS_HOSTNAME="https://lms.mysite.org"

INSIGHTS_HOSTNAME="http://127.0.0.1:8110" # Change this to the externally visible domain and scheme for your Insights install, ideally HTTPS

DB_USERNAME="read_only"

DB_HOST="localhost"

DB_PASSWORD="password"

DB_PORT="3306"

# Use Tsinghua mirror

PIP_MIRROR="https://pypi.tuna.tsinghua.edu.cn/simple"

# Run this script to set up the analytics pipeline

echo "Assumes that there's a tracking.log file in \$HOME"

sleep 2

echo "Create ssh key"

ssh-keygen -t rsa -f ~/.ssh/id_rsa -P ''

echo >> ~/.ssh/authorized_keys # Make sure there's a newline at the end

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

# check: ssh localhost "echo It worked!" -- make sure it works.

echo "Install needed packages"

sudo apt-get update

sudo apt-get install -y git python-pip python-dev libmysqlclient-dev

sudo pip install -i $PIP_MIRROR virtualenv

# Make a new virtualenv -- otherwise will have conflicts

echo "Make pipeline virtualenv"

virtualenv pipeline

. pipeline/bin/activate

echo "Check out pipeline"

git clone https://github.com/956237586/edx-analytics-pipeline.git

cd edx-analytics-pipeline

make bootstrap

# HACK: make ansible do this

cat <<EOF > /edx/etc/edx-analytics-pipeline/input.json

{"username": $DB_USERNAME, "host": $DB_HOST, "password": $DB_PASSWORD, "port": $DB_PORT}

EOF

echo "Run the pipeline"

# Ensure you're in the pipeline virtualenv

# --user ssh username

# sudo user need no password

# --sudo-user sudoUser

remote-task SetupTest \

--repo https://github.com/956237586/edx-analytics-pipeline.git \

--branch master \

--host localhost \

--user edustack \

--sudo-user edustack \

--remote-name analyticstack \

--local-scheduler \

--wait

# point to override-config

# --override-config $HOME/edx-analytics-pipeline/config/devstack.cfg

INTERVAL = yyyy-MM-DD-yyyy-MM-DD

N_DAYS = X

/var/lib/analytics-tasks/analyticstack/venv/bin/launch-task HylImportEnrollmentsIntoMysql --interval $INTERVAL --overwrite-n-days $N_DAYS --overwrite-mysql --local-scheduler

/var/lib/analytics-tasks/analyticstack/venv/bin/launch-task HylInsertToMysqlCourseActivityTask --end-date $INTERVAL --overwrite-n-days $N_DAYS --overwrite-mysql --local-scheduler

/var/lib/analytics-tasks/analyticstack/venv/bin/launch-task HylInsertToMysqlAllVideoTask --interval $INTERVAL --overwrite-n-days $N_DAYS --local-scheduler

/var/lib/analytics-tasks/analyticstack/venv/bin/launch-task HylInsertToMysqlCourseEnrollByCountryWorkflow --interval $INTERVAL --overwrite-n-days $N_DAYS --overwrite --local-scheduler

TASK_NAME and TASK_ARGS same as local script

# be sure in virtual env

remote-task TASK_NAME TASK_ARGS \

--repo https://github.com/956237586/edx-analytics-pipeline.git \

--branch master \

--host localhost \

--user edustack \

--sudo-user edustack \

--remote-name analyticstack \

--local-scheduler \

--wait

1.enable lms oauth2

/edx/app/edxapp/lms.env.json

"ENABLE_OAUTH2_PROVIDER": true,

"OAUTH_OIDC_ISSUER": "http://HOST/oauth2"

"OAUTH_ENFORCE_SECURE": false,

2.add oauth2 client and trust it MUST BE SUPER USER http://LMS_HOST/admin/oauth2/client/ http://LMS_HOST/admin/edx_oauth2_provider/trustedclient/

3.change insights oauth2 conf /edx/etc/insights.yml SOCIAL_AUTH_EDX_OIDC_ID_TOKEN_DECRYPTION_KEY: SECRET SOCIAL_AUTH_EDX_OIDC_ISSUER: http://HOST/oauth2 SOCIAL_AUTH_EDX_OIDC_KEY: OAUTH_2_KEY SOCIAL_AUTH_EDX_OIDC_LOGOUT_URL: http://HOST/logout SOCIAL_AUTH_EDX_OIDC_SECRET: SECRET SOCIAL_AUTH_EDX_OIDC_URL_ROOT: http://HOST/oauth2

4.create mongo collection for tracking log

edit /etc/mongod.conf disable auth

auth = false

sudo service mongod restart

mongo

use insights

db.createUser(

{

user: "insights",

pwd: "PASSWORD",

roles: [{ role: "readWrite", db: "insights" }]

}

)

REMEMBER CHANGE auth=true AFTER COLLECTION CREATED

5.create auth files /edx/etc/edx-analytics-pipeline/mongo.json {"username": "insights", "host": "localhost", "password": "PASSWORD", "port": 27017} /edx/etc/edx-analytics-pipeline/input.json {"username": "read_only", "host": "localhost", "password": "PASSWORD", "port": 3306} /edx/etc/edx-analytics-pipeline/output.json {"username": "pipeline001", "host": "localhost", "password": "PASSWORD", "port": 3306}

6.add missing table in dashboard

mysql -u root -p

use dashboard;

CREATE TABLE `soapbox_message` (

`id` int(11) NOT NULL AUTO_INCREMENT,

`message` longtext NOT NULL,

`is_global` tinyint(1) NOT NULL,

`is_active` tinyint(1) NOT NULL,

`url` varchar(255) DEFAULT NULL,

PRIMARY KEY (`id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8

7.create pipeline configuration file

override.cfg

you can find template in config/prod.cfg

IMPORTANT SETTINGS BELOW

[database-export]

database = reports

credentials = /edx/etc/edx-analytics-pipeline/output.json

[database-import]

database = edxapp

credentials = /edx/etc/edx-analytics-pipeline/input.json

destination = s3://fake/warehouse/

[mongo]

credentials = /edx/etc/edx-analytics-pipeline/mongo.json